André van Schaik

Neural Signal Compression using RAMAN tinyML Accelerator for BCI Applications

Apr 09, 2025

Abstract:High-quality, multi-channel neural recording is indispensable for neuroscience research and clinical applications. Large-scale brain recordings often produce vast amounts of data that must be wirelessly transmitted for subsequent offline analysis and decoding, especially in brain-computer interfaces (BCIs) utilizing high-density intracortical recordings with hundreds or thousands of electrodes. However, transmitting raw neural data presents significant challenges due to limited communication bandwidth and resultant excessive heating. To address this challenge, we propose a neural signal compression scheme utilizing Convolutional Autoencoders (CAEs), which achieves a compression ratio of up to 150 for compressing local field potentials (LFPs). The CAE encoder section is implemented on RAMAN, an energy-efficient tinyML accelerator designed for edge computing, and subsequently deployed on an Efinix Ti60 FPGA with 37.3k LUTs and 8.6k register utilization. RAMAN leverages sparsity in activation and weights through zero skipping, gating, and weight compression techniques. Additionally, we employ hardware-software co-optimization by pruning CAE encoder model parameters using a hardware-aware balanced stochastic pruning strategy, resolving workload imbalance issues and eliminating indexing overhead to reduce parameter storage requirements by up to 32.4%. Using the proposed compact depthwise separable convolutional autoencoder (DS-CAE) model, the compressed neural data from RAMAN is reconstructed offline with superior signal-to-noise and distortion ratios (SNDR) of 22.6 dB and 27.4 dB, along with R² scores of 0.81 and 0.94, respectively, evaluated on two monkey neural recordings.
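The figures quoted in this abstract (compression ratio, SNDR, R²) can be illustrated with a minimal sketch. The definitions below are common textbook forms and are an assumption here; the paper may normalise or average differently. The function names and the 16-bit word widths are hypothetical and not taken from the paper.

```python
import numpy as np

def sndr_db(original, reconstructed):
    """SNDR in dB: signal power divided by reconstruction-error power (assumed definition)."""
    original = np.asarray(original, dtype=float)
    reconstructed = np.asarray(reconstructed, dtype=float)
    error = original - reconstructed
    return 10.0 * np.log10(np.sum(original ** 2) / np.sum(error ** 2))

def r2_score(original, reconstructed):
    """Coefficient of determination between the original and reconstructed signals."""
    original = np.asarray(original, dtype=float)
    reconstructed = np.asarray(reconstructed, dtype=float)
    ss_res = np.sum((original - reconstructed) ** 2)
    ss_tot = np.sum((original - original.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

def compression_ratio(n_input_values, n_latent_values, input_bits=16, latent_bits=16):
    """Ratio of raw bits to compressed bits; the bit widths are illustrative assumptions."""
    return (n_input_values * input_bits) / (n_latent_values * latent_bits)

# Toy signal: ten full periods of a sine wave, "reconstructed" with a 10% amplitude error.
t = np.arange(1000)
signal = np.sin(2.0 * np.pi * t / 100.0)
estimate = 0.9 * signal

print(sndr_db(signal, estimate))     # about 20 dB for a 10% amplitude error
print(r2_score(signal, estimate))    # about 0.99 for this zero-mean signal
print(compression_ratio(1500, 10))   # 150.0 with equal bit widths
```

A real evaluation would apply these functions per channel to recorded LFP segments rather than to a synthetic sine wave.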

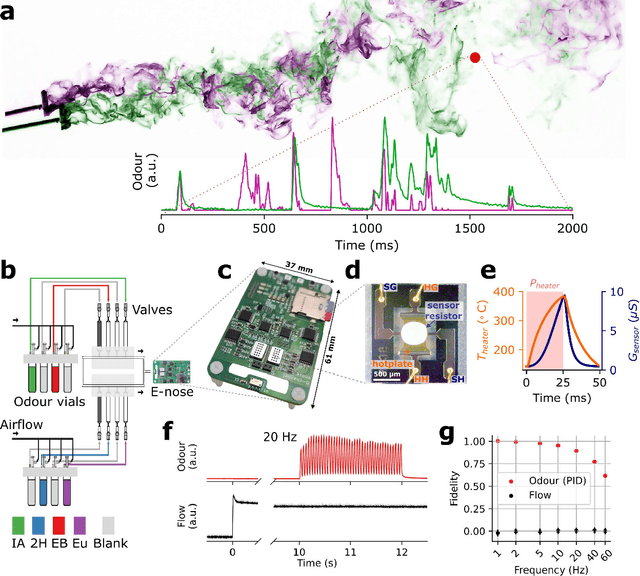

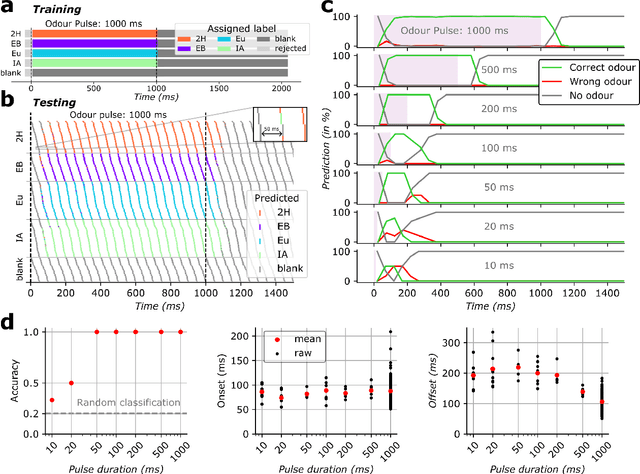

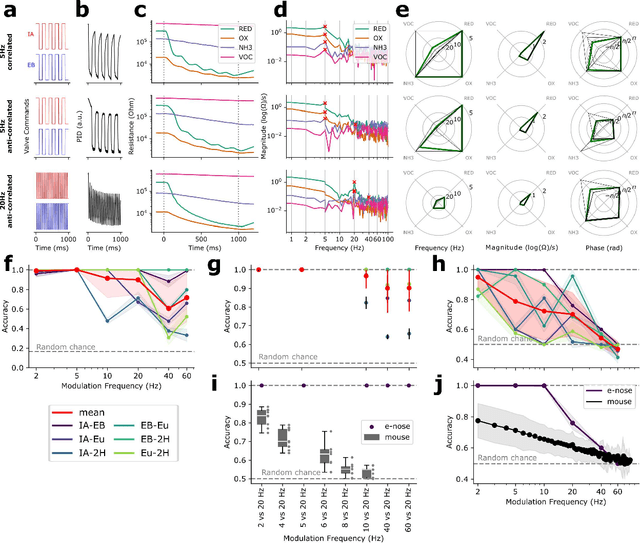

Neuromorphic circuit for temporal odor encoding in turbulent environments

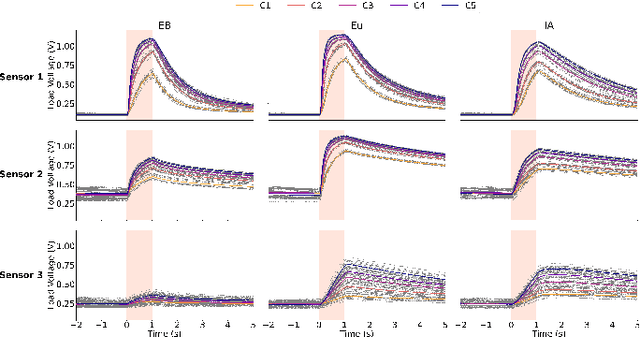

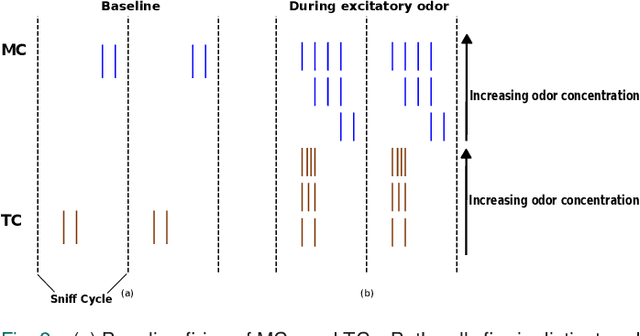

Dec 28, 2024

Abstract:Natural odor environments present turbulent and dynamic conditions, causing chemical signals to fluctuate in space, time, and intensity. While many species have evolved highly adaptive behavioral responses to such variability, the emerging field of neuromorphic olfaction continues to grapple with the challenge of efficiently sampling and identifying odors in real time. In this work, we investigate Metal-Oxide (MOx) gas sensor recordings of constant airflow-embedded artificial odor plumes. We discover a data feature that is representative of the presented odor stimulus at a certain concentration, irrespective of temporal variations caused by the plume dynamics. Further, we design a neuromorphic electronic nose front-end circuit for extracting and encoding this feature into analog spikes for gas detection and concentration estimation. The design is inspired by the spiking output of parallel neural pathways in the mammalian olfactory bulb. We test the circuit for gas recognition and concentration estimation in artificial environments, where either single gas pulses or pre-recorded odor plumes were deployed in a constant flow of air. For both environments, our results indicate that the gas concentration is encoded in, and inversely proportional to, the time difference of analog spikes emerging out of two parallel pathways, similar to the spiking output of a mammalian olfactory bulb. The resulting neuromorphic nose could enable data-efficient, real-time robotic plume navigation systems, advancing the capabilities of odor source localization in applications such as environmental monitoring and search-and-rescue.

The Neuromorphic Analog Electronic Nose

Oct 24, 2024

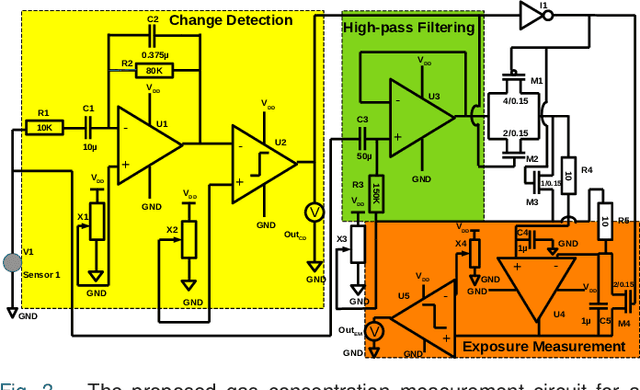

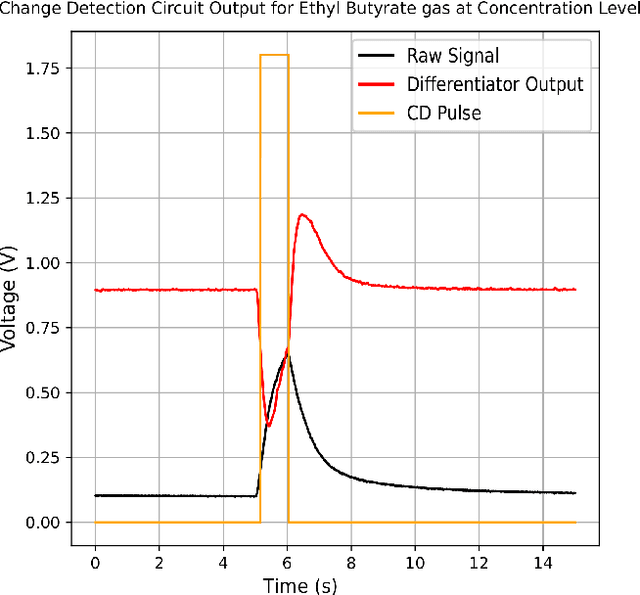

Abstract:Rapid detection of gas concentration is important for preventing health hazards in domains such as gas leakage monitoring and pollution control. Among the different types of gas sensors, metal oxide (MOx) sensors are extensively used in such applications because of their portability, low cost, and high sensitivity for specific gases. However, how to effectively sample the MOx data for the real-time detection of gas and its concentration level remains an open question. Here we introduce a simple analog front-end for one MOx sensor that encodes the gas concentration in the time difference between pulses of two separate pathways. This front-end design is inspired by the spiking output of a mammalian olfactory bulb. We show that for a gas pulse injected in a constant airflow, the time difference between pulses decreases with increasing gas concentration, similar to the spike time difference between the two principal output neurons in the olfactory bulb. The circuit design is further extended to a MOx sensor array, and this sensor array front-end was tested in the same environment for gas identification and concentration estimation. Encoding of gas stimulus features in analog spikes at the sensor level itself may result in data- and power-efficient real-time gas sensing systems in the future that can ultimately be used in uncontrolled and turbulent environments for longer periods without data explosion.

High-speed odour sensing using miniaturised electronic nose

Jun 04, 2024

Abstract:Animals have evolved to rapidly detect and recognise brief and intermittent encounters with odour packages, exhibiting recognition capabilities within milliseconds. Artificial olfaction has faced challenges in achieving comparable results: existing solutions are either slow or bulky, expensive, and power-intensive, limiting applicability in real-world scenarios for mobile robotics. Here we introduce a miniaturised high-speed electronic nose, characterised by high-bandwidth sensor readouts, tightly controlled sensing parameters and powerful algorithms. The system is evaluated on a high-fidelity odour delivery benchmark. We showcase successful classification of tens-of-millisecond odour pulses, and demonstrate temporal pattern encoding of stimuli switching at up to 60 Hz. These timescales are unprecedented in miniaturised low-power settings, and demonstrably exceed the performance observed in mice. For the first time, it is possible to match the temporal resolution of animal olfaction in robotic systems. This will allow for addressing challenges in environmental and industrial monitoring, security, neuroscience, and beyond.

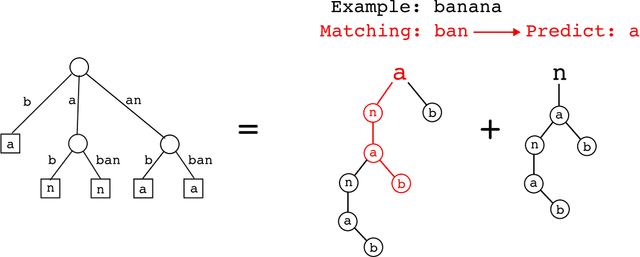

An Event based Prediction Suffix Tree

Oct 20, 2023

Abstract:This article introduces the Event based Prediction Suffix Tree (EPST), a biologically inspired, event-based prediction algorithm. The EPST learns a model online based on the statistics of an event-based input and can make predictions over multiple overlapping patterns. The EPST uses a representation specific to event-based data, defined as a portion of the power set of event subsequences within a short context window. It is explainable, and possesses many promising properties such as fault tolerance, resistance to event noise, as well as the capability for one-shot learning. The computational features of the EPST are examined in a synthetic data prediction task with additive event noise, event jitter, and dropout. The resulting algorithm outputs predicted projections for the near-term future of the signal, which may be applied to tasks such as event-based anomaly detection or pattern recognition.
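To make the phrase "a portion of the power set of event subsequences within a short context window" concrete, the sketch below enumerates the order-preserving subsequences of a small window of event labels up to a maximum length. This is only an illustration of the general idea, not the EPST itself: the function name, the `max_len` cut-off, and the choice of which portion of the power set to keep are all assumptions, since the abstract does not specify them.

```python
from itertools import combinations

def window_subsequences(window, max_len=2):
    """Return all order-preserving subsequences of `window` with 1..max_len events.

    `max_len` is a hypothetical way of keeping only a portion of the power set.
    """
    subsequences = set()
    for length in range(1, max_len + 1):
        # combinations() yields index tuples in increasing order, so event order is preserved.
        for indices in combinations(range(len(window)), length):
            subsequences.add(tuple(window[i] for i in indices))
    return subsequences

# A context window holding three events, oldest first.
context = ["a", "b", "c"]
print(sorted(window_subsequences(context)))
```

Because every subsequence is kept as a separate pattern, a representation of this kind can still match when a single event in the window is missing or spurious, which is one plausible reading of the noise tolerance the abstract describes.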

Spike-time encoding of gas concentrations using neuromorphic analog sensory front-end

Oct 11, 2023

Abstract:Gas concentration detection is important for applications such as gas leakage monitoring. Metal Oxide (MOx) sensors show high sensitivities for specific gases, which makes them particularly useful for such monitoring applications. However, how to efficiently sample and further process the sensor responses remains an open question. Here we propose a simple analog circuit design inspired by the spiking output of the mammalian olfactory bulb and by event-based vision sensors. Our circuit encodes the gas concentration in the time difference between the pulses of two separate pathways. We show that in the setting of controlled airflow-embedded gas injections, the time difference between the two generated pulses varies inversely with gas concentration, which is in agreement with the spike timing difference between tufted cells and mitral cells of the mammalian olfactory bulb. Encoding concentration information in analog spike timings may pave the way for rapid and efficient gas detection, and ultimately lead to data- and power-efficient monitoring devices to be deployed in uncontrolled and turbulent environments.
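The idea of encoding concentration in the time difference between two pulses can be illustrated with a toy model. The sketch below is not the circuit from the paper. It assumes, purely for illustration, that the sensor response rises as a first-order exponential whose amplitude grows with concentration, and that each pathway emits a pulse when the response crosses its own fixed threshold. The thresholds, time constant, and function names are all hypothetical.

```python
import math

def crossing_time(amplitude, threshold, tau=1.0):
    """Time at which amplitude * (1 - exp(-t / tau)) first reaches `threshold`."""
    if amplitude <= threshold:
        return math.inf  # the response never reaches this threshold
    return -tau * math.log(1.0 - threshold / amplitude)

def pulse_time_difference(amplitude, low_threshold=0.2, high_threshold=0.5, tau=1.0):
    """Delay between the low-threshold pulse and the high-threshold pulse (toy model)."""
    if amplitude <= high_threshold:
        return math.inf  # the second pathway never fires
    return (crossing_time(amplitude, high_threshold, tau)
            - crossing_time(amplitude, low_threshold, tau))

# Larger amplitude stands in for higher gas concentration in this toy model.
amplitudes = [1.0, 2.0, 4.0]
differences = [pulse_time_difference(a) for a in amplitudes]
for a, dt in zip(amplitudes, differences):
    print(f"amplitude {a:.1f}: time difference {dt:.3f}")
```

In this model the time difference equals `tau * ln((A - low) / (A - high))`, which shrinks as the amplitude `A` grows, so a stronger stimulus produces a shorter gap between the two pulses. That matches the qualitative trend the abstract reports, though the actual circuit may realise it differently.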

Limitations in odour recognition and generalisation in a neuromorphic olfactory circuit

Sep 20, 2023

Abstract:Neuromorphic computing is one of the few current approaches that have the potential to significantly reduce power consumption in Machine Learning and Artificial Intelligence. Imam & Cleland presented an odour-learning algorithm that runs on a neuromorphic architecture and is inspired by circuits described in the mammalian olfactory bulb. They assess the algorithm's performance in "rapid online learning and identification" of gaseous odorants and odorless gases (short "gases") using a set of gas sensor recordings of different odour presentations and corrupting them by impulse noise. We replicated parts of the study and discovered limitations that affect some of the conclusions drawn. First, the dataset used suffers from sensor drift and a non-randomised measurement protocol, rendering it of limited use for odour identification benchmarks. Second, we found that the model is restricted in its ability to generalise over repeated presentations of the same gas. We demonstrate that the task the study refers to can be solved with a simple hash table approach, matching or exceeding the reported results in accuracy and runtime. Therefore, a validation of the model that goes beyond restoring a learned data sample remains to be shown, in particular its suitability to odour identification tasks.

RAMAN: A Re-configurable and Sparse tinyML Accelerator for Inference on Edge

Jun 10, 2023

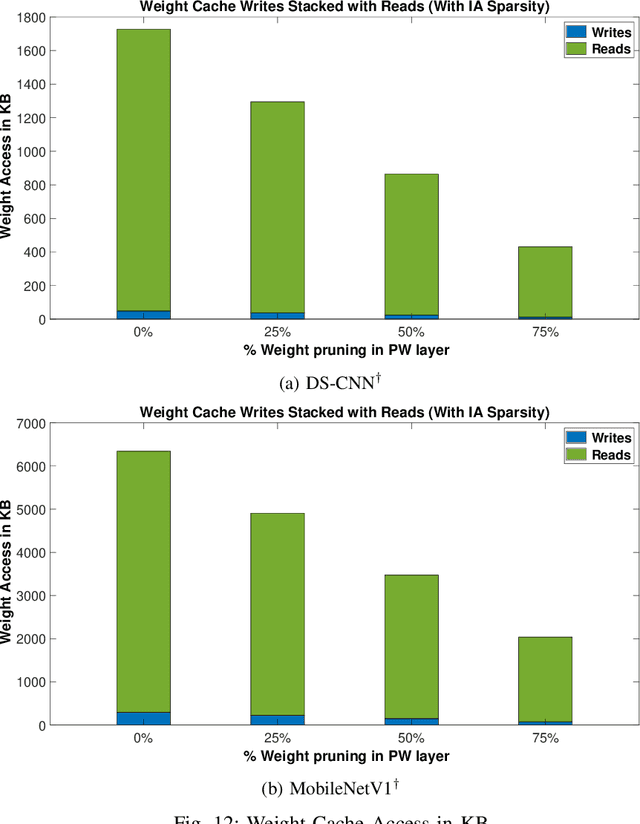

Abstract:Deep Neural Network (DNN) based inference at the edge is challenging as these compute and data-intensive algorithms need to be implemented at low cost and low power while meeting the latency constraints of the target applications. Sparsity, in both activations and weights inherent to DNNs, is a key knob to leverage. In this paper, we present RAMAN, a Re-configurable and spArse tinyML Accelerator for infereNce on edge, architected to exploit the sparsity to reduce area (storage), power as well as latency. RAMAN can be configured to support a wide range of DNN topologies, consisting of different convolution layer types and a range of layer parameters (feature-map size and the number of channels). RAMAN can also be configured to support accuracy vs power/latency tradeoffs using techniques deployed at compile-time and run-time. We present the salient features of the architecture, provide implementation results and compare them with the state of the art. RAMAN employs a novel dataflow inspired by Gustavson's algorithm that has optimal input activation (IA) and output activation (OA) reuse to minimize memory access and the overall data movement cost. The dataflow allows RAMAN to locally reduce the partial sum (Psum) within a processing element array to eliminate the Psum writeback traffic. Additionally, we suggest a method to reduce peak activation memory by overlapping IA and OA on the same memory space, which can reduce storage requirements by up to 50%. RAMAN was implemented on a low-power and resource-constrained Efinix Ti60 FPGA with 37.2K LUTs and 8.6K register utilization. RAMAN processes all layers of the MobileNetV1 model at 98.47 GOp/s/W and the DS-CNN model at 79.68 GOp/s/W by leveraging both weight and activation sparsity.
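For readers unfamiliar with Gustavson's algorithm, which the abstract names as the inspiration for the dataflow, the sketch below shows its textbook row-wise form for sparse matrix multiplication. It is not RAMAN's dataflow: how activations and weights map onto the two operands is not stated in the abstract, and the dict-of-rows storage and function names are assumptions chosen for readability.

```python
def to_sparse_rows(dense):
    """Convert a dense matrix (list of lists) to one {column: value} dict per row."""
    return [{j: v for j, v in enumerate(row) if v != 0} for row in dense]

def gustavson_spgemm(a_rows, b_rows):
    """Row-wise sparse product C = A @ B.

    Each output row is built in a local accumulator, so partial sums for that
    row never leave the accumulator until the row is complete. Zero entries
    are never stored, so they are skipped without any explicit test.
    """
    c_rows = []
    for a_row in a_rows:
        accumulator = {}
        for k, a_value in a_row.items():          # nonzeros of A in this row
            for j, b_value in b_rows[k].items():  # nonzeros of B in row k
                accumulator[j] = accumulator.get(j, 0) + a_value * b_value
        c_rows.append(accumulator)
    return c_rows

a = to_sparse_rows([[1, 0], [0, 2]])
b = to_sparse_rows([[0, 3], [4, 0]])
print(gustavson_spgemm(a, b))  # [{1: 3}, {0: 8}]
```

The local accumulator is the feature that loosely corresponds to reducing partial sums inside a processing element array rather than writing them back to memory.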

Efficient Implementation of a Multi-Layer Gradient-Free Online-Trainable Spiking Neural Network on FPGA

May 31, 2023

Abstract:This paper presents an efficient hardware implementation of the recently proposed Optimized Deep Event-driven Spiking Neural Network Architecture (ODESA). ODESA is the first network to have end-to-end multi-layer online local supervised training without using gradients and has the combined adaptation of weights and thresholds in an efficient hierarchical structure. This research shows that the network architecture and the online training of weights and thresholds can be implemented efficiently on a large scale in hardware. The implementation consists of a multi-layer Spiking Neural Network (SNN) and individual training modules for each layer that enable online self-learning without using back-propagation. By using simple local adaptive selection thresholds, a Winner-Takes-All (WTA) constraint on each layer, and a modified weight update rule that is more amenable to hardware, the trainer module allocates neuronal resources optimally at each layer without having to pass high-precision error measurements across layers. All elements in the system, including the training module, interact using event-based binary spikes. The hardware-optimized implementation is shown to preserve the performance of the original algorithm across multiple spatial-temporal classification problems with significantly reduced hardware requirements.

NeuroBench: Advancing Neuromorphic Computing through Collaborative, Fair and Representative Benchmarking

Apr 15, 2023

Abstract:The field of neuromorphic computing holds great promise in terms of advancing computing efficiency and capabilities by following brain-inspired principles. However, the rich diversity of techniques employed in neuromorphic research has resulted in a lack of clear standards for benchmarking, hindering effective evaluation of the advantages and strengths of neuromorphic methods compared to traditional deep-learning-based methods. This paper presents a collaborative effort, bringing together members from academia and the industry, to define benchmarks for neuromorphic computing: NeuroBench. The goals of NeuroBench are to be a collaborative, fair, and representative benchmark suite developed by the community, for the community. In this paper, we discuss the challenges associated with benchmarking neuromorphic solutions, and outline the key features of NeuroBench. We believe that NeuroBench will be a significant step towards defining standards that can unify the goals of neuromorphic computing and drive its technological progress. Please visit neurobench.ai for the latest updates on the benchmark tasks and metrics.
