Nicholas Owen Ralph

Optical Linear Systems Framework for Event Sensing and Computational Neuromorphic Imaging

Jan 20, 2026

Abstract: Event vision sensors (neuromorphic cameras) output sparse, asynchronous ON/OFF events triggered by log-intensity threshold crossings, enabling microsecond-scale sensing with high dynamic range and low data bandwidth. Because event generation is nonlinear, this representation does not readily integrate with the linear forward models that underpin most computational imaging and optical system design. We present a physics-grounded processing pipeline that maps event streams to estimates of per-pixel log-intensity and intensity derivatives, and embeds these measurements in a dynamic linear systems model with a time-varying point spread function. This enables inverse filtering directly from event data, using frequency-domain Wiener deconvolution with a known (or parameterised) dynamic transfer function. We validate the approach in simulation for single and overlapping point sources under modulated defocus, and on real event data from a tunable-focus telescope imaging a star field, demonstrating source localisation and separability. The proposed framework provides a practical bridge between event sensing and model-based computational imaging for dynamic optical systems.
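For readers unfamiliar with the inverse-filtering step named in the abstract, the following is a minimal sketch of frequency-domain Wiener deconvolution on a single 2-D frame. It is not the authors' pipeline: it assumes a static Gaussian point spread function rather than a time-varying one, and the function names (`wiener_deconvolve`, `gaussian_psf`) and the constant noise-to-signal ratio `nsr` are hypothetical choices made for illustration.

```python
import numpy as np


def gaussian_psf(shape, sigma):
    """Centred, unit-sum Gaussian PSF (an assumed stand-in for a defocus blur)."""
    rows, cols = shape
    y = np.arange(rows) - rows // 2
    x = np.arange(cols) - cols // 2
    yy, xx = np.meshgrid(y, x, indexing="ij")
    psf = np.exp(-(xx ** 2 + yy ** 2) / (2.0 * sigma ** 2))
    return psf / psf.sum()


def wiener_deconvolve(observed, psf, nsr=1e-2):
    """Wiener deconvolution with a constant noise-to-signal power ratio.

    observed : blurred 2-D image
    psf      : centred PSF with the same shape as `observed`
    nsr      : assumed noise-to-signal ratio (illustrative tuning value)
    """
    # Move the PSF centre to index (0, 0) so the filter adds no spatial shift.
    H = np.fft.fft2(np.fft.ifftshift(psf))
    G = np.fft.fft2(observed)
    # Wiener filter: conj(H) / (|H|^2 + NSR)
    W = np.conj(H) / (np.abs(H) ** 2 + nsr)
    return np.real(np.fft.ifft2(W * G))


# Toy scene: one point source, blurred by circular convolution with the PSF.
scene = np.zeros((64, 64))
scene[20, 30] = 1.0
psf = gaussian_psf(scene.shape, sigma=2.0)
H = np.fft.fft2(np.fft.ifftshift(psf))
blurred = np.real(np.fft.ifft2(np.fft.fft2(scene) * H))

estimate = wiener_deconvolve(blurred, psf, nsr=1e-3)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(estimate), estimate.shape))
```

In this toy case the restored peak falls back on the source pixel and is sharper than the blurred one. Extending the sketch to a dynamic transfer function would mean using a different `H` for each time slice, which is left out here.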

Seeing like a Cephalopod: Colour Vision with a Monochrome Event Camera

Apr 15, 2025

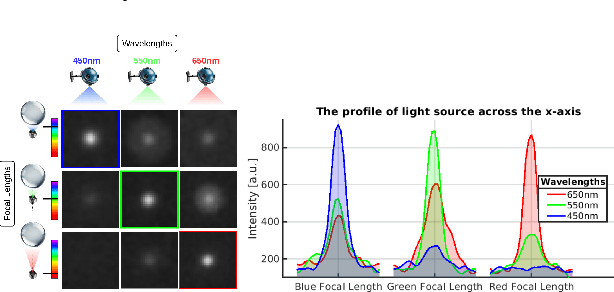

Abstract: Cephalopods exhibit unique colour discrimination capabilities despite having one type of photoreceptor, relying instead on chromatic aberration induced by their ocular optics and pupil shapes to perceive spectral information. We took inspiration from this biological mechanism to design a spectral imaging system that combines a ball lens with an event-based camera. Our approach relies on a motorised system that shifts the focal position, mirroring the adaptive lens motion in cephalopods. This has enabled us to achieve wavelength-dependent focusing across the visible and near-infrared spectrum, making the event camera a spectral sensor. We characterise chromatic aberration effects using both event-based and conventional frame-based sensors, validate the effectiveness of bio-inspired spectral discrimination both in simulation and in a real setup, and assess the spectral discrimination performance. Our proposed approach provides a robust spectral sensing capability without conventional colour filters or computational demosaicing, and opens pathways toward new spectral sensing systems inspired by nature's evolutionary solutions. Code and analysis are available at: https://samiarja.github.io/neuromorphic_octopus_eye/

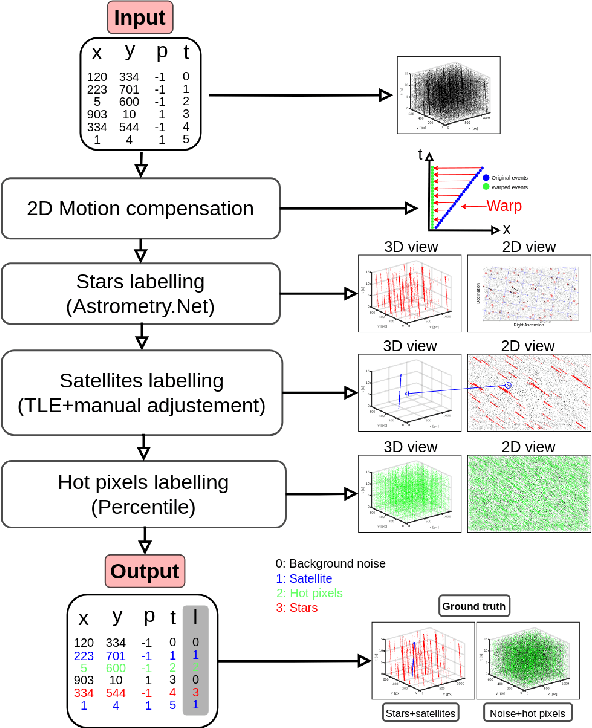

Noise Filtering Benchmark for Neuromorphic Satellites Observations

Nov 18, 2024

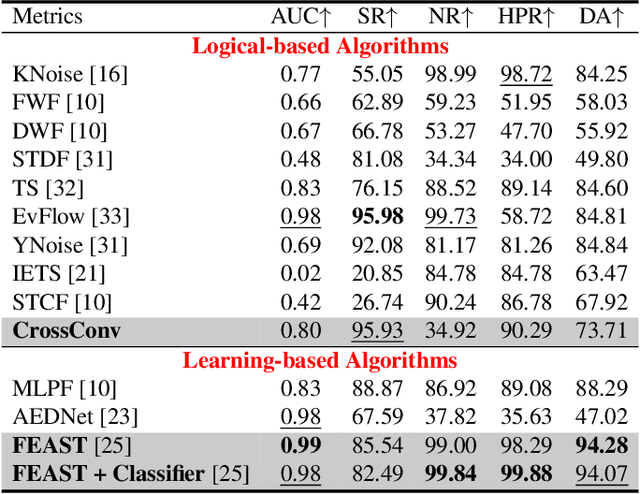

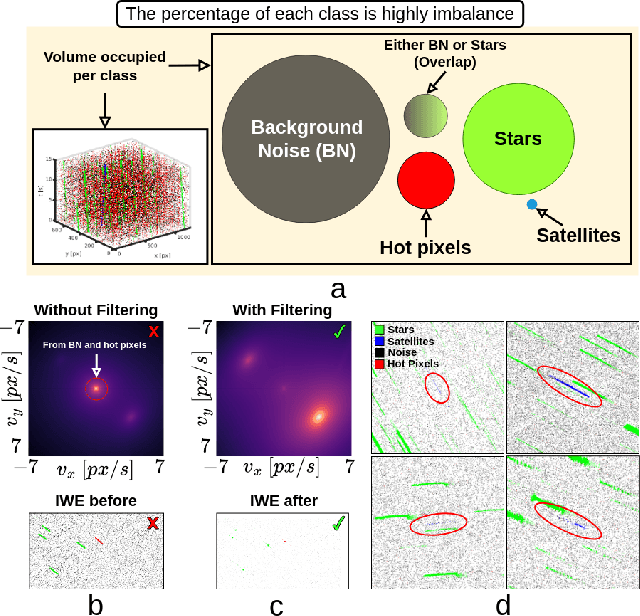

Abstract: Event cameras capture sparse, asynchronous brightness changes, offering high temporal resolution, high dynamic range, low power consumption, and sparse data output. These advantages make them ideal for Space Situational Awareness, particularly in detecting resident space objects moving within a telescope's field of view. However, the output from event cameras often includes substantial background activity noise, which is known to be more prevalent in low-light conditions. This noise can overwhelm the sparse events generated by satellite signals, making detection and tracking more challenging. Existing noise-filtering algorithms struggle in these scenarios because they are typically designed for denser scenes, where losing some signal is acceptable. This limitation hinders the application of event cameras in complex, real-world environments where signals are extremely sparse. In this paper, we propose new event-driven noise-filtering algorithms specifically designed for very sparse scenes. We categorise the algorithms into logical-based and learning-based approaches and benchmark their performance against 11 state-of-the-art noise-filtering algorithms, evaluating how effectively they remove noise and hot pixels while preserving the signal. Their performance was quantified by measuring signal retention and noise removal accuracy, with results reported using ROC curves across the parameter space. Additionally, we introduce a new high-resolution satellite dataset with ground truth from a real-world platform under various noise conditions, which we have made publicly available. Code, dataset, and trained weights are available at https://github.com/samiarja/dvs_sparse_filter.
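To make the filtering problem concrete, here is a minimal sketch of a classical nearest-neighbour spatiotemporal correlation filter for event streams. It is not one of the algorithms proposed in the paper, and it is not claimed to be among the 11 benchmarked ones; the function name `background_activity_filter`, the `(t_us, x, y, polarity)` event layout, and the 5 ms window are assumptions made for illustration.

```python
import numpy as np


def background_activity_filter(events, width, height, dt_us=5000):
    """Keep an event only if one of its 8 neighbouring pixels fired recently.

    events : sequence of (t_us, x, y, polarity) tuples, sorted by time
    dt_us  : assumed correlation window in microseconds (illustrative value)
    Returns a boolean mask, True for events that are kept.
    """
    # Timestamp of the last event per pixel, padded by one pixel on each
    # side so border pixels need no special handling.
    last_ts = np.full((height + 2, width + 2), -np.inf)
    keep = np.zeros(len(events), dtype=bool)
    for i, (t, x, y, _polarity) in enumerate(events):
        px, py = int(x) + 1, int(y) + 1
        window = last_ts[py - 1:py + 2, px - 1:px + 2].copy()
        window[1, 1] = -np.inf  # ignore the pixel's own history
        keep[i] = (t - window.max()) <= dt_us
        last_ts[py, px] = t
    return keep


# Toy stream: two adjacent events close in time, one isolated event,
# and one late event whose neighbours fired long before.
events = [
    (0, 10, 10, 1),
    (1000, 11, 10, 1),
    (2000, 40, 5, 1),
    (900000, 12, 10, 1),
]
mask = background_activity_filter(events, width=64, height=48)
```

Note that the first event of a genuine track is rejected because it has no supporting neighbour yet. Losing that signal is tolerable in dense scenes but costly in very sparse ones, which is the limitation the abstract describes.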

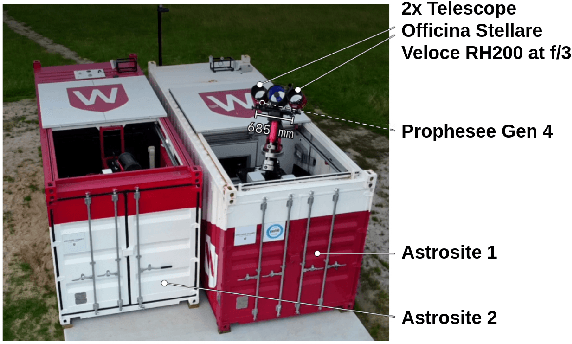

Astrometric Calibration and Source Characterisation of the Latest Generation Neuromorphic Event-based Cameras for Space Imaging

Nov 17, 2022

Abstract: As an emerging approach to space situational awareness and space imaging, the practical use of an event-based camera in space imaging for precise source analysis is still in its infancy. The nature of event-based space imaging and data collection needs to be further explored to develop more effective event-based space image systems and advance the capabilities of event-based tracking systems with improved target measurement models. Moreover, for event measurements to be meaningful, a framework must be investigated for event-based camera calibration to project events from pixel array coordinates in the image plane to coordinates in a target resident space object's reference frame. In this paper, the traditional techniques of conventional astronomy are reconsidered to properly utilise the event-based camera for space imaging and space situational awareness. This paper presents the techniques and systems used for calibrating an event-based camera for reliable and accurate measurement acquisition. These techniques are vital in building event-based space imaging systems capable of real-world space situational awareness tasks. By calibrating sources detected using the event-based camera, the spatio-temporal characteristics of detected sources or 'event sources' can be related to the photometric characteristics of the underlying astrophysical objects. Finally, these characteristics are analysed to establish a foundation for principled processing and observing techniques which appropriately exploit the capabilities of the event-based camera.
