George Barbastathis

Department of Mechanical Engineering, Massachusetts Institute of Technology, Cambridge, Massachusetts, 02139, USA; Singapore-MIT Alliance for Research and Technology

Introducing the Biomechanics-Function Relationship in Glaucoma: Improved Visual Field Loss Predictions from intraocular pressure-induced Neural Tissue Strains

Jun 21, 2024

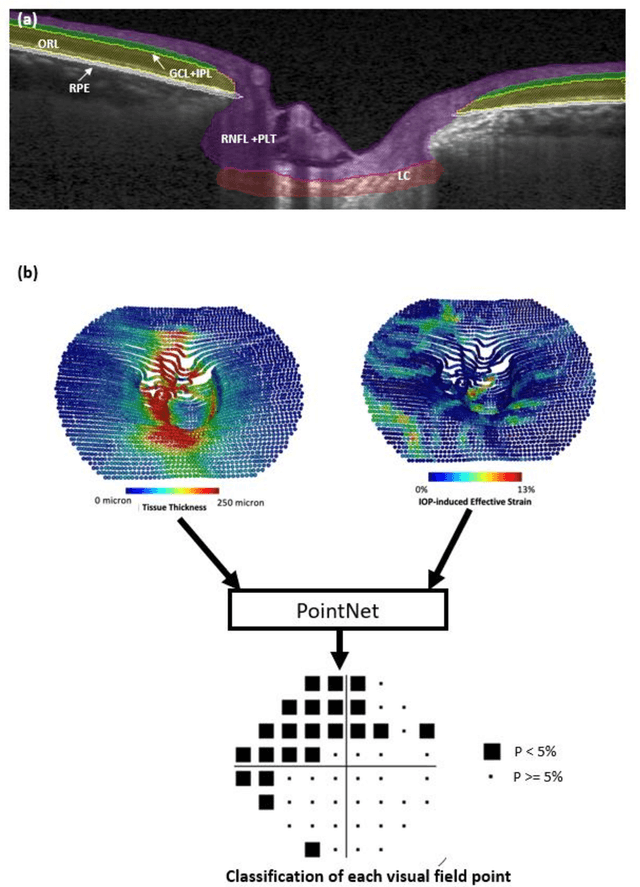

Abstract:Objective: (1) To assess whether neural tissue structure and biomechanics could predict functional loss in glaucoma; (2) To evaluate the importance of biomechanics in making such predictions. Design, Setting and Participants: We recruited 238 glaucoma subjects. For one eye of each subject, we imaged the optic nerve head (ONH) using spectral-domain OCT under the following conditions: (1) primary gaze and (2) primary gaze with acute IOP elevation. Main Outcomes: We utilized automatic segmentation of ONH tissues and digital volume correlation (DVC) analysis to compute intraocular pressure (IOP)-induced neural tissue strains. A robust geometric deep learning approach, known as PointNet, was employed to predict the full Humphrey 24-2 pattern standard deviation (PSD) maps from ONH structural and biomechanical information. For each point in each PSD map, we predicted whether it exhibited no defect or a PSD value of less than 5%. Predictive performance was evaluated using 5-fold cross-validation and the F1-score. We compared the model's performance with and without the inclusion of IOP-induced strains to assess the impact of biomechanics on prediction accuracy. Results: Integrating biomechanical (IOP-induced neural tissue strains) and structural (tissue morphology and neural tissue thickness) information yielded a significantly better predictive model (F1-score: 0.76 ± 0.02) across validation subjects, as opposed to relying only on structural information, which resulted in a significantly lower F1-score of 0.71 ± 0.02 (p < 0.05). Conclusion: Our study has shown that the integration of biomechanical data can significantly improve the accuracy of visual field loss predictions. This highlights the importance of the biomechanics-function relationship in glaucoma, and suggests that biomechanics may serve as a crucial indicator for the development and progression of glaucoma.
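
The evaluation described in this abstract (an F1-score per cross-validation fold, summarized as a mean and a spread) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the names `f1_score` and `summarize_folds` are hypothetical, labels are assumed to be binary (1 = defect, 0 = no defect), and the spread is assumed to be the sample standard deviation across folds, which the abstract does not specify.

```python
from statistics import mean, stdev


def f1_score(y_true, y_pred):
    """Binary F1 = 2*TP / (2*TP + FP + FN), with 1 as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    # Convention assumed here: return 0.0 when there are no positives at all.
    return 2 * tp / denom if denom else 0.0


def summarize_folds(fold_results):
    """fold_results: list of (y_true, y_pred) pairs, one per validation fold.

    Returns (mean F1, sample standard deviation of F1) across folds.
    """
    scores = [f1_score(y_true, y_pred) for y_true, y_pred in fold_results]
    return mean(scores), stdev(scores)


if __name__ == "__main__":
    # Toy labels only; these are not data from the study.
    folds = [
        ([1, 1, 0, 0], [1, 0, 1, 0]),
        ([1, 0, 1, 0], [1, 0, 1, 0]),
    ]
    avg, spread = summarize_folds(folds)
    print(f"F1 = {avg:.2f} +/- {spread:.2f}")
```

Running the same summary once on predictions from a structure-only model and once on predictions from a structure-plus-strain model would give the two figures that the abstract compares.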

On the use of deep learning for phase recovery

Aug 02, 2023

Abstract:Phase recovery (PR) refers to calculating the phase of the light field from its intensity measurements. In applications ranging from quantitative phase imaging and coherent diffraction imaging to adaptive optics, PR is essential for reconstructing the refractive index distribution or topography of an object and correcting the aberration of an imaging system. In recent years, deep learning (DL), often implemented through deep neural networks, has provided unprecedented support for computational imaging, leading to more efficient solutions for various PR problems. In this review, we first briefly introduce conventional methods for PR. Then, we review how DL provides support for PR at the following three stages: pre-processing, in-processing, and post-processing. We also review how DL is used in phase image processing. Finally, we summarize the work in DL for PR and offer an outlook on how to better use DL to improve the reliability and efficiency of PR. Furthermore, we present a live-updating resource (https://github.com/kqwang/phase-recovery) for readers to learn more about PR.

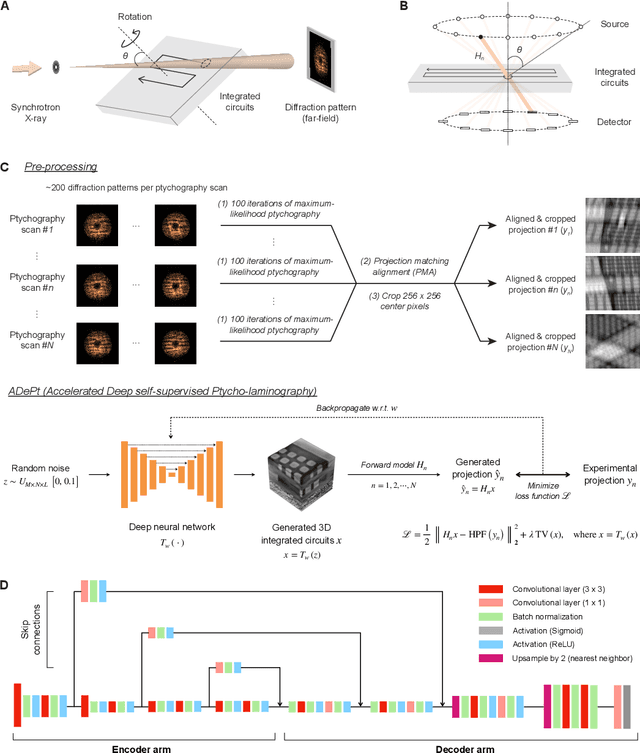

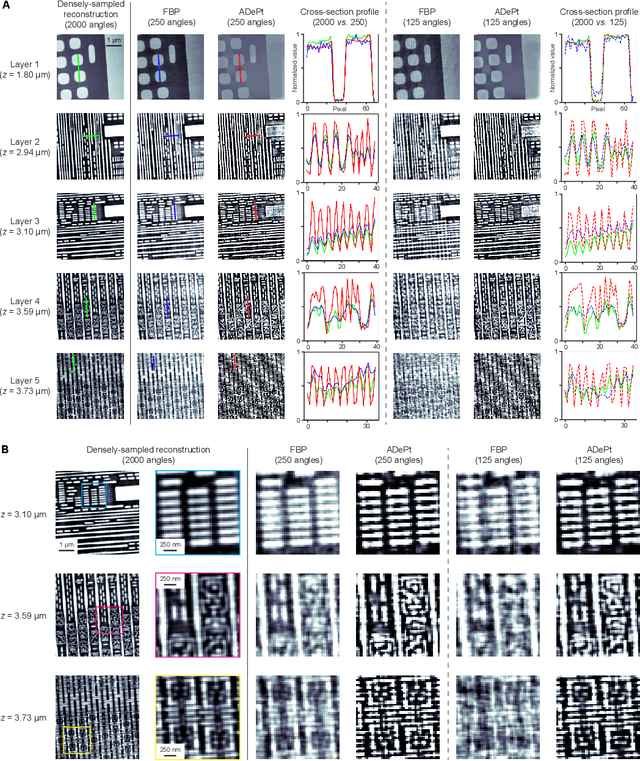

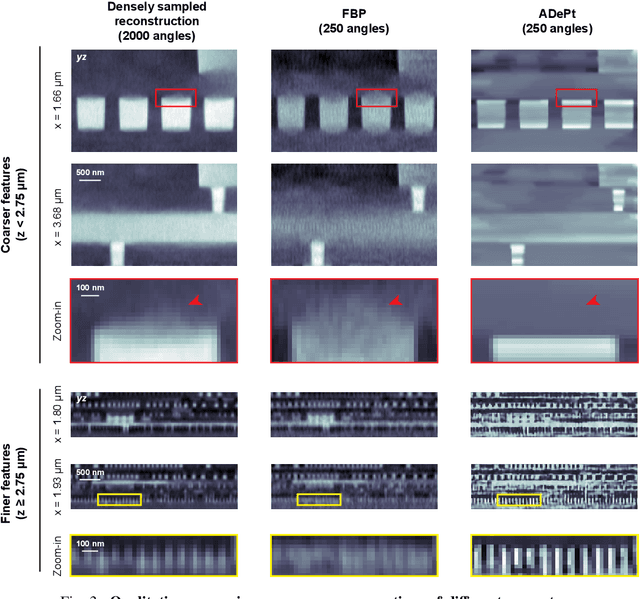

Accelerated deep self-supervised ptycho-laminography for three-dimensional nanoscale imaging of integrated circuits

Apr 10, 2023

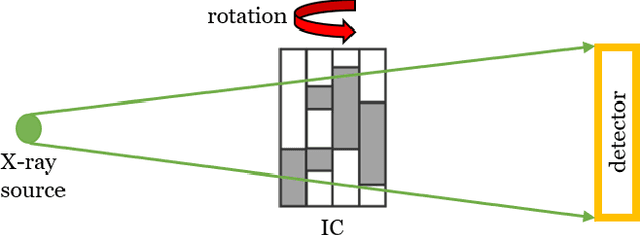

Abstract:Three-dimensional inspection of nanostructures such as integrated circuits is important for security and reliability assurance. Two scanning operations are required: ptychographic to recover the complex transmissivity of the specimen; and rotation of the specimen to acquire multiple projections covering the 3D spatial frequency domain. Two types of rotational scanning are possible: tomographic and laminographic. For flat, extended samples, for which the full 180 degree coverage is not possible, the latter is preferable because it provides better coverage of the 3D spatial frequency domain compared to limited-angle tomography. It is also preferable because the amount of attenuation through the sample is approximately the same for all projections. However, both techniques are time-consuming because of extensive acquisition and computation time. Here, we demonstrate the acceleration of ptycho-laminographic reconstruction of integrated circuits with 16-times fewer angular samples and 4.67-times faster computation by using a physics-regularized deep self-supervised learning architecture. We check the fidelity of our reconstruction against a densely sampled reconstruction that uses full scanning and no learning. As already reported elsewhere [Zhou and Horstmeyer, Opt. Express, 28(9), pp. 12872-12896], we observe improvement of reconstruction quality even over the densely sampled reconstruction, due to the ability of the self-supervised learning kernel to fill the missing cone.

The 3D Structural Phenotype of the Glaucomatous Optic Nerve Head and its Relationship with The Severity of Visual Field Damage

Jan 07, 2023

Abstract:$\bf{Purpose}$: To describe the 3D structural changes in both connective and neural tissues of the optic nerve head (ONH) that occur concurrently at different stages of glaucoma using traditional and AI-driven approaches. $\bf{Methods}$: We included 213 normal, 204 mild glaucoma (mean deviation [MD] $\ge$ -6.00 dB), 118 moderate glaucoma (MD of -6.01 to -12.00 dB), and 118 advanced glaucoma patients (MD < -12.00 dB). All subjects had their ONHs imaged in 3D with Spectralis optical coherence tomography. To describe the 3D structural phenotype of glaucoma as a function of severity, we used two different approaches: (1) We extracted human-defined 3D structural parameters of the ONH including retinal nerve fiber layer (RNFL) thickness, lamina cribrosa (LC) shape and depth at different stages of glaucoma; (2) we also employed a geometric deep learning method (i.e. PointNet) to identify the most important 3D structural features that differentiate ONHs from different glaucoma severity groups without any human input. $\bf{Results}$: We observed that the majority of ONH structural changes occurred in the early glaucoma stage, followed by a plateau effect in the later stages. Using PointNet, we also found that 3D ONH structural changes were present in both neural and connective tissues. In both approaches, we observed that structural changes were more prominent in the superior and inferior quadrants of the ONH, particularly in the RNFL, the prelamina, and the LC. As the severity of glaucoma increased, these changes became more diffuse (i.e. widespread), particularly in the LC. $\bf{Conclusions}$: In this study, we were able to uncover complex 3D structural changes of the ONH in both neural and connective tissues as a function of glaucoma severity. We hope to provide new insights into the complex pathophysiology of glaucoma that might help clinicians in their daily clinical care.
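
The severity grouping quoted in the Methods of this abstract can be written as a small lookup. This is only a sketch: the function name `glaucoma_severity` is hypothetical, it applies to subjects already diagnosed with glaucoma (the abstract does not state how normal subjects were defined), and it assumes MD values are reported to two decimal places, so the gap between -6.00 and -6.01 dB never arises in practice.

```python
def glaucoma_severity(md_db: float) -> str:
    """Map a visual field mean deviation (MD, in dB) to a severity group.

    Thresholds follow the abstract:
      mild:     MD >= -6.00 dB
      moderate: MD from -6.01 to -12.00 dB
      advanced: MD <  -12.00 dB
    """
    if md_db >= -6.00:
        return "mild"
    if md_db >= -12.00:
        return "moderate"
    return "advanced"


if __name__ == "__main__":
    # Illustrative values only; these are not measurements from the study.
    for md in (-2.5, -6.00, -6.01, -12.00, -15.3):
        print(f"MD = {md:6.2f} dB -> {glaucoma_severity(md)}")
```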

Attentional Ptycho-Tomography (APT) for three-dimensional nanoscale X-ray imaging with minimal data acquisition and computation time

Nov 29, 2022

Abstract:Noninvasive X-ray imaging of nanoscale three-dimensional objects, e.g. integrated circuits (ICs), generally requires two types of scanning: ptychographic, which is translational and returns estimates of the complex electromagnetic field through the ICs; and tomographic, which collects complex field projections from multiple angles. Here, we present Attentional Ptycho-Tomography (APT), an approach trained to provide accurate reconstructions of ICs despite incomplete measurements, using a dramatically reduced amount of angular scanning. The training process includes regularizing priors based on typical IC patterns and the physics of X-ray propagation. We demonstrate that APT with 12 times fewer angles achieves fidelity comparable to the gold standard with the original set of angles. With the same set of reduced angles, APT also outperforms baseline reconstruction methods. In our experiments, APT achieves a 108-fold aggregate reduction in data acquisition and computation without compromising quality. We expect our physics-assisted machine learning framework could also be applied to other branches of nanoscale imaging.

Noise-resilient approach for deep tomographic imaging

Nov 22, 2022

Abstract:We propose a noise-resilient deep reconstruction algorithm for X-ray tomography. Our approach shows strong noise resilience without obtaining noisy training examples. The advantages of our framework may further enable low-photon tomographic imaging.

AI-based Clinical Assessment of Optic Nerve Head Robustness Superseding Biomechanical Testing

Jun 09, 2022

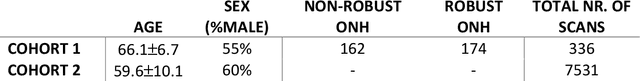

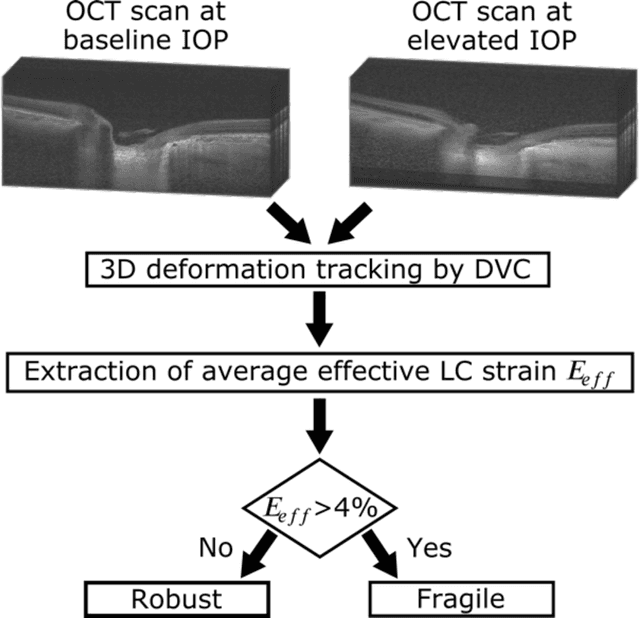

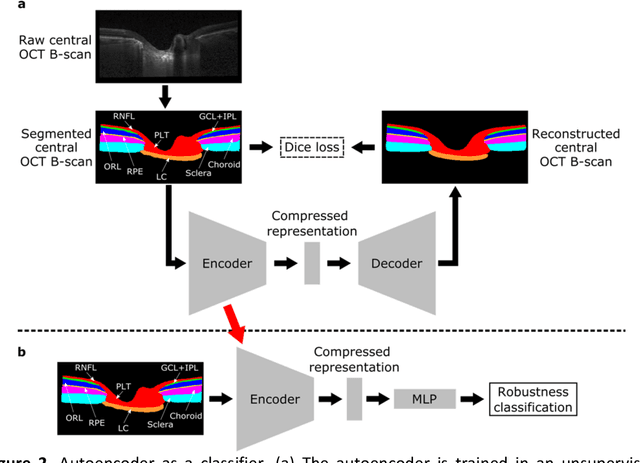

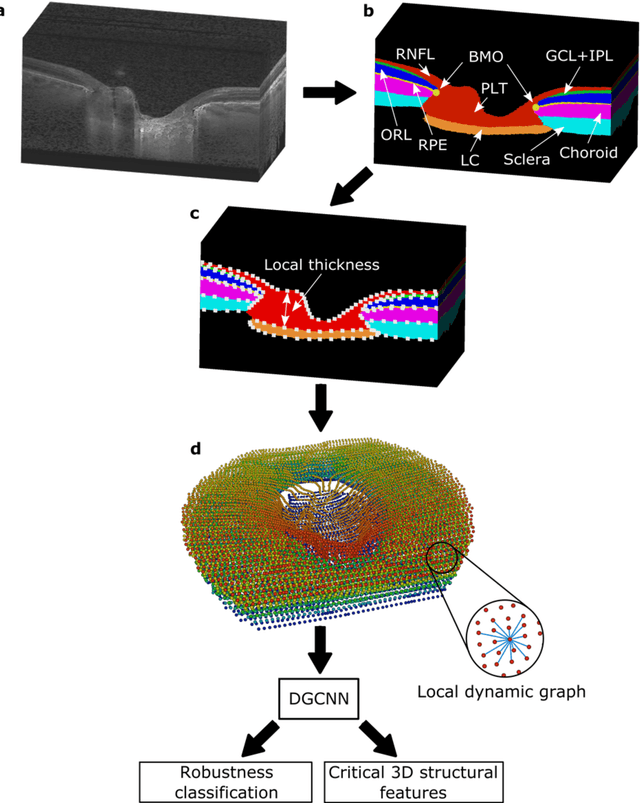

Abstract:$\mathbf{Purpose}$: To use artificial intelligence (AI) to: (1) exploit biomechanical knowledge of the optic nerve head (ONH) from a relatively large population; (2) assess ONH robustness from a single optical coherence tomography (OCT) scan of the ONH; (3) identify what critical three-dimensional (3D) structural features make a given ONH robust. $\mathbf{Design}$: Retrospective cross-sectional study. $\mathbf{Methods}$: 316 subjects had their ONHs imaged with OCT before and after acute intraocular pressure (IOP) elevation through ophthalmo-dynamometry. IOP-induced lamina cribrosa (LC) deformations were then mapped in 3D and used to classify ONHs. Those with LC deformations greater than 4% were considered fragile, while those with deformations less than 4% were considered robust. Learning from these data, we compared three AI algorithms to predict ONH robustness strictly from a baseline (undeformed) OCT volume: (1) a random forest classifier; (2) an autoencoder; and (3) a dynamic graph CNN (DGCNN). The latter algorithm also allowed us to identify what critical 3D structural features make a given ONH robust. $\mathbf{Results}$: All 3 methods were able to predict ONH robustness from 3D structural information alone and without the need to perform biomechanical testing. The DGCNN (area under the receiver operating characteristic curve [AUC]: 0.76 $\pm$ 0.08) outperformed the autoencoder (AUC: 0.70 $\pm$ 0.07) and the random forest classifier (AUC: 0.69 $\pm$ 0.05). Interestingly, to assess ONH robustness, the DGCNN mainly used information from the scleral canal and the LC insertion sites. $\mathbf{Conclusions}$: We propose an AI-driven approach that can assess the robustness of a given ONH solely from a single OCT scan of the ONH, and without the need to perform biomechanical testing. Longitudinal studies should establish whether ONH robustness could help us identify fast visual field loss progressors.
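
The labeling rule in the Methods of this abstract (fragile above a 4% LC deformation, robust below it) amounts to a single threshold. The sketch below is illustrative only: the function name `label_onh` and its parameters are hypothetical, the abstract does not say which deformation measure is thresholded, and treating a value of exactly 4% as robust is an assumption made here because the abstract leaves that case unspecified.

```python
def label_onh(lc_deformation_percent: float, threshold_percent: float = 4.0) -> str:
    """Label an ONH from its IOP-induced LC deformation (in percent).

    Above the threshold -> "fragile"; otherwise -> "robust".
    Assumption: a deformation exactly equal to the threshold counts as robust.
    """
    if lc_deformation_percent > threshold_percent:
        return "fragile"
    return "robust"


if __name__ == "__main__":
    # Illustrative values only; these are not measurements from the study.
    for deformation in (1.8, 4.0, 6.5):
        print(f"{deformation:.1f}% -> {label_onh(deformation)}")
```

Labels produced this way serve as the training targets; the classifiers compared in the abstract then predict them from the baseline OCT volume alone.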

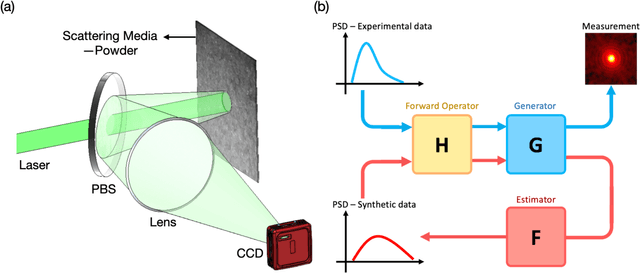

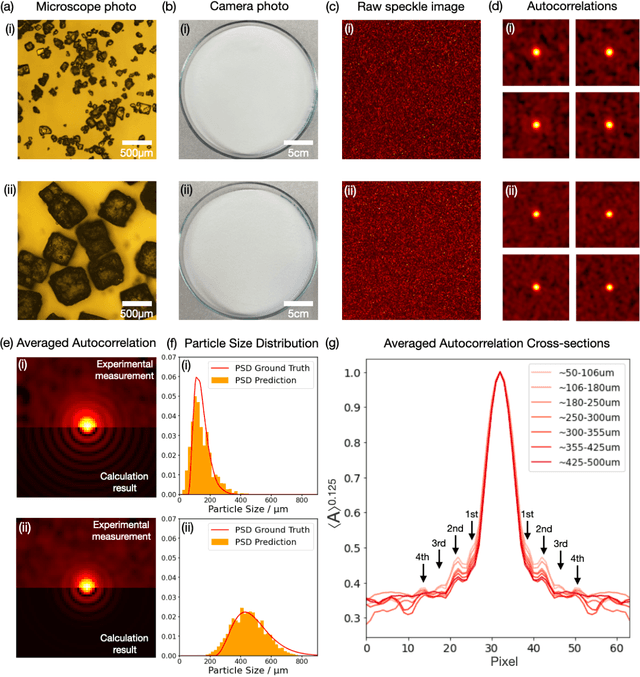

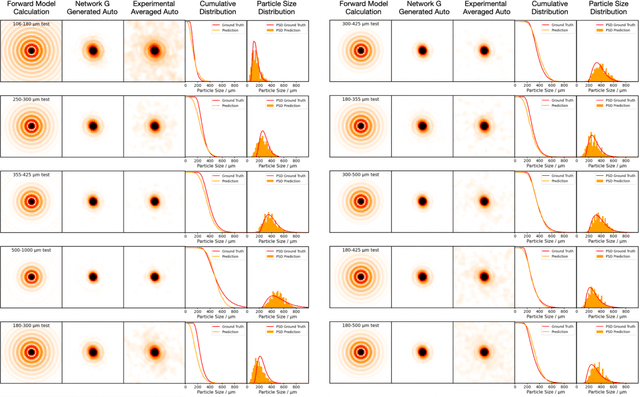

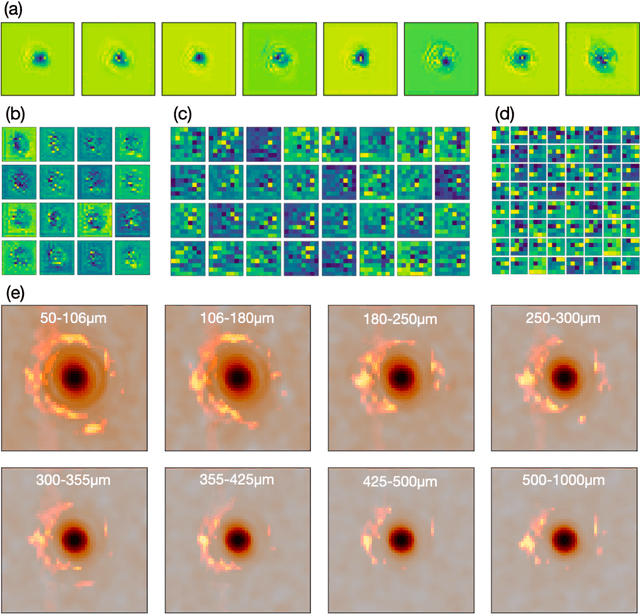

From Laser Speckle to Particle Size Distribution in drying powders: A Physics-Enhanced AutoCorrelation-based Estimator (PEACE)

Apr 20, 2022

Abstract:Extracting quantitative information about highly scattering surfaces from an imaging system is challenging because the phase of the scattered light undergoes multiple folds upon propagation, resulting in complex speckle patterns. One specific application is the drying of wet powders in the pharmaceutical industry, where quantifying the particle size distribution (PSD) is of particular interest. A non-invasive and real-time monitoring probe in the drying process is required, but there is no suitable candidate for this purpose. In this report, we develop a theoretical relationship from the PSD to the speckle image and describe a physics-enhanced autocorrelation-based estimator (PEACE) machine learning algorithm for speckle analysis to measure the PSD of a powder surface. This method solves both the forward and inverse problems together and enjoys increased interpretability, since the machine learning approximator is regularized by the physical law.

Geometric Deep Learning to Identify the Critical 3D Structural Features of the Optic Nerve Head for Glaucoma Diagnosis

Apr 20, 2022

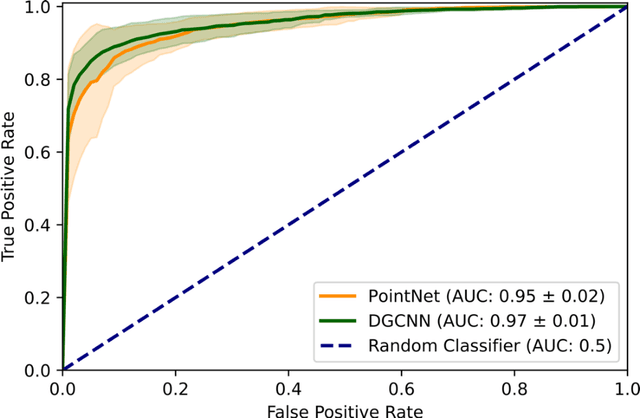

Abstract:Purpose: The optic nerve head (ONH) undergoes complex and deep 3D morphological changes during the development and progression of glaucoma. Optical coherence tomography (OCT) is the current gold standard to visualize and quantify these changes; however, the resulting 3D deep-tissue information has not yet been fully exploited for the diagnosis and prognosis of glaucoma. To this end, we aimed: (1) to compare the performance of two relatively recent geometric deep learning techniques in diagnosing glaucoma from a single OCT scan of the ONH; and (2) to identify the 3D structural features of the ONH that are critical for the diagnosis of glaucoma. Methods: In this study, we included a total of 2,247 non-glaucoma and 2,259 glaucoma scans from 1,725 subjects. All subjects had their ONHs imaged in 3D with Spectralis OCT. All OCT scans were automatically segmented using deep learning to identify major neural and connective tissues. Each ONH was then represented as a 3D point cloud. We used PointNet and dynamic graph convolutional neural network (DGCNN) to diagnose glaucoma from such 3D ONH point clouds and to identify the critical 3D structural features of the ONH for glaucoma diagnosis. Results: Both the DGCNN (AUC: 0.97$\pm$0.01) and PointNet (AUC: 0.95$\pm$0.02) were able to accurately detect glaucoma from 3D ONH point clouds. The critical points formed an hourglass pattern with most of them located in the inferior and superior quadrants of the ONH. Discussion: The diagnostic accuracy of both geometric deep learning approaches was excellent. Moreover, we were able to identify the critical 3D structural features of the ONH for glaucoma diagnosis that tremendously improved the transparency and interpretability of our method. Consequently, our approach may have strong potential to be used in clinical applications for the diagnosis and prognosis of a wide range of ophthalmic disorders.

Physics-assisted Generative Adversarial Network for X-Ray Tomography

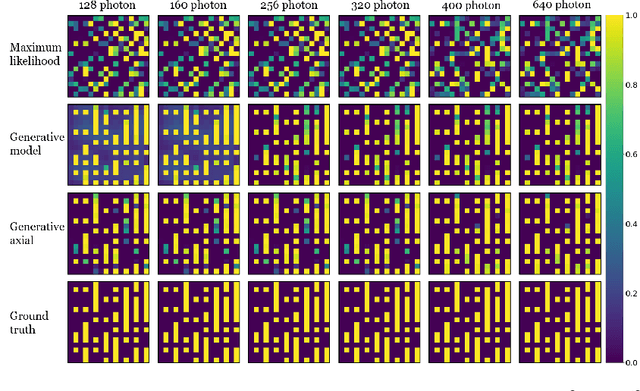

Apr 07, 2022

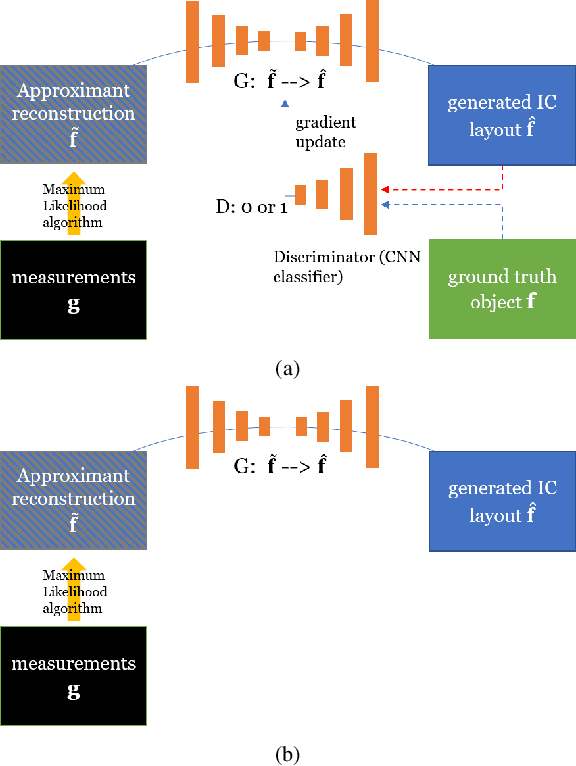

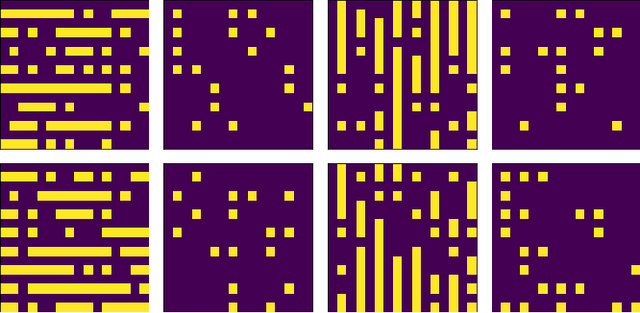

Abstract:X-ray tomography is capable of imaging the interior of objects in three dimensions non-invasively, with applications in biomedical imaging, materials science, electronic inspection, and other fields. The reconstruction process can be an ill-conditioned inverse problem, requiring regularization to obtain satisfactory reconstructions. Recently, deep learning has been adopted for tomographic reconstruction. Unlike iterative algorithms, which require a distribution that is known a priori, deep reconstruction networks can learn a prior distribution through sampling the training distributions. In this work, we develop a Physics-assisted Generative Adversarial Network (PGAN), a two-step algorithm for tomographic reconstruction. In contrast to previous efforts, our PGAN utilizes maximum-likelihood estimates derived from the measurements to regularize the reconstruction with both known physics and the learned prior. The synthetic objects with spatial correlations are integrated circuits (ICs) generated by a proposed model, CircuitFaker. Compared with maximum-likelihood estimation, PGAN can reduce the photon requirement with limited projection angles to achieve a given error rate. We further attribute the improvement to the learned prior by reconstructing objects created without spatial correlations. The advantages of using a prior from deep learning in X-ray tomography may further enable low-photon nanoscale imaging.
