Georg Carle

FedABC: Attention-Based Client Selection for Federated Learning with Long-Term View

Jul 28, 2025

Abstract:Native AI support is a key objective in the evolution of 6G networks, with Federated Learning (FL) emerging as a promising paradigm. FL allows decentralized clients to collaboratively train an AI model without directly sharing their data, preserving privacy. Clients train local models on private data and share model updates, which a central server aggregates to refine the global model and redistribute it for the next iteration. However, client data heterogeneity slows convergence and reduces model accuracy, and frequent client participation imposes communication and computational burdens. To address these challenges, we propose FedABC, an innovative client selection algorithm designed to take a long-term view in managing data heterogeneity and optimizing client participation. Inspired by attention mechanisms, FedABC prioritizes informative clients by evaluating both model similarity and each model's unique contributions to the global model. Moreover, considering the evolving demands of the global model, we formulate an optimization problem to guide FedABC throughout the training process. Following the "later-is-better" principle, FedABC adaptively adjusts the client selection threshold, encouraging greater participation in later training stages. Extensive simulations on CIFAR-10 demonstrate that FedABC significantly outperforms existing approaches in model accuracy and client participation efficiency, achieving comparable performance with 32% fewer clients than the classical FL algorithm FedAvg, and 3.5% higher accuracy with 2% fewer clients than the state-of-the-art. This work marks a step toward deploying FL in heterogeneous, resource-constrained environments, thereby supporting native AI capabilities in 6G networks.
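
The server-side aggregation step mentioned in the abstract can be illustrated with the classical FedAvg rule, which averages client parameters weighted by local dataset size. The following is a minimal sketch of that baseline only, not of FedABC's attention-based selection; the function name `fedavg_aggregate` and the toy parameter vectors are assumptions made for illustration.

```python
import numpy as np

def fedavg_aggregate(client_weights, client_sizes):
    """Return the dataset-size-weighted average of client parameter vectors.

    client_weights: list of 1-D arrays, one flattened model per client (hypothetical).
    client_sizes:   number of local training samples per client (hypothetical).
    """
    sizes = np.asarray(client_sizes, dtype=float)
    stacked = np.stack([np.asarray(w, dtype=float) for w in client_weights])
    coeffs = sizes / sizes.sum()   # each client's share of the total data
    return coeffs @ stacked        # weighted sum over the client axis

# Toy example: two clients, the second holds three times as much data.
updates = [np.array([1.0, 2.0]), np.array([3.0, 4.0])]
global_model = fedavg_aggregate(updates, client_sizes=[1, 3])
```

A client selection scheme would decide which entries appear in `client_weights` each round before this averaging is applied.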

Fast and Scalable Network Slicing by Integrating Deep Learning with Lagrangian Methods

Jan 22, 2024

Abstract:Network slicing is a key technique in 5G and beyond for efficiently supporting diverse services. Many network slicing solutions rely on deep learning to manage complex and high-dimensional resource allocation problems. However, deep learning models suffer from limited generalization and adaptability to dynamic slicing configurations. In this paper, we propose a novel framework that integrates constrained optimization methods and deep learning models, resulting in strong generalization and superior approximation capability. Based on the proposed framework, we design a new neural-assisted algorithm to allocate radio resources to slices to maximize the network utility under inter-slice resource constraints. The algorithm exhibits high scalability, accommodating varying numbers of slices and slice configurations with ease. We implement the proposed solution in a system-level network simulator and evaluate its performance extensively by comparing it to state-of-the-art solutions including deep reinforcement learning approaches. The numerical results show that our solution obtains near-optimal quality-of-service satisfaction and promising generalization performance under different network slicing scenarios.

Advancing Federated Learning in 6G: A Trusted Architecture with Graph-based Analysis

Sep 27, 2023

Abstract:Integrating native AI support into the network architecture is an essential objective of 6G. Federated Learning (FL) emerges as a potential paradigm, facilitating decentralized AI model training across a diverse range of devices under the coordination of a central server. However, several challenges hinder its wide application in the 6G context, such as malicious attacks and privacy snooping on local model updates, and centralization pitfalls. This work proposes a trusted architecture for supporting FL, which uses Distributed Ledger Technology (DLT) and a Graph Neural Network (GNN) and has three key features. First, a pre-processing layer employing homomorphic encryption is incorporated to securely aggregate local models, preserving the privacy of individual models. Second, given the distributed nature and graph structure between clients and nodes in the pre-processing layer, the GNN is leveraged to identify abnormal local models, enhancing system security. Third, DLT is utilized to decentralize the system by selecting one of the candidates to perform the central server's functions. Additionally, DLT ensures reliable data management by recording data exchanges in an immutable and transparent ledger. The feasibility of the novel architecture is validated through simulations, demonstrating improved performance in anomalous model detection and global model accuracy compared to relevant baselines.

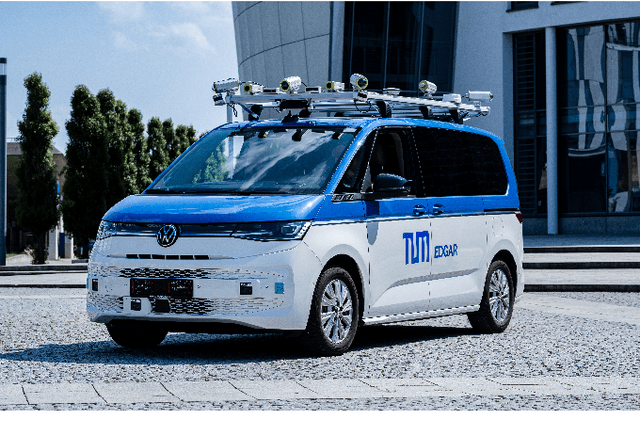

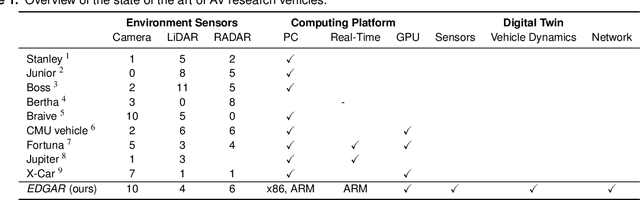

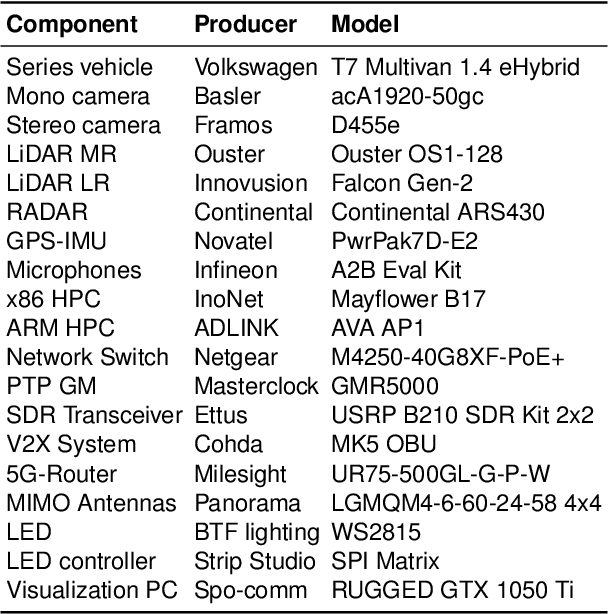

EDGAR: An Autonomous Driving Research Platform -- From Feature Development to Real-World Application

Sep 27, 2023

Abstract:While current research and development of autonomous driving primarily focuses on developing new features and algorithms, the transfer from isolated software components into an entire software stack has been covered sparsely. Besides that, due to the complexity of autonomous software stacks and public road traffic, the optimal validation of entire stacks is an open research problem. Our paper targets these two aspects. We present our autonomous research vehicle EDGAR and its digital twin, a detailed virtual duplication of the vehicle. While the vehicle's setup is closely related to the state of the art, its virtual duplication is a valuable contribution as it is crucial for a consistent validation process from simulation to real-world tests. In addition, different development teams can work with the same model, making integration and testing of the software stacks much easier, significantly accelerating the development process. The real and virtual vehicles are embedded in a comprehensive development environment, which is also introduced. All parameters of the digital twin are provided open-source at https://github.com/TUMFTM/edgar_digital_twin.

Inter-Cell Network Slicing With Transfer Learning Empowered Multi-Agent Deep Reinforcement Learning

Jun 20, 2023

Abstract:Network slicing enables operators to efficiently support diverse applications on a common physical infrastructure. The ever-increasing densification of network deployment leads to complex and non-trivial inter-cell interference, which requires more than inaccurate analytic models to dynamically optimize resource management for network slices. In this paper, we develop a DIRP algorithm with multiple deep reinforcement learning (DRL) agents to cooperatively optimize resource partition in individual cells to fulfill the requirements of each slice, based on two alternative reward functions. Nevertheless, existing DRL approaches usually tie the pretrained model parameters to specific network environments with poor transferability, which raises practical deployment concerns in large-scale mobile networks. Hence, we design a novel transfer learning-aided DIRP (TL-DIRP) algorithm to ease the transfer of DIRP agents across different network environments in terms of sample efficiency, model reproducibility, and algorithm scalability. TL-DIRP consists of two steps: 1) centralized training of a generalized distributed model, and 2) transferring the "generalist" to each local agent as a "specialist" with distributed finetuning and execution. The numerical results show that DIRP not only outperforms existing baseline approaches in terms of faster convergence and higher reward, but, more importantly, that TL-DIRP significantly improves the service performance, with reduced exploration cost, accelerated convergence rate, and enhanced model reproducibility. Compared to a traffic-aware baseline, TL-DIRP achieves an about 15% lower quality of service (QoS) violation ratio for the worst slice service and an 8.8% lower violation ratio on the average service QoS.

* 14 pages, 14 figures, IEEE Open Journal of the Communications Society

Network Slicing via Transfer Learning aided Distributed Deep Reinforcement Learning

Jan 09, 2023

Abstract:Deep reinforcement learning (DRL) has been increasingly employed to handle the dynamic and complex resource management in network slicing. The deployment of DRL policies in real networks, however, is complicated by heterogeneous cell conditions. In this paper, we propose a novel transfer learning (TL) aided multi-agent deep reinforcement learning (MADRL) approach with inter-agent similarity analysis for inter-cell inter-slice resource partitioning. First, we design a coordinated MADRL method with information sharing to intelligently partition resources among slices and manage inter-cell interference. Second, we propose an integrated TL method to transfer the learned DRL policies among different local agents to accelerate policy deployment. The method is composed of a new domain and task similarity measurement approach and a new knowledge transfer approach, which together resolve the questions of from whom to transfer and how to transfer. We evaluate the proposed solution with extensive simulations in a system-level simulator and show that our approach outperforms the state-of-the-art solutions in terms of performance, convergence speed and sample efficiency. Moreover, by applying TL, we achieve an additional gain of over 27% compared with the coordinated MADRL approach without TL.

Q-Learning for Conflict Resolution in B5G Network Automation

Jul 28, 2021

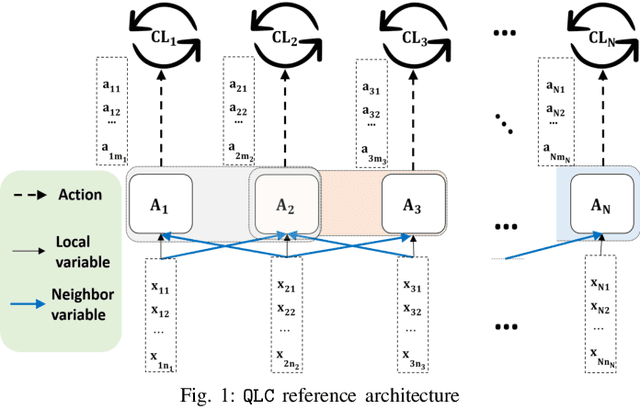

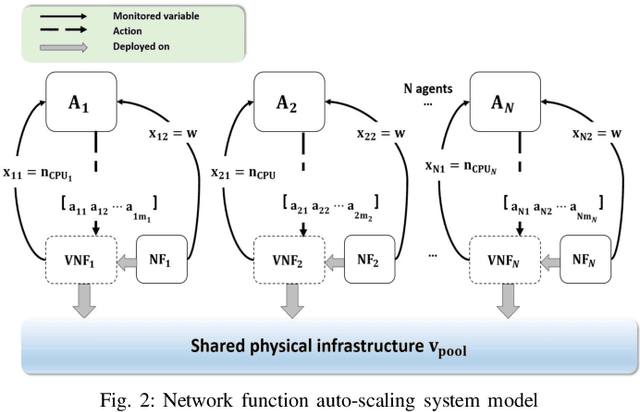

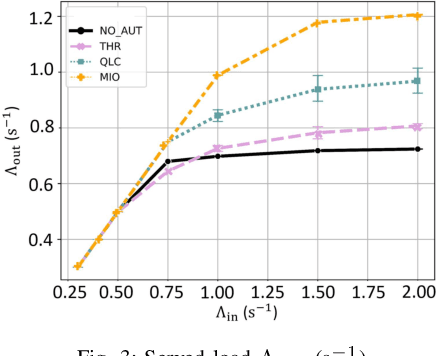

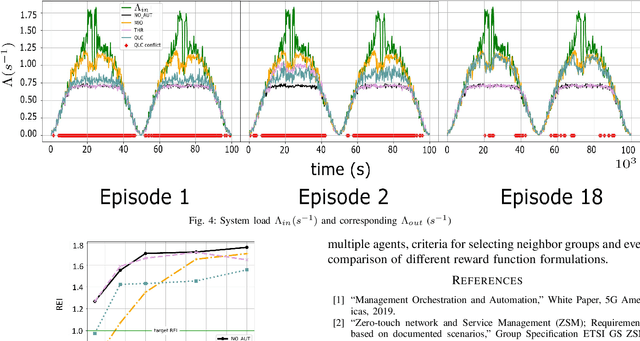

Abstract:Network automation is gaining significant attention in the development of B5G networks, primarily for reducing operational complexity, expenditures and improving network efficiency. Concurrently operating closed loops aiming for individual optimization targets may cause conflicts which, left unresolved, would lead to significant degradation in network Key Performance Indicators (KPIs), thereby resulting in sub-optimal network performance. Centralized coordination, albeit optimal, is impractical in large scale networks and for time-critical applications. Decentralized approaches are therefore envisaged in the evolution to B5G and subsequently, 6G networks. This work explores pervasive intelligence for conflict resolution in network automation, as an alternative to centralized orchestration. A Q-Learning decentralized approach to network automation is proposed, and an application to network slice auto-scaling is designed and evaluated. Preliminary results highlight the potential of the proposed scheme and justify further research work in this direction.
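
The Q-Learning approach named in the abstract builds on the standard tabular update rule, in which an agent nudges its value estimate for a state-action pair toward the observed reward plus the discounted best value of the next state. The sketch below shows only that generic rule; the state and action encoding, the reward, and the `alpha` and `gamma` values are hypothetical and are not taken from the paper.

```python
import numpy as np

def q_update(q_table, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the table."""
    # Temporal-difference target: immediate reward plus discounted best next value.
    td_target = reward + gamma * np.max(q_table[next_state])
    # Move the current estimate a fraction alpha toward the target.
    q_table[state, action] += alpha * (td_target - q_table[state, action])
    return q_table

# Toy example: 3 hypothetical states (e.g. load levels), 2 hypothetical actions
# (e.g. scale a slice up or down), all values initialised to zero.
q = np.zeros((3, 2))
q = q_update(q, state=0, action=1, reward=1.0, next_state=2)
```

In a decentralized setting, each closed loop would hold its own table and apply this update from its local observations.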

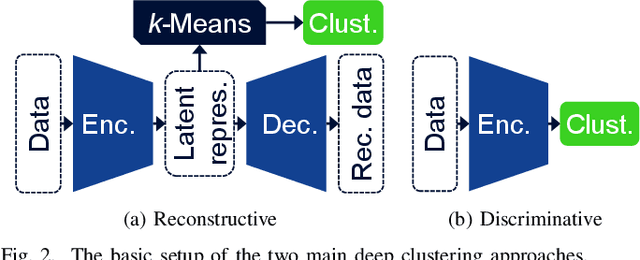

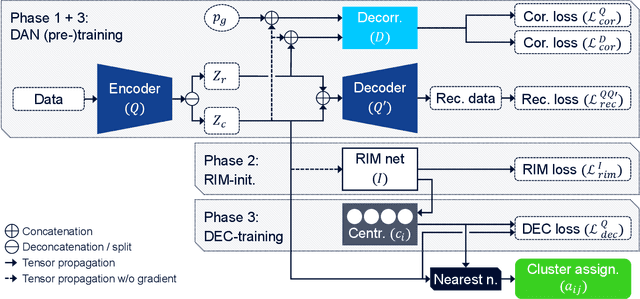

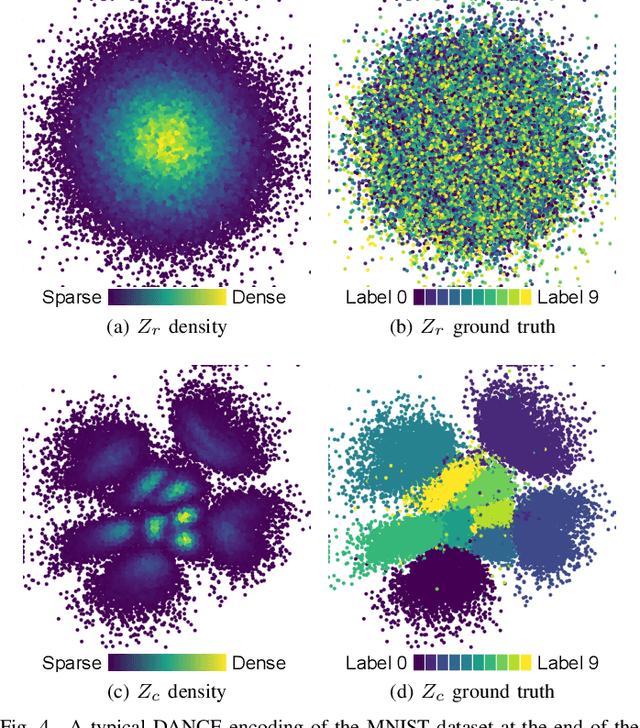

Decorrelating Adversarial Nets for Clustering Mobile Network Data

Mar 11, 2021

Abstract:Deep learning will play a crucial role in enabling cognitive automation for the mobile networks of the future. Deep clustering, a subset of deep learning, could be a valuable tool for many network automation use-cases. Unfortunately, most state-of-the-art clustering algorithms target image datasets, which makes them hard to apply to mobile network data due to their highly tuned nature and related assumptions about the data. In this paper, we propose a new algorithm, DANCE (Decorrelating Adversarial Nets for Clustering-friendly Encoding), intended to be a reliable deep clustering method which also performs well when applied to network automation use-cases. DANCE uses a reconstructive clustering approach, separating clustering-relevant from clustering-irrelevant features in a latent representation. This separation removes unnecessary information from the clustering, increasing consistency and peak performance. We comprehensively evaluate DANCE and other select state-of-the-art deep clustering algorithms, and show that DANCE outperforms these algorithms by a significant margin on a mobile network dataset.

Neural Network-based Quantization for Network Automation

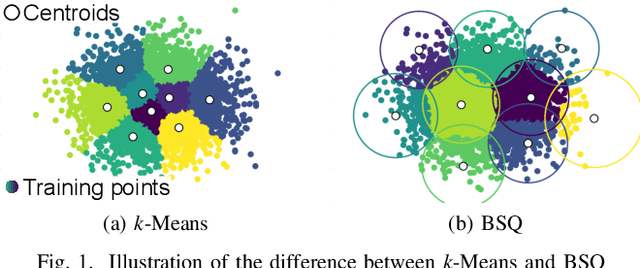

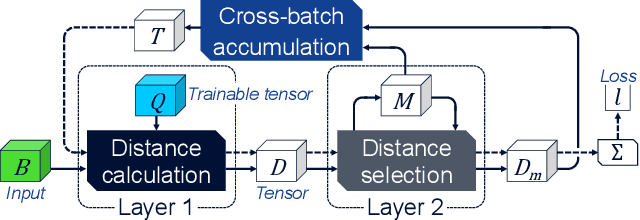

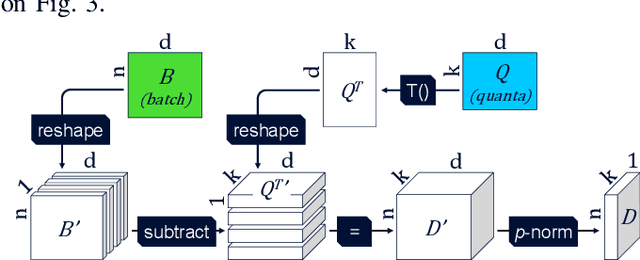

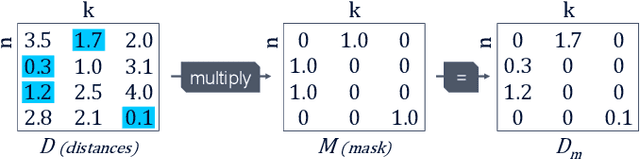

Mar 04, 2021

Abstract:Deep Learning methods have been adopted in mobile networks, especially for network management automation where they provide means for advanced machine cognition. Deep learning methods utilize cutting-edge hardware and software tools, allowing complex cognitive algorithms to be developed. In a recent paper, we introduced the Bounding Sphere Quantization (BSQ) algorithm, a modification of the k-Means algorithm, that was shown to create better quantizations for certain network management use-cases, such as anomaly detection. However, BSQ required a significantly longer time to train than k-Means, a challenge which can be overcome with a neural network-based implementation. In this paper, we present such an implementation of BSQ that utilizes state-of-the-art deep learning tools to achieve a competitive training speed.
