Qi Liao

Reinforcement Learning (RL) Meets Urban Climate Modeling: Investigating the Efficacy and Impacts of RL-Based HVAC Control

May 11, 2025

Abstract:Reinforcement learning (RL)-based heating, ventilation, and air conditioning (HVAC) control has emerged as a promising technology for reducing building energy consumption while maintaining indoor thermal comfort. However, the efficacy of such strategies is influenced by the background climate and their implementation may potentially alter both the indoor climate and local urban climate. This study proposes an integrated framework combining RL with an urban climate model that incorporates a building energy model, aiming to evaluate the efficacy of RL-based HVAC control across different background climates, impacts of RL strategies on indoor climate and local urban climate, and the transferability of RL strategies across cities. Our findings reveal that the reward (defined as a weighted combination of energy consumption and thermal comfort) and the impacts of RL strategies on indoor climate and local urban climate exhibit marked variability across cities with different background climates. The sensitivity of reward weights and the transferability of RL strategies are also strongly influenced by the background climate. Cities in hot climates tend to achieve higher rewards across most reward weight configurations that balance energy consumption and thermal comfort, and those cities with more varying atmospheric temperatures demonstrate greater RL strategy transferability. These findings underscore the importance of thoroughly evaluating RL-based HVAC control strategies in diverse climatic contexts. This study also provides a new insight that city-to-city learning will potentially aid the deployment of RL-based HVAC control.
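
The abstract above defines the reward only as a weighted combination of energy consumption and thermal comfort. A minimal sketch of what such a reward could look like follows; the function name `hvac_reward`, the setpoint, the comfort band, and the linear discomfort penalty are all illustrative assumptions, not the formulation used in the paper. In practice the two terms would likely be normalized before weighting, since they carry different units.

```python
def hvac_reward(energy_kwh, indoor_temp_c, w_energy=0.5,
                setpoint_c=24.0, comfort_band_c=1.0):
    """Return a reward (higher is better) trading energy use against discomfort.

    All parameter names and defaults are hypothetical, chosen for illustration.
    """
    if not 0.0 <= w_energy <= 1.0:
        raise ValueError("w_energy must lie in [0, 1]")
    # Discomfort: degrees Celsius by which the indoor temperature leaves
    # the assumed comfort band around the setpoint.
    discomfort_c = max(0.0, abs(indoor_temp_c - setpoint_c) - comfort_band_c)
    # Weighted cost; the comfort weight is the complement of the energy weight.
    cost = w_energy * energy_kwh + (1.0 - w_energy) * discomfort_c
    return -cost


if __name__ == "__main__":
    # Sweep the energy weight to see how the same step is scored differently.
    for w in (0.2, 0.5, 0.8):
        print(w, hvac_reward(energy_kwh=1.5, indoor_temp_c=26.5, w_energy=w))
```

Sweeping `w_energy` in this way mirrors, in a very simplified form, the reward-weight sensitivity the abstract discusses.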

Fast and Scalable Network Slicing by Integrating Deep Learning with Lagrangian Methods

Jan 22, 2024

Abstract:Network slicing is a key technique in 5G and beyond for efficiently supporting diverse services. Many network slicing solutions rely on deep learning to manage complex and high-dimensional resource allocation problems. However, deep learning models suffer from limited generalization and adaptability to dynamic slicing configurations. In this paper, we propose a novel framework that integrates constrained optimization methods and deep learning models, resulting in strong generalization and superior approximation capability. Based on the proposed framework, we design a new neural-assisted algorithm to allocate radio resources to slices to maximize the network utility under inter-slice resource constraints. The algorithm exhibits high scalability, accommodating varying numbers of slices and slice configurations with ease. We implement the proposed solution in a system-level network simulator and evaluate its performance extensively by comparing it to state-of-the-art solutions including deep reinforcement learning approaches. The numerical results show that our solution obtains near-optimal quality-of-service satisfaction and promising generalization performance under different network slicing scenarios.
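
To make the Lagrangian side of this idea concrete, here is a minimal sketch of a multiplier update for a toy allocation problem: maximize the sum of `w_i * log(x_i)` subject to a single shared capacity constraint. The utility function, the closed-form primal step, and the name `allocate_by_dual_update` are assumptions made for illustration. The paper's algorithm uses a neural model where this sketch uses a closed form, and that model is not reproduced here.

```python
def allocate_by_dual_update(weights, capacity, step=0.1, iters=500, lam0=0.2):
    """Toy Lagrangian allocation: max sum(w_i * log(x_i)) s.t. sum(x_i) <= capacity.

    Hypothetical example; the step size must be small enough for the
    iteration to converge on a given problem instance.
    """
    if capacity <= 0 or any(w <= 0 for w in weights):
        raise ValueError("capacity and all weights must be positive")
    lam = lam0  # Lagrange multiplier (price) of the shared resource
    for _ in range(iters):
        # Primal step: maximizing w_i*log(x_i) - lam*x_i gives x_i = w_i / lam.
        x = [w / lam for w in weights]
        # Multiplier step: raise the price if demand exceeds capacity,
        # lower it otherwise, and keep it strictly positive.
        lam = max(1e-9, lam + step * (sum(x) - capacity))
    return [w / lam for w in weights], lam


if __name__ == "__main__":
    shares, price = allocate_by_dual_update([1.0, 2.0, 3.0], capacity=6.0)
    print(shares, price)
```

For this toy utility the optimum is known in closed form (each slice receives a share proportional to its weight), which makes the sketch easy to check. Adding a slice only adds one more entry to `weights`.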

Inter-Cell Network Slicing With Transfer Learning Empowered Multi-Agent Deep Reinforcement Learning

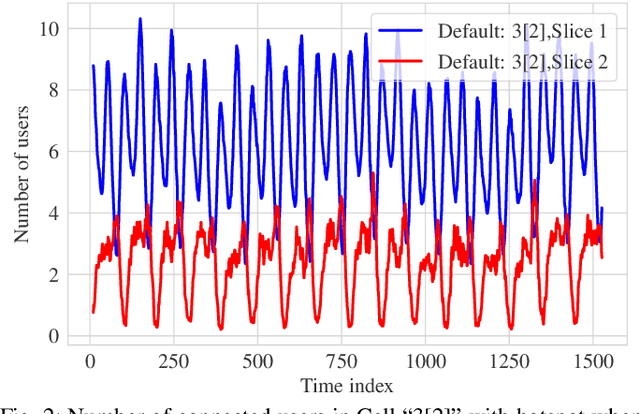

Jun 20, 2023

Abstract:Network slicing enables operators to efficiently support diverse applications on a common physical infrastructure. The ever-increasing densification of network deployment leads to complex and non-trivial inter-cell interference, which requires more than inaccurate analytic models to dynamically optimize resource management for network slices. In this paper, we develop a DIRP algorithm with multiple deep reinforcement learning (DRL) agents to cooperatively optimize resource partition in individual cells to fulfill the requirements of each slice, based on two alternative reward functions. Nevertheless, existing DRL approaches usually tie the pretrained model parameters to specific network environments with poor transferability, which raises practical deployment concerns in large-scale mobile networks. Hence, we design a novel transfer learning-aided DIRP (TL-DIRP) algorithm to ease the transfer of DIRP agents across different network environments in terms of sample efficiency, model reproducibility, and algorithm scalability. The TL-DIRP algorithm consists of two steps: 1) centralized training of a generalized model, and 2) transfer of this "generalist" to each local agent as a "specialist" with distributed fine-tuning and execution. The numerical results show that DIRP outperforms existing baseline approaches with faster convergence and higher reward and, more importantly, that TL-DIRP significantly improves the service performance, with reduced exploration cost, accelerated convergence rate, and enhanced model reproducibility. Compared with a traffic-aware baseline, TL-DIRP reduces the quality of service (QoS) violation ratio by about 15% for the worst slice service and by 8.8% for the average service QoS.

* 14 pages, 14 figures, IEEE Open Journal of the Communications Society

Network Slicing via Transfer Learning aided Distributed Deep Reinforcement Learning

Jan 09, 2023

Abstract:Deep reinforcement learning (DRL) has been increasingly employed to handle the dynamic and complex resource management in network slicing. The deployment of DRL policies in real networks, however, is complicated by heterogeneous cell conditions. In this paper, we propose a novel transfer learning (TL) aided multi-agent deep reinforcement learning (MADRL) approach with inter-agent similarity analysis for inter-cell inter-slice resource partitioning. First, we design a coordinated MADRL method with information sharing to intelligently partition resources among slices and manage inter-cell interference. Second, we propose an integrated TL method to transfer the learned DRL policies among different local agents to accelerate policy deployment. The method is composed of a new domain and task similarity measurement approach and a new knowledge transfer approach, which together resolve the questions of from whom to transfer and how to transfer. We evaluate the proposed solution with extensive simulations in a system-level simulator and show that our approach outperforms the state-of-the-art solutions in terms of performance, convergence speed and sample efficiency. Moreover, by applying TL, we achieve an additional gain of more than 27% over the coordinated MADRL approach without TL.

A Generative Approach for Production-Aware Industrial Network Traffic Modeling

Nov 11, 2022

Abstract:The new wave of digitization induced by Industry 4.0 calls for ubiquitous and reliable connectivity to perform and automate industrial operations. 5G networks can meet the extreme requirements of heterogeneous vertical applications, but the lack of real data and realistic traffic statistics poses many challenges for the optimization and configuration of the network for industrial environments. In this paper, we investigate the network traffic data generated from a laser cutting machine deployed in a Trumpf factory in Germany. We analyze the traffic statistics, capture the dependencies between the internal states of the machine, and model the network traffic as a production-state-dependent stochastic process. We propose a two-step model: first, we model the production process as a multi-state semi-Markov process; then, we learn the conditional distributions of the production-state-dependent packet interarrival time and packet size with generative models. We compare the performance of various generative models including variational autoencoder (VAE), conditional variational autoencoder (CVAE), and generative adversarial network (GAN). The numerical results show a good approximation of the traffic arrival statistics depending on the production state. Among all generative models, CVAE provides in general the best performance in terms of the smallest Kullback-Leibler divergence.
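
The two-step structure described above can be sketched as a small simulator: a semi-Markov process picks the production state and how long it lasts, and a state-conditional distribution generates packet interarrival times. Everything concrete here is an assumption for illustration: the state names, the transition probabilities, the uniform sojourn times, and the exponential interarrival times, which merely stand in for the learned generative models (VAE, CVAE, GAN). Packet sizes would be sampled analogously and are left out.

```python
import random

# Hypothetical parameters, not taken from the paper's measurements.
TRANSITIONS = {  # embedded Markov chain: next-state probabilities
    "idle": {"cutting": 1.0},
    "cutting": {"idle": 0.7, "moving": 0.3},
    "moving": {"cutting": 1.0},
}
SOJOURN_S = {"idle": (2.0, 5.0), "cutting": (5.0, 20.0), "moving": (1.0, 3.0)}
MEAN_GAP_S = {"idle": 0.5, "cutting": 0.01, "moving": 0.05}


def simulate_traffic(horizon_s, seed=0):
    """Return a list of (timestamp_s, state) packet arrivals up to horizon_s."""
    rng = random.Random(seed)
    t, state, packets = 0.0, "idle", []
    while t < horizon_s:
        # Semi-Markov step: the sojourn time follows a state-specific,
        # non-exponential distribution (uniform here, as a placeholder).
        low, high = SOJOURN_S[state]
        end = min(t + rng.uniform(low, high), horizon_s)
        # State-conditional interarrival times (placeholder distribution).
        arrival = t + rng.expovariate(1.0 / MEAN_GAP_S[state])
        while arrival < end:
            packets.append((arrival, state))
            arrival += rng.expovariate(1.0 / MEAN_GAP_S[state])
        t = end
        nxt = TRANSITIONS[state]
        state = rng.choices(list(nxt), weights=list(nxt.values()))[0]
    return packets


if __name__ == "__main__":
    trace = simulate_traffic(60.0, seed=1)
    print(len(trace), trace[:3])
```

Replacing the placeholder `expovariate` draws with samples from a trained conditional model would keep the same control flow.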

Augmented Reality-Empowered Network Planning Services for Private Networks

Jun 24, 2022

Abstract:To support Industry 4.0 applications with haptics and human-machine interaction, the sixth generation (6G) requires a new framework that is fully autonomous, visual, and interactive. In this paper, we propose a novel framework for private network planning services, providing an end-to-end solution that receives visual and sensory data from the user device, reconstructs the 3D network environment and performs network planning on the server, and visualizes the network performance with augmented reality (AR) on the display of the user devices. The solution is empowered by three key technical components: 1) vision- and sensor fusion-based 3D environment reconstruction, 2) ray tracing-based radio map generation and network planning, and 3) AR-empowered network visualization enabled by real-time camera relocalization. We conducted the proof-of-concept in a Bosch plant in Germany and showed good network coverage of the optimized antenna location, as well as high accuracy in both environment reconstruction and camera relocalization. We also achieved real-time AR-supported network monitoring with an end-to-end latency of about 32 ms per frame.

Knowledge Transfer in Deep Reinforcement Learning for Slice-Aware Mobility Robustness Optimization

Mar 07, 2022

Abstract:The legacy mobility robustness optimization (MRO) in self-organizing networks aims at improving handover performance by optimizing cell-specific handover parameters. However, such solutions cannot satisfy the needs of next-generation networks with network slicing, because they guarantee only the received signal strength and not the per-slice service quality. To provide a truly seamless mobility service, we propose a deep reinforcement learning-based slice-aware mobility robustness optimization (SAMRO) approach, which improves handover performance with per-slice service assurance by optimizing slice-specific handover parameters. Moreover, to allow safe and sample-efficient online training, we develop a two-step transfer learning scheme: 1) regularized offline reinforcement learning, and 2) effective online fine-tuning with mixed experience replay. System-level simulations show that, compared with the legacy MRO algorithms, SAMRO significantly improves slice-aware service continuation while optimizing the handover performance.
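
The abstract does not spell out how the mixed experience replay works. One common reading is that each fine-tuning minibatch mixes transitions from the offline dataset with freshly collected online ones. The sketch below follows that reading; the class name `MixedReplayBuffer`, the fixed `offline_fraction`, and sampling with replacement are assumptions, not the paper's scheme.

```python
import random
from collections import deque


class MixedReplayBuffer:
    """Sample minibatches that mix offline and online transitions (hypothetical)."""

    def __init__(self, offline_data, online_capacity=10_000,
                 offline_fraction=0.5, seed=None):
        if not offline_data:
            raise ValueError("offline_data must not be empty")
        if not 0.0 <= offline_fraction <= 1.0:
            raise ValueError("offline_fraction must lie in [0, 1]")
        self.offline = list(offline_data)           # fixed offline dataset
        self.online = deque(maxlen=online_capacity) # oldest entries drop out
        self.offline_fraction = offline_fraction
        self.rng = random.Random(seed)

    def add_online(self, transition):
        self.online.append(transition)

    def sample(self, batch_size):
        # Until online data exists, fall back to offline transitions only.
        if self.online:
            n_off = round(batch_size * self.offline_fraction)
        else:
            n_off = batch_size
        # Sampling with replacement keeps small buffers usable.
        batch = self.rng.choices(self.offline, k=n_off)
        if batch_size > n_off:
            batch += self.rng.choices(list(self.online), k=batch_size - n_off)
        self.rng.shuffle(batch)
        return batch


if __name__ == "__main__":
    buf = MixedReplayBuffer([("offline", i) for i in range(100)], seed=0)
    for i in range(10):
        buf.add_online(("online", i))
    print(buf.sample(8))
```

Keeping a share of offline transitions in every batch is one way to limit how far early online updates can pull the policy from its offline starting point.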

Leveraging Machine Learning for Industrial Wireless Communications

May 05, 2021

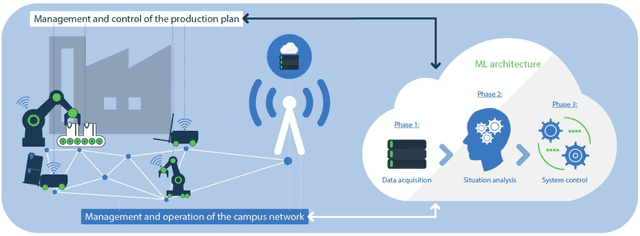

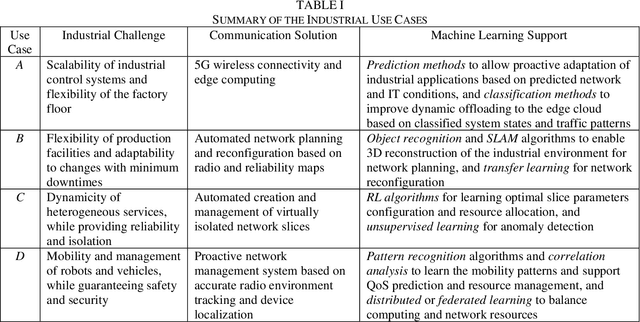

Abstract:Two main trends characterize today's communication landscape and are finding their way into industrial facilities: the rollout of 5G with its distinct support for vertical industries and the increasing success of machine learning (ML). The combination of those two technologies opens the door to many exciting industrial applications, and its impact is expected to increase rapidly in the coming years, given the abundant data growth and the availability of powerful edge computers in production facilities. Unlike most previous work, which has considered the application of 5G and ML in industrial environments separately, this paper highlights the potential and synergies that result from combining them. The overall vision presented here originates from the KICK project, a collaboration of several partners from the manufacturing and communication industries as well as research institutes. This unprecedented blend of 5G and ML expertise creates a unique perspective on ML-supported industrial communications and their role in facilitating industrial automation. The paper identifies key open industrial challenges that are grouped into four use cases: wireless connectivity and edge-cloud integration, flexibility in network reconfiguration, dynamicity of heterogeneous network services, and mobility of robots and vehicles. Moreover, the paper provides insights into the advantages of ML-based industrial communications and discusses current challenges of data acquisition in real systems.
