Martin Kasparick

Doppler Power Spectrum in Channels with von Mises-Fisher Distribution of Scatterers

Sep 03, 2024

Abstract: This paper presents an analytical study of the Doppler spectrum in von Mises-Fisher (vMF) scattering channels. A closed-form expression for the Doppler spectrum is derived and used to investigate the impact of the vMF scattering parameters, i.e., the mean direction and the degree of concentration of scatterers. The spectrum is observed to exhibit exponential behavior when the mobile antenna moves parallel to the mean direction of scatterers, and a Gaussian-like shape when it moves perpendicular to it. The results are verified against Monte Carlo simulations, where an exact match is observed.
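The kind of Monte Carlo check mentioned in the abstract can be sketched as follows. This is a minimal illustration and not the authors' simulation: it assumes a three-dimensional vMF distribution, a mean direction along the z-axis, and a Doppler shift normalized by the maximum Doppler frequency. The function names and the parameter `motion_angle` are hypothetical. It relies on a standard property of the 3-D vMF distribution: the cosine `w` of the angle to the mean direction has a density proportional to `exp(kappa * w)` on [-1, 1], which is consistent with the exponential shape reported for parallel motion.

```python
import math
import random


def sample_vmf_cosine(kappa, rng):
    """Draw w = cos(angle to the mean direction) for a 3-D vMF distribution.

    Uses inverse-CDF sampling of the density proportional to
    exp(kappa * w) on [-1, 1]. Intended for moderate kappa > 0.
    """
    u = rng.random()
    return 1.0 + math.log(u + (1.0 - u) * math.exp(-2.0 * kappa)) / kappa


def sample_doppler(kappa, motion_angle, rng):
    """Normalized Doppler shift (f / f_max) contributed by one scatterer.

    Assumptions of this sketch: the mean scatterer direction is the z-axis
    and the velocity lies in the x-z plane at `motion_angle` (radians)
    from the mean direction.
    """
    w = sample_vmf_cosine(kappa, rng)
    phi = rng.uniform(0.0, 2.0 * math.pi)  # azimuth around the mean direction
    s = math.sqrt(max(0.0, 1.0 - w * w))   # sine of the polar angle
    # Dot product of scatterer direction (s cos phi, s sin phi, w)
    # with the unit velocity (sin a, 0, cos a).
    return s * math.cos(phi) * math.sin(motion_angle) + w * math.cos(motion_angle)


def doppler_histogram(kappa, motion_angle, n_samples=20000, n_bins=40, seed=0):
    """Empirical Doppler density on [-1, 1] as a list of bin heights."""
    rng = random.Random(seed)
    counts = [0] * n_bins
    for _ in range(n_samples):
        nu = sample_doppler(kappa, motion_angle, rng)
        idx = min(n_bins - 1, max(0, int((nu + 1.0) / 2.0 * n_bins)))
        counts[idx] += 1
    bin_width = 2.0 / n_bins
    return [c / (n_samples * bin_width) for c in counts]
```

Calling `doppler_histogram(kappa, 0.0)` gives the parallel-motion case and `doppler_histogram(kappa, math.pi / 2)` the perpendicular case; comparing the two histograms for the same `kappa` shows the qualitative difference in shape described above.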

Correlation Properties in Channels with von Mises-Fisher Distribution of Scatterers

Sep 03, 2024

Abstract: This letter presents simple analytical expressions for the spatial and temporal correlation functions in channels with von Mises-Fisher (vMF) scattering. In contrast to previous results, the expressions presented here are exact and based only on elementary functions, clearly revealing the impact of the underlying parameters. The derived results are validated against numerical integration results, where an exact match is observed. To demonstrate their utility, the presented results are used to analyze spatial correlation across different antenna array geometries and to investigate the temporal correlation of a fluctuating radar signal from a moving target.

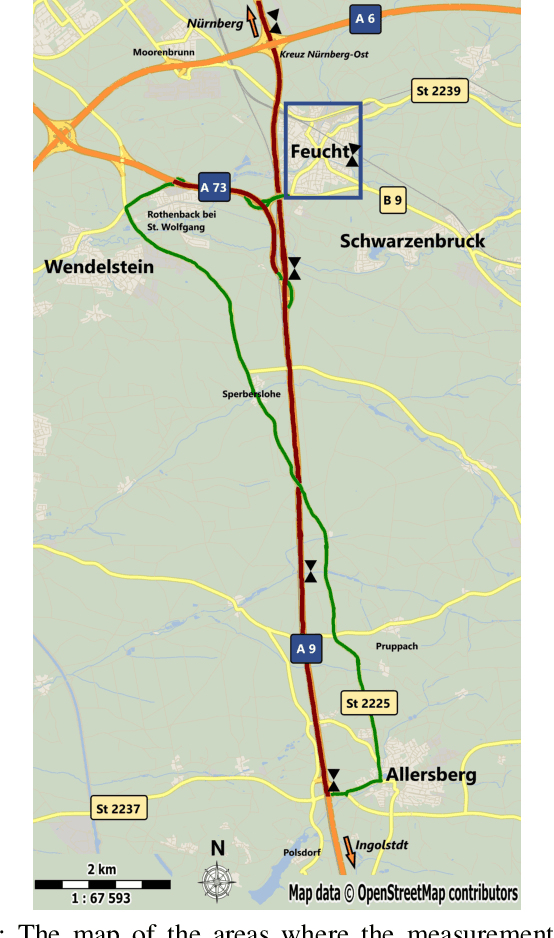

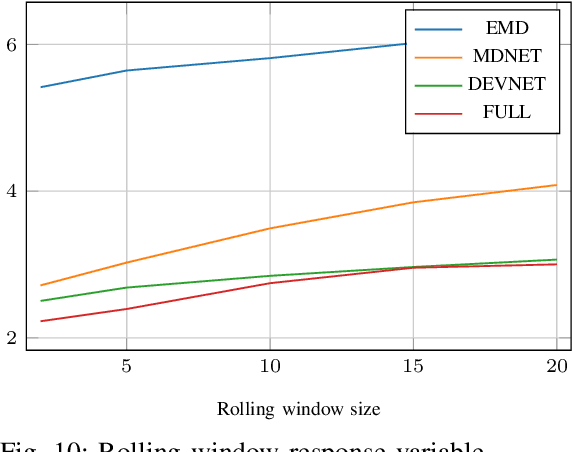

QoS prediction in radio vehicular environments via prior user information

Feb 27, 2024

Abstract: Reliable wireless communications play an important role in the automotive industry, as they help to enhance current use cases and enable new ones such as connected autonomous driving, platooning, cooperative maneuvering, teleoperated driving, and smart navigation. These and other use cases often rely on specific quality of service (QoS) levels for communication. Recently, the area of predictive QoS has received a great deal of attention as a key enabler to forecast communication quality well enough in advance. However, predicting QoS in a reliable manner is a notoriously difficult task. In this paper, we evaluate ML tree-ensemble methods to predict QoS in the range of minutes with data collected from a cellular test network. We discuss radio environment characteristics and showcase how these can be used to improve ML performance and further support the uptake of ML in commercial networks. Specifically, we exploit the correlations between measurements from the radio environment by including information from prior vehicles to enhance the prediction for the target vehicles. Moreover, we extend prior art by showing how longer prediction horizons can be supported.

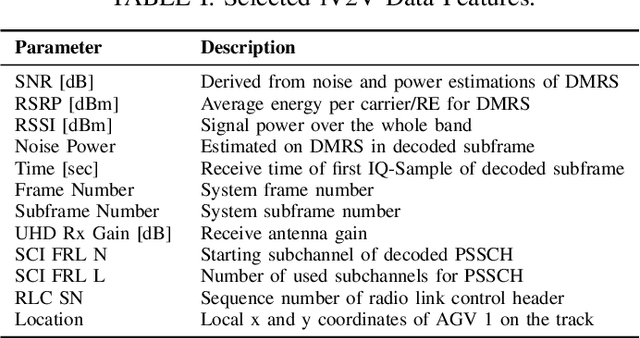

From Empirical Measurements to Augmented Data Rates: A Machine Learning Approach for MCS Adaptation in Sidelink Communication

Sep 29, 2023

Abstract: Due to the lack of a feedback channel in the C-V2X sidelink, finding a suitable modulation and coding scheme (MCS) is a difficult task. However, recent use cases for vehicle-to-everything (V2X) communication with higher demands on data rate necessitate choosing the MCS adaptively. In this paper, we propose a machine learning approach to predict suitable MCS levels. Additionally, we propose the use of quantile prediction and evaluate it in combination with different algorithms for the task of predicting the MCS level with the highest achievable data rate. Thereby, we show significant improvements over conventional methods of choosing the MCS level. Using a machine learning approach, however, requires larger real-world data sets than are currently publicly available for research. For this reason, this paper presents a data set that was acquired in extensive drive tests, and that we make publicly available.
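Quantile prediction is commonly trained and evaluated with the pinball (quantile) loss. The sketch below illustrates that loss in general terms; the abstract does not state which loss or which quantile levels the paper uses, so the function name, the example values, and the choice `q = 0.1` are assumptions made for illustration only.

```python
def pinball_loss(y_true, y_pred, q):
    """Average pinball (quantile) loss for quantile level q in (0, 1).

    Under-prediction (y > prediction) is weighted by q and
    over-prediction (y < prediction) by (1 - q).
    """
    if not 0.0 < q < 1.0:
        raise ValueError("q must lie strictly between 0 and 1")
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for y, p in zip(y_true, y_pred):
        diff = y - p
        total += q * diff if diff >= 0 else (q - 1.0) * diff
    return total / len(y_true)


# Hypothetical example: with a low quantile level, predicting too high
# costs far more than predicting too low by the same amount.
loss_when_too_low = pinball_loss([10.0], [8.0], q=0.1)
loss_when_too_high = pinball_loss([8.0], [10.0], q=0.1)
```

A low quantile level yields a conservative estimate, which is one plausible reason to prefer quantiles over a mean prediction when an overly optimistic MCS choice would cause transmissions to fail.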

The Story of QoS Prediction in Vehicular Communication: From Radio Environment Statistics to Network-Access Throughput Prediction

Feb 23, 2023

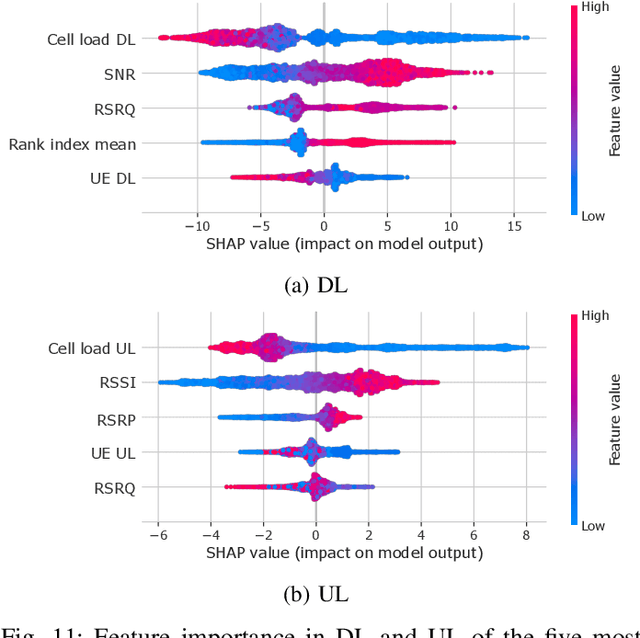

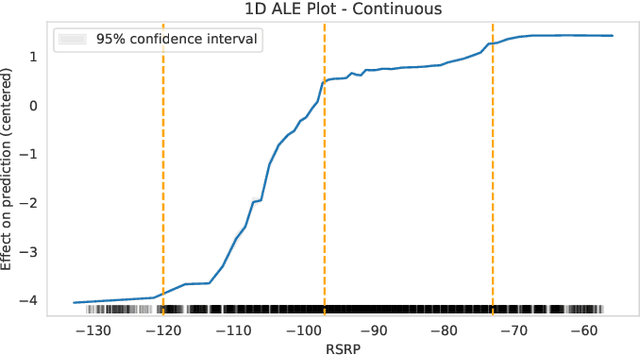

Abstract: As cellular networks evolve towards the 6th Generation (6G), Machine Learning (ML) is seen as a key enabling technology to improve the capabilities of the network. ML provides a methodology for predictive systems, which, in turn, can make networks proactive. This proactive behavior of the network can be leveraged to sustain, for example, a specific Quality of Service (QoS) requirement. With predictive Quality of Service (pQoS), a wide variety of new use cases, both safety- and entertainment-related, are emerging, especially in the automotive sector. Therefore, in this work, we consider maximum throughput prediction enhancing, for example, streaming or HD mapping applications. We discuss the entire ML workflow, highlighting less regarded aspects such as the detailed sampling procedures, the in-depth analysis of the dataset characteristics, the effects of splits on the provided results, and the data availability. Reliable ML models face many challenges during their lifecycle. We highlight how confidence in ML technologies can be built by better understanding the underlying characteristics of the collected data. We discuss feature engineering and the effects of different splits for the training processes, showcasing that random splits might overestimate performance by more than twofold. Moreover, we investigate diverse sets of input features, where network information proved to be most effective, cutting the error by half. Part of our contribution is the validation of multiple ML models within diverse scenarios. We also use Explainable AI (XAI) to show that ML can learn underlying principles of wireless networks without being explicitly programmed. Our data is collected from a deployed network that was under full control of the measurement team and covered different vehicular scenarios and radio environments.
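One common way to avoid the optimistic bias of random splits is to split by measurement run, so that samples from the same drive never appear in both the training and the test set. The sketch below shows such a group-aware split; it is not the paper's procedure, and the record layout and the key name `drive_id` are hypothetical.

```python
import random


def grouped_split(samples, group_key, test_fraction, seed=0):
    """Split records so that each group lands entirely in train or in test.

    `samples` is a list of dicts; `group_key` names the field that
    identifies the measurement run (hypothetical, e.g. "drive_id").
    """
    groups = sorted({s[group_key] for s in samples})
    rng = random.Random(seed)
    rng.shuffle(groups)
    # Hold out at least one group, but never all of them.
    n_test = min(len(groups) - 1, max(1, round(test_fraction * len(groups))))
    test_groups = set(groups[:n_test])
    train = [s for s in samples if s[group_key] not in test_groups]
    test = [s for s in samples if s[group_key] in test_groups]
    return train, test


# Hypothetical records: 5 drives with 4 time-correlated samples each.
records = [
    {"drive_id": d, "t": t, "throughput": 10.0 * d + t}
    for d in range(5)
    for t in range(4)
]
train_set, test_set = grouped_split(records, "drive_id", test_fraction=0.2)
```

With a purely random split, neighbouring samples of the same drive, which are strongly correlated in time, would end up on both sides of the split, which is one mechanism by which performance can be overestimated.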

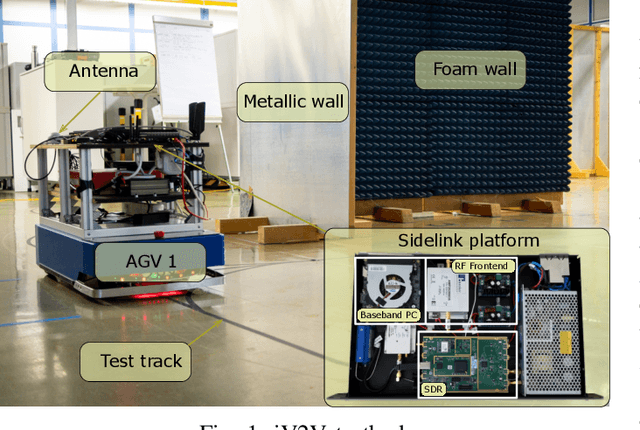

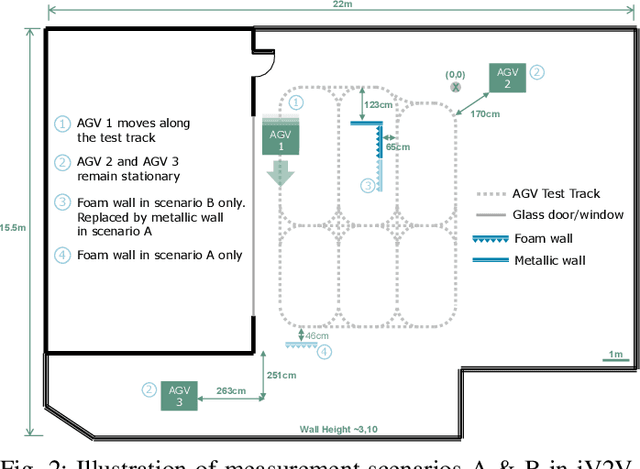

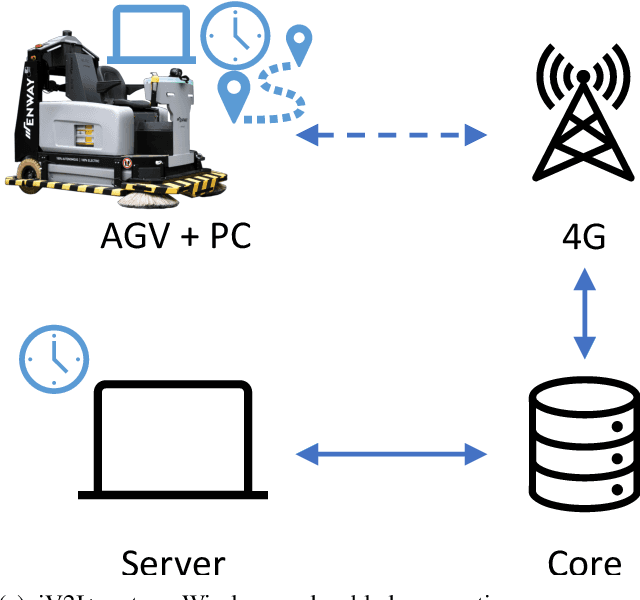

Towards an AI-enabled Connected Industry: AGV Communication and Sensor Measurement Datasets

Jan 10, 2023

Abstract: This paper presents two wireless measurement campaigns in industrial testbeds: industrial Vehicle-to-Vehicle (iV2V) and industrial Vehicle-to-Infrastructure plus Sensor (iV2I+). Detailed information about the two captured datasets is provided as well. iV2V covers sidelink communication scenarios between Automated Guided Vehicles (AGVs), while iV2I+ is conducted at an industrial setting where an autonomous cleaning robot is connected to a private cellular network. The combination of different communication technologies, together with a common measurement methodology, provides insights that can be exploited by Machine Learning (ML) for tasks such as fingerprinting, line-of-sight detection, prediction of quality of service, or link selection. Moreover, the datasets are labelled and pre-filtered for fast on-boarding and applicability. The corresponding testbeds and measurements are also presented in detail for both datasets.

Berlin V2X: A Machine Learning Dataset from Multiple Vehicles and Radio Access Technologies

Dec 21, 2022

Abstract: The evolution of wireless communications into 6G and beyond is expected to rely on new machine learning (ML)-based capabilities. These can enable proactive decisions and actions from wireless-network components to sustain quality-of-service (QoS) and user experience. Moreover, new use cases in the area of vehicular and industrial communications will emerge. Specifically in the area of vehicle communication, vehicle-to-everything (V2X) schemes will benefit strongly from such advances. With this in mind, we have conducted a detailed measurement campaign with the purpose of enabling a plethora of diverse ML-based studies. The resulting datasets offer GPS-located wireless measurements across diverse urban environments for both cellular (with two different operators) and sidelink radio access technologies, thus enabling a variety of different studies towards V2X. The datasets are labeled and sampled with a high time resolution. Furthermore, we make the data publicly available with all the necessary information to support the on-boarding of new researchers. We provide an initial analysis of the data showing some of the challenges that ML needs to overcome and the features that ML can leverage, as well as some hints at potential research studies.

MIMO Systems with Reconfigurable Antennas: Joint Channel Estimation and Mode Selection

Nov 24, 2022

Abstract: Reconfigurable antennas (RAs) are a promising technology to enhance the capacity and coverage of wireless communication systems. However, RA systems face two major challenges: (i) the high computational complexity of mode selection, and (ii) the high overhead of channel estimation for all modes. In this paper, we develop a low-complexity iterative mode selection algorithm for data transmission in an RA-MIMO system. Furthermore, we study channel estimation in an RA multi-user MIMO system. Given the coherence time, however, it is challenging to estimate the channels of all modes. We propose a mode selection scheme to select a subset of modes, train channels for the selected subset, and predict channels for the remaining modes. In addition, we propose a prediction scheme based on pattern correlation between modes. Representative simulation results demonstrate the system's channel estimation error and achievable sum-rate for various selected modes and different signal-to-noise ratios (SNRs).

Machine Learning-based Methods for Reconfigurable Antenna Mode Selection in MIMO Systems

Nov 24, 2022

Abstract: MIMO technology has enabled spatial multiple access and has provided a higher system spectral efficiency (SE). However, this technology has some drawbacks, such as the high number of RF chains, which increases system complexity. One solution to this problem is to employ reconfigurable antennas (RAs) that can support different radiation patterns during transmission to provide similar performance with fewer RF chains. In this regard, the system aims to maximize the SE with respect to optimum beamforming design and RA mode selection. Due to the non-convexity of this problem, we propose machine learning-based methods for RA mode selection in both dynamic and static scenarios. In the static scenario, we present how to solve the RA mode selection problem, an integer optimization problem in nature, via deep convolutional neural networks (DCNN). A multi-armed bandit (MAB) consisting of offline and online training is employed for the dynamic RA state selection. For the proposed MAB, the computational complexity of the optimization problem is reduced. Finally, the proposed methods in both dynamic and static scenarios are compared with exhaustive search and random selection methods.

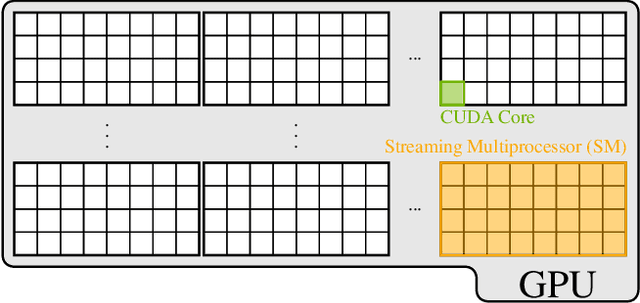

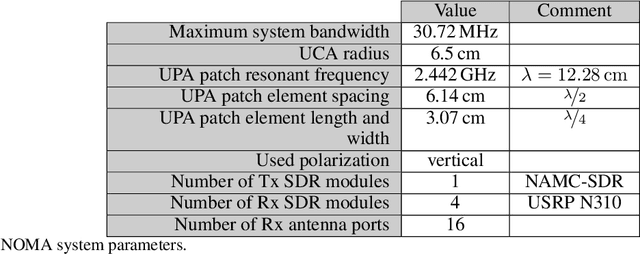

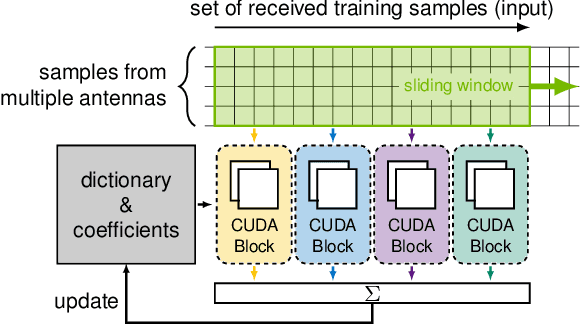

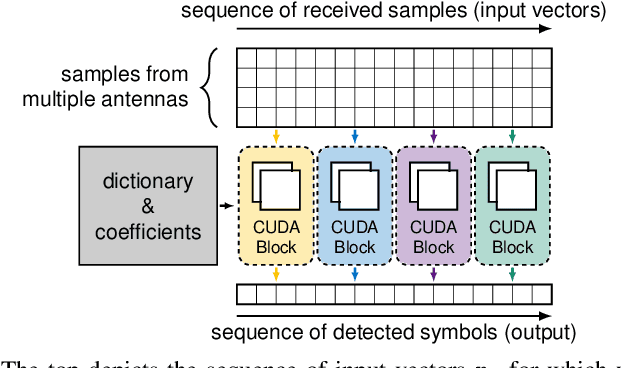

Real-Time GPU-Accelerated Machine Learning Based Multiuser Detection for 5G and Beyond

Jan 14, 2022

Abstract: Adaptive partially linear beamforming meets the need of 5G and future 6G applications for high flexibility and adaptability. The recently proposed multiuser (MU) detection method makes it possible to choose an appropriate tradeoff between conflicting goals. Due to their high spatial resolution, nonlinear beamforming filters can significantly outperform linear approaches in stationary scenarios with massive connectivity. However, a dramatic decrease in performance can be expected in high mobility scenarios because they are very susceptible to changes in the wireless channel. Given these changes, the robustness of linear filters is required. One way to respond appropriately is to use online machine learning algorithms. The theory of algorithms based on the adaptive projected subgradient method (APSM) is rich, and they promise accurate tracking capabilities in dynamic wireless environments. However, one of the main challenges comes from the real-time implementation of these algorithms, which involve projections onto time-varying closed convex sets. While the projection operations are relatively simple, their vast number poses a challenge in ultralow latency (ULL) applications where latency constraints must be satisfied in every radio frame. Taking non-orthogonal multiple access (NOMA) systems as an example, this paper explores the acceleration of APSM-based algorithms through massive parallelization. The result is a GPU-accelerated real-time implementation of an orthogonal frequency-division multiplexing (OFDM)-based transceiver that enables detection latency of less than one millisecond and therefore complies with the requirements of 5G and beyond. To meet the stringent physical layer latency requirements, careful co-design of hardware and software is essential, especially in virtualized wireless systems with hardware accelerators.
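To make "projections onto closed convex sets" concrete, the sketch below projects a real-valued weight vector onto a hyperslab, a closed convex set that is often used in set-theoretic adaptive filtering. It is an illustration only: the abstract does not specify which sets the paper uses, and the function name and the variables `w`, `x`, `y`, and `eps` are assumptions of this example. The paper targets GPUs; plain Python is used here only to show the arithmetic.

```python
def project_onto_hyperslab(w, x, y, eps):
    """Project w onto the hyperslab {v : |<v, x> - y| <= eps}.

    w, x : lists of floats of equal length (weights and one input sample)
    y    : desired response for this sample
    eps  : half-width of the slab (tolerated error), eps >= 0
    """
    inner = sum(wi * xi for wi, xi in zip(w, x))
    norm_sq = sum(xi * xi for xi in x)
    if norm_sq == 0.0:
        return list(w)  # degenerate sample: nothing to project along
    if inner > y + eps:
        step = (y + eps - inner) / norm_sq   # move back to the upper face
    elif inner < y - eps:
        step = (y - eps - inner) / norm_sq   # move up to the lower face
    else:
        return list(w)                       # already inside the slab
    return [wi + step * xi for wi, xi in zip(w, x)]


def average_of_projections(w, samples, eps):
    """Equal-weight average of projections onto several hyperslabs.

    Each projection depends only on w and one (x, y) pair, so the
    projections can be computed independently of each other.
    """
    projected = [project_onto_hyperslab(w, x, y, eps) for x, y in samples]
    n = len(projected)
    return [sum(p[i] for p in projected) / n for i in range(len(w))]
```

Because each projection in `average_of_projections` is independent of the others, this style of update lends itself to parallel execution, which is in line with the massive parallelization the abstract describes.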
