Matthias Mehlhose

Uplink Multi-User MIMO Implementation in OpenAirInterface for a Cell-Free O-RAN Testbed

Jan 14, 2026

Abstract:Cell-Free Multiple-Input Multiple-Output (MIMO) and Open Radio Access Network (O-RAN) have been active research topics in the wireless communication community in recent years. As an open-source software implementation of the 3rd Generation Partnership Project (3GPP) 5th Generation (5G) protocol stack, OpenAirInterface (OAI) has become a valuable tool for deploying and testing new ideas in wireless communication systems. In this paper, we present our OAI-based real-time uplink Multi-User MIMO (MU-MIMO) testbed developed at Fraunhofer HHI. As a part of our Cell-Free MIMO testbed development, we built a 2x2 MU-MIMO system using general-purpose computers and commercially available software-defined radios (SDRs). Using a modified OAI next-Generation Node-B (gNB) and two unmodified OAI user equipment (UE), we show that it is feasible to use Sounding Reference Signal (SRS) channel estimates to compute uplink combiners. Our results verify that this method can be used to separate and decode signals from two users transmitting in nonorthogonal time-frequency resources. This work serves as an important verification step to build a complete Cell-Free MU-MIMO system that leverages time division duplexing (TDD) reciprocity to do downlink beamforming over multiple cells.
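To illustrate the idea of computing an uplink combiner from channel estimates, here is a minimal sketch for one subcarrier of a 2x2 system. The abstract does not say which combiner the testbed uses; zero-forcing is assumed here purely for illustration, and the channel values, function names, and noiseless setup are hypothetical.

```python
def zf_combiner_2x2(h):
    """Zero-forcing combiner W = H^-1 for a 2x2 complex channel matrix.

    h[r][u] is the estimated channel from user u to receive antenna r
    (in the abstract's setting this estimate would come from SRS).
    """
    (a, b), (c, d) = h
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("channel matrix is near singular; users cannot be separated")
    return [[d / det, -b / det], [-c / det, a / det]]


def matvec(m, v):
    """Multiply a 2x2 matrix by a length-2 vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1]]


# Hypothetical channel estimate for a single subcarrier.
H = [[1.0 + 0.5j, 0.3 - 0.2j],
     [0.2 + 0.1j, 0.9 - 0.4j]]

# Two users transmit QPSK symbols on the same time-frequency resource.
x = [1 + 1j, -1 + 1j]
y = matvec(H, x)                      # received signal (noise omitted)
x_hat = matvec(zf_combiner_2x2(H), y)  # separated user symbols
```

With noise present, a regularized (MMSE-style) combiner is usually preferred over plain inversion, since zero-forcing amplifies noise when the two user channels are nearly parallel.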

Parallel APSM for Fast and Adaptive Digital SIC in Full-Duplex Transceivers with Nonlinearity

Jul 12, 2022

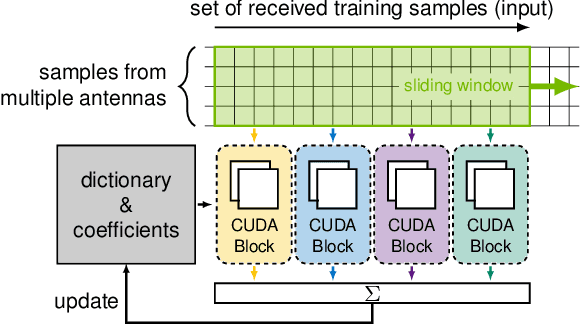

Abstract:This paper presents a kernel-based adaptive filter that is applied for the digital domain self-interference cancellation (SIC) in a transceiver operating in full-duplex (FD) mode. In FD, the benefit of simultaneous transmission and reception of signals comes at the price of strong self-interference (SI). In this work, we are primarily interested in suppressing the SI using an adaptive filter, namely the adaptive projected subgradient method (APSM), in a reproducing kernel Hilbert space (RKHS) of functions. Using the projection concept as a powerful tool, APSM is used to model and consequently remove the SI. A low-complexity and fast-tracking algorithm is provided taking advantage of parallel projections as well as the kernel trick in RKHS. The performance of the proposed method is evaluated on real measurement data. The results illustrate the good performance of the proposed adaptive filter compared to popular benchmarks. They demonstrate that the kernel-based algorithm achieves a favorable level of digital SIC while enabling parallel computation-based implementation within a rich and nonlinear function space, thanks to the employed adaptive filtering method.
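The parallel-projection idea can be sketched as follows. This is a simplified, real-valued illustration and not the paper's algorithm: it assumes a Gaussian kernel, uniform weights, hyperslab constraints of width `eps`, and no dictionary sparsification. The class name, parameters, and the toy nonlinearity are all hypothetical.

```python
import math


def gauss_kernel(a, b, sigma=0.5):
    """Gaussian kernel; note k(x, x) = 1."""
    return math.exp(-((a - b) ** 2) / (2.0 * sigma ** 2))


class KernelAPSM:
    """Kernel adaptive filter with averaged parallel hyperslab projections."""

    def __init__(self, window=4, eps=0.01, mu=1.0, sigma=0.5):
        self.window, self.eps, self.mu, self.sigma = window, eps, mu, sigma
        self.centers = []  # inputs seen so far (kernel centers)
        self.targets = []  # desired outputs for those inputs
        self.coeffs = []   # f(x) = sum_i coeffs[i] * k(centers[i], x)

    def predict(self, x):
        return sum(c * gauss_kernel(xi, x, self.sigma)
                   for c, xi in zip(self.coeffs, self.centers))

    def update(self, x, y):
        self.centers.append(x)
        self.targets.append(y)
        self.coeffs.append(0.0)
        n = len(self.centers)
        recent = range(max(0, n - self.window), n)
        # Each projection uses the same current estimate, so the loop
        # iterations are independent and could run in parallel.
        steps = {}
        for j in recent:
            err = self.targets[j] - self.predict(self.centers[j])
            if err > self.eps:
                steps[j] = err - self.eps   # project onto upper face of the hyperslab
            elif err < -self.eps:
                steps[j] = err + self.eps   # project onto lower face
        # Move toward the uniform average of the projections.
        for j, beta in steps.items():
            self.coeffs[j] += self.mu * beta / len(recent)


# Toy run: learn a hypothetical memoryless nonlinearity sample by sample.
flt = KernelAPSM()
errors = []
for n in range(200):
    x = math.sin(0.7 * n)
    y = x + 0.5 * x ** 3
    errors.append(abs(y - flt.predict(x)))  # error before the update
    flt.update(x, y)
```

In a self-interference canceller, `x` would be built from the known transmit samples and `y` would be the received signal, so that `y - predict(x)` is the residual after cancellation.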

GPU-Accelerated Machine Learning in Non-Orthogonal Multiple Access

Jun 13, 2022

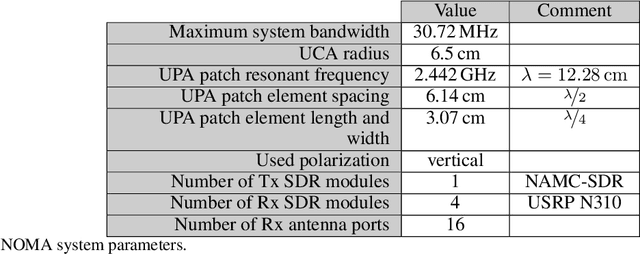

Abstract:Non-orthogonal multiple access (NOMA) is an interesting technology that enables massive connectivity as required in future 5G and 6G networks. While purely linear processing already achieves good performance in NOMA systems, in certain scenarios, non-linear processing is mandatory to ensure acceptable performance. In this paper, we propose a neural network architecture that combines the advantages of both linear and non-linear processing. Its real-time detection performance is demonstrated by a highly efficient implementation on a graphics processing unit (GPU). Using real measurements in a laboratory environment, we show the superiority of our approach over conventional methods.

Real-Time GPU-Accelerated Machine Learning Based Multiuser Detection for 5G and Beyond

Jan 14, 2022

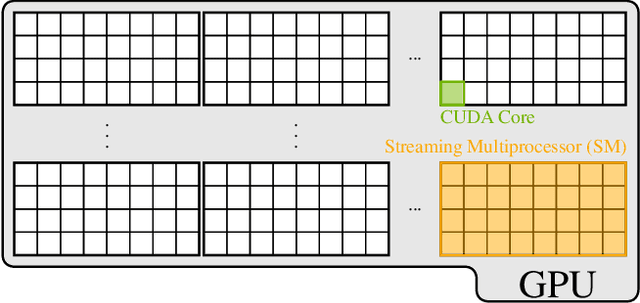

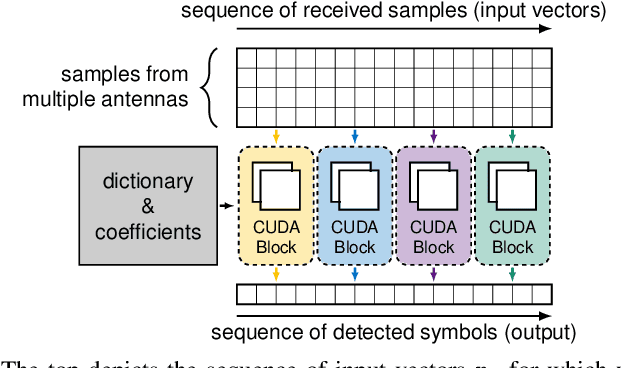

Abstract:Adaptive partial linear beamforming meets the need of 5G and future 6G applications for high flexibility and adaptability. The recently proposed multiuser (MU) detection method makes it possible to choose an appropriate tradeoff between conflicting goals. Due to their high spatial resolution, nonlinear beamforming filters can significantly outperform linear approaches in stationary scenarios with massive connectivity. However, a dramatic decrease in performance can be expected in high mobility scenarios because they are very susceptible to changes in the wireless channel. Given these changes, the robustness of linear filters is required. One way to respond appropriately is to use online machine learning algorithms. The theory of algorithms based on the adaptive projected subgradient method (APSM) is rich, and they promise accurate tracking capabilities in dynamic wireless environments. However, one of the main challenges comes from the real-time implementation of these algorithms, which involve projections on time-varying closed convex sets. While the projection operations are relatively simple, their vast number poses a challenge in ultralow latency (ULL) applications where latency constraints must be satisfied in every radio frame. Taking non-orthogonal multiple access (NOMA) systems as an example, this paper explores the acceleration of APSM-based algorithms through massive parallelization. The result is a GPU-accelerated real-time implementation of an orthogonal frequency-division multiplexing (OFDM)-based transceiver that enables detection latency of less than one millisecond and therefore complies with the requirements of 5G and beyond. To meet the stringent physical layer latency requirements, careful co-design of hardware and software is essential, especially in virtualized wireless systems with hardware accelerators.

Machine Learning-Based Adaptive Receive Filtering: Proof-of-Concept on an SDR Platform

Nov 11, 2019

Abstract:Conventional multiuser detection techniques either require a large number of antennas at the receiver for a desired performance, or they are too complex for practical implementation. Moreover, many of these techniques, such as successive interference cancellation (SIC), suffer from errors in parameter estimation (user channels, covariance matrix, noise variance, etc.) that is performed before detection of user data symbols. As an alternative to conventional methods, this paper proposes and demonstrates a low-complexity practical Machine Learning (ML) based receiver that achieves similar (and at times better) performance to the SIC receiver. The proposed receiver does not require parameter estimation; instead it uses supervised learning to detect the user modulation symbols directly. We perform comparisons with minimum mean square error (MMSE) and SIC receivers in terms of symbol error rate (SER) and complexity.
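For readers unfamiliar with the SIC baseline mentioned above, the following is a minimal two-user sketch on a single receive antenna. It is not the paper's receiver: it assumes QPSK, perfectly known channel coefficients, and no noise, and all names and values are hypothetical.

```python
S = 2 ** -0.5  # per-component amplitude of unit-power QPSK


def qpsk_slice(z):
    """Hard decision: map z to the nearest QPSK constellation point."""
    return complex(S if z.real >= 0 else -S, S if z.imag >= 0 else -S)


def sic_detect(y, h_strong, h_weak):
    """Detect the stronger user first, cancel it, then detect the weaker user."""
    x_strong = qpsk_slice(y / h_strong)   # weak user is treated as noise here
    residual = y - h_strong * x_strong    # subtract the reconstructed strong signal
    x_weak = qpsk_slice(residual / h_weak)
    return x_strong, x_weak


# Hypothetical channels: user 1 is received much more strongly than user 2.
h1, h2 = 1.0 + 0.2j, 0.3 - 0.1j
sent = (complex(S, S), complex(-S, S))
y = h1 * sent[0] + h2 * sent[1]          # superimposed received sample
detected = sic_detect(y, h1, h2)
```

The cancellation step relies on `h_strong` being accurate. An error in that estimate leaves part of the strong signal in `residual`, which is the sensitivity to parameter estimation that the abstract points out.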
