Ganesh Ramakrishnan

Dynamic Multi-Expert Projectors with Stabilized Routing for Multilingual Speech Recognition

Jan 27, 2026

Abstract:Recent advances in LLM-based ASR connect frozen speech encoders with Large Language Models (LLMs) via lightweight projectors. While effective in monolingual settings, a single projector struggles to capture the diverse acoustic-to-semantic mappings required for multilingual ASR. To address this, we propose SMEAR-MoE, a stabilized Mixture-of-Experts projector that ensures dense gradient flow to all experts, preventing expert collapse while enabling cross-lingual sharing. We systematically compare monolithic, static multi-projector, and dynamic MoE designs across four Indic languages (Hindi, Marathi, Tamil, Telugu). Our SMEAR-MoE achieves strong performance, delivering up to a 7.6% relative WER reduction over the single-projector baseline, while maintaining comparable runtime efficiency. Analysis of expert routing further shows linguistically meaningful specialization, with related languages sharing experts. These results demonstrate that stable multi-expert projectors are key to scalable and robust multilingual ASR.
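The abstract does not spell out how "dense gradient flow to all experts" is obtained. One common way to get it is to merge expert parameters by their routing probabilities instead of selecting a top-k subset, so every expert contributes to every forward pass. The sketch below illustrates that idea only; the linear experts, the mean-pooled utterance-level router, and all names (`soft_merged_projector`, `router_w`, `expert_ws`) are assumptions for illustration, not the paper's implementation.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over a 1-D score vector.
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def soft_merged_projector(x, router_w, expert_ws):
    """Project encoder frames with a probability-weighted merge of experts.

    x:         (T, d_in)        speech-encoder frames for one utterance
    router_w:  (d_in, E)        hypothetical linear router
    expert_ws: (E, d_in, d_out) one linear projector per expert (assumed)
    """
    # Utterance-level routing: pool the frames, then score each expert.
    probs = softmax(x.mean(axis=0) @ router_w)          # (E,)
    # Merge the expert weights; every expert has non-zero weight,
    # so each one would receive gradient during training.
    merged_w = np.tensordot(probs, expert_ws, axes=1)   # (d_in, d_out)
    return x @ merged_w, probs
```

For linear experts, the merged projector gives the same output as a probability-weighted sum of the individual expert outputs, while requiring only one matrix multiply over the frames.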

Lost in Transcription: How Speech-to-Text Errors Derail Code Understanding

Jan 20, 2026

Abstract:Code understanding is a foundational capability in software engineering tools and developer workflows. However, most existing systems are designed for English-speaking users interacting via keyboards, which limits accessibility in multilingual and voice-first settings, particularly in regions like India. Voice-based interfaces offer a more inclusive modality, but spoken queries involving code present unique challenges due to the presence of non-standard English usage, domain-specific vocabulary, and custom identifiers such as variable and function names, often combined with code-mixed expressions. In this work, we develop a multilingual speech-driven framework for code understanding that accepts spoken queries in a user's native language, transcribes them using Automatic Speech Recognition (ASR), applies code-aware ASR output refinement using Large Language Models (LLMs), and interfaces with code models to perform tasks such as code question answering and code retrieval through benchmarks such as CodeSearchNet, CoRNStack, and CodeQA. Focusing on four widely spoken Indic languages and English, we systematically characterize how transcription errors impact downstream task performance. We also identify key failure modes in ASR for code and demonstrate that LLM-guided refinement significantly improves performance across both transcription and code understanding stages. Our findings underscore the need for code-sensitive adaptations in speech interfaces and offer a practical solution for building robust, multilingual voice-driven programming tools.

Improving Video Question Answering through query-based frame selection

Jan 12, 2026

Abstract:Video Question Answering (VideoQA) models enhance understanding and interaction with audiovisual content, making it more accessible, searchable, and useful for a wide range of fields such as education, surveillance, entertainment, and content creation. Due to heavy compute requirements, most large visual language models (VLMs) for VideoQA rely on a fixed number of frames by uniformly sampling the video. However, this process does not pick important frames or capture the context of the video. We present a novel query-based selection of frames relevant to the questions based on submodular mutual information (SMI) functions. By replacing uniform frame sampling with query-based selection, our method ensures that the chosen frames provide complementary and essential visual information for accurate VideoQA. We evaluate our approach on the MVBench dataset, which spans a diverse set of multi-action video tasks. VideoQA accuracy on this dataset was assessed using two VLMs, namely Video-LLaVA and LLaVA-NeXT, both of which originally employed uniform frame sampling. Experiments were conducted using both uniform and query-based sampling strategies. An accuracy improvement of up to 4% was observed when using query-based frame selection over uniform sampling. Qualitative analysis further highlights that query-based selection, using SMI functions, consistently picks frames better aligned with the question. We opine that such query-based frame selection can enhance accuracy in a wide range of tasks that rely on only a subset of video frames.
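To make the contrast with uniform sampling concrete, here is a minimal sketch of greedy query-based frame selection. The abstract does not say which SMI function is used, so the objective below is a stand-in chosen for simplicity: query relevance plus a facility-location coverage term, which is monotone submodular when similarities are non-negative. The function name `select_frames`, the cosine-similarity embeddings, and the trade-off weight `lam` are all assumptions.

```python
import numpy as np

def select_frames(frame_emb, query_emb, k, lam=1.0):
    """Greedily pick k frame indices for a question.

    Stand-in objective (not the paper's SMI function):
        F(A) = sum_{a in A} rel[a] + lam * sum_v max_{a in A} sim[v, a]
    frame_emb: (N, d) frame embeddings, query_emb: (d,) question embedding.
    """
    f = frame_emb / np.linalg.norm(frame_emb, axis=1, keepdims=True)
    q = query_emb / np.linalg.norm(query_emb)
    rel = np.clip(f @ q, 0.0, None)        # relevance of each frame to the query
    sim = np.clip(f @ f.T, 0.0, None)      # frame-to-frame similarity

    n = len(f)
    taken = np.zeros(n, dtype=bool)
    cover = np.zeros(n)                    # best similarity of each frame to the chosen set
    for _ in range(min(k, n)):
        # Marginal gain of adding each candidate frame to the current set.
        gains = rel + lam * np.maximum(sim - cover[:, None], 0.0).sum(axis=0)
        gains[taken] = -np.inf             # never pick the same frame twice
        best = int(np.argmax(gains))
        taken[best] = True
        cover = np.maximum(cover, sim[:, best])
    return sorted(np.flatnonzero(taken).tolist())
```

Returning the indices in temporal order keeps the selected frames usable as a drop-in replacement for a uniformly sampled frame list.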

Uncertainty-Aware Subset Selection for Robust Visual Explainability under Distribution Shifts

Dec 09, 2025

Abstract:Subset selection-based methods are widely used to explain deep vision models: they attribute predictions by highlighting the most influential image regions and support object-level explanations. While these methods perform well in in-distribution (ID) settings, their behavior under out-of-distribution (OOD) conditions remains poorly understood. Through extensive experiments across multiple ID-OOD sets, we find that the reliability of existing subset-based methods degrades markedly, yielding redundant, unstable, and uncertainty-sensitive explanations. To address these shortcomings, we introduce a framework that combines submodular subset selection with layer-wise, gradient-based uncertainty estimation to improve robustness and fidelity without requiring additional training or auxiliary models. Our approach estimates uncertainty via adaptive weight perturbations and uses these estimates to guide submodular optimization, ensuring diverse and informative subset selection. Empirical evaluations show that, beyond mitigating the weaknesses of existing methods under OOD scenarios, our framework also yields improvements in ID settings. These findings highlight limitations of current subset-based approaches and demonstrate how uncertainty-driven optimization can enhance attribution and object-level interpretability, paving the way for more transparent and trustworthy AI in real-world vision applications.

Consistency Is the Key: Detecting Hallucinations in LLM Generated Text By Checking Inconsistencies About Key Facts

Nov 15, 2025

Abstract:Large language models (LLMs), despite their remarkable text generation capabilities, often hallucinate and generate text that is factually incorrect and not grounded in real-world knowledge. This poses serious risks in domains like healthcare, finance, and customer support. A typical way to use LLMs is via the APIs provided by LLM vendors where there is no access to model weights or options to fine-tune the model. Existing methods to detect hallucinations in such settings where the model access is restricted or constrained by resources typically require making multiple LLM API calls, increasing latency and API cost. We introduce CONFACTCHECK, an efficient hallucination detection approach that does not leverage any external knowledge base and works on the simple intuition that responses to factual probes within the generated text should be consistent within a single LLM and across different LLMs. Rigorous empirical evaluation on multiple datasets that cover both the generation of factual texts and the open generation shows that CONFACTCHECK can detect hallucinated facts efficiently using fewer resources and achieves higher accuracy scores compared to existing baselines that operate under similar conditions. Our code is available here.

DrishtiKon: Multi-Granular Visual Grounding for Text-Rich Document Images

Jun 26, 2025

Abstract:Visual grounding in text-rich document images is a critical yet underexplored challenge for document intelligence and visual question answering (VQA) systems. We present DrishtiKon, a multi-granular visual grounding framework designed to enhance interpretability and trust in VQA for complex, multilingual documents. Our approach integrates robust multi-lingual OCR, large language models, and a novel region matching algorithm to accurately localize answer spans at block, line, word, and point levels. We curate a new benchmark from the CircularsVQA test set, providing fine-grained, human-verified annotations across multiple granularities. Extensive experiments demonstrate that our method achieves state-of-the-art grounding accuracy, with line-level granularity offering the best trade-off between precision and recall. Ablation studies further highlight the benefits of multi-block and multi-line reasoning. Comparative evaluations with leading vision-language models reveal the limitations of current VLMs in precise localization, underscoring the effectiveness of our structured, alignment-based approach. Our findings pave the way for more robust and interpretable document understanding systems in real-world, text-centric scenarios. Code and dataset have been made available at https://github.com/kasuba-badri-vishal/DhrishtiKon.

Enhancing Multi-Image Question Answering via Submodular Subset Selection

May 15, 2025

Abstract:Large multimodal models (LMMs) have achieved high performance in vision-language tasks involving a single image, but they struggle when presented with a collection of multiple images (the Multiple Image Question Answering scenario). These tasks, which involve reasoning over a large number of images, present issues in scalability (with an increasing number of images) and retrieval performance. In this work, we propose an enhancement for the retriever framework introduced in the MIRAGE model using submodular subset selection techniques. Our method leverages query-aware submodular functions, such as GraphCut, to pre-select a subset of semantically relevant images before the main retrieval component. We demonstrate that using anchor-based queries and augmenting the data improves the effectiveness of the submodular-retriever pipeline, particularly in large haystack sizes.

FairPO: Robust Preference Optimization for Fair Multi-Label Learning

May 05, 2025

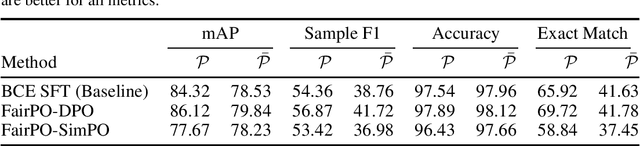

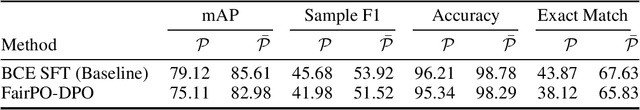

Abstract:We propose FairPO, a novel framework designed to promote fairness in multi-label classification by directly optimizing preference signals with a group robustness perspective. In our framework, the set of labels is partitioned into privileged and non-privileged groups, and a preference-based loss inspired by Direct Preference Optimization (DPO) is employed to more effectively differentiate true positive labels from confusing negatives within the privileged group, while preserving baseline classification performance for non-privileged labels. By framing the learning problem as a robust optimization over groups, our approach dynamically adjusts the training emphasis toward groups with poorer performance, thereby mitigating bias and ensuring a fairer treatment across diverse label categories. In addition, we outline plans to extend this approach by investigating alternative loss formulations such as Simple Preference Optimisation (SimPO) and Contrastive Preference Optimization (CPO) to exploit reference-free reward formulations and contrastive training signals. Furthermore, we plan to extend FairPO with multilabel generation capabilities, enabling the model to dynamically generate diverse and coherent label sets for ambiguous inputs.

Language translation, and change of accent for speech-to-speech task using diffusion model

May 04, 2025

Abstract:Speech-to-speech translation (S2ST) aims to convert spoken input in one language to spoken output in another, typically focusing on either language translation or accent adaptation. However, effective cross-cultural communication requires handling both aspects simultaneously - translating content while adapting the speaker's accent to match the target language context. In this work, we propose a unified approach for simultaneous speech translation and change of accent, a task that remains underexplored in current literature. Our method reformulates the problem as a conditional generation task, where target speech is generated based on phonemes and guided by target speech features. Leveraging the power of diffusion models, known for high-fidelity generative capabilities, we adapt text-to-image diffusion strategies by conditioning on source speech transcriptions and generating Mel spectrograms representing the target speech with desired linguistic and accentual attributes. This integrated framework enables joint optimization of translation and accent adaptation, offering a more parameter-efficient and effective model compared to traditional pipelines.

Inducing Robustness in a 2 Dimensional Direct Preference Optimization Paradigm

May 03, 2025

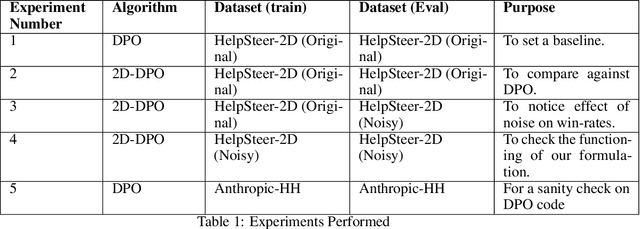

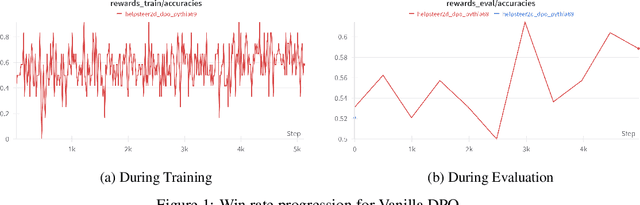

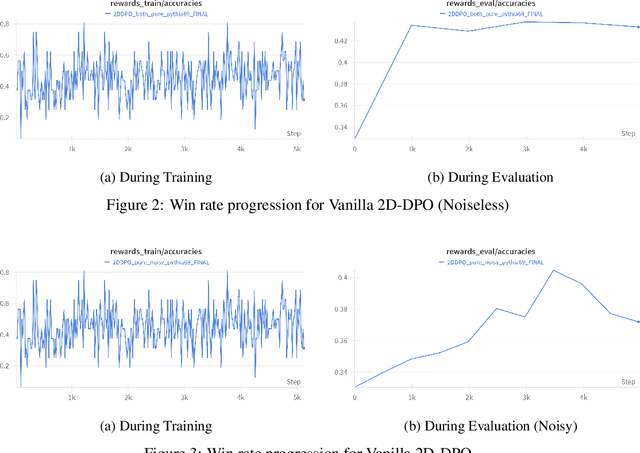

Abstract:Direct Preference Optimisation (DPO) has emerged as a powerful method for aligning Large Language Models (LLMs) with human preferences, offering a stable and efficient alternative to approaches that use Reinforcement Learning from Human Feedback. In this work, we investigate the performance of DPO using open-source preference datasets. One of the major drawbacks of DPO is that it doesn't induce granular scoring and treats all the segments of the responses with equal propensity. However, this is not practically true for human preferences since even "good" responses have segments that may not be preferred by the annotator. To resolve this, a 2-dimensional scoring for DPO alignment called 2D-DPO was proposed. We explore the 2D-DPO alignment paradigm and the advantages it provides over the standard DPO by comparing their win rates. It is observed that these methods, even though effective, are not robust to label/score noise. To counter this, we propose an approach of incorporating segment-level score noise robustness into the 2D-DPO algorithm. Along with theoretical backing, we also provide empirical verification in favour of the algorithm and introduce other noise models that can be present.
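For reference, the standard (response-level) DPO objective that this abstract takes as its starting point can be written in a few lines. The sketch below computes the loss for a single preference pair from summed sequence log-probabilities. It covers only vanilla DPO, not the segment-level 2D-DPO scoring or the proposed noise-robust variant, whose details the abstract does not give. The function and argument names are illustrative.

```python
import math

def dpo_loss(pol_chosen, pol_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Vanilla DPO loss for one (chosen, rejected) pair.

    Each argument is the summed log-probability of a full response under
    the policy (pol_*) or the frozen reference model (ref_*).
    """
    # Implicit reward margin between the chosen and rejected responses.
    margin = beta * ((pol_chosen - ref_chosen) - (pol_rejected - ref_rejected))
    # -log(sigmoid(margin)), written in a numerically stable form.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))
```

Because the loss depends on a single scalar margin per response pair, every segment of a response is weighted equally, which is the limitation the abstract attributes to DPO.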
