Friedrich Fraundorfer

Graz University of Technology

A Streamlined Attention-Based Network for Descriptor Extraction

Jan 19, 2026

Abstract:We introduce SANDesc, a Streamlined Attention-Based Network for Descriptor extraction that aims to improve on existing architectures for keypoint description. Our descriptor network learns to compute descriptors that improve matching without modifying the underlying keypoint detector. We employ a revised U-Net-like architecture enhanced with Convolutional Block Attention Modules and residual paths, enabling effective local representation while maintaining computational efficiency. We refer to the building blocks of our model as Residual U-Net Blocks with Attention. The model is trained using a modified triplet loss combined with a curriculum learning-inspired hard negative mining strategy, which improves training stability. Extensive experiments on HPatches, MegaDepth-1500, and the Image Matching Challenge 2021 show that training SANDesc on top of existing keypoint detectors improves results on multiple matching tasks compared to the original keypoint descriptors. At the same time, SANDesc has only 2.4 million parameters. As a further contribution, we introduce a new urban dataset featuring 4K images and pre-calibrated intrinsics, designed to evaluate feature extractors. On this benchmark, SANDesc achieves substantial performance gains over existing descriptors while operating with limited computational resources.
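
The abstract pairs a modified triplet loss with curriculum-inspired hard negative mining but does not spell out the modification. As a rough illustration only, the sketch below shows a standard triplet margin loss with in-batch negative mining, where a hypothetical `hardness` parameter moves the selected negative from the easiest to the hardest one as training progresses. The function names, the linear schedule, and the margin values are assumptions, not SANDesc's actual loss.

```python
import math


def l2_distance(a, b):
    # Euclidean distance between two descriptor vectors.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss_mined(anchors, positives, margin=1.0, hardness=1.0):
    """Mean triplet margin loss with in-batch negative mining.

    anchors[i] and positives[i] describe the same keypoint in two images;
    every other positive in the batch is a candidate negative for anchor i.
    `hardness` in [0, 1] is a hypothetical curriculum knob: 1.0 selects the
    hardest (closest) negative, 0.0 the easiest (farthest) one.
    Requires a batch of at least two pairs.
    """
    n = len(anchors)
    total = 0.0
    for i in range(n):
        d_pos = l2_distance(anchors[i], positives[i])
        # Distances to all non-matching descriptors, hardest (smallest) first.
        d_negs = sorted(
            l2_distance(anchors[i], positives[j]) for j in range(n) if j != i
        )
        k = round((1.0 - hardness) * (len(d_negs) - 1))
        total += max(0.0, margin + d_pos - d_negs[k])
    return total / n


def curriculum_hardness(epoch, warmup_epochs=10):
    # Hypothetical linear schedule: easy negatives first, hardest after warm-up.
    return min(1.0, epoch / warmup_epochs)


# Example: three perfectly matched descriptor pairs, margin 2.0.
batch = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
for epoch in (0, 10):
    h = curriculum_hardness(epoch)
    print(epoch, round(triplet_loss_mined(batch, batch, margin=2.0, hardness=h), 3))
```

Starting with easier negatives keeps early gradients small and stable, which is one plausible reading of why a curriculum helps training stability.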

Trifocal Tensor and Relative Pose Estimation with Known Vertical Direction

Dec 22, 2025

Abstract:This work presents two novel solvers for estimating the relative poses among views with known vertical directions. The vertical direction of each camera view can be easily obtained from inertial measurement units (IMUs), which are widely used in autonomous vehicles, mobile phones, and unmanned aerial vehicles (UAVs). Given the known vertical directions, our algorithms only need to solve for two rotation angles and two translation vectors. In this paper, we describe a linear closed-form solution that requires only four point correspondences in three views. We also propose a minimal solution with three point correspondences using the latest Gröbner basis solver. Since the proposed methods require fewer point correspondences, they can be efficiently applied within the RANSAC framework for outlier removal and pose estimation in visual odometry. The proposed methods have been tested on both synthetic data and real-world scenes from KITTI. The experimental results show that the accuracy of the estimated poses is superior to that of alternative methods.
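
Knowing the vertical direction removes two of the three rotational degrees of freedom per view. Once every view is pre-rotated so that its measured gravity vector points along a common axis, the remaining relative rotation between any two views is a single angle about that axis, so three views leave two unknown angles. The minimal sketch below illustrates only this parametrization, assuming +y as the vertical axis and gravity given as a 3-vector in camera coordinates. It is not the paper's linear or Gröbner-basis solver, and all helper names are hypothetical.

```python
import math


def matvec(R, v):
    # 3x3 matrix times 3-vector.
    return [sum(R[i][k] * v[k] for k in range(3)) for i in range(3)]


def matmul(A, B):
    # 3x3 matrix product.
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(R):
    return [list(row) for row in zip(*R)]


def yaw_rotation(theta):
    """Rotation by theta about the vertical (y) axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_x(a):
    # Tilt about the camera x-axis (stands in for IMU-measured roll/pitch).
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def align_to_vertical(g):
    """Rotation mapping the measured gravity direction g onto +y.

    Vector-alignment form of Rodrigues' formula:
    R = I + [v]_x + [v]_x^2 / (1 + c), with v = a x e_y and c = a . e_y.
    """
    n = math.sqrt(sum(x * x for x in g))
    a = [x / n for x in g]
    v = [-a[2], 0.0, a[0]]  # a x (0, 1, 0)
    c = a[1]
    if c < -1.0 + 1e-12:
        # g points along -y: rotate by pi about the x-axis.
        return [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, -1.0]]
    K = [[0.0, -v[2], v[1]], [v[2], 0.0, -v[0]], [-v[1], v[0], 0.0]]
    K2 = matmul(K, K)
    f = 1.0 / (1.0 + c)
    return [[(1.0 if i == j else 0.0) + K[i][j] + K2[i][j] * f for j in range(3)]
            for i in range(3)]


def is_pure_yaw(R, tol=1e-9):
    # A pure yaw rotation leaves the vertical axis unchanged.
    y = matvec(R, [0.0, 1.0, 0.0])
    return all(abs(p - q) < tol for p, q in zip(y, [0.0, 1.0, 0.0]))


# Example: two tilted cameras (camera-from-world orientations), world up = +y.
up = [0.0, 1.0, 0.0]
R1 = matmul(rot_x(0.2), yaw_rotation(0.3))
R2 = matmul(rot_x(-0.1), yaw_rotation(1.1))
A1 = align_to_vertical(matvec(R1, up))  # from each view's gravity reading
A2 = align_to_vertical(matvec(R2, up))
rel = matmul(matmul(A2, R2), transpose(matmul(A1, R1)))
print(is_pure_yaw(rel))
```

Because each aligned orientation `A_i R_i` fixes the vertical axis, their relative rotation is a pure yaw, which is what shrinks the rotational search to one angle per additional view.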

$\mathbf{C}^{3}$-GS: Learning Context-aware, Cross-dimension, Cross-scale Feature for Generalizable Gaussian Splatting

Aug 28, 2025

Abstract:Generalizable Gaussian Splatting aims to synthesize novel views of unseen scenes without per-scene optimization. In particular, recent advances use feed-forward networks to predict per-pixel Gaussian parameters, enabling high-quality synthesis from sparse input views. However, existing approaches fall short in encoding discriminative, multi-view consistent features for Gaussian prediction, and therefore struggle to reconstruct accurate geometry from sparse views. To address this, we propose $\mathbf{C}^{3}$-GS, a framework that enhances feature learning by incorporating context-aware, cross-dimension, and cross-scale constraints. Our architecture integrates three lightweight modules into a unified rendering pipeline, improving feature fusion and enabling photorealistic synthesis without requiring additional supervision. Extensive experiments on benchmark datasets validate that $\mathbf{C}^{3}$-GS achieves state-of-the-art rendering quality and generalization ability. Code is available at: https://github.com/YuhsiHu/C3-GS.

Leveraging Automatic CAD Annotations for Supervised Learning in 3D Scene Understanding

Apr 18, 2025

Abstract:High-level 3D scene understanding is essential in many applications. However, generating accurate 3D annotations is challenging, which hinders the development of deep learning models. We turn to recent advancements in the automatic retrieval of synthetic CAD models and show that data generated by such methods can be used as high-quality ground truth for training supervised deep learning models. More precisely, we employ a pipeline akin to the one previously used to automatically annotate objects in ScanNet scenes with their 9D poses and CAD models. This time, we apply it to the recent ScanNet++ v1 dataset, which previously lacked such annotations. Our findings demonstrate not only that it is possible to train deep learning models on these automatically obtained annotations, but also that the resulting models outperform those trained on manually annotated data. We validate this on two distinct tasks: point cloud completion and single-view CAD model retrieval and alignment. Our results underscore the potential of automatic 3D annotations to enhance model performance while significantly reducing annotation costs. To support future research in 3D scene understanding, we will release our annotations, which we call SCANnotate++, along with our trained models.

ICG-MVSNet: Learning Intra-view and Cross-view Relationships for Guidance in Multi-View Stereo

Mar 27, 2025

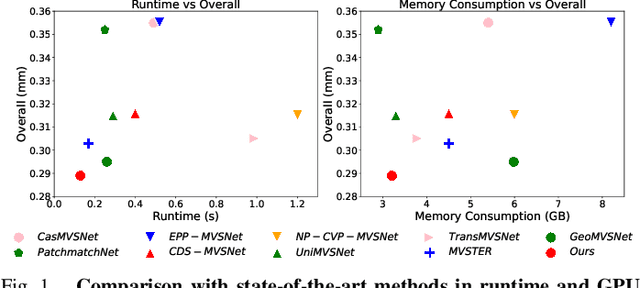

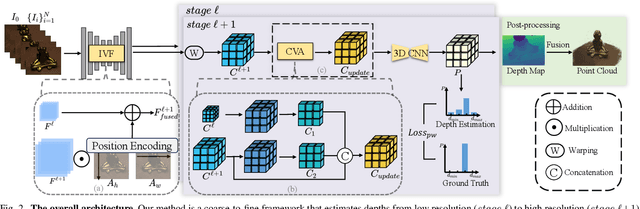

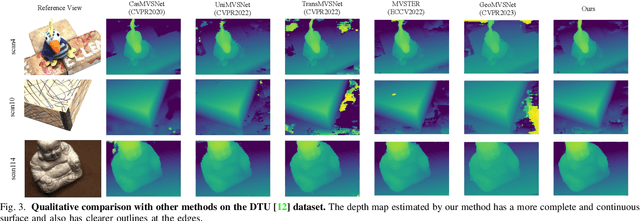

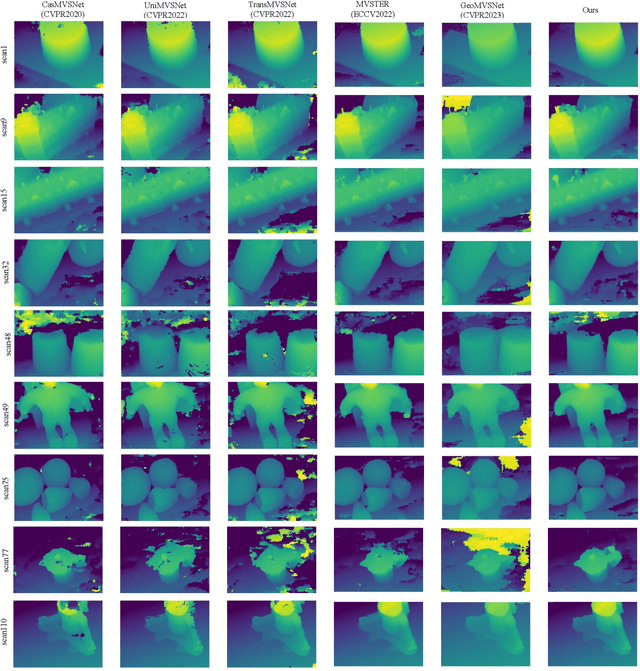

Abstract:Multi-view Stereo (MVS) aims to estimate depth and reconstruct 3D point clouds from a series of overlapping images. Recent learning-based MVS frameworks overlook the geometric information embedded in features and correlations, leading to weak cost matching. In this paper, we propose ICG-MVSNet, which explicitly integrates intra-view and cross-view relationships for depth estimation. Specifically, we develop an intra-view feature fusion module that leverages the feature coordinate correlations within a single image to enhance robust cost matching. Additionally, we introduce a lightweight cross-view aggregation module that efficiently utilizes the contextual information from volume correlations to guide regularization. Our method is evaluated on the DTU dataset and Tanks and Temples benchmark, consistently achieving competitive performance against state-of-the-art works, while requiring lower computational resources.

SAda-Net: A Self-Supervised Adaptive Stereo Estimation CNN For Remote Sensing Image Data

Oct 17, 2024

Abstract:Stereo estimation has advanced considerably in recent years with the introduction of deep learning. However, the traditional supervised approach to deep learning requires accurate and plentiful ground-truth data, which is expensive to create and unavailable in many situations. This is especially true for remote sensing applications, where an abundance of data is available without proper ground truth. To tackle this problem, we propose a self-supervised CNN with self-improving adaptive abilities. In the first iteration, the predicted disparity map is inaccurate and noisy. Applying a left-right consistency check yields a sparse but more accurate disparity map, which is used as an initial pseudo ground truth. This pseudo ground truth is then adapted and updated after every epoch during training. We use the sum of inconsistent points to track network convergence. The code for our method is publicly available at: https://github.com/thedodo/SAda-Net
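
The left-right consistency check is a standard technique: a left-image disparity is kept only if the right-image disparity at the matched pixel agrees with it. A minimal single-scanline sketch follows, assuming rectified images, integer-rounded correspondences, and a hypothetical tolerance `max_diff`. It also returns the number of inconsistent points, the quantity the abstract uses to track convergence. It is an illustration, not code from the SAda-Net repository.

```python
def left_right_check(disp_left, disp_right, max_diff=1.0):
    """Validity mask for one rectified scanline.

    disp_left[x]  : disparity of left pixel x (matches right pixel x - d).
    disp_right[x] : disparity of right pixel x (matches left pixel x + d).
    A left disparity is kept only if the right disparity at its match agrees.
    """
    width = len(disp_right)
    valid = []
    for x, d in enumerate(disp_left):
        xr = int(round(x - d))
        # Out-of-image matches are treated as inconsistent.
        valid.append(0 <= xr < width and abs(d - disp_right[xr]) <= max_diff)
    return valid


def sparse_pseudo_ground_truth(disp_left, disp_right, max_diff=1.0):
    # Keep consistent disparities, mark the rest as unknown (None),
    # and count the inconsistent points as a convergence signal.
    valid = left_right_check(disp_left, disp_right, max_diff)
    pseudo = [d if ok else None for d, ok in zip(disp_left, valid)]
    return pseudo, valid.count(False)


pseudo, n_bad = sparse_pseudo_ground_truth([2, 1, 1, 2, 3], [1, 1, 2, 0, 0], max_diff=0.5)
print(pseudo, n_bad)
```

In a full image the same test runs per row; as the network improves, fewer points fail the check and the sparse pseudo ground truth becomes denser.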

GMM-IKRS: Gaussian Mixture Models for Interpretable Keypoint Refinement and Scoring

Aug 30, 2024

Abstract:The extraction of keypoints in images is at the basis of many computer vision applications, from localization to 3D reconstruction. Keypoints come with a score that allows them to be ranked according to their quality. While learned keypoints often exhibit better properties than handcrafted ones, their scores are not easily interpretable, making it virtually impossible to compare the quality of individual keypoints across methods. We propose a framework that can refine, and at the same time characterize with an interpretable score, the keypoints extracted by any method. Our approach leverages a modified robust Gaussian Mixture Model fit designed to both reject non-robust keypoints and refine the remaining ones. Our score comprises two components: one relates to the probability of extracting the same keypoint in an image captured from another viewpoint, the other relates to the localization accuracy of the keypoint. These two interpretable components permit a comparison of individual keypoints extracted across different methods. Through extensive experiments, we demonstrate that, when applied to popular keypoint detectors, our framework consistently improves the repeatability of keypoints as well as their performance in homography and two/multiple-view pose recovery tasks.
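
To make the two score components concrete, the sketch below uses a deliberately simplified stand-in for the paper's modified robust GMM: a single isotropic Gaussian fitted to repeated detections of one keypoint, with outliers rejected by a median-based gate. The inlier fraction plays the role of a repeatability-like score and the per-axis spread a localization-like score. The function name, the gate value, and the assumption that detections are already mapped into a common reference frame are all hypothetical.

```python
import math
import statistics


def robust_keypoint_fit(points, gate=3.0):
    """Robust single-Gaussian fit to repeated detections of one keypoint.

    points: (x, y) detections of the same keypoint, assumed already mapped
    into a common reference image (e.g. via known homographies).
    Returns (refined_xy, (std_x, std_y), inlier_mask, inlier_fraction).
    """
    # Robust initialisation: coordinate-wise median as the centre.
    cx = statistics.median(p[0] for p in points)
    cy = statistics.median(p[1] for p in points)
    radii = [math.hypot(x - cx, y - cy) for x, y in points]
    # For an isotropic 2D Gaussian, radial distances are Rayleigh distributed
    # with median sigma * sqrt(2 ln 2), which gives a robust sigma estimate.
    sigma = max(statistics.median(radii) / math.sqrt(2.0 * math.log(2.0)), 1e-6)
    inliers = [r <= gate * sigma for r in radii]
    kept = [p for p, ok in zip(points, inliers) if ok]
    # Refine: mean and per-axis spread of the inliers only.
    mx = sum(p[0] for p in kept) / len(kept)
    my = sum(p[1] for p in kept) / len(kept)
    sx = math.sqrt(sum((p[0] - mx) ** 2 for p in kept) / len(kept))
    sy = math.sqrt(sum((p[1] - my) ** 2 for p in kept) / len(kept))
    return (mx, my), (sx, sy), inliers, len(kept) / len(points)


detections = [(10.0, 10.0), (10.5, 9.8), (9.7, 10.2), (10.2, 10.1), (9.9, 9.9), (50.0, 50.0)]
xy, spread, mask, frac = robust_keypoint_fit(detections)
print(xy, spread, mask, frac)
```

The median-based initialisation matters: a plain mean and covariance would be pulled toward the outlier and could fail to reject it.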

PyTorchGeoNodes: Enabling Differentiable Shape Programs for 3D Shape Reconstruction

Apr 16, 2024

Abstract:We propose PyTorchGeoNodes, a differentiable module for reconstructing 3D objects from images using interpretable shape programs. Compared to traditional CAD model retrieval methods, using shape programs for 3D reconstruction enables reasoning about the semantic properties of reconstructed objects, supports editing, and keeps the memory footprint low. However, the use of shape programs for 3D scene understanding has been largely neglected in past works. As our main contribution, we enable gradient-based optimization by introducing a module that translates shape programs designed in Blender, for example, into efficient PyTorch code. We also provide a method that relies on PyTorchGeoNodes and is inspired by Monte Carlo Tree Search (MCTS) to jointly optimize discrete and continuous parameters of shape programs and reconstruct 3D objects for input scenes. In our experiments, we apply our algorithm to reconstruct 3D objects in the ScanNet dataset and evaluate our results against CAD model retrieval-based reconstructions. Our experiments indicate that our reconstructions match the input scenes well while enabling semantic reasoning about reconstructed objects.

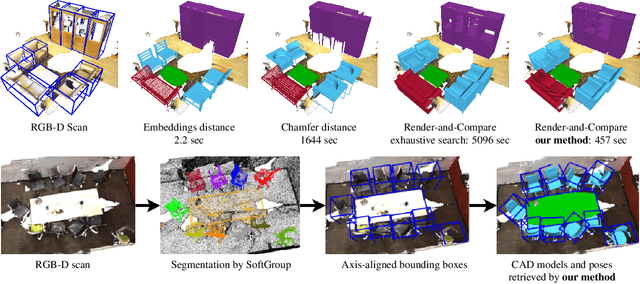

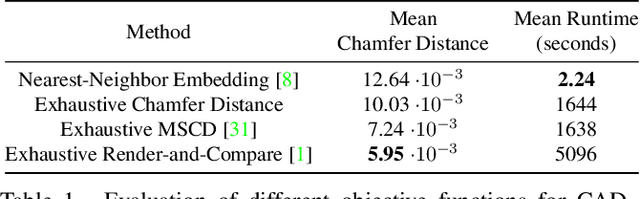

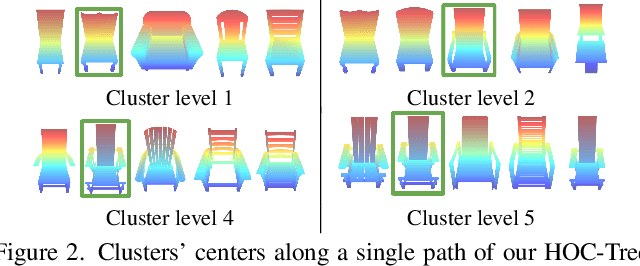

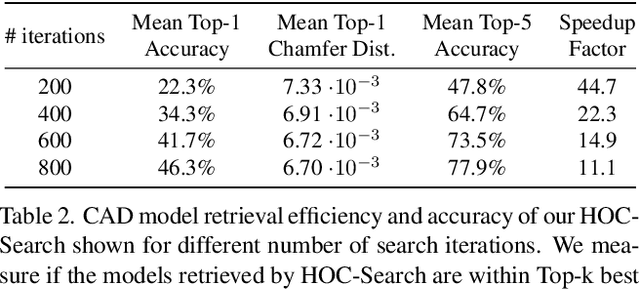

HOC-Search: Efficient CAD Model and Pose Retrieval from RGB-D Scans

Sep 12, 2023

Abstract:We present an automated and efficient approach for retrieving high-quality CAD models of objects and their poses in a scene captured by a moving RGB-D camera. We first investigate various objective functions for measuring the similarity between a candidate CAD object model and the available data; the best-performing one appears to be a "render-and-compare" method that compares depth and mask renderings. We thus introduce a fast-search method that approximates an exhaustive search based on this objective function for simultaneously retrieving the object category, a CAD model, and the pose of an object given an approximate 3D bounding box. This method involves a search tree that organizes the CAD models and object properties, including object category and pose, for fast retrieval, and an algorithm inspired by Monte Carlo Tree Search that efficiently searches this tree. We show that this method retrieves CAD models that fit the real objects very well, with a speed-up factor of 10x to 120x compared to exhaustive search.
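
A render-and-compare objective of the kind described scores a candidate (CAD model, pose) by comparing its rendered depth and mask with the observation. The sketch below is one simple, hypothetical instance: mask disagreement as 1 - IoU, plus mean absolute depth error on overlapping pixels, with assumed unit weights. It also shows the exhaustive-search baseline that HOC-Search approximates; the MCTS-inspired tree search itself is not reproduced here.

```python
def render_and_compare_cost(rendered_depth, rendered_mask, observed_depth, observed_mask,
                            mask_weight=1.0, depth_weight=1.0):
    """Lower is better. Inputs are flattened per-pixel lists of equal length."""
    inter = union = 0
    depth_err = 0.0
    for rd, rm, od, om in zip(rendered_depth, rendered_mask, observed_depth, observed_mask):
        if rm or om:
            union += 1
        if rm and om:
            inter += 1
            depth_err += abs(rd - od)  # depth residual only where both masks agree
    if inter == 0:
        return float("inf")  # no overlap: reject this candidate
    iou = inter / union
    return mask_weight * (1.0 - iou) + depth_weight * depth_err / inter


def exhaustive_search(candidates, observed_depth, observed_mask):
    # Brute-force baseline: score every rendered (model, pose) candidate
    # and return the index of the lowest-cost one.
    costs = [render_and_compare_cost(d, m, observed_depth, observed_mask)
             for d, m in candidates]
    return min(range(len(candidates)), key=costs.__getitem__)


obs_depth = [1.0, 1.0, 2.0, 0.0]
obs_mask = [True, True, True, False]
candidates = [
    ([1.5, 1.5, 2.5, 0.0], obs_mask),                  # right shape, 0.5 too deep
    ([1.0, 0.0, 0.0, 3.0], [True, False, False, True]),  # wrong silhouette
    (obs_depth, obs_mask),                              # exact fit
]
print(exhaustive_search(candidates, obs_depth, obs_mask))
```

Exhaustive search must render every candidate, which is why organizing candidates in a tree and searching it selectively yields the reported speed-ups.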

S-TREK: Sequential Translation and Rotation Equivariant Keypoints for local feature extraction

Aug 28, 2023

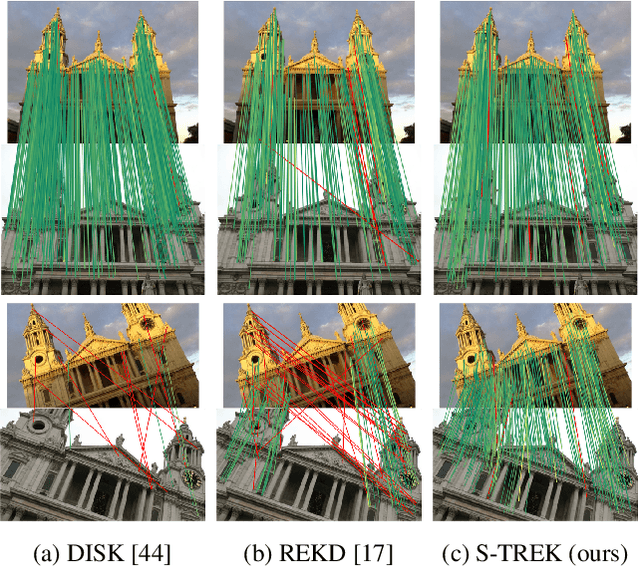

Abstract:In this work, we introduce S-TREK, a novel local feature extractor that combines a deep keypoint detector, which is both translation and rotation equivariant by design, with a lightweight deep descriptor extractor. We train the S-TREK keypoint detector within a framework inspired by reinforcement learning, where we leverage a sequential procedure to maximize a reward directly related to keypoint repeatability. Our descriptor network is trained following a "detect, then describe" approach, where the descriptor loss is evaluated only at the locations where keypoints have been selected by the already trained detector. Extensive experiments on multiple benchmarks confirm the effectiveness of our proposed method, with S-TREK often outperforming other state-of-the-art methods in terms of repeatability and quality of the recovered poses, especially when dealing with in-plane rotations.
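
In a "detect, then describe" setup, the descriptor loss only sees descriptors sampled at the detector's keypoints. The minimal sketch below shows that gathering step: it reads unit-normalized descriptors from a dense map at integer keypoint positions. The `desc_map[y][x]` layout and integer coordinates are assumptions; a practical implementation would more likely interpolate bilinearly at subpixel locations.

```python
import math


def sample_descriptors(desc_map, keypoints):
    """Gather L2-normalised descriptors at detected keypoints only.

    desc_map[y][x] is the C-dimensional descriptor at pixel (x, y);
    keypoints are integer (x, y) positions from an already-trained detector.
    """
    out = []
    for x, y in keypoints:
        d = desc_map[y][x]
        n = math.sqrt(sum(v * v for v in d)) or 1.0  # avoid division by zero
        out.append([v / n for v in d])
    return out


# A 2x2 map of 2-D descriptors; only the listed keypoints are described.
desc_map = [[[3.0, 4.0], [0.0, 2.0]],
            [[1.0, 0.0], [0.0, 0.0]]]
print(sample_descriptors(desc_map, [(1, 0), (0, 0), (1, 1)]))
```

Any descriptor loss (for example a triplet loss) is then computed on these sampled vectors, so pixels the detector never selects contribute no gradient.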
