Christian Sormann

Graz University of Technology

A Streamlined Attention-Based Network for Descriptor Extraction

Jan 19, 2026

Abstract:We introduce SANDesc, a Streamlined Attention-Based Network for Descriptor extraction that aims to improve on existing architectures for keypoint description. Our descriptor network learns to compute descriptors that improve matching without modifying the underlying keypoint detector. We employ a revised U-Net-like architecture enhanced with Convolutional Block Attention Modules and residual paths, enabling effective local representation while maintaining computational efficiency. We refer to the building blocks of our model as Residual U-Net Blocks with Attention. The model is trained using a modified triplet loss in combination with a curriculum learning-inspired hard negative mining strategy, which improves training stability. Extensive experiments on HPatches, MegaDepth-1500, and the Image Matching Challenge 2021 show that training SANDesc on top of existing keypoint detectors leads to improved results on multiple matching tasks compared to the original keypoint descriptors. At the same time, SANDesc has a model complexity of just 2.4 million parameters. As a further contribution, we introduce a new urban dataset featuring 4K images and pre-calibrated intrinsics, designed to evaluate feature extractors. On this benchmark, SANDesc achieves substantial performance gains over the existing descriptors while operating with limited computational resources.
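As a rough illustration of the training signal mentioned in the SANDesc abstract, the sketch below computes a standard triplet margin loss with in-batch hardest-negative mining. It is not the modified loss or the curriculum schedule from the paper, neither of which the abstract specifies. The function names, the Euclidean distance, and the `margin` value are all assumptions made for illustration.

```python
import math


def l2(a, b):
    """Euclidean distance between two descriptors given as lists of floats."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss_hardest_negative(anchors, positives, margin=1.0):
    """Standard triplet margin loss with in-batch hardest-negative mining.

    anchors[i] and positives[i] describe the same keypoint in two images.
    Every positives[j] with j != i is treated as a negative for anchors[i],
    and only the closest (hardest) one contributes to the loss.
    This is an illustrative assumption, not the paper's modified loss.
    """
    losses = []
    for i, anchor in enumerate(anchors):
        d_pos = l2(anchor, positives[i])
        d_neg = min(
            l2(anchor, positives[j]) for j in range(len(positives)) if j != i
        )
        losses.append(max(0.0, d_pos - d_neg + margin))
    return sum(losses) / len(losses)


# Toy batch: two well-separated descriptor pairs.
anchors = [[1.0, 0.0], [0.0, 1.0]]
positives = [[0.9, 0.1], [0.1, 0.9]]

loss_small_margin = triplet_loss_hardest_negative(anchors, positives, margin=1.0)
loss_large_margin = triplet_loss_hardest_negative(anchors, positives, margin=2.0)
```

With the smaller margin the pairs are already separated well enough that the loss vanishes. The larger margin still penalizes them. A curriculum-style strategy could, for example, start from easier negatives and move toward the hardest ones over training, but that schedule is not shown here.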

GMM-IKRS: Gaussian Mixture Models for Interpretable Keypoint Refinement and Scoring

Aug 30, 2024

Abstract:The extraction of keypoints in images is at the basis of many computer vision applications, from localization to 3D reconstruction. Keypoints come with a score permitting to rank them according to their quality. While learned keypoints often exhibit better properties than handcrafted ones, their scores are not easily interpretable, making it virtually impossible to compare the quality of individual keypoints across methods. We propose a framework that can refine, and at the same time characterize with an interpretable score, the keypoints extracted by any method. Our approach leverages a modified robust Gaussian Mixture Model fit designed to both reject non-robust keypoints and refine the remaining ones. Our score comprises two components: one relates to the probability of extracting the same keypoint in an image captured from another viewpoint, the other relates to the localization accuracy of the keypoint. These two interpretable components permit a comparison of individual keypoints extracted across different methods. Through extensive experiments we demonstrate that, when applied to popular keypoint detectors, our framework consistently improves the repeatability of keypoints as well as their performance in homography and two/multiple-view pose recovery tasks.

S-TREK: Sequential Translation and Rotation Equivariant Keypoints for local feature extraction

Aug 28, 2023

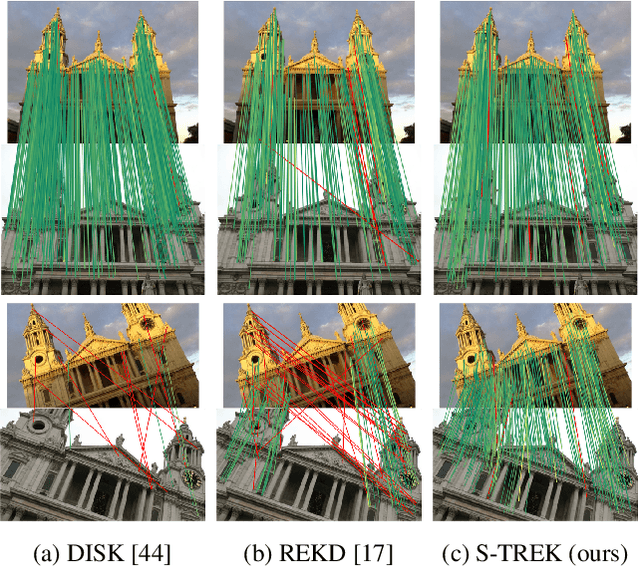

Abstract:In this work we introduce S-TREK, a novel local feature extractor that combines a deep keypoint detector, which is both translation and rotation equivariant by design, with a lightweight deep descriptor extractor. We train the S-TREK keypoint detector within a framework inspired by reinforcement learning, where we leverage a sequential procedure to maximize a reward directly related to keypoint repeatability. Our descriptor network is trained following a "detect, then describe" approach, where the descriptor loss is evaluated only at those locations where keypoints have been selected by the already trained detector. Extensive experiments on multiple benchmarks confirm the effectiveness of our proposed method, with S-TREK often outperforming other state-of-the-art methods in terms of repeatability and quality of the recovered poses, especially when dealing with in-plane rotations.

DELS-MVS: Deep Epipolar Line Search for Multi-View Stereo

Dec 13, 2022

Abstract:We propose a novel approach for deep learning-based Multi-View Stereo (MVS). For each pixel in the reference image, our method leverages a deep architecture to search for the corresponding point in the source image directly along the corresponding epipolar line. We denote our method DELS-MVS: Deep Epipolar Line Search Multi-View Stereo. Previous works in deep MVS select a range of interest within the depth space, discretize it, and sample the epipolar line according to the resulting depth values: this can result in an uneven scanning of the epipolar line, hence of the image space. Instead, our method works directly on the epipolar line: this guarantees an even scanning of the image space and avoids both the need to select a depth range of interest, which is often not known a priori and can vary dramatically from scene to scene, and the need for a suitable discretization of the depth space. In fact, our search is iterative, which avoids the building of a cost volume, costly both to store and to process. Finally, our method performs a robust geometry-aware fusion of the estimated depth maps, leveraging a confidence predicted alongside each depth. We test DELS-MVS on the ETH3D, Tanks and Temples and DTU benchmarks and achieve competitive results with respect to state-of-the-art approaches.
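The uneven scanning described in the DELS-MVS abstract can be seen in a small numeric sketch. It assumes a rectified stereo pair, where the offset along the epipolar line is `focal_px * baseline / depth`. This simplification, the parameter values, and all function names are assumptions for illustration and are not taken from the paper.

```python
def uniform_samples(lo, hi, n):
    """Return n evenly spaced values from lo to hi inclusive."""
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]


def epipolar_offsets(depths, focal_px=1000.0, baseline=0.1):
    """Pixel offset along the epipolar line for each depth.

    Assumes a rectified pair, so offset = focal_px * baseline / depth.
    focal_px and baseline are hypothetical values.
    """
    return [focal_px * baseline / z for z in depths]


def gaps(values):
    """Absolute spacing between consecutive samples."""
    return [abs(a - b) for a, b in zip(values, values[1:])]


# Uniform discretization of the depth range, as in cost-volume methods.
depths = uniform_samples(1.0, 5.0, 5)
offsets_from_depth = epipolar_offsets(depths)
depth_gaps = gaps(offsets_from_depth)

# Sampling the same segment uniformly in image space instead.
offsets_on_line = uniform_samples(min(offsets_from_depth), max(offsets_from_depth), 5)
line_gaps = gaps(offsets_on_line)
```

In this toy setup `depth_gaps` shrinks from 50 pixels to 5 pixels as depth grows, while `line_gaps` stays constant at 20 pixels. This is the contrast between depth-space and image-space sampling that the abstract describes.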

MD-Net: Multi-Detector for Local Feature Extraction

Aug 10, 2022

Abstract:Establishing a sparse set of keypoint correspondences between images is a fundamental task in many computer vision pipelines. Often, this translates into a computationally expensive nearest neighbor search, where every keypoint descriptor at one image must be compared with all the descriptors at the others. In order to lower the computational cost of the matching phase, we propose a deep feature extraction network capable of detecting a predefined number of complementary sets of keypoints at each image. Since only the descriptors within the same set need to be compared across the different images, the matching phase computational complexity decreases with the number of sets. We train our network to predict the keypoints and compute the corresponding descriptors jointly. In particular, in order to learn complementary sets of keypoints, we introduce a novel unsupervised loss which penalizes intersections among the different sets. Additionally, we propose a novel descriptor-based weighting scheme meant to penalize the detection of keypoints with non-discriminative descriptors. With extensive experiments we show that our feature extraction network, trained only on synthetically warped images and in a fully unsupervised manner, achieves competitive results on 3D reconstruction and re-localization tasks at a reduced matching complexity.
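The complexity claim in the MD-Net abstract can be checked with simple arithmetic. The sketch below counts descriptor comparisons for brute-force matching and for set-wise matching, under the assumption (not stated in the abstract) that keypoints are split evenly across the sets. The function names and keypoint counts are hypothetical.

```python
def brute_force_comparisons(n_a, n_b):
    """Every descriptor in image A is compared with every descriptor in image B."""
    return n_a * n_b


def per_set_comparisons(n_a, n_b, num_sets):
    """Comparisons when only descriptors in the same set are compared.

    Assumes keypoints are split evenly across sets and that the counts
    are divisible by num_sets (an illustrative simplification).
    """
    per_a = n_a // num_sets
    per_b = n_b // num_sets
    return num_sets * per_a * per_b


full = brute_force_comparisons(1000, 1000)
split = per_set_comparisons(1000, 1000, num_sets=4)
reduction = full / split
```

Under the even-split assumption the number of comparisons drops from N² to N²/K for K sets. In this example, four sets give a fourfold reduction.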

IB-MVS: An Iterative Algorithm for Deep Multi-View Stereo based on Binary Decisions

Nov 29, 2021

Abstract:We present a novel deep-learning-based method for Multi-View Stereo. Our method estimates high resolution and highly precise depth maps iteratively, by traversing the continuous space of feasible depth values at each pixel in a binary decision fashion. The decision process leverages a deep-network architecture: this computes a pixelwise binary mask that establishes whether each pixel actual depth is in front or behind its current iteration individual depth hypothesis. Moreover, in order to handle occluded regions, at each iteration the results from different source images are fused using pixelwise weights estimated by a second network. Thanks to the adopted binary decision strategy, which permits an efficient exploration of the depth space, our method can handle high resolution images without trading resolution and precision. This sets it apart from most alternative learning-based Multi-View Stereo methods, where the explicit discretization of the depth space requires the processing of large cost volumes. We compare our method with state-of-the-art Multi-View Stereo methods on the DTU, Tanks and Temples and the challenging ETH3D benchmarks and show competitive results.
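The binary decision strategy in the IB-MVS abstract resembles a per-pixel bisection over the depth range. The sketch below shows that idea for a single pixel. A hypothetical oracle `is_behind` stands in for the network's binary mask, and the interval-halving update is an assumption, since the abstract does not give the exact update rule.

```python
def binary_depth_search(is_behind, d_min, d_max, iterations):
    """Bisection over a continuous depth range for one pixel.

    is_behind(hypothesis) is a hypothetical stand-in for the network's
    binary decision: it returns True when the true depth lies behind
    (farther than) the current hypothesis.
    """
    lo, hi = d_min, d_max
    for _ in range(iterations):
        hypothesis = 0.5 * (lo + hi)
        if is_behind(hypothesis):
            lo = hypothesis  # true depth is farther: keep the far half
        else:
            hi = hypothesis  # true depth is closer: keep the near half
    return 0.5 * (lo + hi)


true_depth = 3.7
estimate = binary_depth_search(lambda h: true_depth > h, 0.5, 10.0, iterations=8)

# After k halvings the interval width is (d_max - d_min) / 2**k,
# so the midpoint is within half of that of the true depth.
error_bound = (10.0 - 0.5) / 2 ** 9
```

Each iteration halves the remaining depth interval, so precision improves exponentially with the number of iterations. No discretized cost volume over the full depth range is stored.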

BP-MVSNet: Belief-Propagation-Layers for Multi-View-Stereo

Oct 23, 2020

Abstract:In this work, we propose BP-MVSNet, a convolutional neural network (CNN)-based Multi-View-Stereo (MVS) method that uses a differentiable Conditional Random Field (CRF) layer for regularization. To this end, we propose to extend the BP layer and add what is necessary to successfully use it in the MVS setting. We therefore show how we can calculate a normalization based on the expected 3D error, which we can then use to normalize the label jumps in the CRF. This is required to make the BP layer invariant to different scales in the MVS setting. In order to also enable fractional label jumps, we propose a differentiable interpolation step, which we embed into the computation of the pairwise term. These extensions allow us to integrate the BP layer into a multi-scale MVS network, where we continuously improve a rough initial estimate until we get high quality depth maps as a result. We evaluate the proposed BP-MVSNet in an ablation study and conduct extensive experiments on the DTU, Tanks and Temples and ETH3D data sets. The experiments show that we can significantly outperform the baseline and achieve state-of-the-art results.

Belief Propagation Reloaded: Learning BP-Layers for Labeling Problems

Mar 13, 2020

Abstract:It has been proposed by many researchers that combining deep neural networks with graphical models can create more efficient and better regularized composite models. The main difficulties in implementing this in practice are associated with a discrepancy in suitable learning objectives as well as with the necessity of approximations for the inference. In this work we take one of the simplest inference methods, a truncated max-product Belief Propagation, and add what is necessary to make it a proper component of a deep learning model: We connect it to learning formulations with losses on marginals and compute the backprop operation. This BP-Layer can be used as the final or an intermediate block in convolutional neural networks (CNNs), allowing us to design a hierarchical model composing BP inference and CNNs at different scale levels. The model is applicable to a range of dense prediction problems, is well-trainable and provides parameter-efficient and robust solutions in stereo, optical flow and semantic segmentation.

DeepC-MVS: Deep Confidence Prediction for Multi-View Stereo Reconstruction

Dec 04, 2019

Abstract:Deep Neural Networks (DNNs) have the potential to improve the quality of image-based 3D reconstructions. A challenge which still remains is to utilize the potential of DNNs to improve 3D reconstructions from high-resolution image datasets as available by the ETH3D benchmark. In this paper, we propose a way to employ DNNs in the image domain to gain a significant quality improvement of geometric image based 3D reconstruction. This is achieved by utilizing confidence prediction networks which have been adapted to the Multi-View Stereo (MVS) case and are trained on automatically generated ground truth established by geometric error propagation. In addition to a semi-dense real-world ground truth dataset for training the DNN, we present a synthetic dataset to enlarge the training dataset. We demonstrate the utility of the confidence predictions for two essential steps within a 3D reconstruction pipeline: Firstly, to be used for outlier clustering and filtering and secondly to be used within a depth refinement step. The presented 3D reconstruction pipeline DeepC-MVS makes use of deep learning for an essential part in MVS from high-resolution images and the experimental evaluation on popular benchmarks demonstrates the achieved state-of-the-art quality in 3D reconstruction.
