Felipe A. Medeiros

Scalable Bayesian inference for the generalized linear mixed model

Mar 05, 2024

Abstract:The generalized linear mixed model (GLMM) is a popular statistical approach for handling correlated data, and is used extensively in application areas where big data is common, including biomedical data settings. The focus of this paper is scalable statistical inference for the GLMM, where we define statistical inference as: (i) estimation of population parameters, and (ii) evaluation of scientific hypotheses in the presence of uncertainty. Artificial intelligence (AI) learning algorithms excel at scalable statistical estimation, but rarely include uncertainty quantification. In contrast, Bayesian inference provides full statistical inference, since uncertainty quantification results automatically from the posterior distribution. Unfortunately, Bayesian inference algorithms, including Markov Chain Monte Carlo (MCMC), become computationally intractable in big data settings. In this paper, we introduce a statistical inference algorithm at the intersection of AI and Bayesian inference that combines the scalability of modern AI algorithms with the guaranteed uncertainty quantification that accompanies Bayesian inference. Our algorithm is an extension of stochastic gradient MCMC with novel contributions that address the treatment of correlated data (i.e., intractable marginal likelihood) and proper posterior variance estimation. Through theoretical and empirical results we establish our algorithm's statistical inference properties, and apply the method to a large electronic health records database.
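For readers unfamiliar with stochastic gradient MCMC, the following is a minimal sketch of its simplest variant, stochastic gradient Langevin dynamics (SGLD), on a toy problem: the posterior of a Gaussian mean with known unit variance. It is not the algorithm introduced in the paper. It has no random effects, no treatment of the marginal likelihood, and no variance correction. The function name, step size, prior variance, and simulated data are all assumptions made for illustration.

```python
import math
import random


def sgld_gaussian_mean(y, n_iter=5000, batch_size=100, step=1e-5,
                       prior_var=100.0, seed=0):
    """Toy SGLD sampler for the mean of N(theta, 1) data with a N(0, prior_var) prior.

    Hypothetical helper for illustration only. The default step size assumes
    roughly 10,000 observations; it should shrink as the sample size grows.
    """
    rng = random.Random(seed)
    n = len(y)
    theta = 0.0
    draws = []
    for _ in range(n_iter):
        # Minibatch drawn without replacement from the full data set.
        batch = rng.sample(y, batch_size)
        # Gradient of the log prior, d/dtheta log N(theta | 0, prior_var).
        grad_log_prior = -theta / prior_var
        # Minibatch gradient of the log likelihood, rescaled by n / batch_size
        # so that it is an unbiased estimate of the full-data gradient.
        grad_log_lik = (n / batch_size) * sum(v - theta for v in batch)
        # Langevin update: half-step along the gradient plus Gaussian noise
        # whose variance equals the step size.
        theta += 0.5 * step * (grad_log_prior + grad_log_lik)
        theta += rng.gauss(0.0, math.sqrt(step))
        draws.append(theta)
    return draws


if __name__ == "__main__":
    data_rng = random.Random(1)
    y = [data_rng.gauss(2.0, 1.0) for _ in range(10_000)]  # simulated data
    kept = sgld_gaussian_mean(y)[1000:]  # discard early draws as burn-in
    print("posterior mean estimate:", sum(kept) / len(kept))
```

With a constant step size, the minibatch gradient noise adds to the injected noise, so the spread of the draws generally overstates the true posterior variance. This is one reason posterior variance estimation needs separate attention in this family of methods.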

Assessing glaucoma in retinal fundus photographs using Deep Feature Consistent Variational Autoencoders

Oct 04, 2021

Abstract:One of the leading causes of blindness is glaucoma, which is challenging to detect since it remains asymptomatic until the symptoms are severe. Thus, diagnosis is usually possible only once the markers are easy to identify, i.e., after the damage has already occurred. Early identification of glaucoma is generally made based on functional, structural, and clinical assessments. However, due to the nature of the disease, researchers still debate which markers qualify as a consistent glaucoma metric. Deep learning methods have partially solved this dilemma by bypassing the marker identification stage and analyzing high-level information directly to classify the data. Although favorable, these methods make expert analysis difficult as they provide no insight into the model discrimination process. In this paper, we overcome this using deep generative networks, a class of deep learning models that learn complicated, high-dimensional probability distributions. We train a Deep Feature Consistent Variational Autoencoder (DFC-VAE) to reconstruct optic disc images. We show that a small-sized latent space obtained from the DFC-VAE can learn the high-dimensional glaucoma data distribution and provide discriminatory evidence between normal and glaucoma eyes. Latent representations of size as low as 128 from our model achieved an area under the receiver operating characteristic curve of 0.885 when used to train a Support Vector Classifier.
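The area under the receiver operating characteristic curve (AUC) reported above can be read as the probability that a randomly chosen glaucoma eye receives a higher classifier score than a randomly chosen normal eye. A minimal sketch of that pairwise definition follows. The function name and the example labels and scores are hypothetical and are not taken from the study.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels: iterable of 0/1 class labels (1 = positive class).
    scores: iterable of classifier scores, higher meaning "more positive".
    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Illustrative values only: two normal eyes (0) and two glaucoma eyes (1).
    labels = [0, 0, 1, 1]
    scores = [0.1, 0.4, 0.35, 0.8]
    print(roc_auc(labels, scores))  # 3 of 4 pairs are ordered correctly: 0.75
```

The double loop is quadratic in the number of examples, which is fine for a sketch. A rank-based computation gives the same value more efficiently on large test sets.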

RetiNerveNet: Using Recursive Deep Learning to Estimate Pointwise 24-2 Visual Field Data based on Retinal Structure

Oct 15, 2020

Abstract:Glaucoma is the leading cause of irreversible blindness in the world, affecting over 70 million people. The cumbersome Standard Automated Perimetry (SAP) test is most frequently used to detect visual loss due to glaucoma. Due to the SAP test's innate difficulty and its high test-retest variability, we propose RetiNerveNet, a deep convolutional recursive neural network for obtaining estimates of the SAP visual field. RetiNerveNet uses information from the more objective Spectral-Domain Optical Coherence Tomography (SDOCT). RetiNerveNet attempts to trace back the arcuate convergence of the retinal nerve fibers, starting from the Retinal Nerve Fiber Layer (RNFL) thickness around the optic disc, to estimate individual age-corrected 24-2 SAP values. Recursive passes through the proposed network sequentially yield estimates of the visual locations progressively farther from the optic disc. The proposed network is able to obtain more accurate estimates of the individual visual field values, compared to a number of baselines, implying its utility as a proxy for SAP. We further augment RetiNerveNet to additionally predict the SAP Mean Deviation values and also create an ensemble of RetiNerveNets that further improves the performance by progressively up-weighting underrepresented parts of the training data.

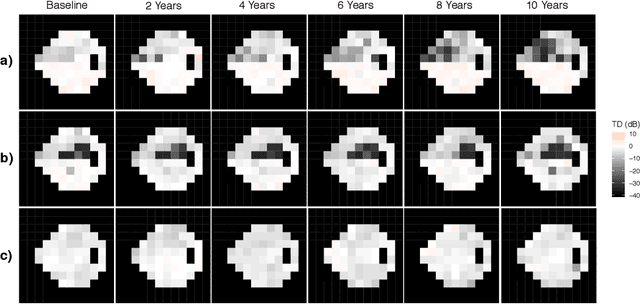

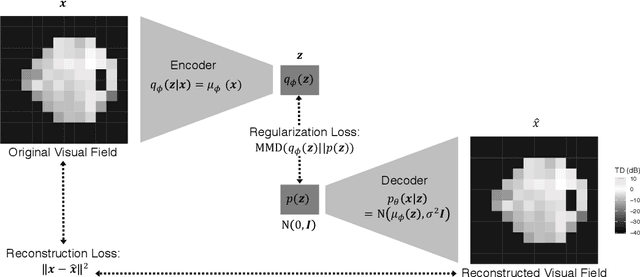

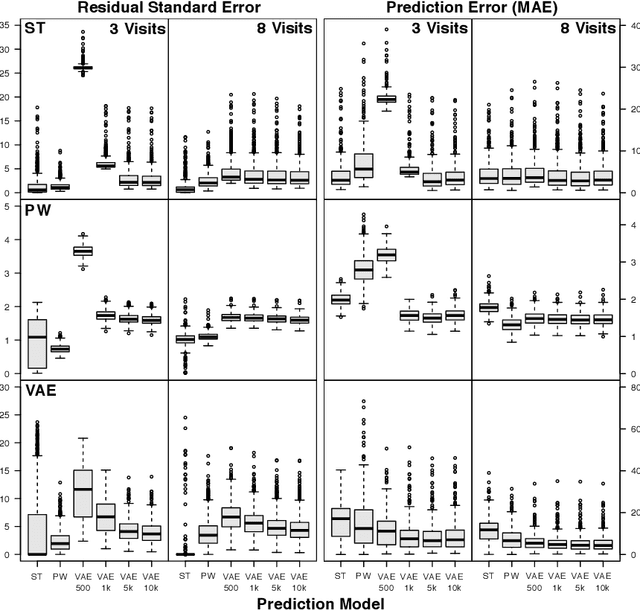

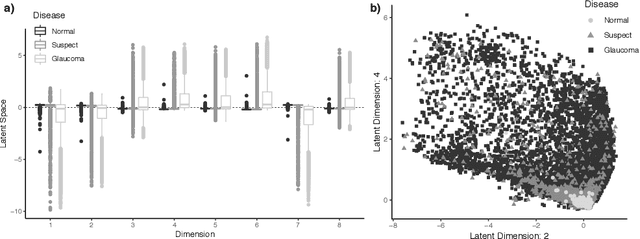

Scalable Modeling of Spatiotemporal Data using the Variational Autoencoder: an Application in Glaucoma

Aug 24, 2019

Abstract:As big spatial data becomes increasingly prevalent, classical spatiotemporal (ST) methods often do not scale well. While methods have been developed to account for high-dimensional spatial objects, the setting where there are exceedingly large samples of spatial observations has received less attention. The variational autoencoder (VAE), an unsupervised generative model based on deep learning and approximate Bayesian inference, fills this void using a latent variable specification that is inferred jointly across the large number of samples. In this manuscript, we compare the performance of the VAE with a more classical ST method when analyzing longitudinal visual fields from a large cohort of patients in a prospective glaucoma study. Through simulation and a case study, we demonstrate that the VAE is a scalable method for analyzing ST data when the goal is to obtain accurate predictions. R code to implement the VAE can be found on GitHub: https://github.com/berchuck/vaeST.

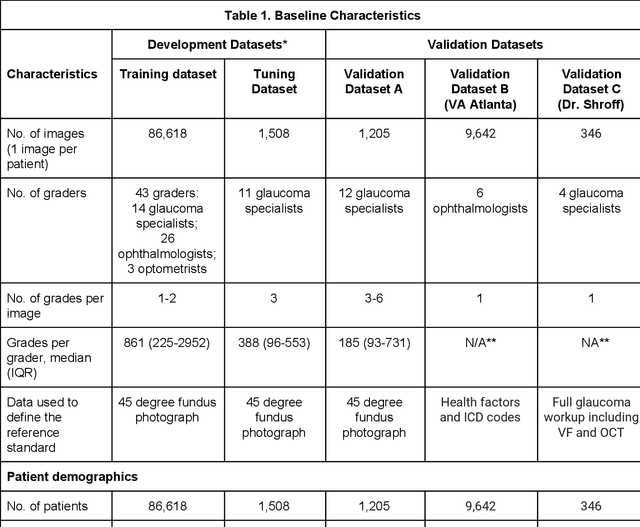

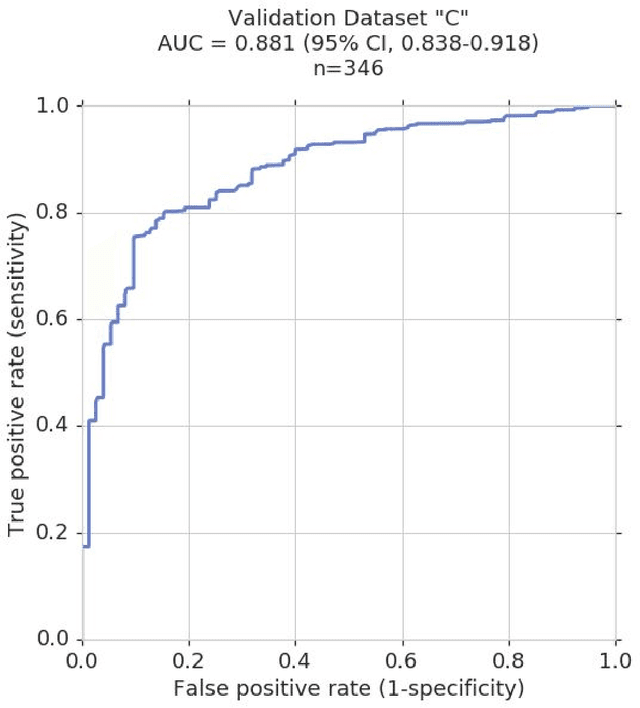

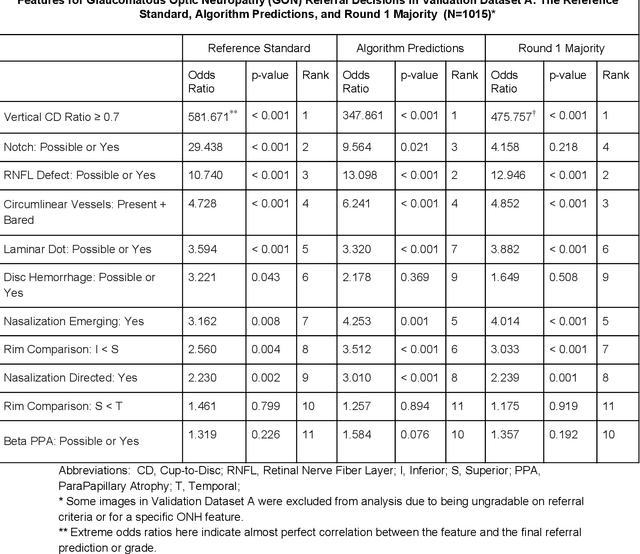

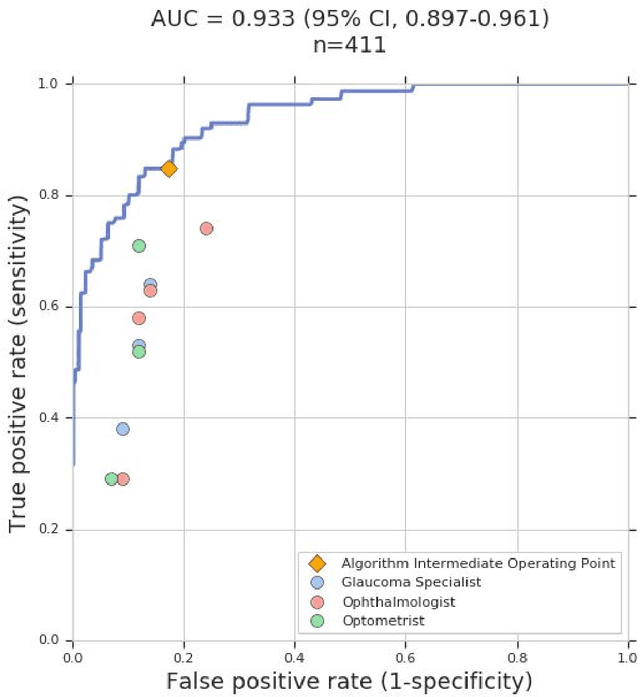

Deep Learning to Assess Glaucoma Risk and Associated Features in Fundus Images

Dec 21, 2018

Abstract:Glaucoma is the leading cause of preventable, irreversible blindness worldwide. The disease can remain asymptomatic until severe, and an estimated 50%-90% of people with glaucoma remain undiagnosed. Thus, glaucoma screening is recommended for early detection and treatment. A cost-effective tool to detect glaucoma could expand healthcare access to a much larger patient population, but such a tool is currently unavailable. We trained a deep learning (DL) algorithm using a retrospective dataset of 58,033 images, assessed for gradability, glaucomatous optic nerve head (ONH) features, and referable glaucoma risk. The resultant algorithm was validated using 2 separate datasets. For referable glaucoma risk, the algorithm had an AUC of 0.940 (95% CI, 0.922-0.955) in validation dataset "A" (1,205 images, 1 image/patient; 19% referable, with images adjudicated by panels of fellowship-trained glaucoma specialists) and 0.858 (95% CI, 0.836-0.878) in validation dataset "B" (17,593 images from 9,643 patients; 9.2% referable, with images drawn from the Atlanta Veterans Affairs Eye Clinic diabetic teleretinal screening program and clinical referral decisions used as the reference standard). Additionally, we found that the presence of vertical cup-to-disc ratio >= 0.7, neuroretinal rim notching, retinal nerve fiber layer defect, and bared circumlinear vessels contributed most to referable glaucoma risk assessment by both glaucoma specialists and the algorithm. Algorithm AUCs ranged from 0.608 to 0.977 for glaucomatous ONH features. The DL algorithm was significantly more sensitive than 6 of 10 graders, including 2 of 3 glaucoma specialists, with comparable or higher specificity relative to all graders. A DL algorithm trained on fundus images alone can detect referable glaucoma risk with higher sensitivity and comparable specificity to eye care providers.

From Machine to Machine: An OCT-trained Deep Learning Algorithm for Objective Quantification of Glaucomatous Damage in Fundus Photographs

Oct 20, 2018

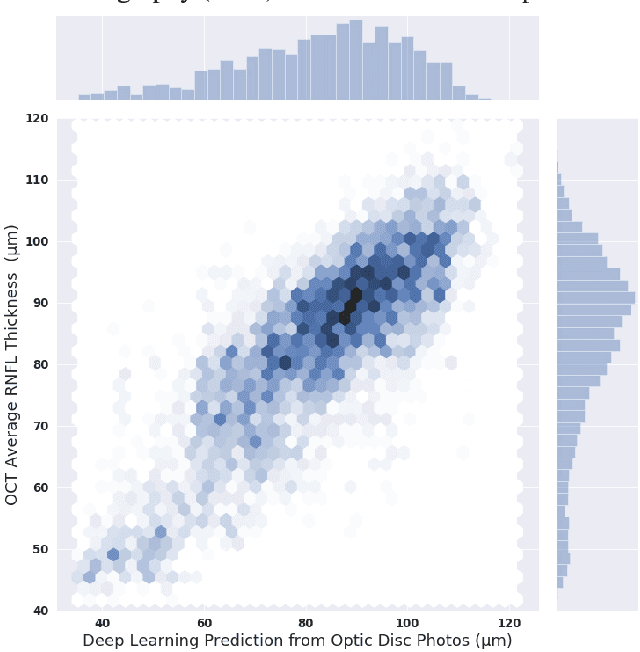

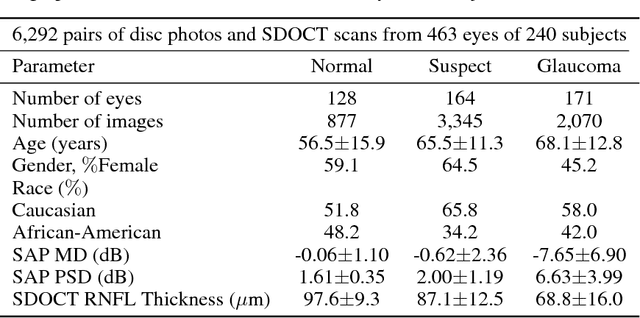

Abstract:Previous approaches using deep learning algorithms to classify glaucomatous damage on fundus photographs have been limited by the requirement for human labeling of a reference training set. We propose a new approach using spectral-domain optical coherence tomography (SDOCT) data to train a deep learning algorithm to quantify glaucomatous structural damage on optic disc photographs. The dataset included 32,820 pairs of optic disc photos and SDOCT retinal nerve fiber layer (RNFL) scans from 2,312 eyes of 1,198 subjects. A deep learning convolutional neural network was trained to assess optic disc photographs and predict SDOCT average RNFL thickness. The performance of the algorithm was evaluated in an independent test sample. The mean prediction of average RNFL thickness from all 6,292 optic disc photos in the test set was 83.3 ± 14.5 μm, whereas the mean average RNFL thickness from all corresponding SDOCT scans was 82.5 ± 16.8 μm (P = 0.164). There was a very strong correlation between predicted and observed RNFL thickness values (r = 0.832; P < 0.001), with mean absolute error of the predictions of 7.39 μm. The areas under the receiver operating characteristic curves for discriminating glaucoma from healthy eyes with the deep learning predictions and actual SDOCT measurements were 0.944 (95% CI: 0.912-0.966) and 0.940 (95% CI: 0.902-0.966), respectively (P = 0.724). In conclusion, we introduced a novel deep learning approach to assess optic disc photographs and provide quantitative information about the amount of neural damage. This approach could potentially be used to diagnose and stage glaucomatous damage from optic disc photographs.
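The two agreement statistics quoted above, mean absolute error and the Pearson correlation r between predicted and observed RNFL thickness, can be computed as in the sketch below. The function names and the thickness values are hypothetical placeholders, not data from the study.

```python
import math


def mean_absolute_error(observed, predicted):
    """Average of |observed - predicted| over paired measurements."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(o - p) for o, p in zip(observed, predicted)) / len(observed)


def pearson_r(x, y):
    """Pearson correlation coefficient between two paired sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("inputs must have equal length of at least 2")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sums of cross-products and squared deviations about the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Made-up average RNFL thickness values in micrometers.
    observed = [80.0, 90.0, 100.0, 70.0]
    predicted = [82.0, 88.0, 101.0, 75.0]
    print("MAE (um):", mean_absolute_error(observed, predicted))
    print("Pearson r:", pearson_r(observed, predicted))
```

The two statistics answer different questions. Correlation measures how well the predictions track the ordering and spread of the observed values, while mean absolute error measures how far off they are in the original units.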
