Eunjeong Jeong

Computation-aware Energy-harvesting Federated Learning: Cyclic Scheduling with Selective Participation

Nov 14, 2025

Abstract:Federated Learning (FL) is a powerful paradigm for distributed learning, but its increasing complexity leads to significant energy consumption from client-side computations for training models. In particular, the challenge is critical in energy-harvesting FL (EHFL) systems where participation availability of each device oscillates due to limited energy. To address this, we propose FedBacys, a battery-aware EHFL framework using cyclic client participation based on users' battery levels. By clustering clients and scheduling them sequentially, FedBacys minimizes redundant computations, reduces system-wide energy usage, and improves learning stability. We also introduce FedBacys-Odd, a more energy-efficient variant that allows clients to participate selectively, further reducing energy costs without compromising performance. We provide a convergence analysis for our framework and demonstrate its superior energy efficiency and robustness compared to existing algorithms through numerical experiments.

Battery-aware Cyclic Scheduling in Energy-harvesting Federated Learning

Apr 16, 2025

Abstract:Federated Learning (FL) has emerged as a promising framework for distributed learning, but its growing complexity has led to significant energy consumption, particularly from computations on the client side. This challenge is especially critical in energy-harvesting FL (EHFL) systems, where device availability fluctuates due to limited and time-varying energy resources. We propose FedBacys, a battery-aware FL framework that introduces cyclic client participation based on users' battery levels to cope with these issues. FedBacys enables clients to save energy and strategically perform local training just before their designated transmission time by clustering clients and scheduling their involvement sequentially. This design minimizes redundant computation, reduces system-wide energy usage, and improves learning stability. Our experiments demonstrate that FedBacys outperforms existing approaches in terms of energy efficiency and performance consistency, exhibiting robustness even under non-i.i.d. training data distributions and with very infrequent battery charging. This work presents the first comprehensive evaluation of cyclic client participation in EHFL, incorporating both communication and computation costs into a unified, resource-aware scheduling strategy.
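The abstract describes clustering clients by battery level and letting the clusters participate one after another in a cycle. The following is only a minimal sketch of that general idea, not the FedBacys algorithm itself: the function names `cluster_by_battery` and `cyclic_schedule`, the rule of sorting clients by battery level and cutting the list into equal-sized groups, and the one-cluster-per-round cycle are all assumptions made for illustration.

```python
from typing import Dict, List


def cluster_by_battery(battery: Dict[str, float], num_clusters: int) -> List[List[str]]:
    """Group clients into contiguous clusters after sorting by battery level.

    Assumption (not from the paper): fullest batteries go first, ties are
    broken by client name, and clusters have (almost) equal size.
    """
    if not battery or num_clusters <= 0:
        return []
    ordered = sorted(battery, key=lambda c: (-battery[c], c))
    size = -(-len(ordered) // num_clusters)  # ceiling division
    return [ordered[i:i + size] for i in range(0, len(ordered), size)]


def cyclic_schedule(clusters: List[List[str]], num_rounds: int) -> List[List[str]]:
    """Return the cluster that participates in each round, cycling in order."""
    if not clusters:
        return []
    return [clusters[t % len(clusters)] for t in range(num_rounds)]


# Hypothetical battery levels in [0, 1] for four clients.
levels = {"a": 0.9, "b": 0.2, "c": 0.6, "d": 0.4}
clusters = cluster_by_battery(levels, num_clusters=2)
schedule = cyclic_schedule(clusters, num_rounds=3)
```

In this sketch a client outside the active cluster stays idle for the round, which is one simple way to picture how sequential scheduling could avoid redundant local training; the actual energy model and training rule in the paper may differ.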

DRACO: Decentralized Asynchronous Federated Learning over Continuous Row-Stochastic Network Matrices

Jun 19, 2024

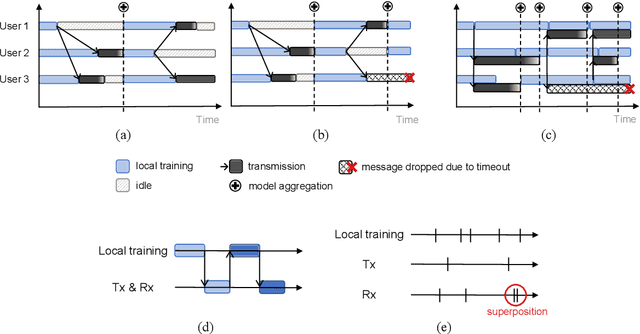

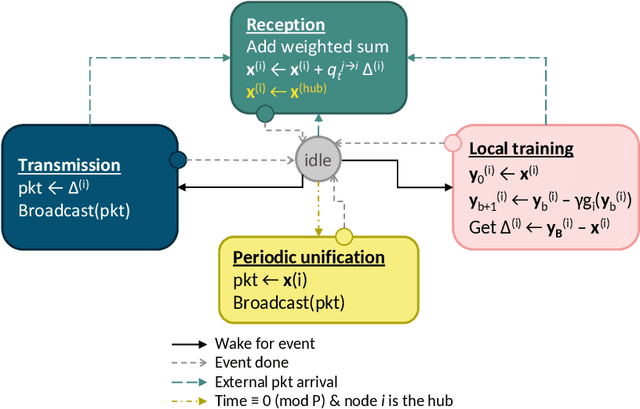

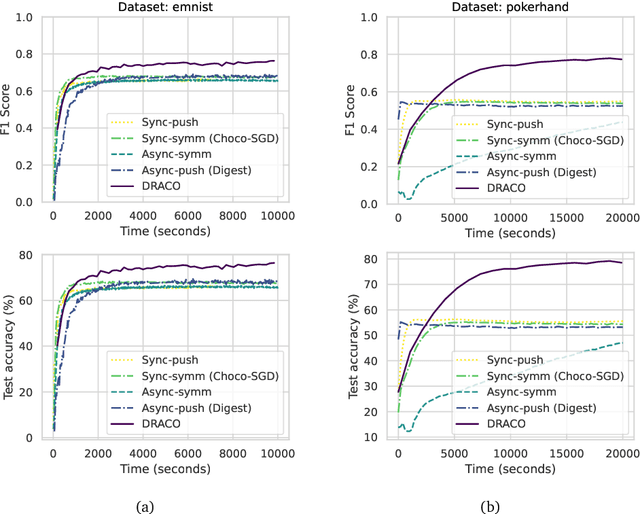

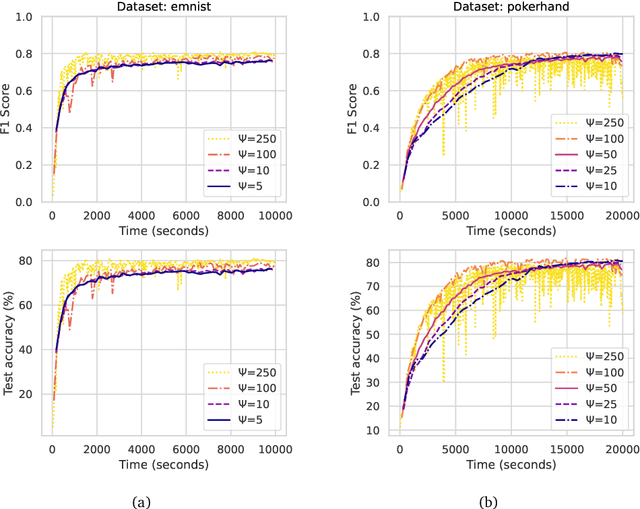

Abstract:Recent developments and emerging use cases, such as smart Internet of Things (IoT) and Edge AI, have sparked considerable interest in the training of neural networks over fully decentralized (serverless) networks. One of the major challenges of decentralized learning is to ensure stable convergence without resorting to strong assumptions applied for each agent regarding data distributions or updating policies. To address these issues, we propose DRACO, a novel method for decentralized asynchronous Stochastic Gradient Descent (SGD) over row-stochastic gossip wireless networks by leveraging continuous communication. Our approach enables edge devices within decentralized networks to perform local training and model exchanging along a continuous timeline, thereby eliminating the necessity for synchronized timing. The algorithm also features a specific technique of decoupling communication and computation schedules, which empowers complete autonomy for all users and manageable instructions for stragglers. Through a comprehensive convergence analysis, we highlight the advantages of asynchronous and autonomous participation in decentralized optimization. Our numerical experiments corroborate the efficacy of the proposed technique.
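For readers unfamiliar with the term, a row-stochastic matrix has non-negative entries and rows that each sum to one, so multiplying by it replaces every node's model with a weighted average of the models it receives. The sketch below illustrates only this standard definition with a single synchronous averaging step; it is not DRACO's continuous-time asynchronous procedure, and the helper names `is_row_stochastic` and `gossip_step` and the example matrix are assumptions made for illustration.

```python
from typing import List

Matrix = List[List[float]]


def is_row_stochastic(W: Matrix, tol: float = 1e-9) -> bool:
    """True if all entries are non-negative and every row sums to one."""
    return all(
        all(w >= 0.0 for w in row) and abs(sum(row) - 1.0) <= tol
        for row in W
    )


def gossip_step(W: Matrix, models: Matrix) -> Matrix:
    """One averaging step: node i's new model is sum_j W[i][j] * models[j]."""
    num_params = len(models[0])
    return [
        [sum(W[i][j] * models[j][k] for j in range(len(models)))
         for k in range(num_params)]
        for i in range(len(W))
    ]


# Hypothetical 3-node mixing matrix: rows sum to one, columns do not.
W = [
    [0.5, 0.5, 0.0],
    [0.0, 1.0, 0.0],
    [0.25, 0.25, 0.5],
]
models = [[0.0], [2.0], [4.0]]  # one scalar parameter per node
mixed = gossip_step(W, models)
```

Because only the rows need to sum to one, each node can choose its own averaging weights without coordinating with its neighbours, which is the property that distinguishes this setting from doubly stochastic mixing.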

Personalized Decentralized Federated Learning with Knowledge Distillation

Feb 23, 2023

Abstract:Personalization in federated learning (FL) functions as a coordinator for clients with high variance in data or behavior. Ensuring the convergence of these clients' models relies on how closely users collaborate with those with similar patterns or preferences. However, users in a decentralized network have limited knowledge of other users' models, which makes similarity generally challenging to quantify. To cope with this issue, we propose a personalized and fully decentralized FL algorithm that leverages knowledge distillation techniques so that each device can discern statistical distances between local models. Each client device can enhance its performance without sharing local data by estimating the similarity between two intermediate outputs obtained from feeding local samples, as in knowledge distillation. Our empirical studies demonstrate that the proposed algorithm improves the test accuracy of clients in fewer iterations under highly non-independent and identically distributed (non-i.i.d.) data distributions and is beneficial to agents with small datasets, even without the need for a central server.

Asynchronous Decentralized Learning over Unreliable Wireless Networks

Feb 02, 2022

Abstract:Decentralized learning enables edge users to collaboratively train models by exchanging information via device-to-device communication, yet prior works have been limited to wireless networks with fixed topologies and reliable workers. In this work, we propose an asynchronous decentralized stochastic gradient descent (DSGD) algorithm, which is robust to the inherent computation and communication failures occurring at the wireless network edge. We theoretically analyze its performance and establish a non-asymptotic convergence guarantee. Experimental results corroborate our analysis, demonstrating the benefits of asynchronicity and outdated gradient information reuse in decentralized learning over unreliable wireless networks.

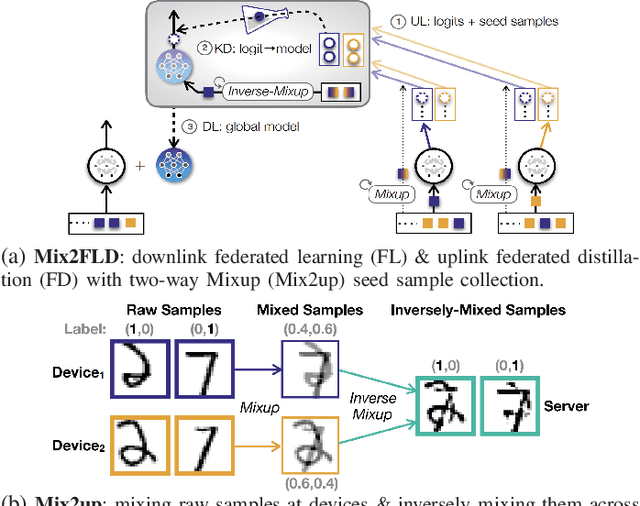

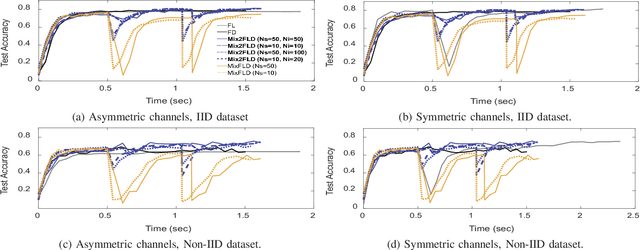

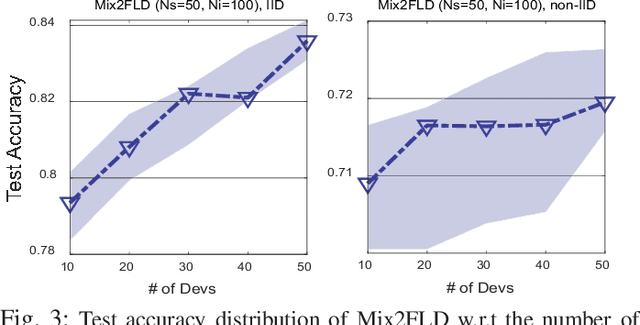

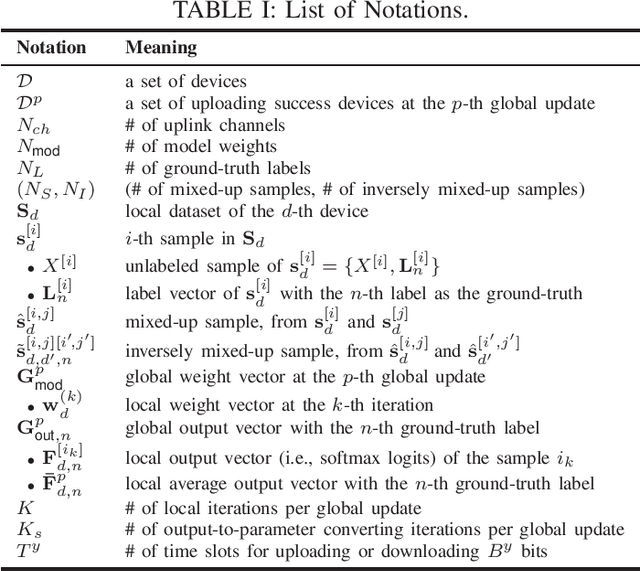

Mix2FLD: Downlink Federated Learning After Uplink Federated Distillation With Two-Way Mixup

Jun 17, 2020

Abstract:This letter proposes a novel communication-efficient and privacy-preserving distributed machine learning framework, coined Mix2FLD. To address uplink-downlink capacity asymmetry, local model outputs are uploaded to a server in the uplink as in federated distillation (FD), whereas global model parameters are downloaded in the downlink as in federated learning (FL). This requires a model output-to-parameter conversion at the server, after collecting additional data samples from devices. To preserve privacy while not compromising accuracy, linearly mixed-up local samples are uploaded, and inversely mixed up across different devices at the server. Numerical evaluations show that Mix2FLD achieves up to 16.7% higher test accuracy while reducing convergence time by up to 18.8% under asymmetric uplink-downlink channels compared to FL.
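The abstract states that linearly mixed-up samples are uploaded and then inversely mixed up across devices at the server. The sketch below works through one way such a pair of operations can fit together, under assumptions that are not taken from the abstract: two classes, two devices that mix with the symmetric ratios `lam` and `1 - lam`, and an inverse coefficient `lam / (2 * lam - 1)` obtained by solving for the combination whose soft label becomes one-hot. The function names are hypothetical.

```python
from typing import List

Vector = List[float]


def mixup(x_a: Vector, x_b: Vector, lam: float) -> Vector:
    """Linear mixup: lam * x_a + (1 - lam) * x_b, element-wise."""
    return [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]


def inverse_mixup(m_1: Vector, m_2: Vector, lam: float) -> Vector:
    """Combine two mixed vectors whose mixing ratios are lam and 1 - lam.

    Assumption: lam_hat solves lam_hat * lam + (1 - lam_hat) * (1 - lam) = 1,
    which gives lam_hat = lam / (2 * lam - 1); lam = 0.5 has no solution.
    """
    if abs(2.0 * lam - 1.0) < 1e-12:
        raise ValueError("lam = 0.5 cannot be inverted")
    lam_hat = lam / (2.0 * lam - 1.0)
    return [lam_hat * p + (1.0 - lam_hat) * q for p, q in zip(m_1, m_2)]


lam = 0.2
class_0, class_1 = [1.0, 0.0], [0.0, 1.0]  # one-hot labels

# Device 1 mixes a class-0 sample with a class-1 sample at ratio lam;
# device 2 mixes in the opposite order, so its soft label is mirrored.
label_dev1 = mixup(class_0, class_1, lam)
label_dev2 = mixup(class_1, class_0, lam)

# At the server, the inverse combination yields a hard label again.
recovered_label = inverse_mixup(label_dev1, label_dev2, lam)
```

Applying the same coefficients to the mixed samples themselves produces a sample that carries a hard label but is built from two devices' mixtures rather than being any single device's raw sample, which is consistent with the privacy motivation stated in the abstract.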

Distilling On-Device Intelligence at the Network Edge

Aug 16, 2019

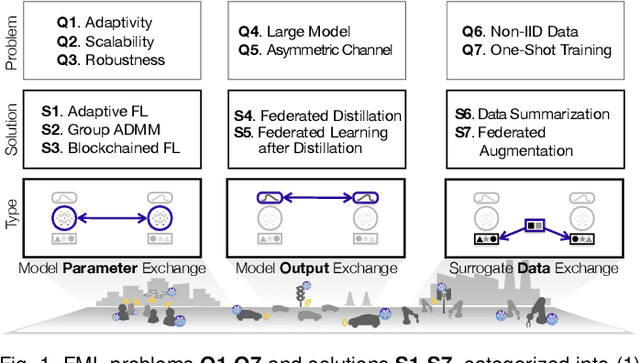

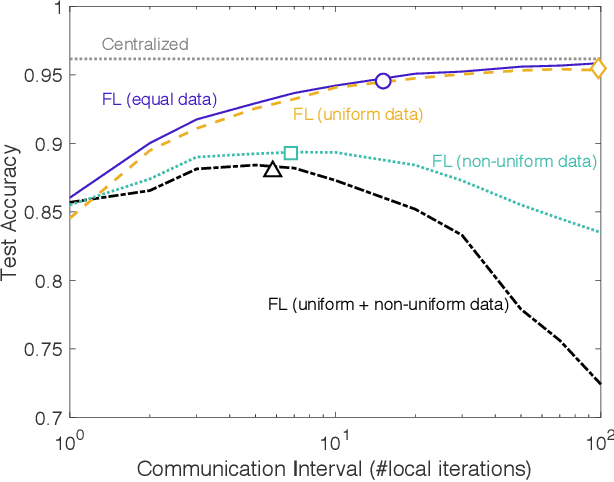

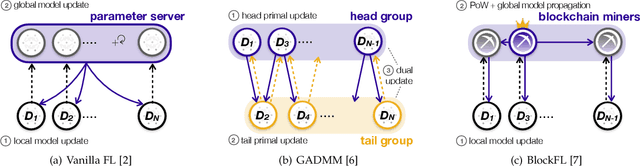

Abstract:Devices at the edge of wireless networks are the last mile data sources for machine learning (ML). As opposed to traditional ready-made public datasets, these user-generated private datasets reflect the freshest local environments in real time. They are thus indispensable for enabling mission-critical intelligent systems, ranging from fog radio access networks (RANs) to driverless cars and e-Health wearables. This article focuses on how to distill high-quality on-device ML models using fog computing, from such user-generated private data dispersed across wirelessly connected devices. To this end, we introduce communication-efficient and privacy-preserving distributed ML frameworks, termed fog ML (FML), wherein on-device ML models are trained by exchanging model parameters, model outputs, and surrogate data. We then present advanced FML frameworks addressing wireless RAN characteristics, limited on-device resources, and imbalanced data distributions. Our study suggests that the full potential of FML can be reached by co-designing communication and distributed ML operations while accounting for heterogeneous hardware specifications, data characteristics, and user requirements.

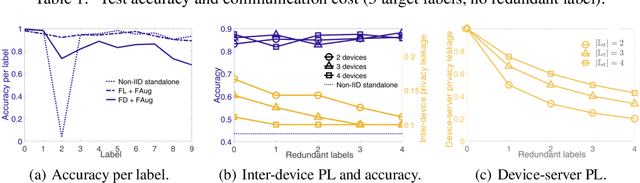

Multi-hop Federated Private Data Augmentation with Sample Compression

Jul 15, 2019

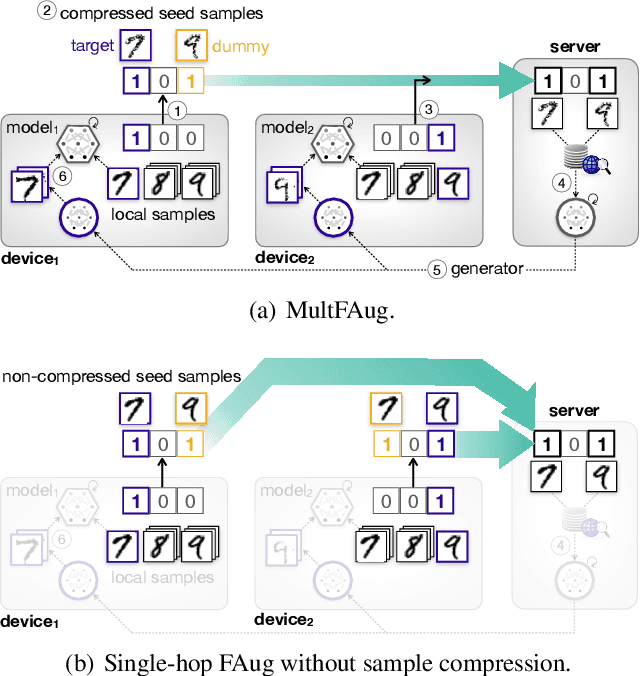

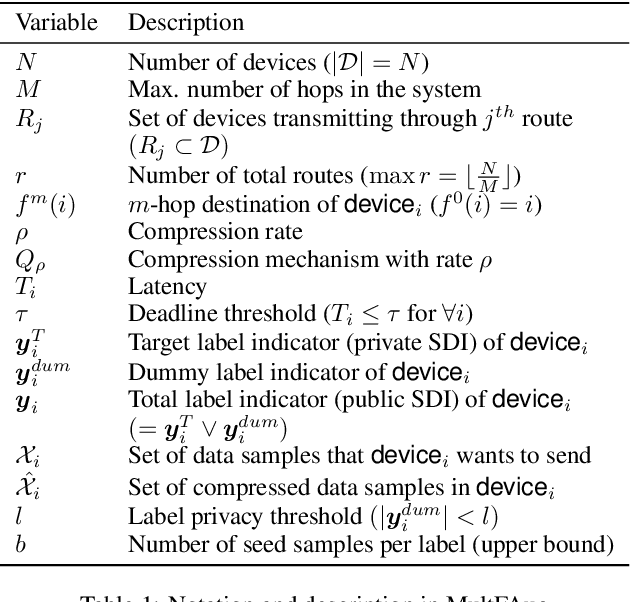

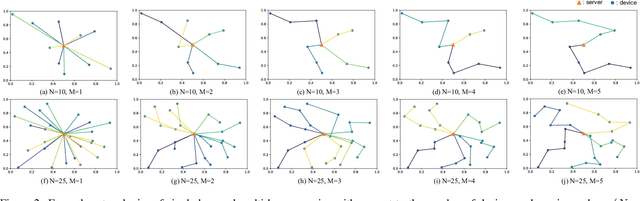

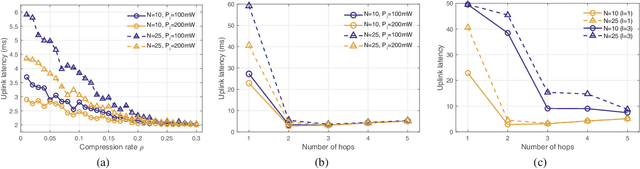

Abstract:On-device machine learning (ML) provides access to a tremendous amount of user data while keeping each user's local data private instead of storing it in a central entity. However, guaranteeing privacy inevitably forces each device to compromise on data quality or learning performance, especially when it has a non-IID training dataset. In this paper, we propose a data augmentation framework using a generative model: multi-hop federated augmentation with sample compression (MultFAug). A multi-hop protocol speeds up the end-to-end over-the-air transmission of seed samples by enhancing the transport capacity. The relaying devices also strengthen privacy preservation, since the origin of each seed sample is hidden among those participants. For further privatization at the individual sample level, the devices sparsify their data samples prior to transmission, which reduces the sample size and hence the communication payload. This preprocessing also strengthens the privacy of each sample, as it corresponds to input perturbation for preserving sample privacy. The numerical evaluations show that the proposed framework significantly improves privacy guarantee, transmission delay, and local training performance when the number of hops and the compression rate are adjusted.

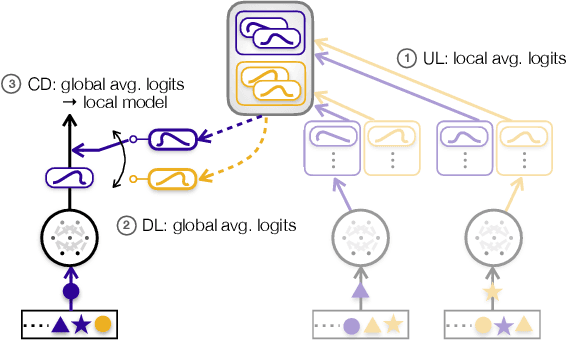

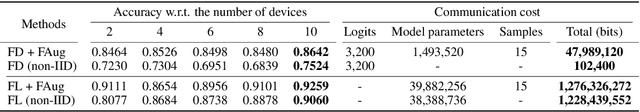

Communication-Efficient On-Device Machine Learning: Federated Distillation and Augmentation under Non-IID Private Data

Nov 28, 2018

Abstract:On-device machine learning (ML) enables the training process to exploit a massive amount of user-generated private data samples. To enjoy this benefit, inter-device communication overhead should be minimized. To this end, we propose federated distillation (FD), a distributed model training algorithm whose communication payload size is much smaller than that of a benchmark scheme, federated learning (FL), particularly when the model size is large. Moreover, user-generated data samples are likely to become non-IID across devices, which commonly degrades the performance compared to the case with an IID dataset. To cope with this, we propose federated augmentation (FAug), where the devices collectively train a generative model, and each device thereby augments its local data towards yielding an IID dataset. Empirical studies demonstrate that FD with FAug yields around 26x less communication overhead while achieving 95-98% test accuracy compared to FL.
