El-Ghazali Talbi

MOMAland: A Set of Benchmarks for Multi-Objective Multi-Agent Reinforcement Learning

Jul 23, 2024

Abstract:Many challenging tasks such as managing traffic systems, electricity grids, or supply chains involve complex decision-making processes that must balance multiple conflicting objectives and coordinate the actions of various independent decision-makers (DMs). One perspective for formalising and addressing such tasks is multi-objective multi-agent reinforcement learning (MOMARL). MOMARL broadens reinforcement learning (RL) to problems with multiple agents each needing to consider multiple objectives in their learning process. In reinforcement learning research, benchmarks are crucial in facilitating progress, evaluation, and reproducibility. The significance of benchmarks is underscored by the existence of numerous benchmark frameworks developed for various RL paradigms, including single-agent RL (e.g., Gymnasium), multi-agent RL (e.g., PettingZoo), and single-agent multi-objective RL (e.g., MO-Gymnasium). To support the advancement of the MOMARL field, we introduce MOMAland, the first collection of standardised environments for multi-objective multi-agent reinforcement learning. MOMAland addresses the need for comprehensive benchmarking in this emerging field, offering over 10 diverse environments that vary in the number of agents, state representations, reward structures, and utility considerations. To provide strong baselines for future research, MOMAland also includes algorithms capable of learning policies in such settings.

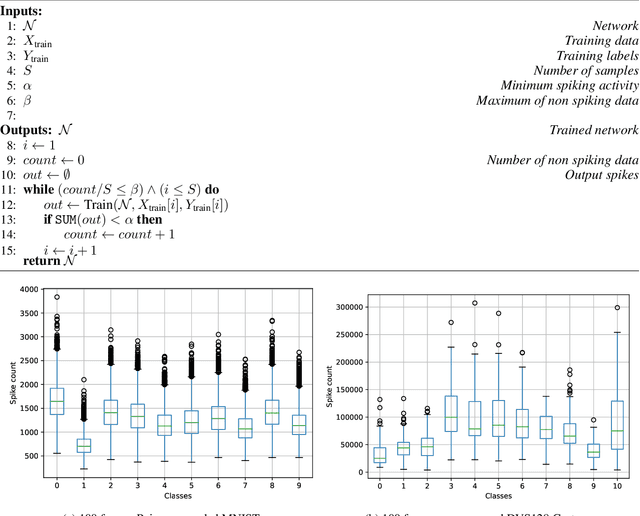

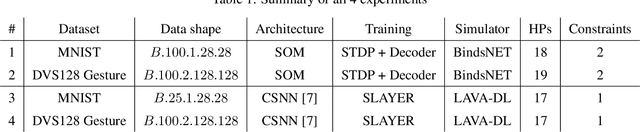

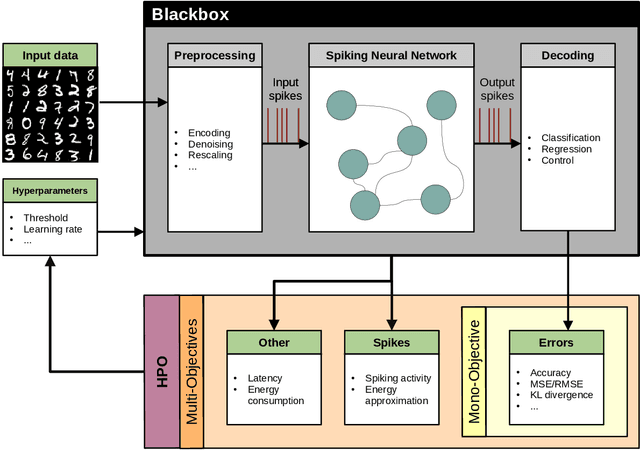

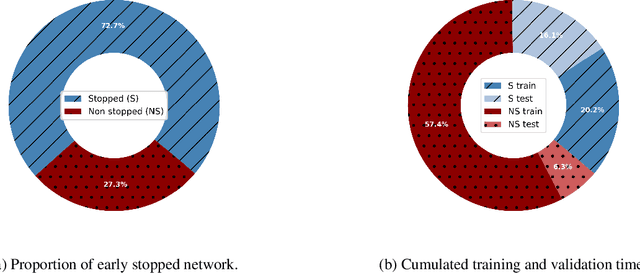

Parallel Hyperparameter Optimization Of Spiking Neural Network

Mar 01, 2024

Abstract:Spiking Neural Networks (SNNs) are based on a more biologically inspired approach than usual artificial neural networks. Such models are characterized by complex dynamics between neurons and spikes. These are very sensitive to the hyperparameters, making their optimization challenging. To tackle hyperparameter optimization of SNNs, we initially extended the signal loss issue of SNNs to what we call silent networks. These networks fail to emit enough spikes at their outputs due to mistuned hyperparameters or architecture. Generally, search spaces are heavily restrained, sometimes even discretized, to prevent the sampling of such networks. By defining an early stopping criterion detecting silent networks and by designing specific constraints, we were able to instantiate larger and more flexible search spaces. We applied a constrained Bayesian optimization technique, which was asynchronously parallelized, as the evaluation time of an SNN is highly stochastic. Large-scale experiments were carried out on a multi-GPU Petascale architecture. By leveraging silent networks, results show an acceleration of the search, while maintaining good performance of both the optimization algorithm and the best solution obtained. We were able to apply our methodology to two popular training algorithms, known as spike timing dependent plasticity and surrogate gradient. Early detection allowed us to prevent worthless and costly computation, directing the search toward promising hyperparameter combinations. Our methodology could be applied to multi-objective problems, where the spiking activity is often minimized to reduce the energy consumption. In this scenario, it becomes essential to find the delicate frontier between low-spiking and silent networks. Finally, our approach may have implications for neural architecture search, particularly in defining suitable spiking architectures.

SONATA: Self-adaptive Evolutionary Framework for Hardware-aware Neural Architecture Search

Feb 20, 2024

Abstract:Recent advancements in Artificial Intelligence (AI), driven by Neural Networks (NN), demand innovative neural architecture designs, particularly within the constrained environments of Internet of Things (IoT) systems, to balance performance and efficiency. HW-aware Neural Architecture Search (HW-aware NAS) emerges as an attractive strategy to automate the design of NN using multi-objective optimization approaches, such as evolutionary algorithms. However, the intricate relationship between NN design parameters and HW-aware NAS optimization objectives remains an underexplored research area, overlooking opportunities to effectively leverage this knowledge to guide the search process accordingly. Furthermore, the large amount of evaluation data produced during the search holds untapped potential for refining the optimization strategy and improving the approximation of the Pareto front. Addressing these issues, we propose SONATA, a self-adaptive evolutionary algorithm for HW-aware NAS. Our method leverages adaptive evolutionary operators guided by the learned importance of NN design parameters. Specifically, through tree-based surrogate models and a Reinforcement Learning agent, we aspire to gather knowledge on 'How' and 'When' to evolve NN architectures. Comprehensive evaluations across various NAS search spaces and hardware devices on the ImageNet-1k dataset have shown the merit of SONATA with up to 0.25% improvement in accuracy and up to 2.42x gains in latency and energy. SONATA has also seen up to ~93.6% Pareto dominance over the native NSGA-II, further underscoring the importance of self-adaptive evolution operators in HW-aware NAS.
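
The abstract above reports results in terms of Pareto dominance without defining how the percentage is computed. As a minimal sketch of the underlying notion only, the Python below checks dominance between objective vectors and computes one plausible "share of dominated points" measure. The function names, the minimization convention, and the `dominance_ratio` definition are all assumptions made for illustration, not the paper's metric.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a Pareto-dominates b (assumption: every objective is minimized)."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(points: List[Point]) -> List[Point]:
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


def dominance_ratio(front_a: List[Point], front_b: List[Point]) -> float:
    """Hypothetical measure: fraction of front_b dominated by some point of front_a."""
    if not front_b:
        return 0.0
    dominated = sum(1 for b in front_b if any(dominates(a, b) for a in front_a))
    return dominated / len(front_b)


if __name__ == "__main__":
    # Toy (error, latency) pairs, both to be minimized.
    candidates = [(1.0, 3.0), (2.0, 2.0), (3.0, 3.0)]
    print(pareto_front(candidates))
    print(dominance_ratio([(1.0, 1.0)], [(2.0, 2.0), (0.0, 5.0)]))
```

Objectives that should be maximized, such as accuracy, can be negated before calling these helpers.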

Multi-Objective Reinforcement Learning based on Decomposition: A taxonomy and framework

Nov 21, 2023

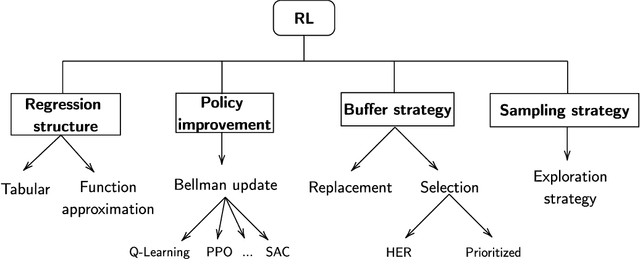

Abstract:Multi-objective reinforcement learning (MORL) extends traditional RL by seeking policies making different compromises among conflicting objectives. The recent surge of interest in MORL has led to diverse studies and solving methods, often drawing from existing knowledge in multi-objective optimization based on decomposition (MOO/D). Yet, a clear categorization based on both RL and MOO/D is lacking in the existing literature. Consequently, MORL researchers face difficulties when trying to classify contributions within a broader context due to the absence of a standardized taxonomy. To tackle such an issue, this paper introduces Multi-Objective Reinforcement Learning based on Decomposition (MORL/D), a novel methodology bridging RL and MOO literature. A comprehensive taxonomy for MORL/D is presented, providing a structured foundation for categorizing existing and potential MORL works. The introduced taxonomy is then used to scrutinize MORL research, enhancing clarity and conciseness through well-defined categorization. Moreover, a flexible framework derived from the taxonomy is introduced. This framework accommodates diverse instantiations using tools from both RL and MOO/D. Implementation across various configurations demonstrates its versatility, assessed against benchmark problems. Results indicate MORL/D instantiations achieve comparable performance with significantly greater versatility than current state-of-the-art approaches. By presenting the taxonomy and framework, this paper offers a comprehensive perspective and a unified vocabulary for MORL. This not only facilitates the identification of algorithmic contributions but also lays the groundwork for novel research avenues in MORL, contributing to the continued advancement of this field.
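
To make the idea of decomposition concrete, the sketch below splits a two-objective problem into several single-objective subproblems using evenly spread weight vectors and a weighted-sum scalarization. This is a generic illustration of decomposition in multi-objective optimization, not the MORL/D framework itself; the helper names and the toy return vectors are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def weight_vectors(n: int) -> List[Tuple[float, float]]:
    """Evenly spread weights for two objectives; each vector defines one subproblem."""
    if n < 2:
        raise ValueError("need at least two weight vectors")
    return [(i / (n - 1), 1.0 - i / (n - 1)) for i in range(n)]


def weighted_sum(values: Sequence[float], weights: Sequence[float]) -> float:
    """Scalarize a vector of objective values (assumption: larger is better)."""
    return sum(w * v for w, v in zip(weights, values))


def best_per_subproblem(
    returns: Dict[str, Tuple[float, float]], n_weights: int
) -> List[str]:
    """For each weight vector, pick the candidate with the highest scalarized return."""
    return [
        max(returns, key=lambda name: weighted_sum(returns[name], w))
        for w in weight_vectors(n_weights)
    ]


if __name__ == "__main__":
    # Hypothetical vector returns of three candidate policies on two objectives.
    toy_returns = {"fast": (10.0, 0.0), "balanced": (6.0, 6.0), "safe": (0.0, 10.0)}
    print(best_per_subproblem(toy_returns, 3))
```

A weighted sum cannot reach solutions in non-convex regions of a Pareto front, which is one reason other scalarization functions are also used in decomposition-based methods.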

Hyperparameter Optimization for Multi-Objective Reinforcement Learning

Oct 25, 2023

Abstract:Reinforcement learning (RL) has emerged as a powerful approach for tackling complex problems. The recent introduction of multi-objective reinforcement learning (MORL) has further expanded the scope of RL by enabling agents to make trade-offs among multiple objectives. This advancement not only has broadened the range of problems that can be tackled but also created numerous opportunities for exploration and advancement. Yet, the effectiveness of RL agents heavily relies on appropriately setting their hyperparameters. In practice, this task often proves to be challenging, leading to unsuccessful deployments of these techniques in various instances. Hence, prior research has explored hyperparameter optimization in RL to address this concern. This paper presents an initial investigation into the challenge of hyperparameter optimization specifically for MORL. We formalize the problem, highlight its distinctive challenges, and propose a systematic methodology to address it. The proposed methodology is applied to a well-known environment using a state-of-the-art MORL algorithm, and preliminary results are reported. Our findings indicate that the proposed methodology can effectively provide hyperparameter configurations that significantly enhance the performance of MORL agents. Furthermore, this study identifies various future research opportunities to further advance the field of hyperparameter optimization for MORL.

A Virtual-Force Based Swarm Algorithm for Balanced Circular Bin Packing Problems

Jun 06, 2023

Abstract:Balanced circular bin packing problems consist in positioning a given number of weighted circles in order to minimize the radius of a circular container while satisfying equilibrium constraints. These problems are NP-hard, highly constrained and dimensional. This paper describes a swarm algorithm based on a virtual-force system in order to solve balanced circular bin packing problems. In the proposed approach, a system of forces is applied to each component, which makes it possible to take the constraints into account and to minimize the objective function using the fundamental principle of dynamics. The proposed algorithm is tested and validated on benchmarks of various balanced circular bin packing problems with up to 300 circles. The reported results make it possible to assess the effectiveness of the proposed approach compared to existing results from the literature.

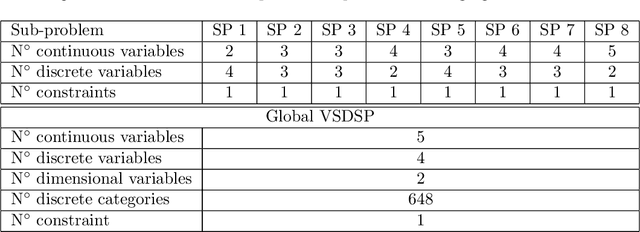

Hidden-Variables Genetic Algorithm for Variable-Size Design Space Optimal Layout Problems with Application to Aerospace Vehicles

Dec 21, 2022

Abstract:The optimal layout of a complex system such as aerospace vehicles consists in placing a given number of components in a container in order to minimize one or several objectives under some geometrical or functional constraints. This paper presents an extended formulation of this problem as a variable-size design space (VSDS) problem to take into account a large number of architectural choices and component allocations during the design process. As a representative example of such systems, considering the layout of a satellite module, the VSDS aspect reflects the fact that the optimizer has to choose between several subdivisions of the components. For instance, one large tank of fuel might be placed as well as two smaller tanks or three even smaller tanks for the same amount of fuel. In order to tackle this NP-hard problem, a genetic algorithm enhanced by an adapted hidden-variables mechanism is proposed. The latter is applied to a toy case and to an aerospace application case representative of real-world complexity to illustrate the performance of the proposed algorithms. The results obtained using the proposed mechanism are reported and analyzed.

Recovery-to-Efficiency: A New Robustness Concept for Multi-objective Optimization under Uncertainty

Nov 20, 2020

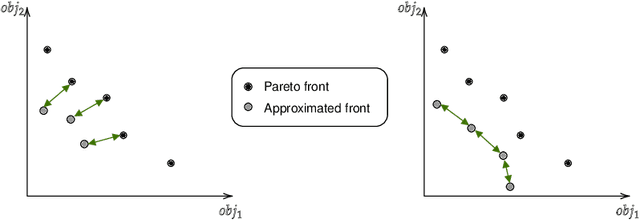

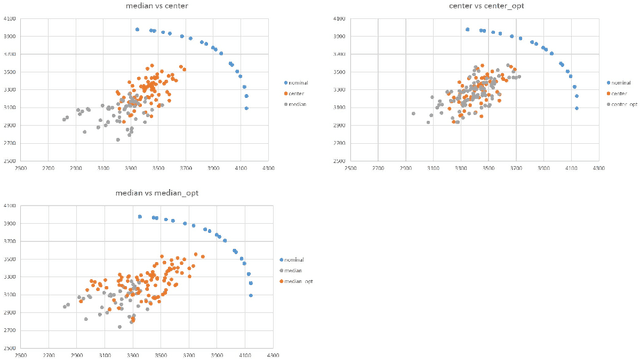

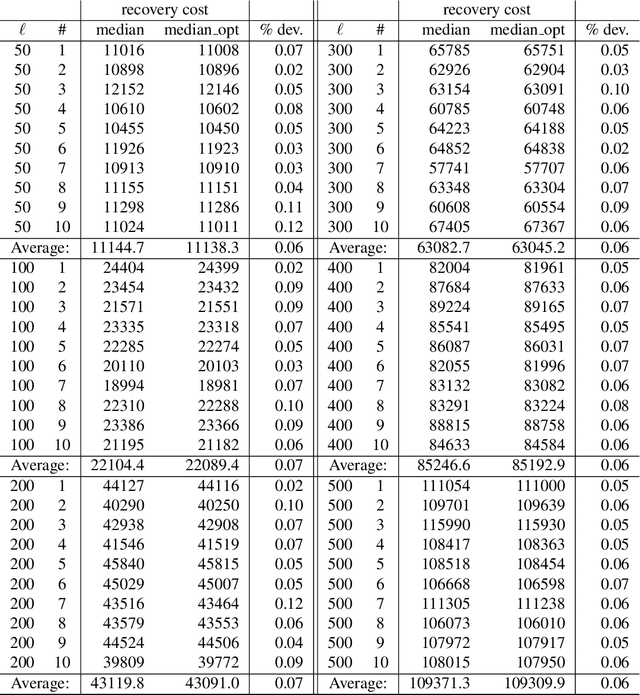

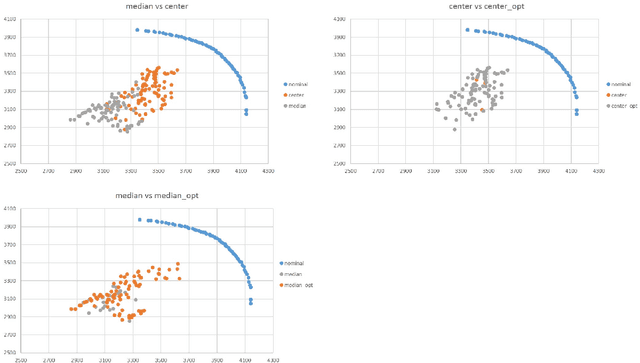

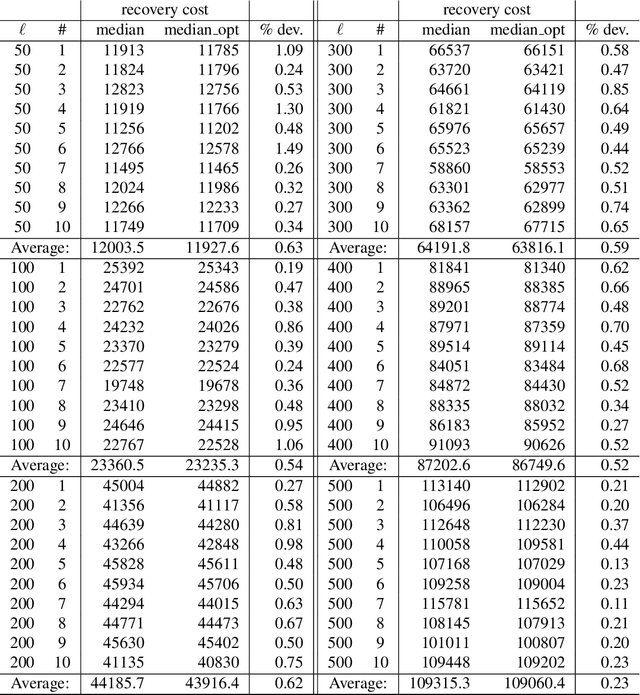

Abstract:This paper presents a new robustness concept for uncertain multi-objective optimization problems. More precisely, in the paper the so-called recovery-to-efficiency robustness concept is proposed and investigated. Several approaches for generating recovery-to-efficiency robust sets in the context of multi-objective optimization are proposed as well. An extensive experimental analysis is performed to disclose differences among robust sets obtained using different concepts as well as to deduce some interesting observations. For testing purposes, instances from the bi-objective knapsack problem are considered.

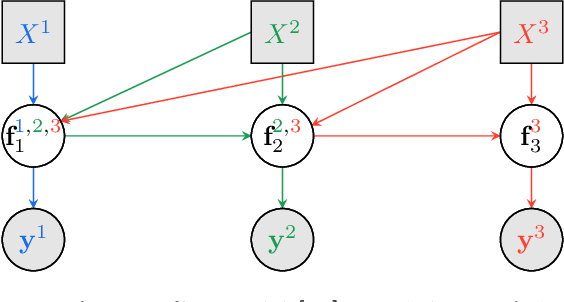

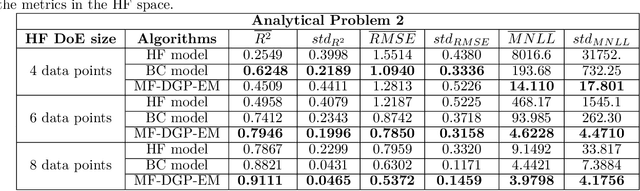

Multi-fidelity modeling with different input domain definitions using Deep Gaussian Processes

Jun 29, 2020

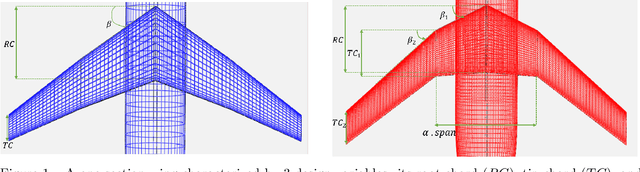

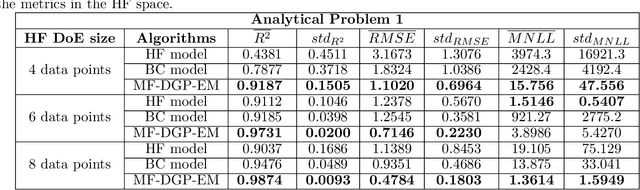

Abstract:Multi-fidelity approaches combine different models built on a scarce but accurate data-set (high-fidelity data-set), and a large but approximate one (low-fidelity data-set) in order to improve the prediction accuracy. Gaussian Processes (GPs) are one of the popular approaches to exhibit the correlations between these different fidelity levels. Deep Gaussian Processes (DGPs) that are functional compositions of GPs have also been adapted to multi-fidelity using the Multi-Fidelity Deep Gaussian process model (MF-DGP). This model increases the expressive power compared to GPs by considering non-linear correlations between fidelities within a Bayesian framework. However, these multi-fidelity methods consider only the case where the inputs of the different fidelity models are defined over the same domain of definition (e.g., same variables, same dimensions). In practice, due to simplification in the modeling of the low-fidelity, some variables may be omitted or a different parametrization may be used compared to the high-fidelity model. In this paper, Deep Gaussian Processes for multi-fidelity (MF-DGP) are extended to the case where a different parametrization is used for each fidelity. The performance of the proposed multi-fidelity modeling technique is assessed on analytical test cases and on structural and aerodynamic real physical problems.

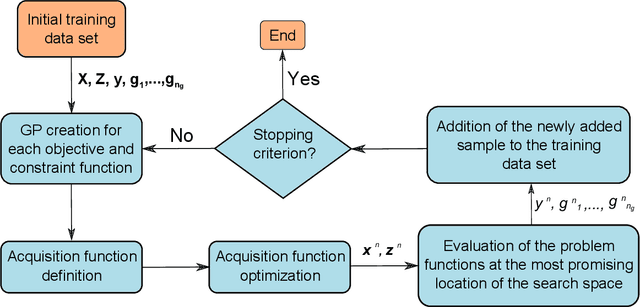

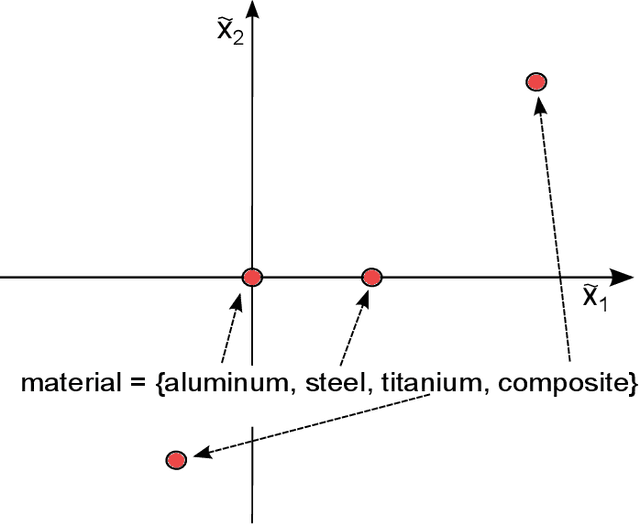

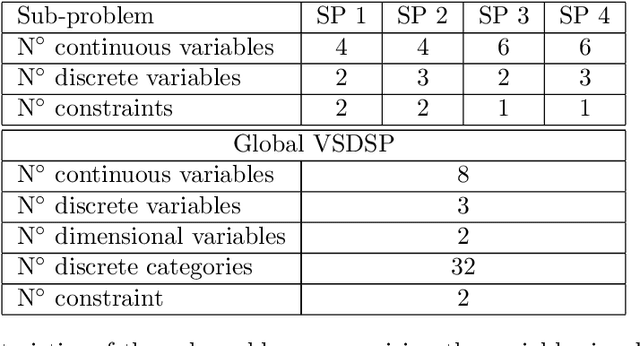

Bayesian optimization of variable-size design space problems

Mar 06, 2020

Abstract:Within the framework of complex system design, it is often necessary to solve mixed variable optimization problems, in which the objective and constraint functions can depend simultaneously on continuous and discrete variables. Additionally, complex system design problems occasionally present a variable-size design space. This results in an optimization problem for which the search space varies dynamically (with respect to both number and type of variables) along the optimization process as a function of the values of specific discrete decision variables. Similarly, the number and type of constraints can vary as well. In this paper, two alternative Bayesian Optimization-based approaches are proposed in order to solve this type of optimization problem. The first one consists of a budget allocation strategy that focuses the computational budget on the most promising design sub-spaces. The second approach, instead, is based on the definition of a kernel function that makes it possible to compute the covariance between samples characterized by partially different sets of variables. The results obtained on analytical and engineering-related test cases show a faster and more consistent convergence of both proposed methods with respect to the standard approaches.
