Edward Chen

MoSH: Modeling Multi-Objective Tradeoffs with Soft and Hard Bounds

Dec 09, 2024

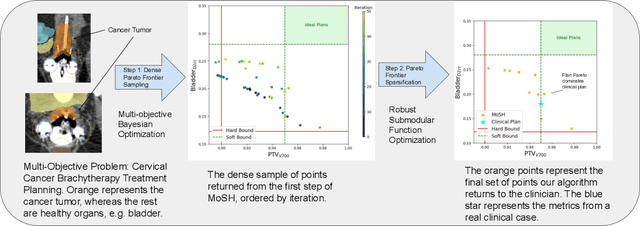

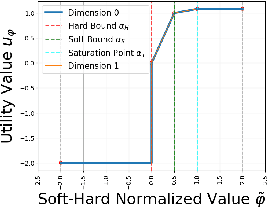

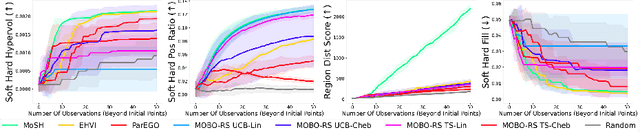

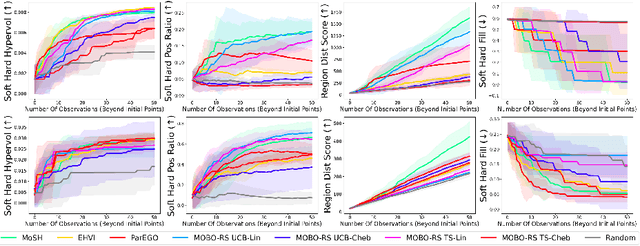

Abstract: Countless science and engineering applications in multi-objective optimization (MOO) necessitate that decision-makers (DMs) select a Pareto-optimal solution which aligns with their preferences. Evaluating individual solutions is often expensive, necessitating cost-sensitive optimization techniques. Due to competing objectives, the space of trade-offs is also expansive -- thus, examining the full Pareto frontier may prove overwhelming to a DM. Such real-world settings generally have loosely-defined and context-specific desirable regions for each objective function that can aid in constraining the search over the Pareto frontier. We introduce a novel conceptual framework that operationalizes these priors using soft-hard functions, SHFs, which allow for the DM to intuitively impose soft and hard bounds on each objective -- which has been lacking in previous MOO frameworks. Leveraging a novel minimax formulation for Pareto frontier sampling, we propose a two-step process for obtaining a compact set of Pareto-optimal points which respect the user-defined soft and hard bounds: (1) densely sample the Pareto frontier using Bayesian optimization, and (2) sparsify the selected set to surface to the user, using robust submodular function optimization. We prove that (2) obtains the optimal compact Pareto-optimal set of points from (1). We further show that many practical problems fit within the SHF framework and provide extensive empirical validation on diverse domains, including brachytherapy, engineering design, and large language model personalization. Specifically, for brachytherapy, our approach returns a compact set of points with over 3% greater SHF-defined utility than the next best approach. Among the other diverse experiments, our approach consistently leads in utility, allowing the DM to reach >99% of their maximum possible desired utility within validation of 5 points.
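To make the idea of Pareto-optimal points that respect hard bounds concrete, here is a minimal sketch. It is not the paper's SHF formulation, minimax sampling, or submodular sparsification step: it assumes every objective is maximized, treats a hard bound as a simple per-objective lower limit, and uses hypothetical names (`pareto_mask`, `within_hard_bounds`) and made-up candidate values.

```python
import numpy as np

def pareto_mask(points):
    """Return a boolean mask of non-dominated rows (all objectives maximized)."""
    pts = np.asarray(points, dtype=float)
    mask = np.ones(pts.shape[0], dtype=bool)
    for i in range(pts.shape[0]):
        # Row j dominates row i if it is >= in every objective and > in at least one.
        dominates_i = np.all(pts >= pts[i], axis=1) & np.any(pts > pts[i], axis=1)
        mask[i] = not dominates_i.any()
    return mask

def within_hard_bounds(points, hard_lower):
    """Return a boolean mask of rows meeting every (assumed) lower hard bound."""
    pts = np.asarray(points, dtype=float)
    return np.all(pts >= np.asarray(hard_lower, dtype=float), axis=1)

# Illustrative two-objective candidates (invented values, not from the paper).
candidates = np.array([[1.0, 5.0], [2.0, 4.0], [3.0, 3.0], [2.0, 2.0], [5.0, 0.5]])
hard_lower = np.array([1.5, 1.0])  # hypothetical hard bounds, one per objective

keep = pareto_mask(candidates) & within_hard_bounds(candidates, hard_lower)
selected = candidates[keep]
print(selected)
```

In this toy example, four of the five candidates are non-dominated, and the hard bounds then discard the two extreme trade-offs, leaving a smaller set for a decision-maker to inspect.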

Dynamic Model Agnostic Reliability Evaluation of Machine-Learning Methods Integrated in Instrumentation & Control Systems

Aug 08, 2023

Abstract: In recent years, the field of data-driven neural network-based machine learning (ML) algorithms has grown significantly and spurred research in its applicability to instrumentation and control systems. While they are promising in operational contexts, the trustworthiness of such algorithms is not adequately assessed. Failures of ML-integrated systems are poorly understood; the lack of comprehensive risk modeling can degrade the trustworthiness of these systems. In recent reports by the National Institute of Standards and Technology, trustworthiness in ML is a critical barrier to adoption and will play a vital role in intelligent systems' safe and accountable operation. Thus, in this work, we demonstrate a real-time model-agnostic method to evaluate the relative reliability of ML predictions by incorporating out-of-distribution detection on the training dataset. It is well documented that ML algorithms excel at interpolation (or near-interpolation) tasks but significantly degrade at extrapolation. This occurs when new samples are "far" from training samples. The method, referred to as the Laplacian distributed decay for reliability (LADDR), determines the difference between the operational and training datasets, which is used to calculate a prediction's relative reliability. LADDR is demonstrated on a feedforward neural network-based model used to predict safety significant factors during different loss-of-flow transients. LADDR is intended as a "data supervisor" and determines the appropriateness of well-trained ML models in the context of operational conditions. Ultimately, LADDR illustrates how training data can be used as evidence to support the trustworthiness of ML predictions when utilized for conventional interpolation tasks.
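The intuition that a prediction becomes less reliable as an operational sample moves "far" from the training samples can be sketched as follows. This is an illustration only, not the LADDR formula: it assumes Euclidean distance to the nearest training point and an exponential (Laplacian-style) decay with a hypothetical `scale` parameter, and the function name `relative_reliability` and the data are invented.

```python
import numpy as np

def relative_reliability(train_x, query_x, scale=1.0):
    """Score in (0, 1] that decays with distance to the nearest training sample.

    Assumption for illustration: score = exp(-d_nearest / scale).
    """
    train = np.asarray(train_x, dtype=float)
    query = np.asarray(query_x, dtype=float)
    # Pairwise Euclidean distances, shape (n_query, n_train).
    dists = np.linalg.norm(query[:, None, :] - train[None, :, :], axis=2)
    nearest = dists.min(axis=1)
    return np.exp(-nearest / scale)

# Invented training and operational samples in a 2-D feature space.
train_x = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
query_x = np.array([[0.0, 0.0], [5.0, 5.0]])

scores = relative_reliability(train_x, query_x)
print(scores)
```

A query that coincides with a training sample scores 1, while the distant query scores close to 0, which is the kind of signal a "data supervisor" could use to flag extrapolation.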

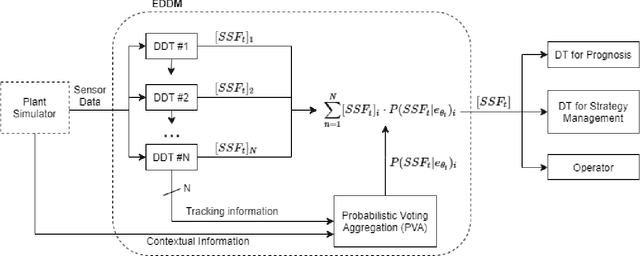

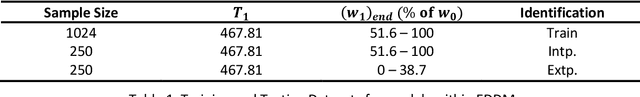

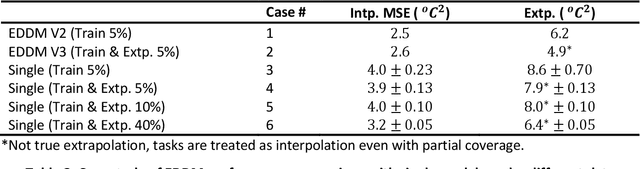

Advanced Transient Diagnostic with Ensemble Digital Twin Modeling

May 23, 2022

Abstract: The use of machine learning (ML) models as digital twins for reduced-order modeling (ROM) in lieu of system codes has gained traction over the past few years. However, due to the complex and non-linear nature of nuclear reactor transients as well as the large range of tasks required, it is infeasible for a single ML model to generalize across all tasks. In this paper, we incorporate issue-specific digital-twin ML models with ensembles to enhance the prediction outcome. The ensemble also utilizes an indirect probabilistic tracking method of surrogate state variables to produce accurate predictions of unobservable safety goals. The unique method, named Ensemble Diagnostic Digital-twin Modeling (EDDM), can not only select the most appropriate predictions from the incorporated diagnostic digital-twin models but also reduce the generalization error associated with training, as opposed to single models.

The Role of Pleura and Adipose in Lung Ultrasound AI

Jan 19, 2022

Abstract: In this paper, we study the significance of the pleura and adipose tissue in lung ultrasound AI analysis. We highlight their more prominent appearance when using high-frequency linear (HFL) instead of curvilinear ultrasound probes, showing HFL reveals better pleura detail. We compare the diagnostic utility of the pleura and adipose tissue using an HFL ultrasound probe. Masking the adipose tissue during training and inference (while retaining the pleural line and Merlin's space artifacts such as A-lines and B-lines) improved the AI model's diagnostic accuracy.

* Published in MICCAI 2021 workshop on Lessons Learned from the development and application of medical imaging-based AI technologies for combating COVID-19 (LL-COVID19). The first two authors contributed equally to this work

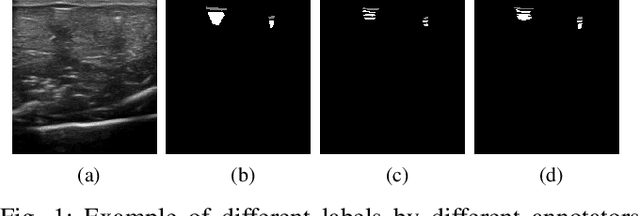

Weakly- and Semi-Supervised Probabilistic Segmentation and Quantification of Ultrasound Needle-Reverberation Artifacts to Allow Better AI Understanding of Tissue Beneath Needles

Nov 24, 2020

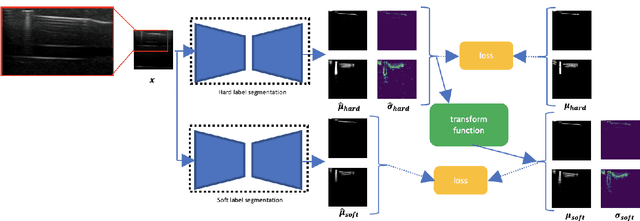

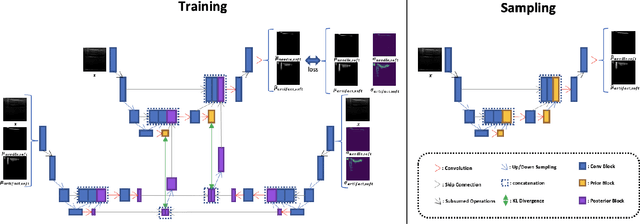

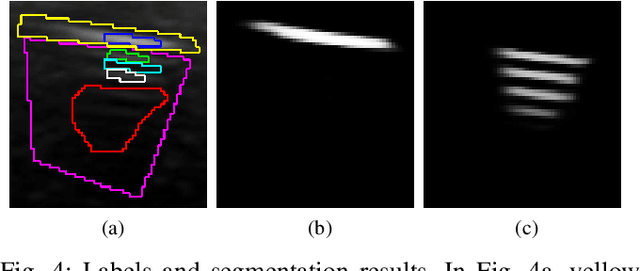

Abstract: Ultrasound image quality has been continually improving. However, when needles or other metallic objects are operating inside the tissue, the resulting reverberation artifacts can severely corrupt the surrounding image quality. Such effects are challenging for existing computer vision algorithms for medical image analysis. Needle reverberation artifacts can be hard to identify at times and affect various pixel values to different degrees. The boundaries of such artifacts are ambiguous, leading to disagreement among human experts labeling the artifacts. We propose a weakly- and semi-supervised, probabilistic needle-and-needle-artifact segmentation algorithm to separate the desired tissue-based pixel values from the superimposed artifacts. Our method models the intensity decay of artifact intensities and is designed to minimize the human labeling error. We demonstrate the applicability of the approach, comparing it against other segmentation algorithms. Our method is capable of differentiating the reverberations from artifact-free patches between reverberations, as well as modeling the intensity fall-off in the artifacts. Our method matches state-of-the-art artifact segmentation performance, and sets a new standard in estimating the per-pixel contributions of artifact vs underlying anatomy, especially in the immediately adjacent regions between reverberation lines.

A Study of Domain Generalization on Ultrasound-based Multi-Class Segmentation of Arteries, Veins, Ligaments, and Nerves Using Transfer Learning

Nov 13, 2020

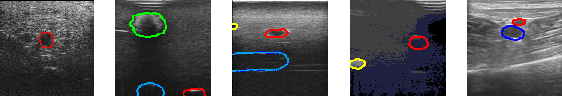

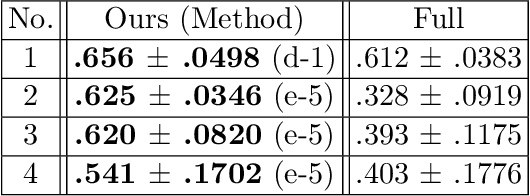

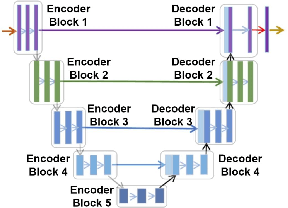

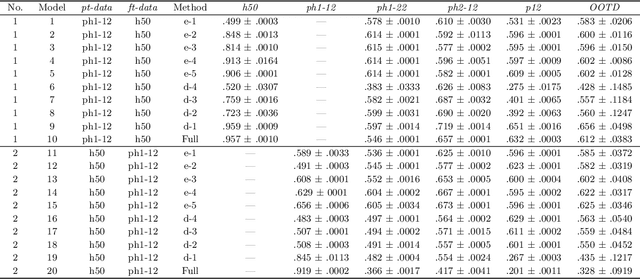

Abstract: Identifying landmarks in the femoral area is crucial for ultrasound (US)-based robot-guided catheter insertion, and their presentation varies when imaged with different scanners. As such, the performance of past deep learning-based approaches is also narrowly limited to the training data distribution; this can be circumvented by fine-tuning all or part of the model, yet the effects of fine-tuning are seldom discussed. In this work, we study the US-based segmentation of multiple classes through transfer learning by fine-tuning different contiguous blocks within the model, and evaluating on a gamut of US data from different scanners and settings. We propose a simple method for predicting generalization on unseen datasets and observe statistically significant differences between the fine-tuning methods while working towards domain generalization.

YouTube-8M Video Understanding Challenge Approach and Applications

Jun 26, 2017

Abstract: This paper introduces the YouTube-8M Video Understanding Challenge hosted as a Kaggle competition and also describes my approach to experimenting with various models. For each of my experiments, I provide the score result as well as possible improvements to be made. Towards the end of the paper, I discuss the various ensemble learning techniques that I applied on the dataset, which significantly boosted my overall competition score. Finally, I discuss the exciting future of video understanding research and also the many applications that such research could significantly improve.
