Wanwen Chen

Semantic-ICP: Iterative Closest Point for Non-rigid Multi-Organ Point Cloud Registration

Mar 02, 2025

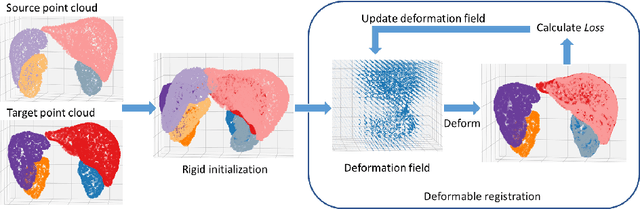

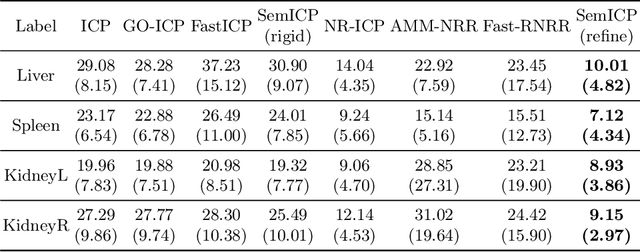

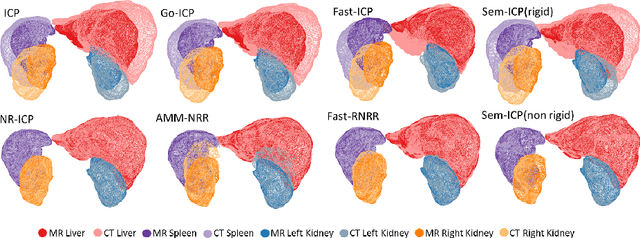

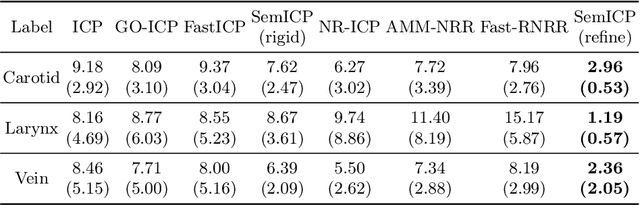

Abstract: Point cloud registration is important in computer-aided interventions (CAI). While learning-based point cloud registration methods have been developed, their clinical application is hampered by issues of generalizability and explainability. Therefore, classical point cloud registration methods, such as Iterative Closest Point (ICP), are still widely applied in CAI. ICP methods fail to consider that: (1) the points have well-defined semantic meaning, in that each point can be related to a specific anatomical label; (2) the deformation needs to follow biomechanical energy constraints. In this paper, we present a novel semantic ICP (sem-ICP) method that handles multiple point labels and uses linear elastic energy regularization. We use semantic labels to improve the robustness of the closest point matching and propose a new point cloud deformation representation to apply explicit biomechanical energy regularization. Our experiments on the Learn2reg abdominal MR-CT registration dataset and a trans-oral robotic surgery ultrasound-CT registration dataset show that our method improves the Hausdorff distance compared with other state-of-the-art ICP-based registration methods. We also perform a sensitivity study to show that our rigid initialization achieves better convergence with different initializations and visible ratios.
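
The idea of using semantic labels to make closest point matching more robust can be illustrated with a minimal sketch. This is not the sem-ICP implementation: the function name `semantic_closest_points`, the brute-force distance computation, and the convention of returning `-1` for unmatched points are all assumptions made for illustration. It only shows the matching step, restricted so that a source point can pair with target points of the same anatomical label.

```python
import numpy as np

def semantic_closest_points(src, src_labels, tgt, tgt_labels):
    """Match each source point to its nearest target point with the same label.

    Hypothetical helper for illustration only. Returns an integer array of
    target indices, with -1 where no target point shares the source label.
    """
    matches = np.full(len(src), -1, dtype=int)
    for label in np.unique(src_labels):
        src_idx = np.flatnonzero(src_labels == label)
        tgt_idx = np.flatnonzero(tgt_labels == label)
        if tgt_idx.size == 0:
            continue  # no candidate with this label, leave as -1
        # Pairwise Euclidean distances between same-label points (brute force).
        diff = src[src_idx, None, :] - tgt[None, tgt_idx, :]
        dist = np.linalg.norm(diff, axis=2)
        matches[src_idx] = tgt_idx[np.argmin(dist, axis=1)]
    return matches

# Two source points with labels 0 and 1 (e.g. two different organs).
src = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
src_labels = np.array([0, 1])
# The target point closest to src[0] carries the wrong label.
tgt = np.array([[0.05, 0.0, 0.0], [0.9, 0.0, 0.0], [0.2, 0.0, 0.0]])
tgt_labels = np.array([1, 1, 0])
matches = semantic_closest_points(src, src_labels, tgt, tgt_labels)
```

In this toy case a label-agnostic nearest-neighbour search would pair the first source point with a target point from a different organ, while the label-restricted search does not. A practical version would likely replace the brute-force distances with a spatial index such as a k-d tree per label.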

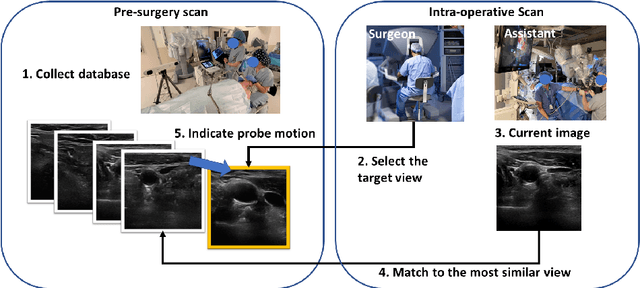

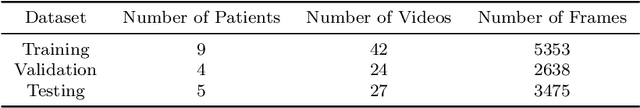

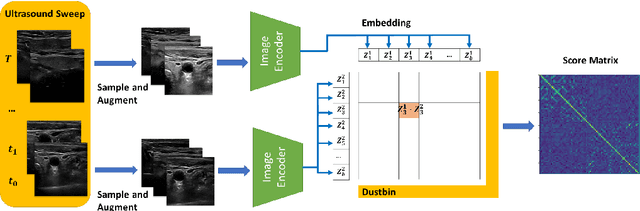

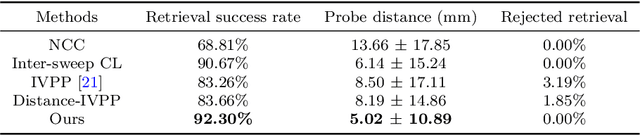

Image Retrieval with Intra-Sweep Representation Learning for Neck Ultrasound Scanning Guidance

Dec 10, 2024

Abstract: Purpose: Intraoperative ultrasound (US) can enhance real-time visualization in transoral robotic surgery. The surgeon creates a mental map with a pre-operative scan. Then, a surgical assistant performs freehand US scanning during the surgery while the surgeon operates at the remote surgical console. Communicating the target scanning plane in the surgeon's mental map is difficult. Automatic image retrieval can help match intraoperative images to preoperative scans, guiding the assistant to adjust the US probe toward the target plane. Methods: We propose a self-supervised contrastive learning approach to match intraoperative US views to a preoperative image database. We introduce a novel contrastive learning strategy that leverages intra-sweep similarity and US probe location to improve feature encoding. Additionally, our model incorporates a flexible threshold to reject unsatisfactory matches. Results: Our method achieves 92.30% retrieval accuracy on simulated data and outperforms state-of-the-art temporal-based contrastive learning approaches. Our ablation study demonstrates that using probe location in the optimization goal improves image representation, suggesting that semantic information can be extracted from probe location. We also present our approach on real patient data to show the feasibility of the proposed US probe localization system despite tissue deformation from tongue retraction. Conclusion: Our contrastive learning method, which utilizes intra-sweep similarity and US probe location, enhances US image representation learning. We also demonstrate the feasibility of using our image retrieval method to provide neck US localization on real patient US after tongue retraction.
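
Retrieval against a preoperative database with a rejection threshold can be sketched as follows. This is an illustrative assumption rather than the paper's model: the embeddings are taken as given (in the paper they come from the contrastive encoder), cosine similarity is assumed as the matching score, and the function name `retrieve` and the fixed default threshold of 0.8 are hypothetical.

```python
import numpy as np

def retrieve(query, database, threshold=0.8):
    """Return (index, similarity) of the best database match, or (None, similarity)
    if the best cosine similarity falls below the rejection threshold.

    Hypothetical helper: `query` is a 1-D embedding, `database` is a 2-D array
    with one embedding per row.
    """
    q = query / np.linalg.norm(query)
    db = database / np.linalg.norm(database, axis=1, keepdims=True)
    sims = db @ q  # cosine similarity to every database entry
    best = int(np.argmax(sims))
    if sims[best] < threshold:
        return None, float(sims[best])  # reject an unsatisfactory match
    return best, float(sims[best])

# Toy database of two preoperative embeddings.
database = np.array([[1.0, 0.0], [0.0, 1.0]])
accepted = retrieve(np.array([0.9, 0.1]), database)  # close to entry 0
rejected = retrieve(np.array([1.0, 1.0]), database)  # ambiguous, below threshold
```

Rejecting low-similarity queries lets the system report "no match" instead of guiding the assistant toward a wrong plane.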

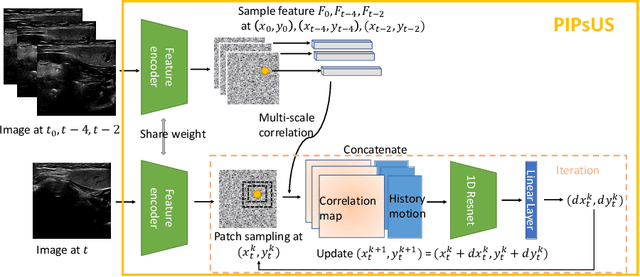

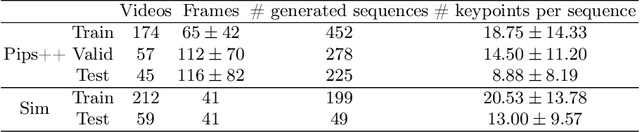

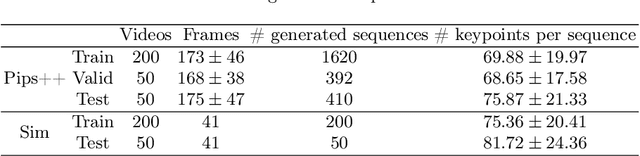

PIPsUS: Self-Supervised Dense Point Tracking in Ultrasound

Mar 08, 2024

Abstract: Finding point-level correspondences is a fundamental problem in ultrasound (US), since it can enable US landmark tracking for intraoperative image guidance in different surgeries, including head and neck. Most existing US tracking methods, e.g., those based on optical flow or feature matching, were initially designed for RGB images before being applied to US. Therefore, domain shift can impact their performance. Training could be supervised by ground-truth correspondences, but these are expensive to acquire in US. To solve these problems, we propose a self-supervised pixel-level tracking model called PIPsUS. Our model can track an arbitrary number of points in one forward pass and exploits temporal information by considering multiple, instead of just consecutive, frames. We developed a new self-supervised training strategy that utilizes a long-term point-tracking model trained for RGB images as a teacher to guide the model to learn realistic motions and use data augmentation to enforce tracking from US appearance. We evaluate our method on neck and oral US and echocardiography, showing higher point tracking accuracy when compared with fast normalized cross-correlation and tuned optical flow. Code will be available once the paper is accepted.
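
For context on the normalized cross-correlation baseline mentioned in the abstract, the sketch below tracks one point between two frames by exhaustive patch matching. It is a plain (not fast) NCC search written for illustration and is not the PIPsUS model or the baseline implementation used in the paper. The function names `ncc` and `track_point`, the patch half-width, and the search radius are all assumptions, and the tracked point is assumed to lie far enough from the border of the previous frame for the template to fit.

```python
import numpy as np

def ncc(a, b):
    """Normalized cross-correlation of two equally sized patches."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return 0.0 if denom == 0 else float((a * b).sum() / denom)

def track_point(prev, curr, point, half=2, search=3):
    """Find the position in `curr` whose patch best matches the patch around
    `point` in `prev`, searching within +/- `search` pixels (hypothetical helper)."""
    r, c = point
    template = prev[r - half:r + half + 1, c - half:c + half + 1]
    best, best_score = point, -np.inf
    for dr in range(-search, search + 1):
        for dc in range(-search, search + 1):
            rr, cc = r + dr, c + dc
            # Skip candidates whose patch would leave the image.
            if (rr - half < 0 or cc - half < 0
                    or rr + half + 1 > curr.shape[0]
                    or cc + half + 1 > curr.shape[1]):
                continue
            patch = curr[rr - half:rr + half + 1, cc - half:cc + half + 1]
            score = ncc(template, patch)
            if score > best_score:
                best, best_score = (rr, cc), score
    return best, best_score

# Synthetic frames: the second frame is the first shifted by (1, 2) pixels.
rng = np.random.default_rng(0)
prev = rng.random((32, 32))
curr = np.roll(prev, shift=(1, 2), axis=(0, 1))
best, score = track_point(prev, curr, (10, 10))
```

This kind of matching only compares two frames at a time and relies on local appearance, which is the limitation the abstract contrasts with a model that uses multiple frames.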

Transcervical Ultrasound Image Guidance System for Transoral Robotic Surgery

Nov 29, 2022

Abstract: Purpose: Trans-oral robotic surgery (TORS) using the da Vinci surgical robot is a new minimally-invasive surgery method to treat oropharyngeal tumors, but it is a challenging operation. Augmented reality (AR) based on intra-operative ultrasound (US) has the potential to enhance the visualization of the anatomy and cancerous tumors to provide additional tools for decision-making in surgery. Methods: We propose and carry out preliminary evaluations of a US-guided AR system for TORS, with the transducer placed on the neck for a transcervical view. Firstly, we perform a novel MRI-transcervical 3D US registration study. Secondly, we develop a US-robot calibration method with an optical tracker and an AR system to display the anatomy mesh model in the real-time endoscope images inside the surgeon console. Results: Our AR system reaches a mean projection error of 26.81 and 27.85 pixels for the projection from the US to stereo cameras in a water bath experiment. The average target registration error for MRI to 3D US is 8.90 mm for the 3D US transducer and 5.85 mm for freehand 3D US, and the average distance between the vessel centerlines is 2.32 mm. Conclusion: We demonstrate the first proof-of-concept transcervical US-guided AR system for TORS and the feasibility of trans-cervical 3D US-MRI registration. Our results show that trans-cervical 3D US is a promising technique for TORS image guidance.
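
The target registration error (TRE) reported above is conventionally the mean Euclidean distance between corresponding landmarks after applying the estimated transform. The sketch below shows that computation for a rigid 4x4 homogeneous transform. The function name, the landmark coordinates, and the transform are made up for illustration, and the paper's exact evaluation protocol may differ.

```python
import numpy as np

def target_registration_error(fixed, moving, transform):
    """Mean distance between fixed landmarks and transformed moving landmarks.

    Hypothetical helper: `fixed` and `moving` are (N, 3) arrays of corresponding
    landmarks in mm, `transform` is a 4x4 homogeneous matrix mapping the moving
    space into the fixed space.
    """
    ones = np.ones((len(moving), 1))
    moving_h = np.hstack([moving, ones])          # to homogeneous coordinates
    mapped = (transform @ moving_h.T).T[:, :3]    # apply transform, drop w
    return float(np.linalg.norm(mapped - fixed, axis=1).mean())

# Made-up landmarks: the moving set is offset by 1 mm along x.
fixed = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 10.0, 0.0]])
moving = fixed + np.array([1.0, 0.0, 0.0])

identity = np.eye(4)
correction = np.eye(4)
correction[0, 3] = -1.0  # translate by -1 mm along x

tre_before = target_registration_error(fixed, moving, identity)
tre_after = target_registration_error(fixed, moving, correction)
```

Here the TRE is 1 mm before correction and 0 mm after, since the only misalignment in this toy setup is a pure translation.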

The Role of Pleura and Adipose in Lung Ultrasound AI

Jan 19, 2022

Abstract: In this paper, we study the significance of the pleura and adipose tissue in lung ultrasound AI analysis. We highlight their more prominent appearance when using high-frequency linear (HFL) instead of curvilinear ultrasound probes, showing HFL reveals better pleura detail. We compare the diagnostic utility of the pleura and adipose tissue using an HFL ultrasound probe. Masking the adipose tissue during training and inference (while retaining the pleural line and Merlin's space artifacts such as A-lines and B-lines) improved the AI model's diagnostic accuracy.

* Published in MICCAI 2021 workshop on Lessons Learned from the development and application of medical imaging-based AI technologies for combating COVID-19 (LL-COVID19). The first two authors contributed equally to this work

Ultrasound Confidence Maps of Intensity and Structure Based on Directed Acyclic Graphs and Artifact Models

Nov 24, 2020

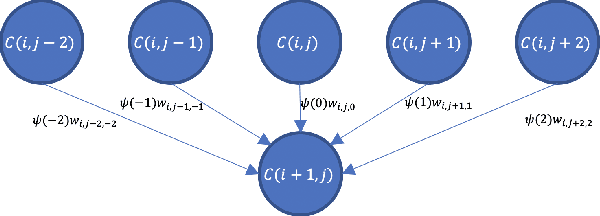

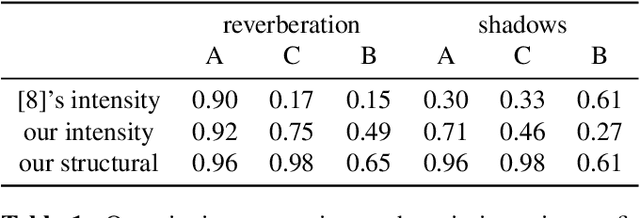

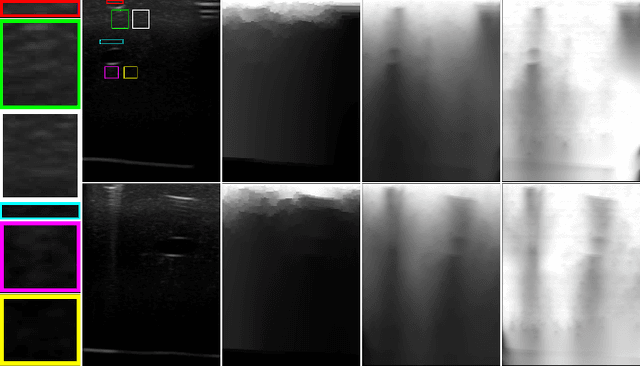

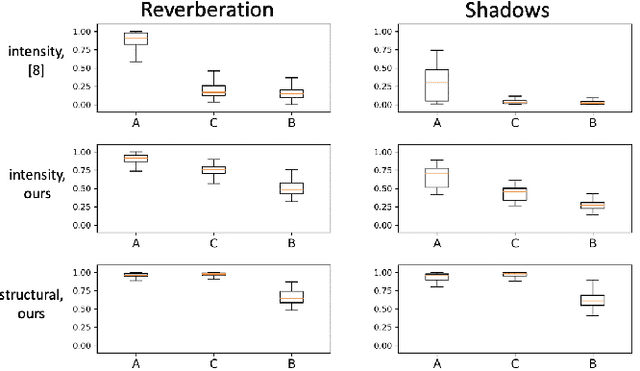

Abstract: Ultrasound imaging has been improving, but continues to suffer from inherent artifacts that are challenging to model, such as attenuation, shadowing, diffraction, speckle, etc. These artifacts can potentially confuse image analysis algorithms unless an attempt is made to assess the certainty of individual pixel values. Our novel confidence algorithms analyze pixel values using a directed acyclic graph based on acoustic physical properties of ultrasound imaging. We demonstrate unique capabilities of our approach and compare it against previous confidence-measurement algorithms for shadow-detection and image-compounding tasks.
