Edgar Anarossi

KeyMPs: One-Shot Vision-Language Guided Motion Generation by Sequencing DMPs for Occlusion-Rich Tasks

Apr 14, 2025

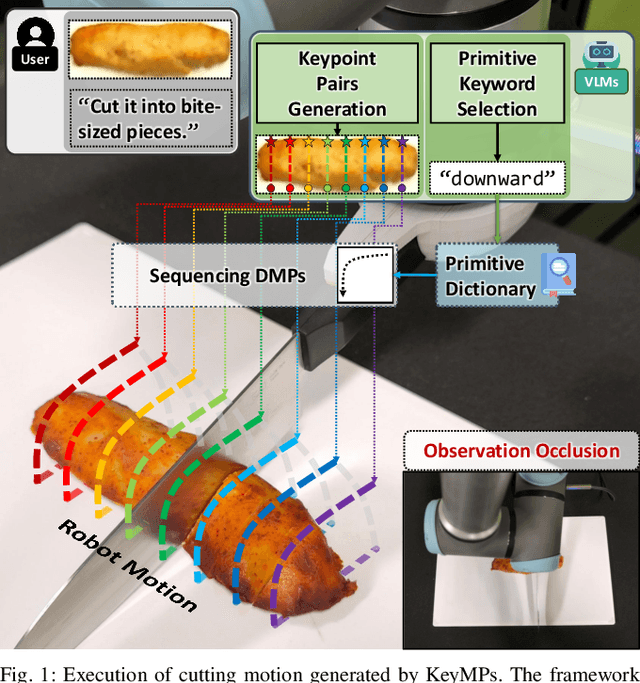

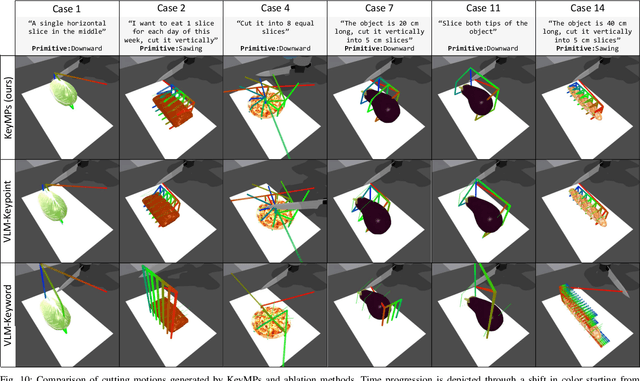

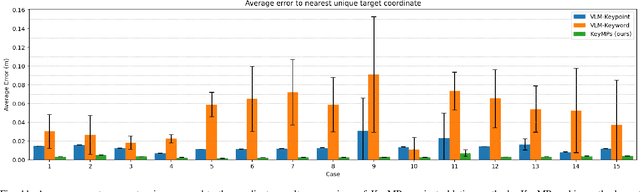

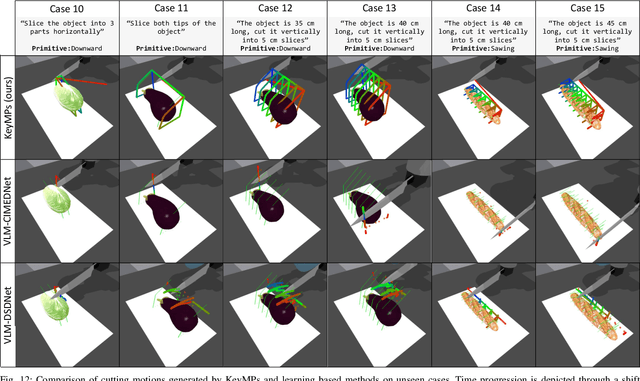

Abstract: Dynamic Movement Primitives (DMPs) provide a flexible framework in which smooth robotic motions are encoded into modular parameters. However, they struggle to integrate multimodal inputs commonly used in robotics, such as vision and language. Enabling DMPs to handle multimodal inputs is essential to realizing their full potential. In addition, we aim to extend DMPs to object-focused tasks that require one-shot generation of complex motions, because observations can easily become occluded mid-execution in such tasks (e.g., knife occlusion in cake icing or hand occlusion in dough kneading). A promising approach is to leverage Vision-Language Models (VLMs), which process multimodal data and can grasp high-level concepts. However, VLMs typically lack the knowledge and capability to infer low-level motion details directly, and instead serve only as a bridge between high-level instructions and low-level control. To address this limitation, we propose Keyword Labeled Primitive Selection and Keypoint Pairs Generation Guided Movement Primitives (KeyMPs), a framework that combines VLMs with the sequencing of DMPs. KeyMPs use the high-level reasoning of VLMs to select a reference primitive through keyword labeled primitive selection, and their spatial awareness to generate, through keypoint pairs generation, the spatial scaling parameters used for sequencing DMPs so that the overall motion generalizes. Together, these enable one-shot vision-language guided motion generation that aligns with the intent expressed in the multimodal input. We validate our approach on an occlusion-rich manipulation task, specifically object cutting experiments in both simulated and real-world environments, demonstrating superior performance over other DMP-based methods that integrate VLM support.
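To make the idea of sequencing DMPs from keypoint pairs concrete, the following is a minimal sketch and not the KeyMPs implementation. It assumes a standard one-dimensional discrete DMP (a critically damped spring toward the goal plus a phase-gated forcing term scaled by the start-to-goal distance) and reuses one set of reference weights for every (start, goal) pair. The names `rollout_dmp`, `sequence_dmps`, `w_ref`, and `keypoint_pairs`, as well as the gain values, are hypothetical choices made for illustration.

```python
import numpy as np

def rollout_dmp(w, y0, g, n_steps=200, alpha_z=25.0, alpha_x=4.0):
    """Integrate a 1-D discrete DMP from y0 toward g (len(w) must be >= 2)."""
    w = np.asarray(w, dtype=float)
    beta_z = alpha_z / 4.0                      # critical damping (assumed gains)
    dt = 1.0 / n_steps                          # unit-duration segment
    # Basis centres spread along the decaying phase variable x in (0, 1].
    c = np.exp(-alpha_x * np.linspace(0.0, 1.0, len(w)))
    h = 1.0 / (np.gradient(c) ** 2 + 1e-12)     # basis widths
    y, z, x = float(y0), 0.0, 1.0
    traj = np.empty(n_steps + 1)
    traj[0] = y
    for k in range(n_steps):
        psi = np.exp(-h * (x - c) ** 2)
        # Forcing term: shaped by w, gated by x, spatially scaled by (g - y0).
        f = (psi @ w) / (psi.sum() + 1e-10) * x * (g - y0)
        dz = alpha_z * (beta_z * (g - y) - z) + f
        z += dz * dt
        y += z * dt
        x += -alpha_x * x * dt
        traj[k + 1] = y
    return traj

def sequence_dmps(w, keypoint_pairs, **kwargs):
    """Concatenate one rollout per (start, goal) pair, reusing the weights w."""
    return np.concatenate([rollout_dmp(w, s, g, **kwargs) for s, g in keypoint_pairs])

# Hypothetical reference primitive and keypoint pairs (illustrative values only).
w_ref = np.array([30.0, -20.0, 10.0, 0.0, -5.0])
keypoint_pairs = [(0.0, 1.0), (1.0, 0.5), (0.5, 2.0)]
motion = sequence_dmps(w_ref, keypoint_pairs)
```

Each segment starts exactly at its own start keypoint, so a discontinuity between segments appears only where consecutive pairs do not share an endpoint. A multi-dimensional version would run one such system per axis with a shared phase variable.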

Deep Segmented DMP Networks for Learning Discontinuous Motions

Sep 01, 2023

Abstract: Discontinuous motion, which is composed of multiple continuous motions with sudden changes in direction or velocity in between, can be seen in state-aware robotic tasks. Such tasks are often coordinated with sensor information such as images. In recent years, Dynamic Movement Primitives (DMPs), a method for generating motor behaviors suitable for robotics, have received several deep-learning-based improvements that allow associations between sensor information and DMP parameters. While a deep learning framework does address DMPs' inability to associate directly with an input, we found that it has difficulty learning DMP parameters for complex motions that require a large number of basis functions to reconstruct. In this paper, we propose a novel deep learning network architecture called Deep Segmented DMP Network (DSDNet), which generates variable-length segmented motion by combining a network architecture that predicts multiple DMP parameters, a double-stage decoder network, and a number-of-segments predictor. The proposed method is evaluated on both artificial data (object cutting and pick-and-place) and real data (object cutting), where it achieves higher generalization capability, task achievement, and data efficiency than the previous method in generating discontinuous long-horizon motions.

Disturbance Injection under Partial Automation: Robust Imitation Learning for Long-horizon Tasks

Mar 22, 2023

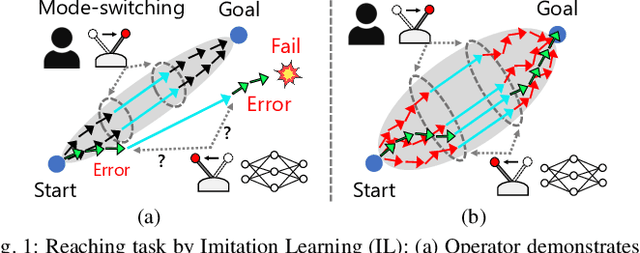

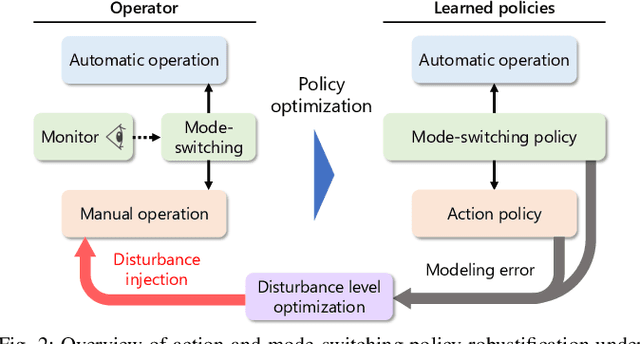

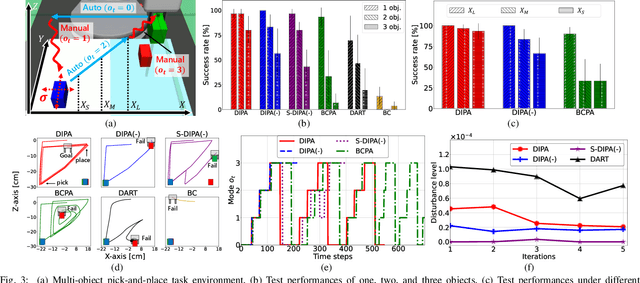

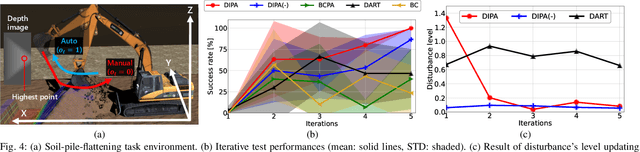

Abstract: Partial Automation (PA) with intelligent support systems has been introduced in industrial machinery and advanced automobiles to reduce the burden of long hours of human operation. Under PA, operators perform manual operations (providing actions) and operations that switch between automatic and manual modes (mode-switching). Since PA reduces the total duration of manual operation, both the action and mode-switching operations can be replicated by imitation learning with high sample efficiency. To this end, this paper proposes Disturbance Injection under Partial Automation (DIPA) as a novel imitation learning framework. In DIPA, the mode and the actions (in manual mode) are assumed to be observable in each state and are used to learn both action and mode-switching policies. This learning is made robust by injecting disturbances into the operator's actions, with the disturbance level optimized to minimize the covariate shift under PA. We experimentally validated the effectiveness of our method on long-horizon tasks in two simulations and a real robot environment, and confirmed that it outperformed previous methods and reduced the demonstration burden.

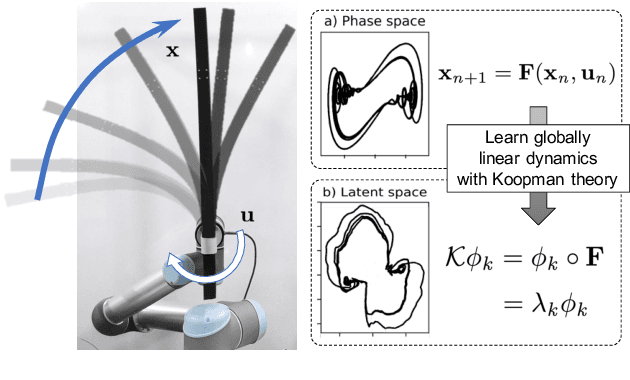

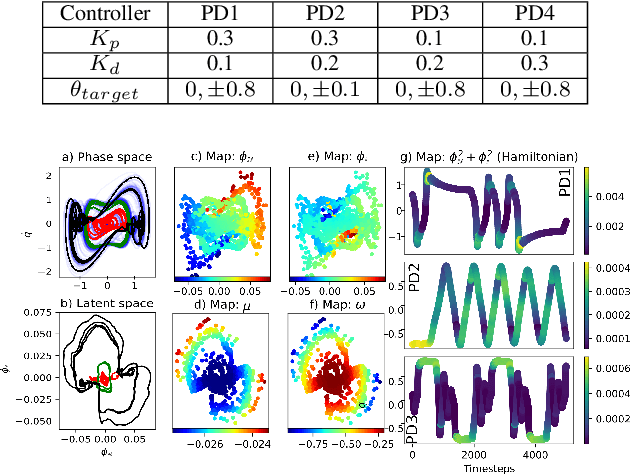

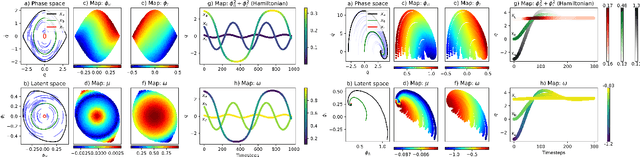

Deep Koopman with Control: Spectral Analysis of Soft Robot Dynamics

Oct 14, 2022

Abstract: Soft robots are challenging to model and control because their inherent non-linearities (e.g., elasticity and deformation) often require complex, explicit physics-based analytical modeling (e.g., a priori geometric definitions). While machine learning can be used to learn non-linear control models in a data-driven way, these models often lack an intuitive internal physical interpretation and representation, which limits dynamical analysis. To address this, this paper presents an approach that uses Koopman operator theory and deep neural networks to provide a global linear description of non-linear control systems. Specifically, by globally linearising the dynamics, the Koopman operator can be analyzed using spectral decomposition to characterise important physics-based interpretations, such as functional growths and oscillations. Experiments in this paper demonstrate this approach for controlling non-linear soft robots and show that the model outputs are interpretable in the context of spectral analysis.
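As a rough illustration of how a linear description with control can be read spectrally, the sketch below fits z[t+1] ≈ A z[t] + B u[t] by ordinary least squares and converts the eigenvalues of A into growth rates and oscillation frequencies. It is not the paper's method: it assumes the lifted states are already given (here, synthetic data from a known damped rotation) instead of being learned by a deep network, and the names `fit_linear_with_control` and `spectral_summary` are hypothetical.

```python
import numpy as np

def fit_linear_with_control(Z, U):
    """Least-squares fit of z[t+1] ~ A z[t] + B u[t].

    Z: (T+1, n) array of (lifted) states, U: (T, m) array of inputs.
    """
    X = np.hstack([Z[:-1], U])                  # regressors, shape (T, n+m)
    Y = Z[1:]                                   # targets, shape (T, n)
    W, *_ = np.linalg.lstsq(X, Y, rcond=None)   # shape (n+m, n)
    n = Z.shape[1]
    return W[:n].T, W[n:].T                     # A is (n, n), B is (n, m)

def spectral_summary(A, dt):
    """Map discrete-time eigenvalues to growth rates (1/s) and frequencies (Hz)."""
    eigvals = np.linalg.eigvals(A)
    growth = np.log(np.abs(eigvals)) / dt       # negative means decay
    freq = np.abs(np.angle(eigvals)) / (2.0 * np.pi * dt)
    return eigvals, growth, freq

# Synthetic example: a damped rotation driven by random inputs (assumed values).
rng = np.random.default_rng(0)
theta, r, dt = 0.3, 0.95, 0.1
A_true = r * np.array([[np.cos(theta), -np.sin(theta)],
                       [np.sin(theta),  np.cos(theta)]])
B_true = np.array([[0.0], [0.1]])
U = rng.normal(size=(200, 1))
Z = np.zeros((201, 2))
Z[0] = [1.0, 0.0]
for t in range(200):
    Z[t + 1] = A_true @ Z[t] + B_true @ U[t]

A_est, B_est = fit_linear_with_control(Z, U)
eigvals, growth, freq = spectral_summary(A_est, dt)
```

An eigenvalue with magnitude below one corresponds to a decaying mode, and its angle sets the oscillation frequency, which is the kind of growth and oscillation reading the abstract refers to.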
