Dongheng Zhang

Lessons from Deploying Learning-based CSI Localization on a Large-Scale ISAC Platform

Apr 24, 2025

Abstract:In recent years, Channel State Information (CSI), recognized for its fine-grained spatial characteristics, has attracted increasing attention in WiFi-based indoor localization. However, despite its potential, CSI-based approaches have yet to achieve the same level of deployment scale and commercialization as those based on Received Signal Strength Indicator (RSSI). A key limitation lies in the fact that most existing CSI-based systems are developed and evaluated in controlled, small-scale environments, limiting their generalizability. To bridge this gap, we explore the deployment of a large-scale CSI-based localization system involving over 400 Access Points (APs) in a real-world building under the Integrated Sensing and Communication (ISAC) paradigm. We highlight two critical yet often overlooked factors: the underutilization of unlabeled data and the inherent heterogeneity of CSI measurements. To address these challenges, we propose a novel CSI-based learning framework for WiFi localization, tailored for large-scale ISAC deployments on the server side. Specifically, we employ a novel graph-based structure to model heterogeneous CSI data and reduce redundancy. We further design a pretext pretraining task that incorporates spatial and temporal priors to effectively leverage large-scale unlabeled CSI data. Complementarily, we introduce a confidence-aware fine-tuning strategy to enhance the robustness of localization results. In a leave-one-smartphone-out experiment spanning five floors and 25,600 m², we achieve a median localization error of 2.17 meters and a floor accuracy of 99.49%. This performance corresponds to an 18.7% reduction in mean absolute error (MAE) compared to the best-performing baseline.
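
The two headline metrics in this abstract, median localization error and floor accuracy, can be illustrated with a minimal sketch. It assumes each sample is a hypothetical `(x_m, y_m, floor)` tuple and that the error is the horizontal Euclidean distance; the abstract does not state the exact evaluation protocol, so both are assumptions, and the numbers below are toy values, not results from the paper.

```python
import math
from statistics import median


def evaluate_localization(pred, truth):
    """Return (median horizontal error in meters, floor accuracy in [0, 1]).

    pred and truth are equal-length lists of (x_m, y_m, floor) tuples.
    This tuple layout is an assumption made for illustration only.
    """
    if len(pred) != len(truth) or not pred:
        raise ValueError("pred and truth must be non-empty and the same length")
    # Horizontal Euclidean distance between each prediction and its ground truth.
    errors = [
        math.hypot(px - tx, py - ty)
        for (px, py, _), (tx, ty, _) in zip(pred, truth)
    ]
    # Fraction of samples whose predicted floor matches the true floor.
    floor_hits = sum(1 for (_, _, pf), (_, _, tf) in zip(pred, truth) if pf == tf)
    return median(errors), floor_hits / len(pred)


# Toy data: three predictions against three ground-truth positions.
pred = [(1.0, 2.0, 3), (4.0, 6.0, 1), (0.0, 0.0, 2)]
truth = [(1.0, 0.0, 3), (1.0, 2.0, 1), (0.0, 1.0, 1)]
median_error, floor_accuracy = evaluate_localization(pred, truth)
```

In a leave-one-smartphone-out setting, a function like this would be called once per held-out device, on predictions from a model trained on the remaining devices.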

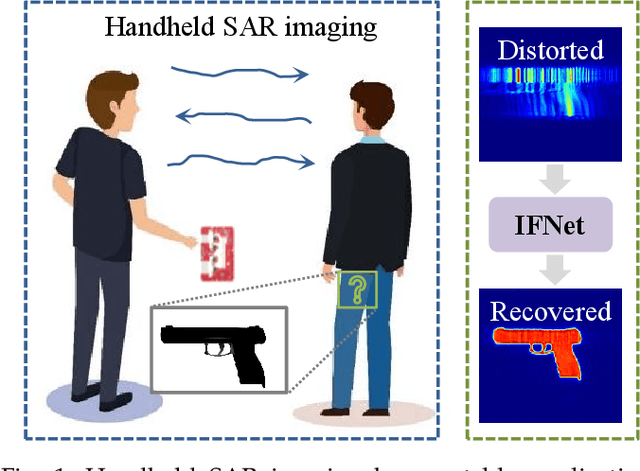

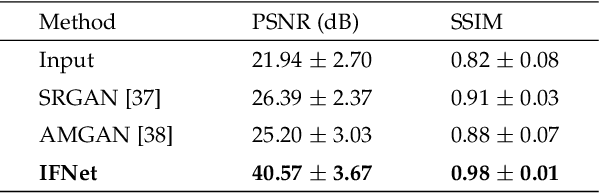

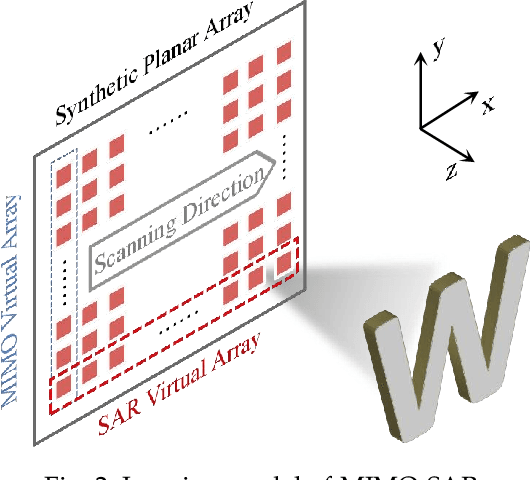

IFNet: Deep Imaging and Focusing for Handheld SAR with Millimeter-wave Signals

May 06, 2024

Abstract:Recent advancements have showcased the potential of handheld millimeter-wave (mmWave) imaging, which applies synthetic aperture radar (SAR) principles in portable settings. However, existing studies addressing handheld motion errors either rely on costly tracking devices or employ simplified imaging models, leading to impractical deployment or limited performance. In this paper, we present IFNet, a novel deep unfolding network that combines the strengths of signal processing models and deep neural networks to achieve robust imaging and focusing for handheld mmWave systems. We first formulate the handheld imaging model by integrating multiple priors about mmWave images and handheld phase errors. Furthermore, we transform the optimization processes into an iterative network structure for improved and efficient imaging performance. Extensive experiments demonstrate that IFNet effectively compensates for handheld phase errors and recovers high-fidelity images from severely distorted signals. In comparison with existing methods, IFNet can achieve at least 11.89 dB improvement in average peak signal-to-noise ratio (PSNR) and 64.91% improvement in average structural similarity index measure (SSIM) on a real-world dataset.
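
For readers unfamiliar with the PSNR figure quoted above, the following is a minimal sketch of the standard definition, PSNR = 10 log10(peak² / MSE). It treats images as flat lists of intensities and assumes a peak value of 1.0; both choices are illustrative and are not taken from the paper.

```python
import math


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Toy example: a 4-pixel "image" with two slightly perturbed pixels.
value_db = psnr([0.0, 0.5, 1.0, 0.5], [0.1, 0.5, 0.9, 0.5])
```

Because PSNR is logarithmic, a gain of roughly 10 dB corresponds to about a tenfold reduction in mean squared error.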

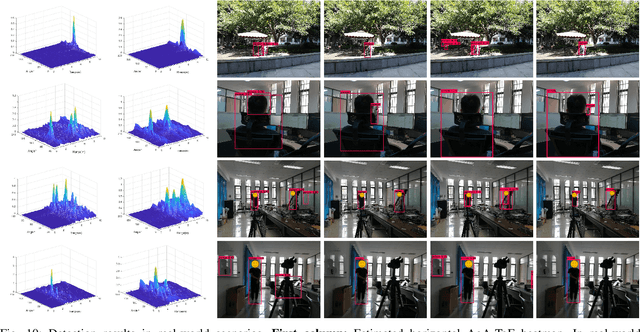

DREAM-PCD: Deep Reconstruction and Enhancement of mmWave Radar Pointcloud

Sep 27, 2023

Abstract:Millimeter-wave (mmWave) radar pointcloud offers attractive potential for 3D sensing, thanks to its robustness in challenging conditions such as smoke and low illumination. However, existing methods fail to simultaneously address the three main challenges in mmWave radar pointcloud reconstruction: loss of specular information, low angular resolution, and strong interference and noise. In this paper, we propose DREAM-PCD, a novel framework that combines signal processing and deep learning methods into three well-designed components to tackle all three challenges: Non-Coherent Accumulation for dense points, Synthetic Aperture Accumulation for improved angular resolution, and Real-Denoise Multiframe network for noise and interference removal. Moreover, the causal multiframe and "real-denoise" mechanisms in DREAM-PCD significantly enhance the generalization performance. We also introduce RadarEyes, the largest mmWave indoor dataset with over 1,000,000 frames, featuring a unique design incorporating two orthogonal single-chip radars, lidar, and camera, enriching dataset diversity and applications. Experimental results demonstrate that DREAM-PCD surpasses existing methods in reconstruction quality, and exhibits superior generalization and real-time capabilities, enabling high-quality real-time reconstruction of radar pointcloud under various parameters and scenarios. We believe that DREAM-PCD, along with the RadarEyes dataset, will significantly advance mmWave radar perception in future real-world applications.

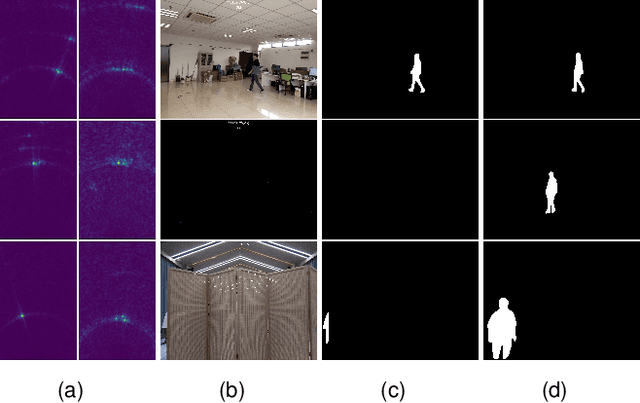

RFMask: A Simple Baseline for Human Silhouette Segmentation with Radio Signals

Jan 25, 2022

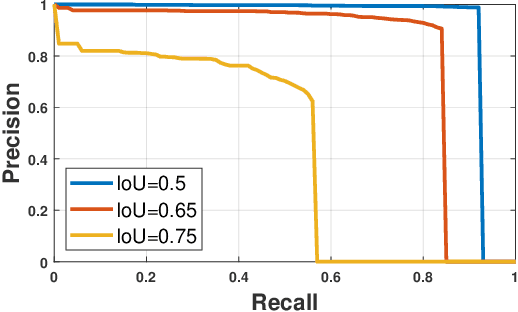

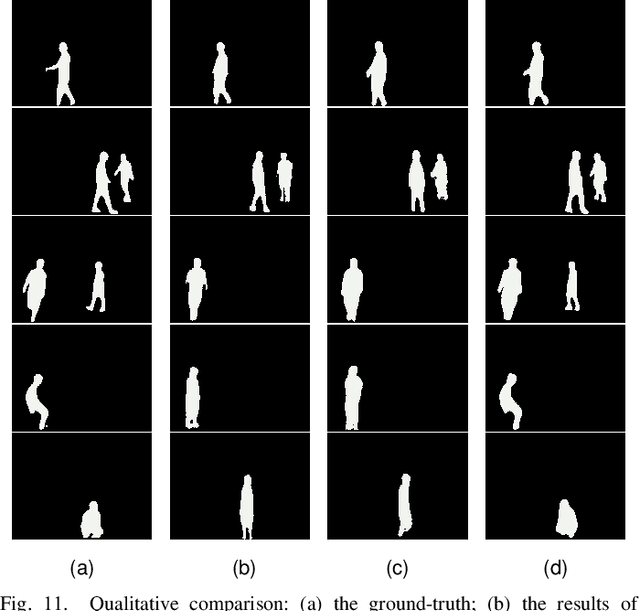

Abstract:Human silhouette segmentation, originally defined in computer vision, has achieved promising results for understanding human activities. However, physical limitations cause existing systems based on optical cameras to suffer from severe performance degradation under low illumination, smoke, and/or opaque obstruction conditions. To overcome such limitations, in this paper, we propose to utilize radio signals, which can traverse obstacles and are unaffected by lighting conditions, to achieve silhouette segmentation. The proposed RFMask framework is composed of three modules. It first transforms RF signals captured by millimeter wave radar on two planes into the spatial domain and suppresses interference with the signal processing module. Then, it locates human reflections on RF frames and extracts features from surrounding signals with the human detection module. Finally, the extracted features from RF frames are aggregated with an attention-based mask generation module. To verify our proposed framework, we collect a dataset containing 804,760 radio frames and 402,380 camera frames with human activities under various scenes. Experimental results show that the proposed framework can achieve impressive human silhouette segmentation even under challenging scenarios (such as low light and occlusion) where traditional optical-camera-based methods fail. To the best of our knowledge, this is the first investigation towards segmenting human silhouettes based on millimeter wave signals. We hope that our work can serve as a baseline and inspire further research that performs vision tasks with radio signals. The dataset and code will be made public.

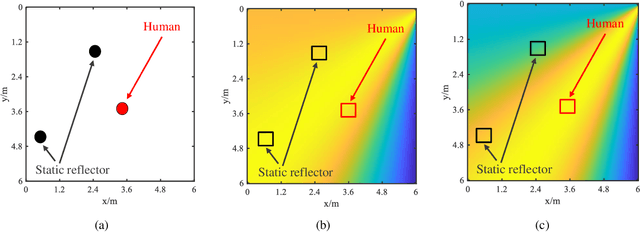

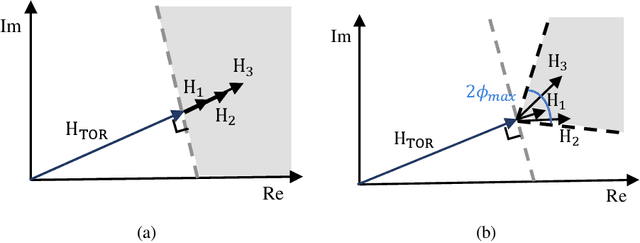

Multi-Person Passive WiFi Indoor Localization with Intelligent Reflecting Surface

Jan 05, 2022

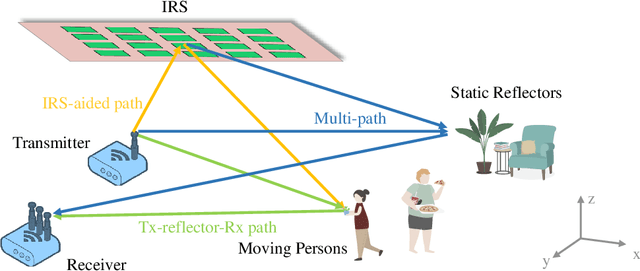

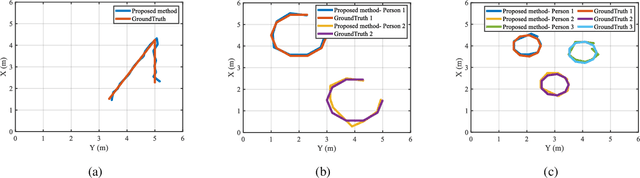

Abstract:The past years have witnessed increasing research interest in achieving passive human localization with commodity WiFi devices. However, due to the fundamentally limited spatial resolution of WiFi signals, it is still very difficult to achieve accurate localization with existing commodity WiFi devices. To tackle this problem, in this paper, we propose to exploit the degrees of freedom provided by the Intelligent Reflecting Surface (IRS), which is composed of a large number of controllable reflective elements, to modulate the spatial distribution of WiFi signals and thus break the spatial resolution limitation of WiFi signals to achieve accurate localization. Specifically, in the single-person scenario, we derive the closed-form solution to optimally control the phase shift of the IRS elements. In the multi-person scenario, we propose a Side-lobe Cancellation Algorithm to eliminate the near-far effect and achieve accurate localization of multiple persons in an iterative manner. Extensive simulation results demonstrate that without any change to the existing WiFi infrastructure, the proposed framework can locate multiple moving persons passively with sub-centimeter accuracy under multipath interference and random noise.
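
The abstract does not give its closed-form phase solution, so the sketch below shows only the generic textbook idea behind IRS phase control: co-phasing, where each element cancels the phase of its own cascaded path so that all reflections add coherently at the point of interest. The function names and the toy channel gains are hypothetical, and this is not claimed to be the paper's derivation.

```python
import cmath


def co_phase(cascaded_gains):
    """Phase shift (radians) per element that rotates each reflected path to zero phase.

    cascaded_gains holds one complex gain per IRS element for the
    transmitter -> element -> target path (hypothetical toy values here).
    """
    return [-cmath.phase(g) for g in cascaded_gains]


def combined_amplitude(cascaded_gains, phase_shifts):
    """Magnitude of the sum of all reflected paths after applying the phase shifts."""
    total = sum(g * cmath.exp(1j * p) for g, p in zip(cascaded_gains, phase_shifts))
    return abs(total)


# Three elements with amplitudes 1.0, 0.5, 0.8 and arbitrary path phases.
gains = [cmath.rect(1.0, 0.3), cmath.rect(0.5, 2.0), cmath.rect(0.8, -1.2)]
aligned = combined_amplitude(gains, co_phase(gains))
unaligned = combined_amplitude(gains, [0.0, 0.0, 0.0])
```

With co-phasing, the combined amplitude equals the sum of the individual path amplitudes, which is the largest value any choice of phase shifts can reach.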

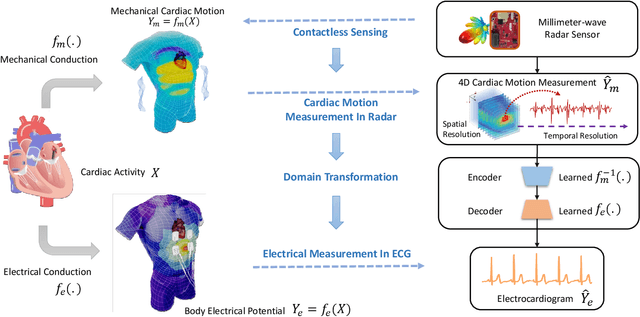

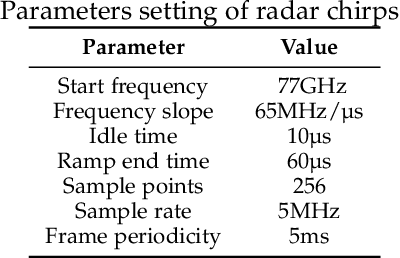

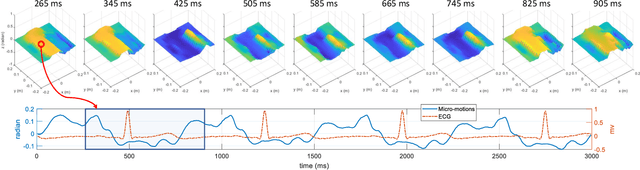

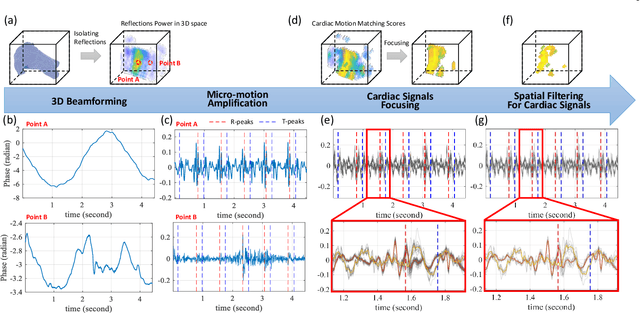

Contactless Electrocardiogram Monitoring with Millimeter Wave Radar

Dec 21, 2021

Abstract:The electrocardiogram (ECG) has always been an important biomedical test to diagnose cardiovascular diseases. Current approaches for ECG monitoring are based on body-attached electrodes, leading to an uncomfortable user experience. Therefore, contactless ECG monitoring has drawn tremendous attention, yet it remains unsolved. In fact, cardiac electrical and mechanical activities are coupled in a well-coordinated pattern. In this paper, we achieve contactless ECG monitoring by breaking the boundary between the cardiac mechanical and electrical activity. Specifically, we develop a millimeter-wave radar system to measure cardiac mechanical activity and reconstruct the ECG without any contact. To measure the cardiac mechanical activity comprehensively, we propose a series of signal processing algorithms to extract 4D cardiac motions from radio frequency (RF) signals. Furthermore, we design a deep neural network to solve the cardiac-related domain transformation problem and achieve end-to-end reconstruction mapping from the RF input to the ECG output. The experimental results show that our contactless ECG measurements achieve timing accuracy of cardiac electrical events with median error below 14 ms and morphology accuracy with median Pearson correlation of 90% and median root-mean-square error of 0.081 mV compared to the ground-truth ECG. These results indicate that the system enables contactless, continuous, and accurate ECG monitoring.
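
The morphology metrics named above, Pearson correlation and root-mean-square error, follow standard definitions. The sketch below computes both for two equal-length waveforms; the sample values are toy data and the function names are illustrative, not from the paper.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def rmse(a, b):
    """Root-mean-square error between two equal-length sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


# Toy waveforms: the "reconstruction" is a scaled copy of the reference.
reference = [1.0, 2.0, 3.0]
reconstruction = [2.0, 4.0, 6.0]
correlation = pearson(reference, reconstruction)
error = rmse(reference, reconstruction)
```

The toy pair shows why the two metrics are reported together: a scaled copy has perfect correlation but a non-zero RMSE, so correlation captures shape while RMSE captures amplitude agreement.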

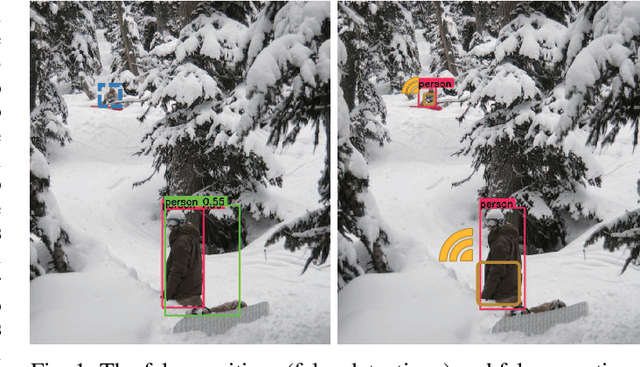

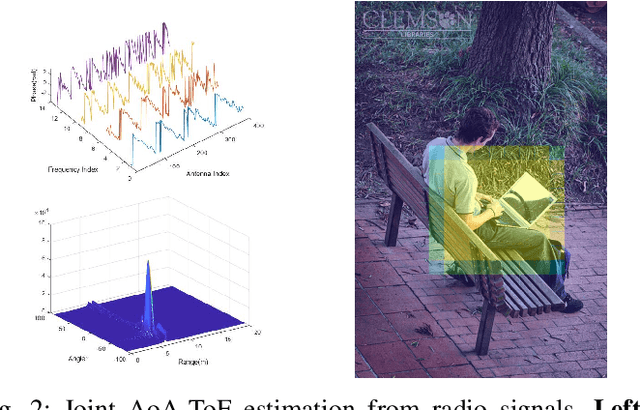

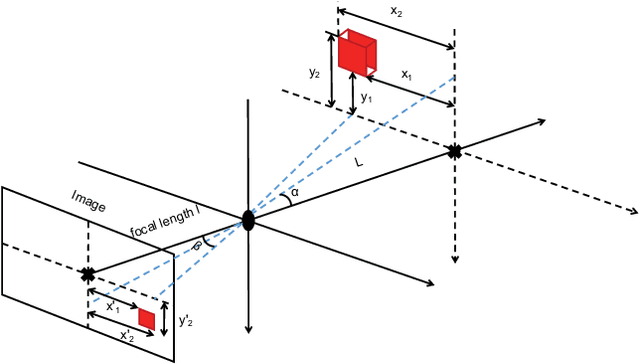

Radio-Assisted Human Detection

Dec 16, 2021

Abstract:In this paper, we propose a radio-assisted human detection framework by incorporating radio information into state-of-the-art detection methods, including anchor-based one-stage detectors and two-stage detectors. We extract the radio localization and identifier information from the radio signals to assist human detection, which greatly alleviates the problem of false positives and false negatives. For both detectors, we use confidence score revision based on the radio localization to improve the detection performance. For two-stage detection methods, we propose to utilize the region proposals generated from radio localization rather than relying on a region proposal network (RPN). Moreover, with the radio identifier information, a non-max suppression method with the radio localization constraint is also proposed to further suppress false detections and reduce missed detections. Experiments on the simulative Microsoft COCO dataset and Caltech pedestrian datasets show that the mean average precision (mAP) and the miss rate of the state-of-the-art detection methods can be improved with the aid of radio information. Finally, we conduct experiments in real-world scenarios to demonstrate the feasibility of our proposed method in practice.
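
As background for the non-max suppression step mentioned above, here is a minimal sketch of standard greedy NMS on axis-aligned boxes. It is the conventional baseline only: the radio localization constraint described in the abstract is not specified there and is not reproduced. The box format `(x1, y1, x2, y2)` and the 0.5 threshold are assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping a kept one."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two heavily overlapping detections and one separate detection.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

Plain NMS can merge two nearby people into one detection when their boxes overlap, which is the kind of missed detection that an additional localization constraint is meant to reduce.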

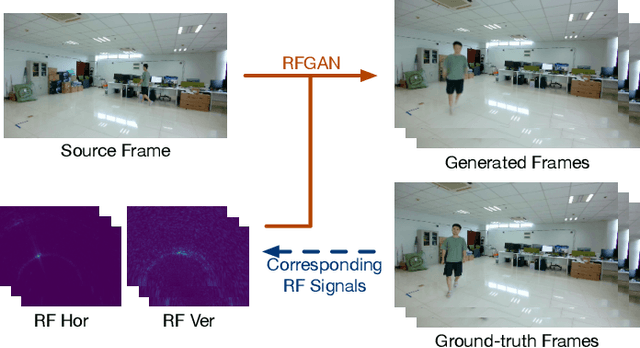

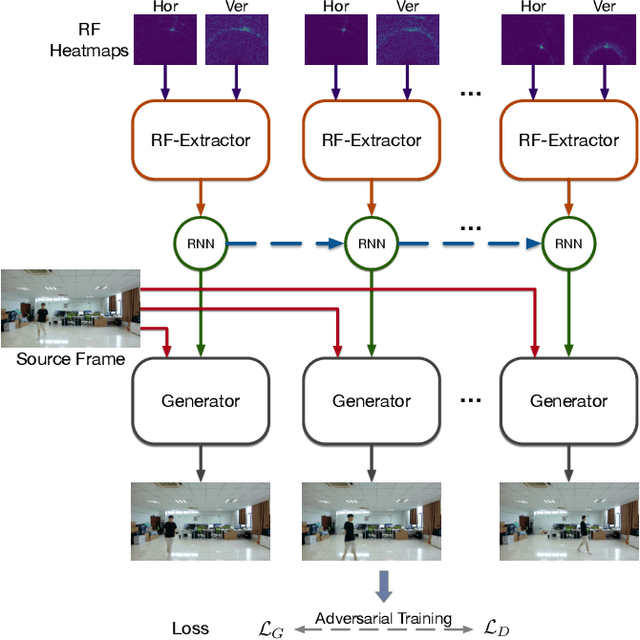

RFGAN: RF-Based Human Synthesis

Dec 07, 2021

Abstract:This paper demonstrates human synthesis based on Radio Frequency (RF) signals, leveraging the fact that RF signals can record human movements through the signal reflections off the human body. Different from existing RF sensing works that can only perceive humans roughly, this paper aims to generate fine-grained optical human images by introducing a novel cross-modal RFGAN model. Specifically, we first build a radio system equipped with horizontal and vertical antenna arrays to transceive RF signals. Since the reflected RF signals are processed as obscure signal projection heatmaps on the horizontal and vertical planes, we design an RF-Extractor with RNN in RFGAN for RF heatmap encoding and combining to obtain the human activity information. Then we inject the information extracted by the RF-Extractor and RNN as the condition into the GAN using the proposed RF-based adaptive normalizations. Finally, we train the whole model in an end-to-end manner. To evaluate our proposed model, we create two cross-modal datasets (RF-Walk & RF-Activity) that contain thousands of optical human activity frames and corresponding RF signals. Experimental results show that the RFGAN can generate target human activity frames using RF signals. To the best of our knowledge, this is the first work to generate optical images based on RF signals.

High-Resolution WiFi Imaging with Reconfigurable Intelligent Surfaces

Dec 01, 2021

Abstract:WiFi-based imaging enables pervasive sensing in a privacy-preserving and cost-effective way. However, most existing methods either require specialized hardware modification or suffer from poor imaging performance due to the fundamental limit of off-the-shelf commodity WiFi devices in spatial resolution. We observe that the recently developed reconfigurable intelligent surface (RIS) could be a promising solution to overcome these challenges. Thus, in this paper, we propose an RIS-aided WiFi imaging framework to achieve high-resolution imaging with off-the-shelf WiFi devices. Specifically, we first design a beamforming method to achieve the first-stage imaging by separating the signals from different spatial locations with the aid of the RIS. Then, we propose an optimization-based super-resolution imaging algorithm by leveraging the low-rank nature of the reconstructed object. During the optimization, we also explicitly take into account the effect of finite phase quantization in the RIS to avoid the resolution degradation due to quantization errors. Simulation results demonstrate that our framework achieves a median root mean square error (RMSE) of 0.03 and a median structural similarity (SSIM) of 0.52. The visual results show that high-resolution imaging results are achieved with simulation signals at 5 GHz that are matched with commercial WiFi 802.11n/ac protocols.
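
To make "finite phase quantization" concrete, the sketch below snaps a desired continuous phase to the nearest of 2^b uniformly spaced levels, as a b-bit RIS element would. Uniform spacing and nearest-level rounding are assumptions for illustration; the abstract does not describe how the paper models or compensates for this effect.

```python
import math


def quantize_phase(phase, bits):
    """Snap a phase in radians to the nearest of 2**bits uniform levels in [0, 2*pi)."""
    levels = 2 ** bits
    step = 2.0 * math.pi / levels
    # The modulo wraps the top level back to 0, since 2*pi and 0 are the same phase.
    return (round(phase / step) % levels) * step


# A 1-bit element can only apply 0 or pi; a 2-bit element adds pi/2 and 3*pi/2.
one_bit_low = quantize_phase(0.1, 1)
one_bit_high = quantize_phase(3.0, 1)
two_bit_wrap = quantize_phase(6.2, 2)
```

The worst-case phase error is half a step, π / 2^b, so each extra bit halves it.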

Unsupervised Domain Adaptation for Device-free Gesture Recognition

Nov 20, 2021

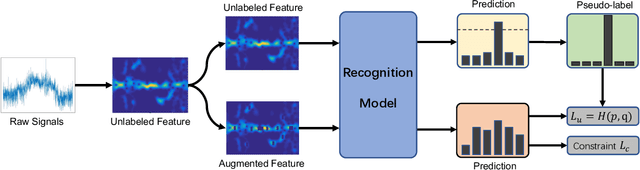

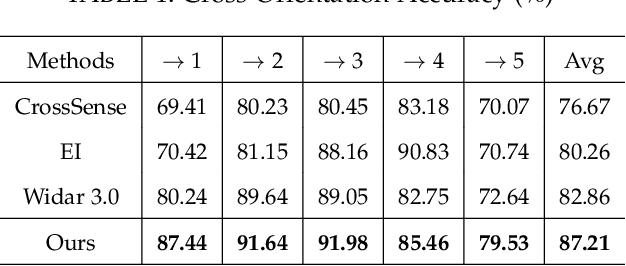

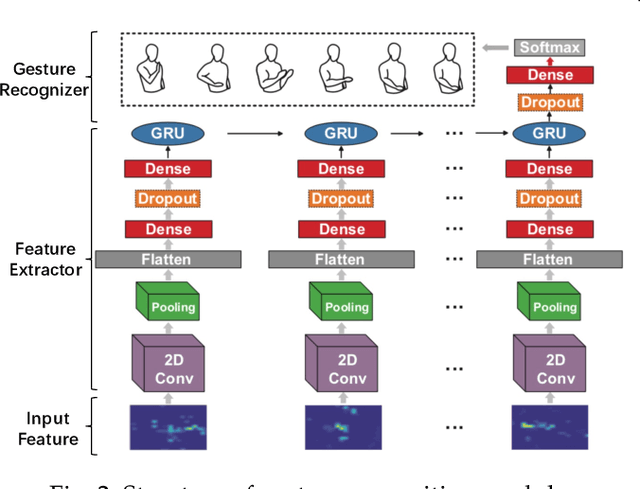

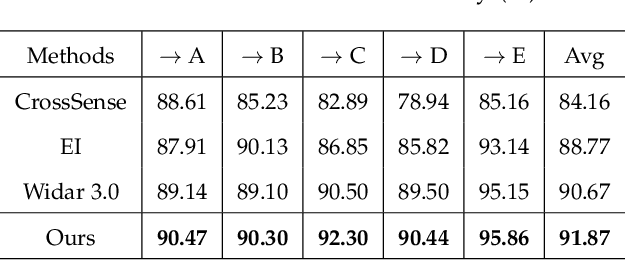

Abstract:Device-free human gesture recognition with Radio Frequency (RF) signals has attained acclaim due to the omnipresence, privacy protection, and broad coverage of RF signals. However, neural network models trained for recognition with data collected from a specific domain suffer from significant performance degradation when applied to a new domain. To tackle this challenge, we propose an unsupervised domain adaptation framework for device-free gesture recognition by making effective use of the unlabeled target domain data. Specifically, we apply pseudo labeling and consistency regularization with elaborated design on target domain data to produce pseudo labels and align instance features of the target domain. Then, we design two data augmentation methods by randomly erasing the input data to enhance the robustness of the model. Furthermore, we apply a confidence control constraint to tackle the overconfidence problem. We conduct extensive experiments on a public WiFi dataset and a public millimeter wave radar dataset. The experimental results demonstrate the superior effectiveness of the proposed framework.
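
Pseudo labeling, as mentioned above, is commonly implemented by keeping a model's prediction on an unlabeled sample only when it is sufficiently confident. The sketch below shows that generic recipe; the 0.9 threshold and the function names are illustrative assumptions, and the paper's "elaborated design" and confidence control constraint are not reproduced.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pseudo_label(logits, threshold=0.9):
    """Return the predicted class index if its probability meets the threshold, else None."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best if probs[best] >= threshold else None


# A confident prediction is kept; an ambiguous one is discarded.
confident = pseudo_label([5.0, 0.0, 0.0])
ambiguous = pseudo_label([1.0, 0.9, 0.8])
```

Samples that return `None` are typically left out of the pseudo-label loss for that training step, which limits the damage from wrong labels early in adaptation.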
