Zhi Wu

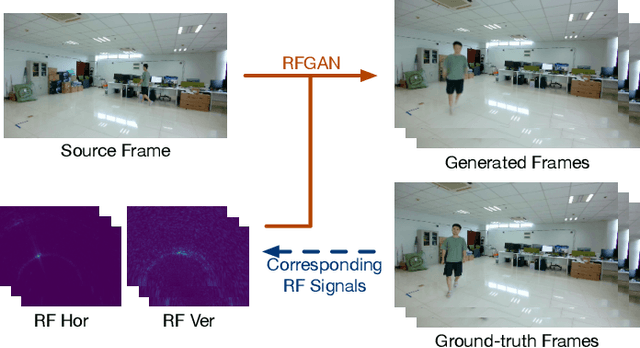

RFMask: A Simple Baseline for Human Silhouette Segmentation with Radio Signals

Jan 25, 2022

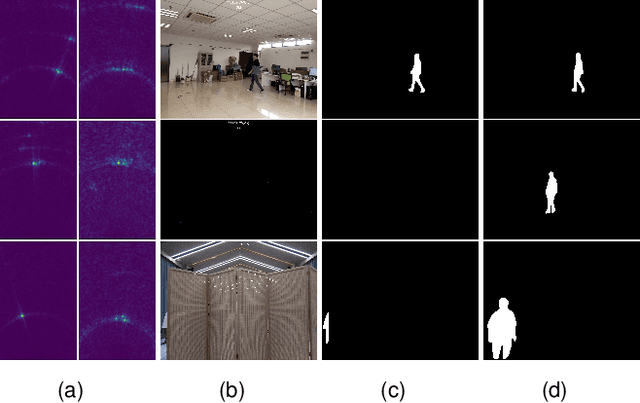

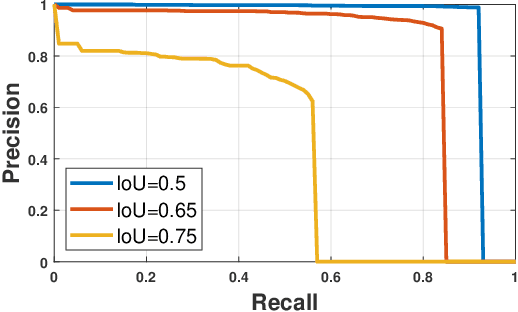

Abstract: Human silhouette segmentation, originally defined in computer vision, has achieved promising results for understanding human activities. However, physical limitations cause existing systems based on optical cameras to suffer severe performance degradation under low illumination, smoke, and/or opaque obstructions. To overcome these limitations, in this paper we propose to use radio signals, which can traverse obstacles and are unaffected by lighting conditions, to achieve silhouette segmentation. The proposed RFMask framework is composed of three modules. It first transforms RF signals captured by millimeter wave radar on two planes into the spatial domain and suppresses interference with the signal processing module. Then, it locates human reflections on RF frames and extracts features from surrounding signals with the human detection module. Finally, the features extracted from RF frames are aggregated with an attention-based mask generation module. To verify our proposed framework, we collect a dataset containing 804,760 radio frames and 402,380 camera frames with human activities under various scenes. Experimental results show that the proposed framework can achieve impressive human silhouette segmentation even under challenging scenarios (such as low light and occlusion) where traditional optical-camera-based methods fail. To the best of our knowledge, this is the first investigation into segmenting human silhouettes based on millimeter wave signals. We hope that our work can serve as a baseline and inspire further research that performs vision tasks with radio signals. The dataset and code will be made public.

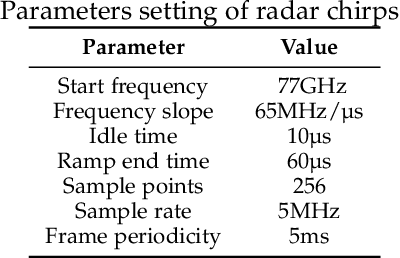

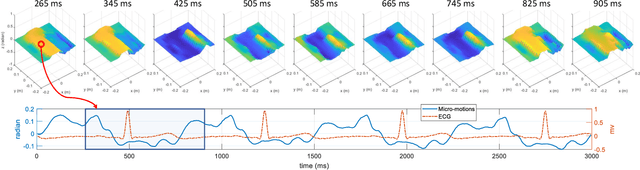

Contactless Electrocardiogram Monitoring with Millimeter Wave Radar

Dec 21, 2021

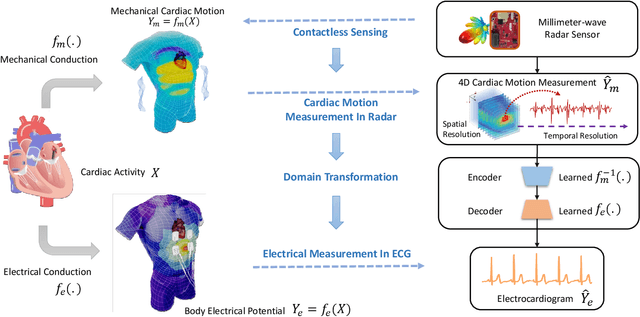

Abstract: The electrocardiogram (ECG) has long been an important biomedical test for diagnosing cardiovascular diseases. Current approaches to ECG monitoring rely on body-attached electrodes, leading to an uncomfortable user experience. Contactless ECG monitoring has therefore drawn tremendous attention, yet it remains unsolved. In fact, cardiac electrical and mechanical activities are coupled in a well-coordinated pattern. In this paper, we achieve contactless ECG monitoring by breaking the boundary between cardiac mechanical and electrical activity. Specifically, we develop a millimeter-wave radar system to measure cardiac mechanical activity and reconstruct the ECG without any contact. To measure the cardiac mechanical activity comprehensively, we propose a series of signal processing algorithms to extract 4D cardiac motions from radio frequency (RF) signals. Furthermore, we design a deep neural network to solve the cardiac-related domain transformation problem and achieve an end-to-end reconstruction mapping from the RF input to the ECG output. The experimental results show that our contactless ECG measurements achieve timing accuracy of cardiac electrical events with a median error below 14 ms, and morphology accuracy with a median Pearson correlation of 90% and a median root-mean-square error of 0.081 mV compared to the ground-truth ECG. These results indicate that the system has the potential to enable contactless, continuous, and accurate ECG monitoring.
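The two morphology metrics named in the abstract above, Pearson correlation and root-mean-square error, can be illustrated with a minimal sketch. This is not the authors' evaluation code: the function names `pearson_correlation` and `rmse` and the sample values are hypothetical, and the sketch assumes the reconstructed and reference ECG traces are equal-length sequences sampled at the same instants.

```python
import math


def pearson_correlation(x, y):
    """Pearson correlation between two equal-length sequences (illustrative helper)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Covariance and variances are left unnormalised; the 1/n factors cancel.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def rmse(x, y):
    """Root-mean-square error between two equal-length sequences (illustrative helper)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)) / len(x))


# Hypothetical sample values in mV, chosen only to exercise the functions.
reference = [0.00, 0.10, 0.90, 0.20, 0.05]
reconstructed = [0.02, 0.12, 0.85, 0.25, 0.03]

print(pearson_correlation(reference, reconstructed))
print(rmse(reference, reconstructed))
```

Pearson correlation is insensitive to amplitude scaling and offset, so it captures waveform shape, while RMSE measures absolute deviation in the signal's own units; reporting both, as the abstract does, covers each aspect.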

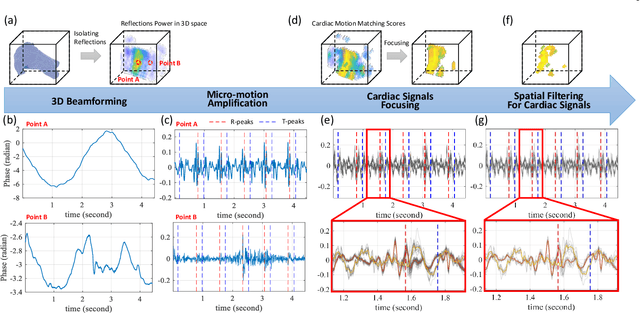

RFGAN: RF-Based Human Synthesis

Dec 07, 2021

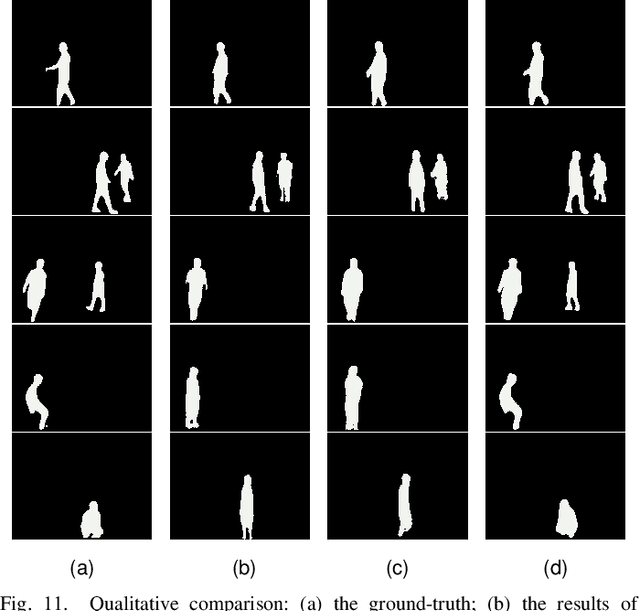

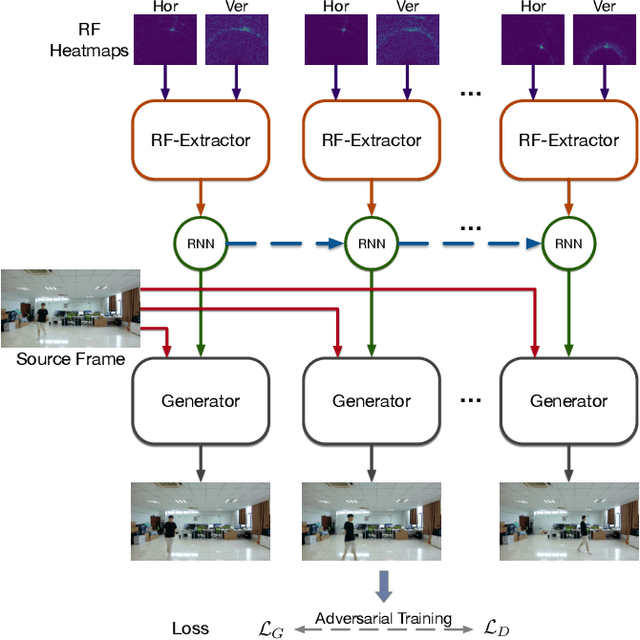

Abstract: This paper demonstrates human synthesis based on Radio Frequency (RF) signals, leveraging the fact that RF signals can record human movements through the signal reflections off the human body. Unlike existing RF sensing works that can only perceive humans roughly, this paper aims to generate fine-grained optical human images by introducing a novel cross-modal RFGAN model. Specifically, we first build a radio system equipped with horizontal and vertical antenna arrays to transceive RF signals. Since the reflected RF signals are processed as obscure signal projection heatmaps on the horizontal and vertical planes, we design an RF-Extractor with an RNN in RFGAN to encode and combine the RF heatmaps and obtain the human activity information. We then inject the information extracted by the RF-Extractor and RNN as the condition into the GAN using the proposed RF-based adaptive normalizations. Finally, we train the whole model in an end-to-end manner. To evaluate our proposed model, we create two cross-modal datasets (RF-Walk & RF-Activity) that contain thousands of optical human activity frames and corresponding RF signals. Experimental results show that RFGAN can generate target human activity frames using RF signals. To the best of our knowledge, this is the first work to generate optical images based on RF signals.
