Dogan Corus

On Steady-State Evolutionary Algorithms and Selective Pressure: Why Inverse Rank-Based Allocation of Reproductive Trials is Best

Mar 18, 2021

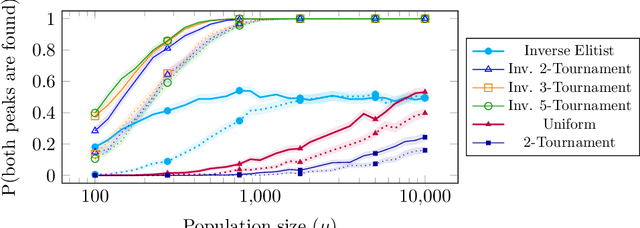

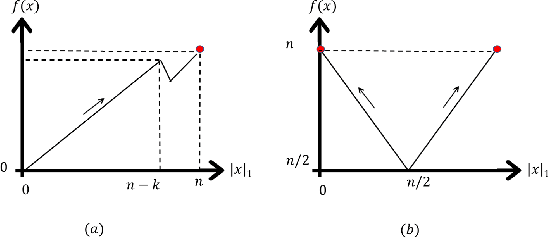

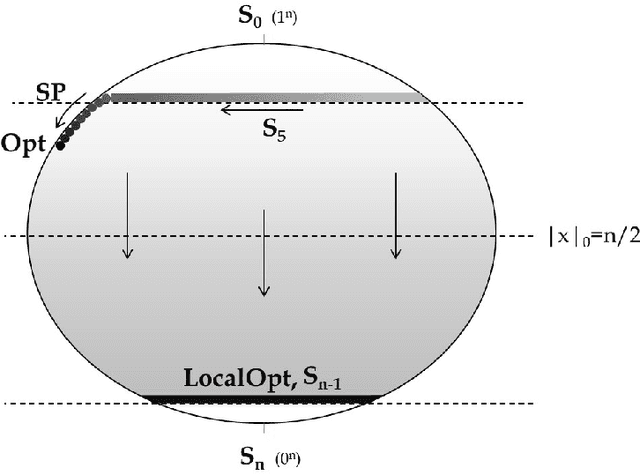

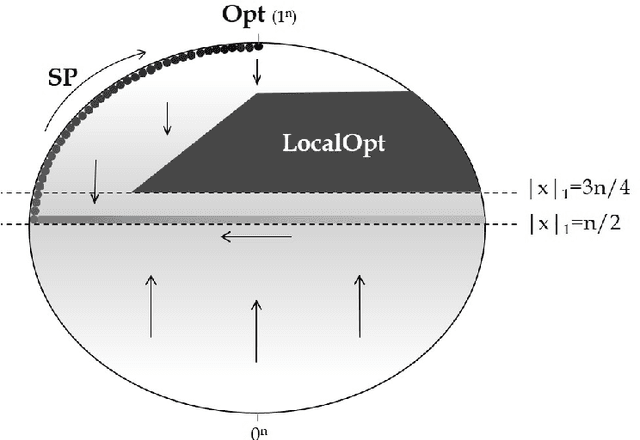

Abstract: We analyse the impact of selective pressure on the global optimisation capabilities of steady-state EAs. For the standard bimodal benchmark function \textsc{TwoMax} we rigorously prove that using uniform parent selection leads to exponential runtimes with high probability to locate both optima for the standard ($\mu$+1)~EA and ($\mu$+1)~RLS with any polynomial population sizes. On the other hand, we prove that selecting the worst individual as parent leads to efficient global optimisation with overwhelming probability for reasonable population sizes. Since always selecting the worst individual may have detrimental effects for escaping from local optima, we consider the performance of stochastic parent selection operators with low selective pressure for a function class called \textsc{TruncatedTwoMax} where one slope is shorter than the other. An experimental analysis shows that EAs equipped with inverse tournament selection with small tournament sizes, where the loser of the tournament is selected for reproduction, globally optimise \textsc{TwoMax} efficiently and effectively escape from local optima of \textsc{TruncatedTwoMax} with high probability. Thus they identify both optima efficiently while uniform (or stronger) selection fails in theory and in practice. We then show the power of inverse selection on function classes from the literature where populations are essential by providing rigorous proofs or experimental evidence that it outperforms uniform selection equipped with or without a restart strategy. We conclude the paper by confirming our theoretical insights with an empirical analysis of the different selective pressures on standard benchmarks of the classical MaxSat and Multidimensional Knapsack Problems.
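To make the selection mechanism concrete, the following is a minimal sketch of one generation of a ($\mu$+1)~EA with inverse tournament selection on \textsc{TwoMax}. It is an illustration under assumptions, not the authors' implementation: the function names, the mutation rate of 1/n, sampling the tournament with replacement, and the deterministic tie-breaking when removing the worst individual are all choices made here.

```python
import random

def twomax(x):
    """TwoMax: the larger of the number of ones and the number of zeros."""
    ones = sum(x)
    return max(ones, len(x) - ones)

def inverse_tournament(population, fitness, k, rng=random):
    """Sample k individuals uniformly (with replacement, an assumption)
    and return the loser, i.e. the one with the lowest fitness."""
    contestants = [rng.choice(population) for _ in range(k)]
    return min(contestants, key=fitness)

def mutate(x, rng=random):
    """Standard bit mutation: flip each bit independently with probability 1/n."""
    n = len(x)
    return [b ^ 1 if rng.random() < 1.0 / n else b for b in x]

def step(population, fitness, k, rng=random):
    """One (mu+1) generation: pick a parent, mutate it, remove one worst individual.
    Ties for the worst are broken by position here, purely for simplicity."""
    parent = inverse_tournament(population, fitness, k, rng)
    pool = population + [mutate(parent, rng)]
    worst = min(range(len(pool)), key=lambda i: fitness(pool[i]))
    return pool[:worst] + pool[worst + 1:]
```

A tournament size of k = 1 reduces to uniform parent selection, and larger k pushes selection towards always picking the worst individual, so k is the knob that controls how strongly selection is inverted.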

On Inversely Proportional Hypermutations with Mutation Potential

Mar 27, 2019

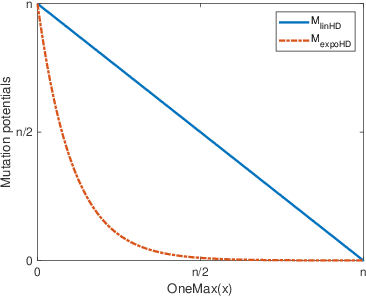

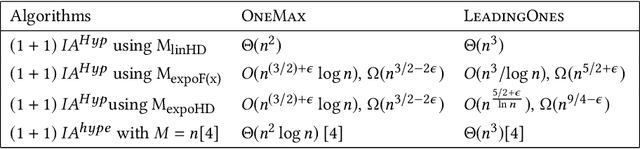

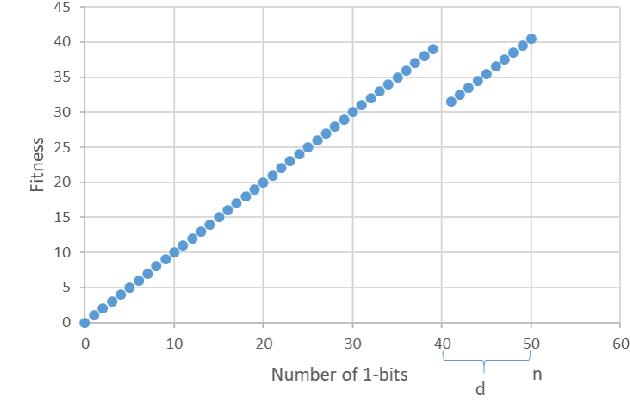

Abstract: Artificial Immune Systems (AIS) employing hypermutations with linear static mutation potential have recently been shown to be very effective at escaping local optima of combinatorial optimisation problems at the expense of being slower during the exploitation phase compared to standard evolutionary algorithms. In this paper we prove that considerable speed-ups in the exploitation phase may be achieved with dynamic inversely proportional mutation potentials (IPM) and argue that the potential should decrease inversely to the distance to the optimum rather than to the difference in fitness. Afterwards we define a simple (1+1)~Opt-IA, that uses IPM hypermutations and ageing, for realistic applications where optimal solutions are unknown. The aim of the AIS is to approximate the ideal behaviour of the inversely proportional hypermutations better and better as the search space is explored. We prove that such desired behaviour, and related speed-ups, occur for a well-studied bimodal benchmark function called \textsc{TwoMax}. Furthermore, we prove that the (1+1)~Opt-IA with IPM efficiently optimises a second bimodal function, \textsc{Cliff}, by escaping its local optima, while Opt-IA with static potential cannot and thus requires expected runtime exponential in the distance between the cliff and the optimum.

On the Benefits of Populations on the Exploitation Speed of Standard Steady-State Genetic Algorithms

Mar 26, 2019

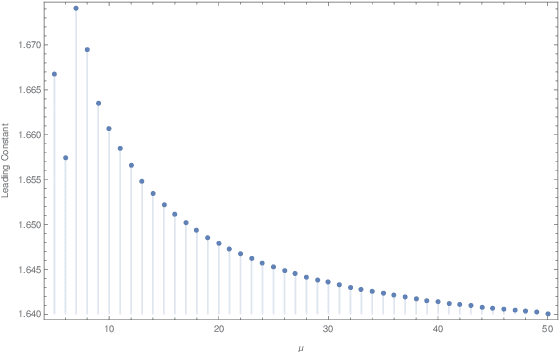

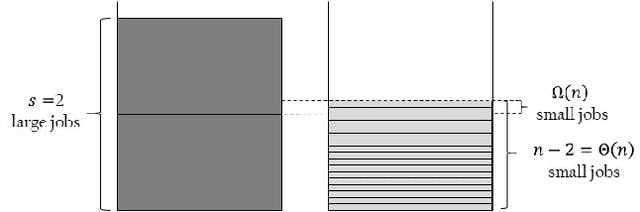

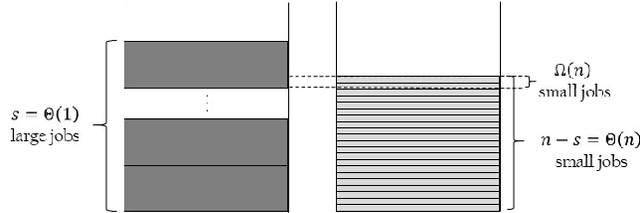

Abstract: It is generally accepted that populations are useful for the global exploration of multi-modal optimisation problems. Indeed, several theoretical results are available showing such advantages over single-trajectory search heuristics. In this paper we provide evidence that evolving populations via crossover and mutation may also benefit the optimisation time for hillclimbing unimodal functions. In particular, we prove bounds on the expected runtime of the standard ($\mu$+1)~GA for OneMax that are lower than its unary black box complexity and decrease in the leading constant with the population size up to $\mu=O(\sqrt{\log n})$. Our analysis suggests that the optimal mutation strategy is to flip two bits most of the time. To achieve the results we provide two interesting contributions to the theory of randomised search heuristics: 1) A novel application of drift analysis which compares absorption times of different Markov chains without defining an explicit potential function. 2) The inversion of fundamental matrices to calculate the absorption times of the Markov chains. The latter strategy was previously proposed in the literature but to the best of our knowledge this is the first time it has been used to show non-trivial bounds on expected runtimes.
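For readers unfamiliar with the second contribution: for an absorbing Markov chain whose transitions among transient states are given by a matrix $Q$, the fundamental matrix is $N = (I - Q)^{-1}$ and the vector of expected absorption times is $t = N\mathbf{1}$. The sketch below computes $t$ exactly by solving $(I - Q)t = \mathbf{1}$ with rational arithmetic. The function name and the toy chain in the usage example are assumptions chosen for illustration and are not the chains analysed in the paper.

```python
from fractions import Fraction

def expected_absorption_times(Q):
    """Return t = (I - Q)^{-1} 1 for the transient block Q of an absorbing chain.

    Q[i][j] is the probability of moving from transient state i to transient
    state j. The system (I - Q) t = 1 is solved by Gauss-Jordan elimination.
    """
    m = len(Q)
    # Augmented matrix [I - Q | 1] in exact rational arithmetic.
    A = [[Fraction(int(i == j)) - Fraction(Q[i][j]) for j in range(m)] + [Fraction(1)]
         for i in range(m)]
    for col in range(m):
        # I - Q is invertible for an absorbing chain, so a non-zero pivot exists.
        pivot = next(r for r in range(col, m) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        A[col] = [v / A[col][col] for v in A[col]]
        for r in range(m):
            if r != col and A[r][col] != 0:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [row[m] for row in A]

# Toy example: symmetric random walk on {0, 1, 2, 3} absorbed at 0 and 3.
# The transient states are 1 and 2, each moving to the other with probability 1/2.
half = Fraction(1, 2)
times = expected_absorption_times([[0, half], [half, 0]])
```

In the toy example both transient states have an expected absorption time of 2 steps, which matches the closed form $i(3 - i)$ for this walk.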

Artificial Immune Systems Can Find Arbitrarily Good Approximations for the NP-Hard Partition Problem

Jun 01, 2018

Abstract: Typical Artificial Immune System (AIS) operators such as hypermutations with mutation potential and ageing make it possible to efficiently overcome local optima from which Evolutionary Algorithms (EAs) struggle to escape. Such behaviour has been shown for artificial example functions such as Jump, Cliff or Trap constructed especially to show difficulties that EAs may encounter during the optimisation process. However, no evidence is available indicating that similar effects may also occur in more realistic problems. In this paper we perform an analysis for the standard NP-Hard \textsc{Partition} problem from combinatorial optimisation and rigorously show that hypermutations and ageing allow AISs to efficiently escape from local optima where standard EAs require exponential time. As a result we prove that while EAs and Random Local Search may get trapped on 4/3 approximations, AISs find arbitrarily good approximate solutions of ratio (1+$\epsilon$) for any constant $\epsilon$ within a time that is polynomial in the problem size and exponential only in $1/\epsilon$.

Fast Artificial Immune Systems

Jun 01, 2018

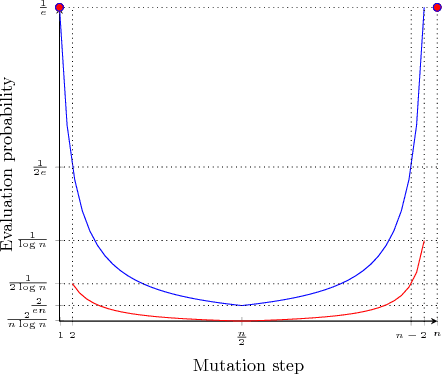

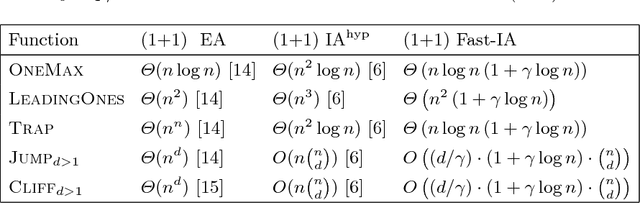

Abstract: Various studies have shown that characteristic Artificial Immune System (AIS) operators such as hypermutations and ageing can be very efficient at escaping local optima of multimodal optimisation problems. However, this efficiency comes at the expense of considerably slower runtimes during the exploitation phase compared to standard evolutionary algorithms. We propose modifications to the traditional 'hypermutations with mutation potential' (HMP) that allow them to be efficient at exploitation as well as maintaining their effective explorative characteristics. Rather than deterministically evaluating fitness after each bitflip of a hypermutation, we sample the fitness function stochastically with a 'parabolic' distribution which allows the 'stop at first constructive mutation' (FCM) variant of HMP to reduce the linear amount of wasted function evaluations when no improvement is found to a constant. By returning the best sampled solution during the hypermutation, rather than the first constructive mutation, we then turn the extremely inefficient HMP operator without FCM into a very effective operator for the standard Opt-IA AIS using hypermutation, cloning and ageing. We rigorously prove the effectiveness of the two proposed operators by analysing them on all problems where the performance of HMP is rigorously understood in the literature.

When Hypermutations and Ageing Enable Artificial Immune Systems to Outperform Evolutionary Algorithms

Apr 04, 2018

Abstract: We present a time complexity analysis of the Opt-IA artificial immune system (AIS). We first highlight the power and limitations of its distinguishing operators (i.e., hypermutations with mutation potential and ageing) by analysing them in isolation. Recent work has shown that ageing combined with local mutations can help escape local optima on a dynamic optimisation benchmark function. We generalise this result by rigorously proving that, compared to evolutionary algorithms (EAs), ageing leads to impressive speed-ups on the standard Cliff benchmark function both when using local and global mutations. Unless the stop at first constructive mutation (FCM) mechanism is applied, we show that hypermutations require exponential expected runtime to optimise any function with a polynomial number of optima. If instead FCM is used, the expected runtime is at most a linear factor larger than the upper bound achieved for any random local search algorithm using the artificial fitness levels method. Nevertheless, we prove that algorithms using hypermutations can be considerably faster than EAs at escaping local optima. An analysis of the complete Opt-IA reveals that it is efficient on the previously considered functions and highlights problems where the use of the full algorithm is crucial. We complete the picture by presenting a class of functions for which Opt-IA fails with overwhelming probability while standard EAs are efficient.
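As a rough illustration of the operator discussed above, here is a minimal sketch of a static hypermutation with FCM for a maximisation problem. The details are assumptions made for this sketch rather than the paper's exact definition: the potential is taken as M = c·n, the bits to flip are drawn without replacement, "constructive" means a strict improvement over the parent, and the last constructed solution is returned when no improvement is found.

```python
import random

def hypermutate_fcm(x, fitness, c=1.0, rng=random):
    """Static hypermutation with 'stop at first constructive mutation' (FCM).

    Flips up to M = c*n distinct bits one at a time, evaluates the fitness
    after every flip, and stops as soon as the parent's fitness is exceeded.
    Returns (offspring, evaluations); the parent's own evaluation is not counted.
    """
    n = len(x)
    potential = min(n, max(1, int(c * n)))
    parent_fitness = fitness(x)
    y = list(x)
    evaluations = 0
    for pos in rng.sample(range(n), potential):
        y[pos] ^= 1
        evaluations += 1
        if fitness(y) > parent_fitness:
            break  # first constructive mutation found
    return y, evaluations
```

The evaluation counter makes the cost visible: when no improving solution is met along the way, the operator spends all M evaluations, which is the linear overhead relative to local search mentioned in the abstract.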

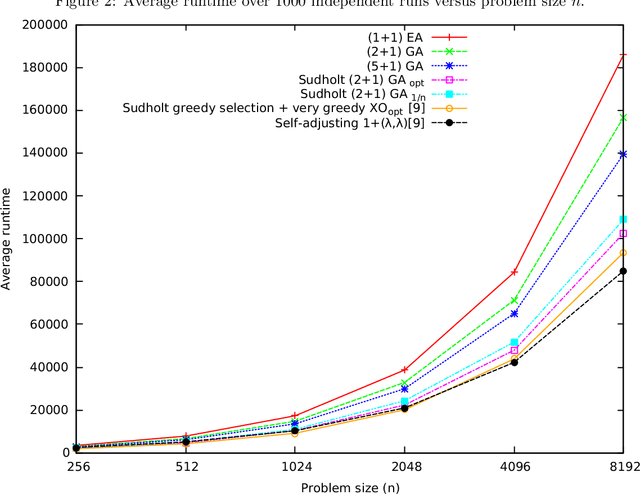

Standard Steady State Genetic Algorithms Can Hillclimb Faster than Mutation-only Evolutionary Algorithms

Aug 25, 2017

Abstract: Explaining to what extent the real power of genetic algorithms lies in the ability of crossover to recombine individuals into higher quality solutions is an important problem in evolutionary computation. In this paper we show how the interplay between mutation and crossover can make genetic algorithms hillclimb faster than their mutation-only counterparts. We devise a Markov Chain framework that allows us to rigorously prove an upper bound on the runtime of standard steady state genetic algorithms to hillclimb the OneMax function. The bound establishes that the steady-state genetic algorithms are 25% faster than all standard bit mutation-only evolutionary algorithms with static mutation rate up to lower order terms for moderate population sizes. The analysis also suggests that larger populations may be faster than populations of size 2. We present a lower bound for a greedy (2+1) GA that matches the upper bound for populations larger than 2, rigorously proving that 2 individuals cannot outperform larger population sizes under greedy selection and greedy crossover up to lower order terms. In complementary experiments the best population size is greater than 2 and the greedy genetic algorithms are faster than standard ones, further suggesting that the derived lower bound also holds for the standard steady state (2+1) GA.
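The interplay of crossover and mutation can be pictured with a minimal sketch of one generation of a steady-state ($\mu$+1) GA on OneMax. This is a hedged illustration, not the exact algorithm analysed in the paper: uniform parent selection with replacement, uniform crossover, a mutation rate of c/n, and positional tie-breaking when discarding the worst individual are assumptions made here.

```python
import random

def onemax(x):
    """OneMax: the number of one-bits in the bit string."""
    return sum(x)

def uniform_crossover(a, b, rng=random):
    """Take each bit from either parent with probability 1/2."""
    return [ai if rng.random() < 0.5 else bi for ai, bi in zip(a, b)]

def bit_mutation(x, c=1.0, rng=random):
    """Standard bit mutation with rate c/n."""
    n = len(x)
    return [b ^ 1 if rng.random() < c / n else b for b in x]

def ga_step(population, fitness, c=1.0, rng=random):
    """One steady-state generation: recombine two uniformly chosen parents,
    mutate the result, then discard one worst individual of the mu+1."""
    p1, p2 = rng.choice(population), rng.choice(population)
    child = bit_mutation(uniform_crossover(p1, p2, rng), c, rng)
    pool = population + [child]
    worst = min(range(len(pool)), key=lambda i: fitness(pool[i]))
    return pool[:worst] + pool[worst + 1:]
```

Crossover only has an effect when the two parents differ, so in this sketch its contribution depends on how much diversity the population retains between improvements.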

Level-based Analysis of Genetic Algorithms and other Search Processes

Oct 27, 2016

Abstract: Understanding how the time-complexity of evolutionary algorithms (EAs) depends on their parameter settings and characteristics of fitness landscapes is a fundamental problem in evolutionary computation. Most rigorous results were derived using a handful of key analytic techniques, including drift analysis. However, since few of these techniques apply effortlessly to population-based EAs, most time-complexity results concern simplified EAs, such as the (1+1) EA. This paper describes the level-based theorem, a new technique tailored to population-based processes. It applies to any non-elitist process where offspring are sampled independently from a distribution depending only on the current population. Given conditions on this distribution, our technique provides upper bounds on the expected time until the process reaches a target state. We demonstrate the technique on several pseudo-Boolean functions, the sorting problem, and approximation of optimal solutions in combinatorial optimisation. The conditions of the theorem are often straightforward to verify, even for Genetic Algorithms and Estimation of Distribution Algorithms which were considered highly non-trivial to analyse. Finally, we prove that the theorem is nearly optimal for the processes considered. Given the information the theorem requires about the process, a much tighter bound cannot be proved.

A Parameterized Complexity Analysis of Bi-level Optimisation with Evolutionary Algorithms

Jan 09, 2014

Abstract: Bi-level optimisation problems have gained increasing interest in the field of combinatorial optimisation in recent years. With this paper, we start the runtime analysis of evolutionary algorithms for bi-level optimisation problems. We examine two NP-hard problems, the generalised minimum spanning tree problem (GMST), and the generalised travelling salesman problem (GTSP) in the context of parameterised complexity. For the generalised minimum spanning tree problem, we analyse the two approaches presented by Hu and Raidl (2012) with respect to the number of clusters that distinguish each other by the chosen representation of possible solutions. Our results show that a (1+1) EA working with the spanning nodes representation is not a fixed-parameter evolutionary algorithm for the problem, whereas the global structure representation enables the problem to be solved in fixed-parameter time. We present hard instances for each approach and show that the two approaches are highly complementary by proving that they solve each other's hard instances very efficiently. For the generalised travelling salesman problem, we analyse the problem with respect to the number of clusters in the problem instance. Our results show that a (1+1) EA working with the global structure representation is a fixed-parameter evolutionary algorithm for the problem.
