Diego R. Faria

Reducing Overconfidence Predictions for Autonomous Driving Perception

Feb 16, 2022

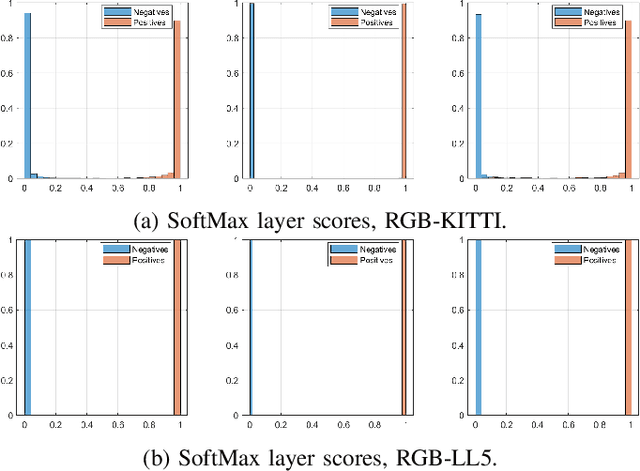

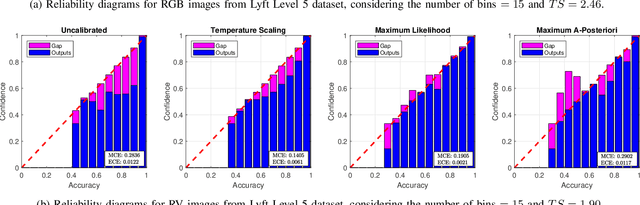

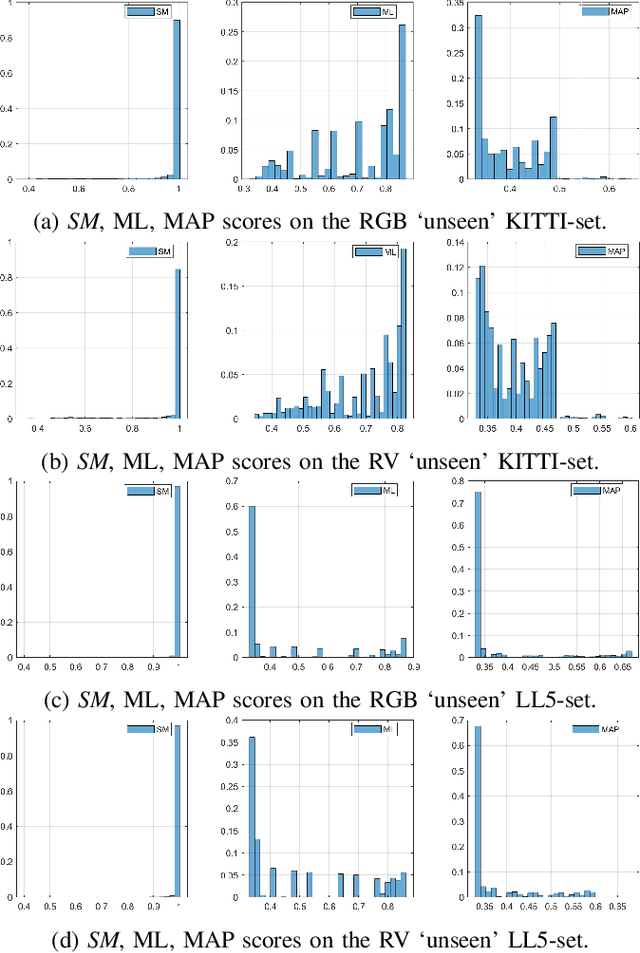

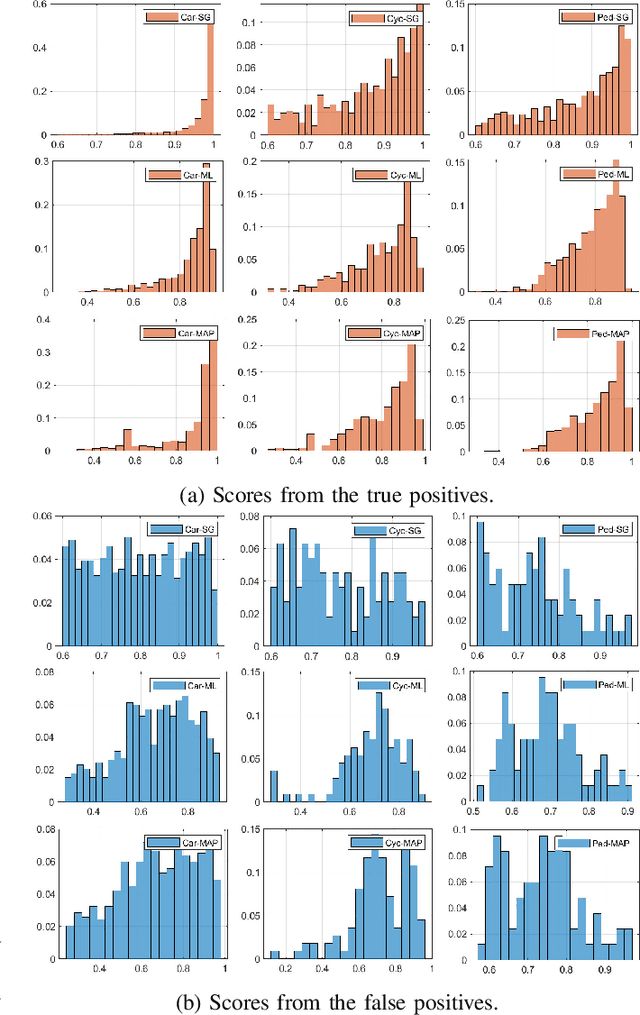

Abstract: In state-of-the-art deep learning for object recognition, SoftMax and Sigmoid functions are most commonly employed as the predictor outputs. Such layers often produce overconfident predictions rather than proper probabilistic scores, which can harm the decision-making of 'critical' perception systems applied in autonomous driving and robotics. Given this, this work proposes a probabilistic approach based on distributions calculated from the Logit layer scores of pre-trained networks. We demonstrate that Maximum Likelihood (ML) and Maximum a-Posteriori (MAP) functions are more suitable for probabilistic interpretations than SoftMax and Sigmoid-based predictions for object recognition. We explore distinct sensor modalities via RGB images and LiDAR (RV: range-view) data from the KITTI and Lyft Level-5 datasets, where our approach shows promising performance compared to the usual SoftMax and Sigmoid layers, with the benefit of enabling interpretable probabilistic predictions. Another advantage of the approach introduced in this paper is that the ML and MAP functions can be applied to existing trained networks, that is, the approach benefits from the output of the Logit layer of pre-trained networks. Thus, there is no need to carry out a new training phase since the ML and MAP functions are used in the test/prediction phase.
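The idea of replacing SoftMax with ML and MAP scores computed from logit distributions can be illustrated with a minimal sketch. The abstract does not say how the distributions are modelled, so this example assumes a per-class Gaussian fitted to the logit of the true class. The function names, the Gaussian choice, and the toy data are all hypothetical and not taken from the paper.

```python
import math

def fit_class_gaussians(train_logits, train_labels, num_classes):
    """For each class c, fit mean/std of the c-th logit over samples labelled c.
    Assumption: a Gaussian stands in for whatever density model the paper uses."""
    params = []
    for c in range(num_classes):
        values = [x[c] for x, y in zip(train_logits, train_labels) if y == c]
        mean = sum(values) / len(values)
        var = sum((v - mean) ** 2 for v in values) / len(values)
        params.append((mean, math.sqrt(var) + 1e-6))  # small floor avoids std == 0
    return params

def _pdf(x, mean, std):
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def ml_scores(logit_vec, params, eps=1e-12):
    """Normalised class likelihoods P(logit_c | class c)."""
    lik = [_pdf(x, m, s) + eps for x, (m, s) in zip(logit_vec, params)]
    total = sum(lik)
    return [v / total for v in lik]

def map_scores(logit_vec, params, priors, eps=1e-12):
    """Normalised likelihood times class prior."""
    joint = [(_pdf(x, m, s) + eps) * p
             for x, (m, s), p in zip(logit_vec, params, priors)]
    total = sum(joint)
    return [v / total for v in joint]

def softmax(logit_vec):
    peak = max(logit_vec)
    exps = [math.exp(v - peak) for v in logit_vec]
    total = sum(exps)
    return [v / total for v in exps]

# Toy logits from a hypothetical pre-trained two-class network.
train_logits = [[5.0, 1.0], [6.0, 0.0], [7.0, -1.0],
                [0.0, 4.0], [1.0, 5.0], [-1.0, 6.0]]
train_labels = [0, 0, 0, 1, 1, 1]
params = fit_class_gaussians(train_logits, train_labels, num_classes=2)

unusual = [20.0, 19.0]  # both logits lie far from anything seen in training
print("softmax:", softmax(unusual))
print("ML:     ", ml_scores(unusual, params))
print("MAP:    ", map_scores(unusual, params, priors=[0.5, 0.5]))
```

In this toy case SoftMax still favours class 0 for the unusual input, while the likelihood-based scores are close to uniform because neither logit resembles the training distribution. No retraining is involved: only the stored logits of an already trained network are used.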

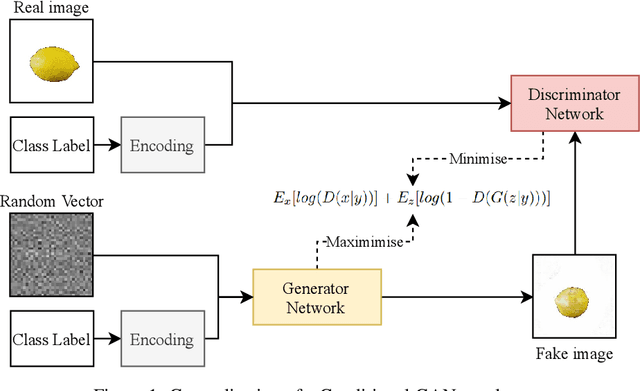

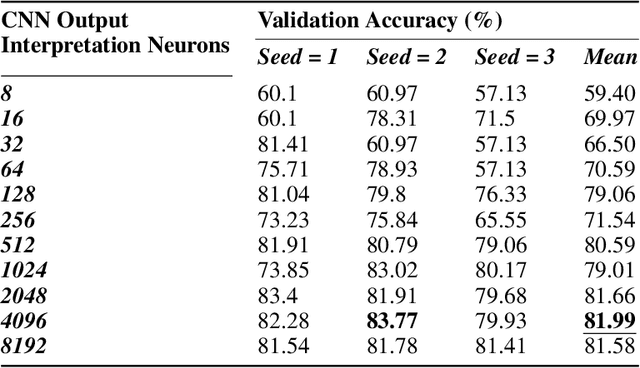

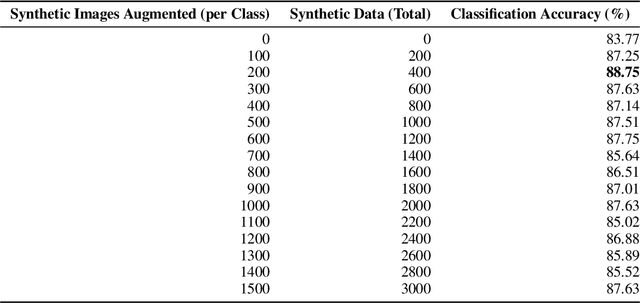

Fruit Quality and Defect Image Classification with Conditional GAN Data Augmentation

Apr 12, 2021

Abstract: Contemporary Artificial Intelligence technologies allow for the employment of Computer Vision to discern good crops from bad, providing a step in the pipeline of selecting healthy fruit from undesirable fruit, such as those which are mouldy or gangrenous. State-of-the-art works in the field report high accuracy results on small datasets (<1000 images), which are not representative of the population regarding real-world usage. The goals of this study are to further enable real-world usage by improving generalisation with data augmentation as well as to reduce overfitting and energy usage through model pruning. In this work, we suggest a machine learning pipeline that combines the ideas of fine-tuning, transfer learning, and generative model-based training data augmentation towards improving fruit quality image classification. A linear network topology search is performed to tune a VGG16 lemon quality classification model using a publicly-available dataset of 2690 images. We find that appending a 4096 neuron fully connected layer to the convolutional layers leads to an image classification accuracy of 83.77%. We then train a Conditional Generative Adversarial Network on the training data for 2000 epochs, and it learns to generate relatively realistic images. Grad-CAM analysis of the model trained on real photographs shows that the synthetic images can exhibit classifiable characteristics such as shape, mould, and gangrene. A higher image classification accuracy of 88.75% is then attained by augmenting the training with synthetic images, arguing that Conditional Generative Adversarial Networks have the ability to produce new data to alleviate issues of data scarcity. Finally, model pruning is performed via polynomial decay, where we find that the Conditional GAN-augmented classification network can retain 81.16% classification accuracy when compressed to 50% of its original size.
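The abstract mentions pruning "via polynomial decay" without giving the schedule. A commonly used form ramps the target sparsity from an initial to a final value along a polynomial curve, and at each step zeroes the smallest-magnitude weights. The sketch below assumes that form. The function names, the exponent of 3, and the final sparsity of 0.5 (chosen to echo the 50% compression figure) are illustrative assumptions, not the authors' configuration.

```python
def polynomial_decay_sparsity(step, begin_step, end_step,
                              initial_sparsity=0.0, final_sparsity=0.5, power=3.0):
    """Target fraction of zeroed weights at a given training step."""
    if step <= begin_step:
        return initial_sparsity
    if step >= end_step:
        return final_sparsity
    progress = (step - begin_step) / (end_step - begin_step)
    return final_sparsity + (initial_sparsity - final_sparsity) * (1.0 - progress) ** power

def magnitude_prune(weights, sparsity):
    """Zero the smallest-magnitude weights so that `sparsity` of them are zero."""
    k = int(round(sparsity * len(weights)))
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:k]:
        pruned[i] = 0.0
    return pruned

# Sparsity rises quickly at first and flattens towards the final value.
for step in (0, 250, 500, 750, 1000):
    print(step, polynomial_decay_sparsity(step, begin_step=0, end_step=1000))

print(magnitude_prune([0.1, -2.0, 0.05, 3.0], sparsity=0.5))
```

With an exponent above 1, most pruning happens early, leaving later training steps to recover accuracy at the final sparsity.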

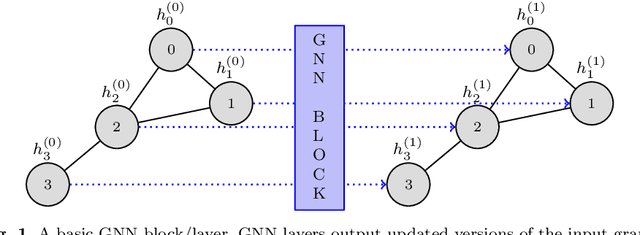

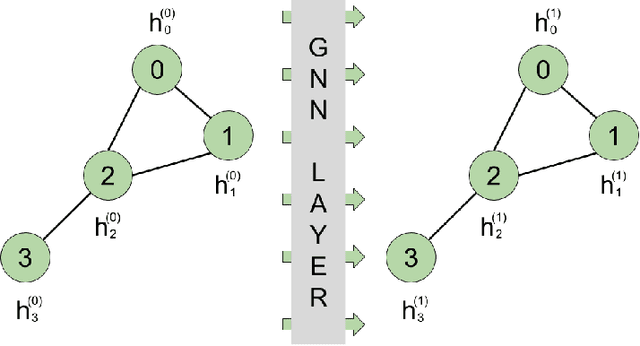

A Graph Neural Network to Model User Comfort in Robot Navigation

Feb 17, 2021

Abstract: Autonomous navigation is a key skill for assistive and service robots. To be successful, robots have to minimise the disruption caused to humans while moving. This implies predicting how people will move and complying with social conventions. Avoiding disrupting personal spaces, people's paths and interactions are examples of these social conventions. This paper leverages Graph Neural Networks to model robot disruption considering the movement of the humans and the robot so that the model built can be used by path planning algorithms. Along with the model, this paper presents an evolution of the dataset SocNav1 which considers the movement of the robot and the humans, and an updated scenario-to-graph transformation which is tested using different Graph Neural Network blocks. The model trained achieves close-to-human performance in the dataset. In addition to its accuracy, the main advantage of the approach is its scalability in terms of the number of social factors that can be considered in comparison with handcrafted models.

Chatbot Interaction with Artificial Intelligence: Human Data Augmentation with T5 and Language Transformer Ensemble for Text Classification

Oct 22, 2020

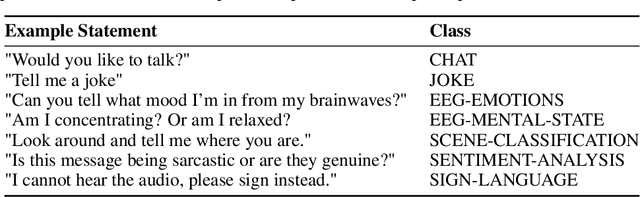

Abstract: In this work, we present the Chatbot Interaction with Artificial Intelligence (CI-AI) framework as an approach to the training of deep learning chatbots for task classification. The intelligent system augments human-sourced data via artificial paraphrasing in order to generate a large set of training data for further classical, attention, and language transformation-based learning approaches for Natural Language Processing. First, human beings are asked to paraphrase commands and questions for task identification for further execution of a machine. The commands and questions are split into training and validation sets. A total of 483 responses were recorded. Secondly, the training set is paraphrased by the T5 model in order to augment it with further data. Seven state-of-the-art transformer-based text classification algorithms (BERT, DistilBERT, RoBERTa, DistilRoBERTa, XLM, XLM-RoBERTa, and XLNet) are benchmarked for both sets after fine-tuning on the training data for two epochs. We find that all models are improved when training data is augmented by the T5 model, with an average increase in classification accuracy of 4.01%. The best result was the RoBERTa model trained on T5 augmented data, which achieved 98.96% classification accuracy. Finally, we found that an ensemble of the five best-performing transformer models via Logistic Regression of output label predictions led to an accuracy of 99.59% on the dataset of human responses. A highly-performing model allows the intelligent system to interpret human commands at the social-interaction level through a chatbot-like interface (e.g. "Robot, can we have a conversation?") and allows for better accessibility to AI by non-technical users.
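The final ensembling step, a Logistic Regression trained on the label predictions of the base models, is a form of stacking. The sketch below shows the mechanism on a deliberately small case: a binary task with three hypothetical base classifiers, where the paper uses five transformers on a multi-class task. The toy data, learning rate, and function names are assumptions made for illustration.

```python
import math

def train_logistic_regression(features, labels, lr=0.5, epochs=2000):
    """Full-batch gradient descent on the logistic loss; returns (weights, bias)."""
    n, d = len(features), len(features[0])
    weights, bias = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(features, labels):
            p = 1.0 / (1.0 + math.exp(-(sum(w * v for w, v in zip(weights, x)) + bias)))
            for j in range(d):
                grad_w[j] += (p - y) * x[j] / n
            grad_b += (p - y) / n
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias

def stacked_predict(x, weights, bias):
    score = sum(w * v for w, v in zip(weights, x)) + bias
    return 1 if score > 0 else 0

def majority_vote(x):
    return 1 if sum(x) * 2 > len(x) else 0

# Each row holds the labels predicted by three hypothetical base models (A, B, C).
# In this toy set model A is always right and B, C are uninformative.
base_predictions = [[1, 1, 0], [1, 0, 1], [1, 1, 1], [1, 0, 0],
                    [0, 0, 1], [0, 1, 0], [0, 0, 0], [0, 1, 1]]
true_labels = [1, 1, 1, 1, 0, 0, 0, 0]

weights, bias = train_logistic_regression(base_predictions, true_labels)
print("learned weights:", weights)
print("stacked vs vote on [1, 0, 0]:",
      stacked_predict([1, 0, 0], weights, bias), majority_vote([1, 0, 0]))
```

The meta-learner can learn which base models to trust, which a plain majority vote cannot. In the toy data it follows model A even when B and C outvote it.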

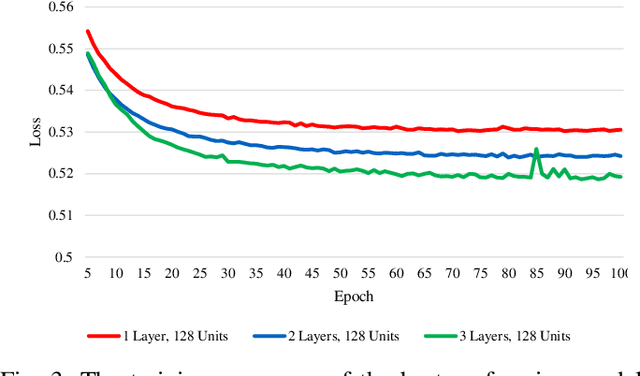

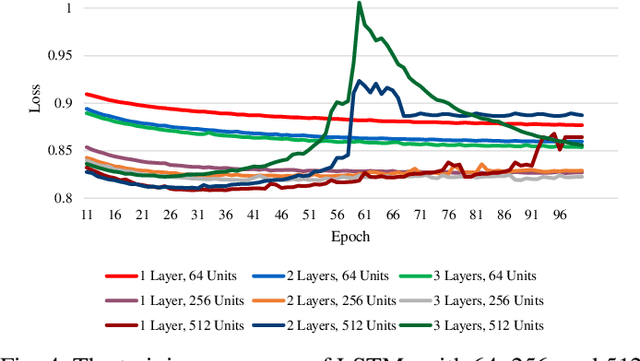

LSTM and GPT-2 Synthetic Speech Transfer Learning for Speaker Recognition to Overcome Data Scarcity

Jul 03, 2020

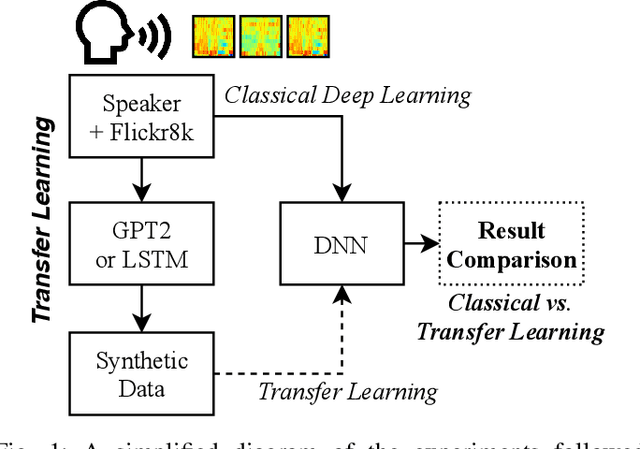

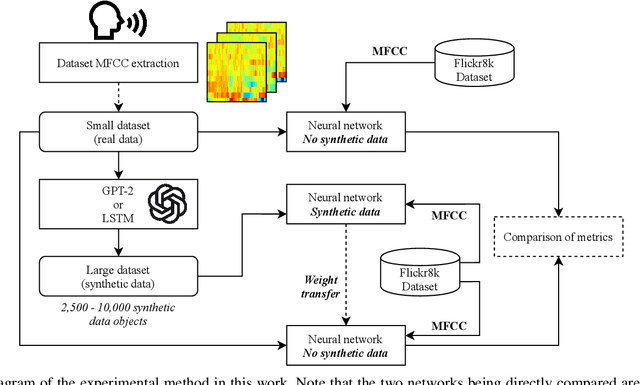

Abstract: In speech recognition problems, data scarcity often poses an issue due to the limited willingness of humans to provide large amounts of data for learning and classification. In this work, we take a set of 5 spoken Harvard sentences from 7 subjects and consider their MFCC attributes. Using character level LSTMs (supervised learning) and OpenAI's attention-based GPT-2 models, synthetic MFCCs are generated by learning from the data provided on a per-subject basis. A neural network is trained to classify the data against a large dataset of Flickr8k speakers and is then compared to a transfer learning network performing the same task but with an initial weight distribution dictated by learning from the synthetic data generated by the two models. The best results for all 7 subjects came from networks that had been exposed to synthetic data: the model pre-trained with LSTM-produced data achieved the best result 3 times and the GPT-2 equivalent 5 times (one subject had their best result from both models at a draw). Through these results, we argue that speaker classification can be improved by utilising a small amount of user data but with exposure to synthetically-generated MFCCs which then allow the networks to achieve near maximum classification scores.

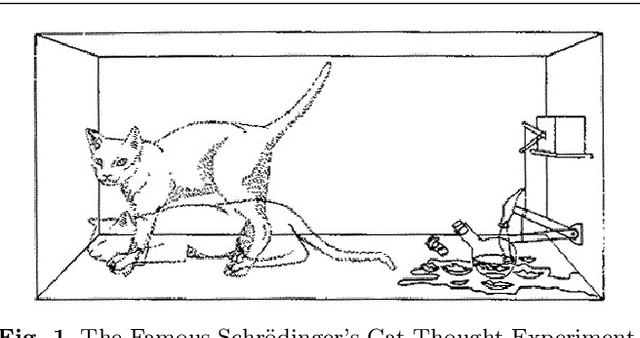

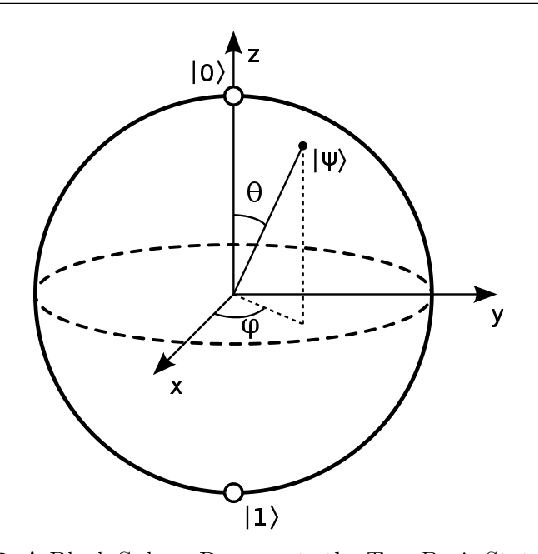

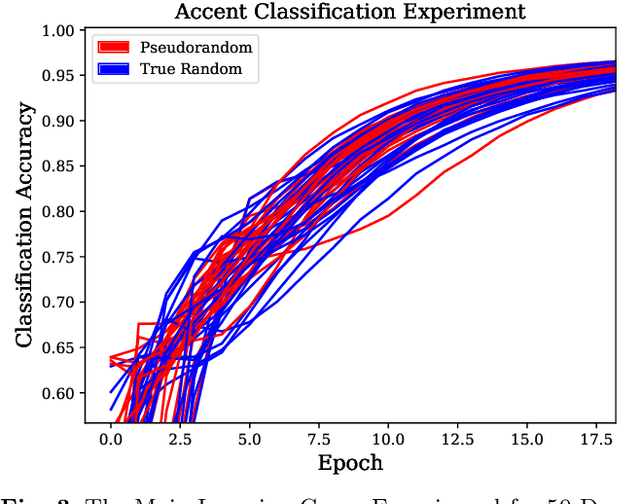

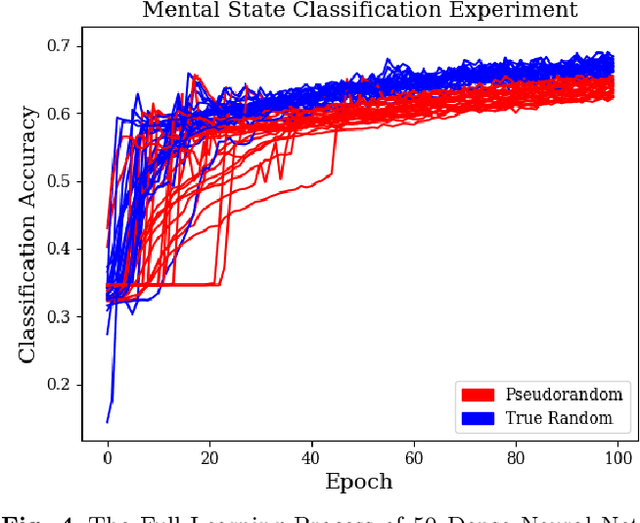

On the Effects of Pseudo and Quantum Random Number Generators in Soft Computing

Oct 10, 2019

Abstract: In this work, we argue that the implications of Pseudo and Quantum Random Number Generators (PRNG and QRNG) inexplicably affect the performances and behaviours of various machine learning models that require a random input. These implications are yet to be explored in Soft Computing until this work. We use a CPU and a QPU to generate random numbers for multiple Machine Learning techniques. Random numbers are employed in the random initial weight distributions of Dense and Convolutional Neural Networks, in which results show a profound difference in learning patterns for the two. In 50 Dense Neural Networks (25 PRNG/25 QRNG), QRNG exceeds PRNG for accent classification by +0.1% and for mental state EEG classification by +2.82%. In 50 Convolutional Neural Networks (25 PRNG/25 QRNG), the MNIST and CIFAR-10 problems are benchmarked. In MNIST, the QRNG experiences a higher starting accuracy than the PRNG but ultimately only exceeds it by 0.02%. In CIFAR-10, the QRNG outperforms PRNG by +0.92%. The n-random split of a Random Tree is enhanced towards a new Quantum Random Tree (QRT) model, which has differing classification abilities to its classical counterpart; 200 trees are trained and compared (100 PRNG/100 QRNG). Using the accent and EEG classification datasets, a QRT seemed inferior to a RT as it performed on average worse by -0.12%. This pattern is also seen in the EEG classification problem, where a QRT performs worse than a RT by -0.28%. Finally, the QRT is ensembled into a Quantum Random Forest (QRF), which also has a noticeable effect when compared to the standard Random Forest (RF)... ABSTRACT SHORTENED DUE TO ARXIV LIMIT

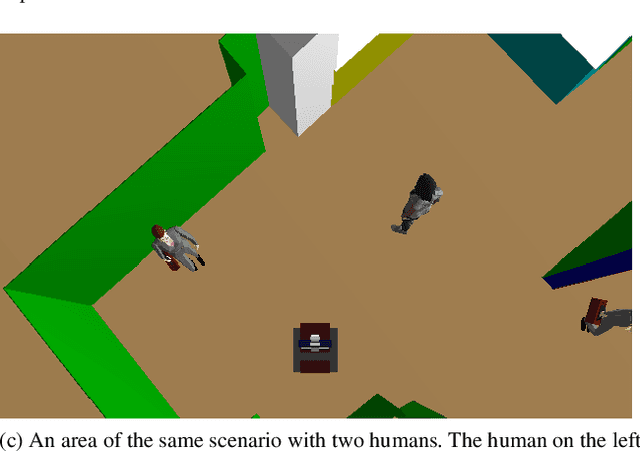

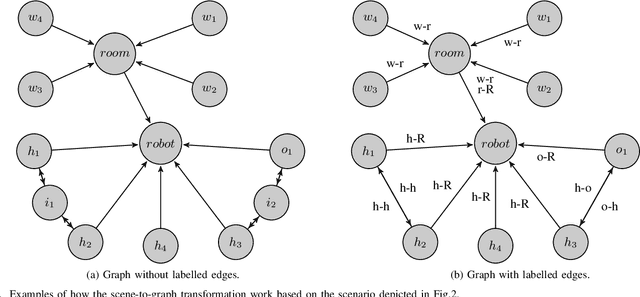

Graph Neural Networks for Human-aware Social Navigation

Sep 19, 2019

Abstract: Autonomous navigation is a key skill for assistive and service robots. To be successful, robots have to navigate avoiding going through the personal spaces of the people surrounding them. Complying with social rules such as not getting in the middle of human-to-human and human-to-object interactions is also important. This paper suggests using Graph Neural Networks to model how inconvenient the presence of a robot would be in a particular scenario according to learned human conventions so that it can be used by path planning algorithms. To do so, we propose two ways of modelling social interactions using graphs and benchmark them with different Graph Neural Networks using the SocNav1 dataset. We achieve close-to-human performance in the dataset and argue that, in addition to promising results, the main advantage of the approach is its scalability in terms of the number of social factors that can be considered and easily embedded in code, in comparison with model-based approaches. The code used to train and test the resulting graph neural network is available in a public repository.
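To make the idea of modelling a social scenario as a graph more concrete, here is one possible scenario-to-graph transformation. The abstract does not describe the two graph encodings the authors propose, so everything in this sketch is an assumption: a single room node linked to every entity, extra edges for declared interactions, and one-hot node types as features. The `Scenario` class and `scenario_to_graph` function are hypothetical names.

```python
from dataclasses import dataclass, field

NODE_TYPES = ("room", "human", "object")

@dataclass
class Scenario:
    humans: list                                        # identifiers of people in the room
    objects: list                                       # identifiers of objects in the room
    interactions: list = field(default_factory=list)    # (id, id) pairs that interact

def scenario_to_graph(scenario):
    """Return (node_features, edges) for a scenario.
    Node 0 is the room; edges are directed index pairs, stored in both directions."""
    ids = ["room"] + list(scenario.humans) + list(scenario.objects)
    types = ["room"] + ["human"] * len(scenario.humans) + ["object"] * len(scenario.objects)
    index = {name: i for i, name in enumerate(ids)}

    # One-hot encoding of the node type as a minimal feature vector.
    features = [[1.0 if t == nt else 0.0 for nt in NODE_TYPES] for t in types]

    edges = set()
    for name in ids[1:]:                   # the room is linked to every entity
        edges.add((0, index[name]))
        edges.add((index[name], 0))
    for a, b in scenario.interactions:     # human-human and human-object interactions
        edges.add((index[a], index[b]))
        edges.add((index[b], index[a]))
    return features, sorted(edges)

scene = Scenario(humans=["h1", "h2"], objects=["tv"],
                 interactions=[("h1", "h2"), ("h1", "tv")])
features, edges = scenario_to_graph(scene)
print(len(features), "nodes,", len(edges), "directed edges")
```

A real encoding would add geometric features such as positions and orientations. The feature matrix and edge list could then be passed to a Graph Neural Network library, and adding a new social factor amounts to adding a node type or an edge type.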

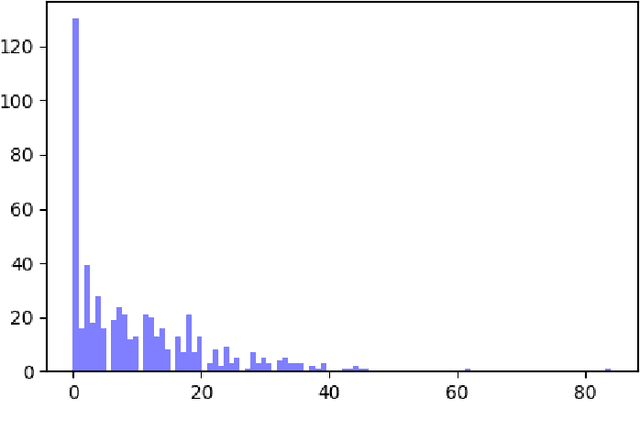

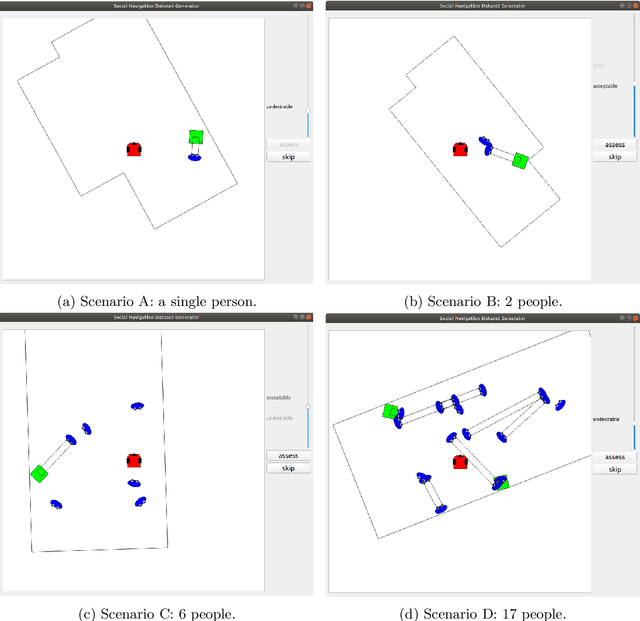

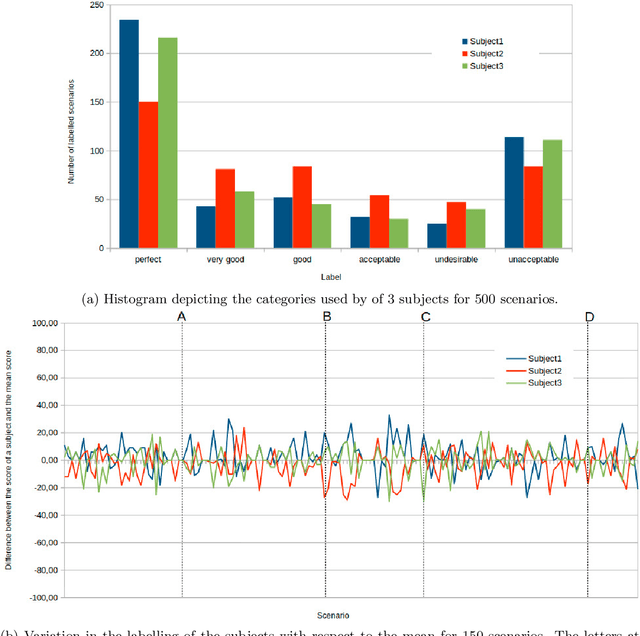

SocNav1: A Dataset to Benchmark and Learn Social Navigation Conventions

Sep 06, 2019

Abstract: Adapting to social conventions is an unavoidable requirement for the acceptance of assistive and social robots. While the scientific community broadly accepts that assistive robots and social robot companions are unlikely to have widespread use in the near future, their presence in health-care and other medium-sized institutions is becoming a reality. These robots will have a beneficial impact in industry and other fields such as health care. The growing number of research contributions to social navigation is also indicative of the importance of the topic. To foster the future prevalence of these robots, they must be useful, but also socially accepted. The first step to be able to actively ask for collaboration or permission is to estimate whether the robot would make people feel uncomfortable otherwise, and that is precisely the goal of algorithms evaluating social navigation compliance. Some approaches provide analytic models, whereas others use machine learning techniques such as neural networks. This data report presents and describes SocNav1, a dataset for social navigation conventions. The aims of SocNav1 are two-fold: a) enabling comparison of the algorithms that robots use to assess the convenience of their presence in a particular position when navigating; b) providing a sufficient amount of data so that modern machine learning algorithms such as deep neural networks can be used. Because of the structured nature of the data, SocNav1 is particularly well-suited to be used to benchmark non-Euclidean machine learning algorithms such as Graph Neural Networks (see [1]). The dataset has been made available in a public repository.

A Deep Evolutionary Approach to Bioinspired Classifier Optimisation for Brain-Machine Interaction

Aug 13, 2019

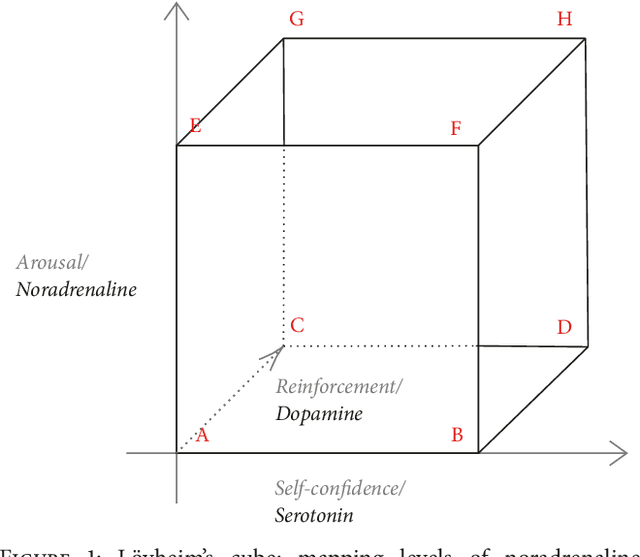

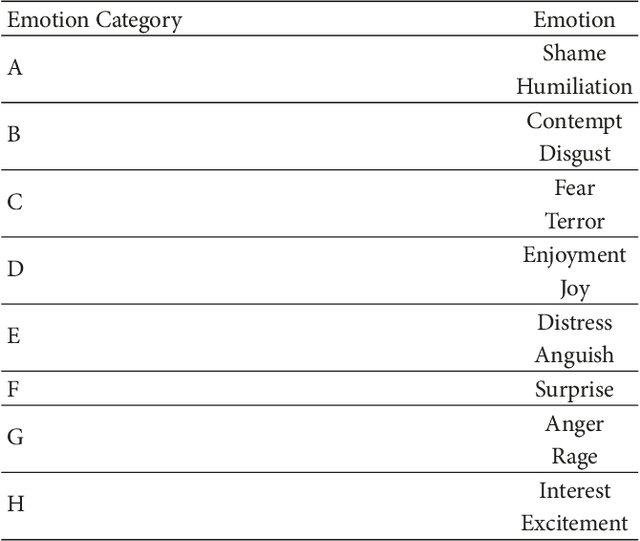

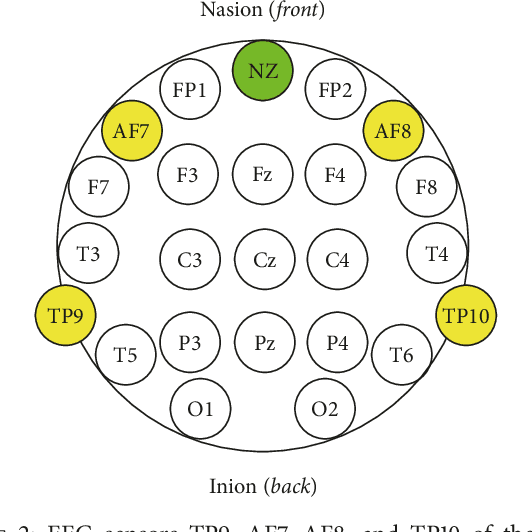

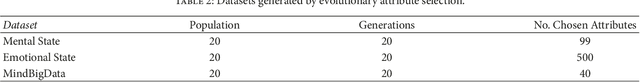

Abstract: This study suggests a new approach to EEG data classification by exploring the idea of using evolutionary computation to both select useful discriminative EEG features and optimise the topology of Artificial Neural Networks. An evolutionary algorithm is applied to select the most informative features from an initial set of 2550 EEG statistical features. Optimisation of a Multilayer Perceptron (MLP) is performed with an evolutionary approach before classification to estimate the best hyperparameters of the network. Deep learning and tuning with Long Short-Term Memory (LSTM) are also explored, and Adaptive Boosting of the two types of models is tested for each problem. Three experiments are provided for comparison using different classifiers: one for attention state classification, one for emotional sentiment classification, and a third experiment in which the goal is to guess the number a subject is thinking of. The obtained results show that an Adaptive Boosted LSTM can achieve an accuracy of 84.44%, 97.06%, and 9.94% on the attentional, emotional, and number datasets, respectively. An evolutionary-optimised MLP achieves results close to the Adaptive Boosted LSTM for the first two experiments and significantly higher for the number-guessing experiment, with an Adaptive Boosted DEvo MLP reaching 31.35%, while being significantly quicker to train and classify. In particular, the accuracy of the nonboosted DEvo MLP was 79.81%, 96.11%, and 27.07% in the same benchmarks. Two datasets for the experiments were gathered using a Muse EEG headband with four electrodes corresponding to TP9, AF7, AF8, and TP10 locations of the international EEG placement standard. The EEG MindBigData digits dataset was gathered from the TP9, FP1, FP2, and TP10 locations.
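Evolutionary feature selection of the kind described above can be sketched as a small genetic algorithm over boolean feature masks. The abstract does not specify the algorithm's operators, so truncation selection, one-point crossover, and bit-flip mutation are assumptions. The toy fitness function stands in for what would in practice be a classifier's validation accuracy on the selected EEG features.

```python
import random

def evolve_feature_mask(fitness, n_features, pop_size=20, generations=30,
                        mutation_rate=0.05, seed=0):
    """Evolve a boolean mask over features; returns (best_mask, best_fitness_history)."""
    rng = random.Random(seed)
    population = [[rng.random() < 0.5 for _ in range(n_features)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        elite = population[: pop_size // 2]        # survivors are kept unchanged
        children = []
        while len(elite) + len(children) < pop_size:
            a, b = rng.sample(elite, 2)            # two distinct parents
            cut = rng.randrange(1, n_features)     # one-point crossover
            child = a[:cut] + b[cut:]
            child = [(not g) if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        population = elite + children
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history

# Toy fitness: reward three "informative" features, penalise mask size.
INFORMATIVE = {0, 3, 7}

def toy_fitness(mask):
    return sum(mask[i] for i in INFORMATIVE) - 0.1 * sum(mask)

best_mask, history = evolve_feature_mask(toy_fitness, n_features=10)
print("selected features:", [i for i, keep in enumerate(best_mask) if keep])
print("best fitness per generation:", history)
```

Because the better half of each generation survives unchanged, the best fitness never decreases from one generation to the next. The same loop could search over network hyperparameters by changing what the genome encodes.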
