Daniel Rodriguez-Criado

Synthesizing Traffic Datasets using Graph Neural Networks

Dec 08, 2023

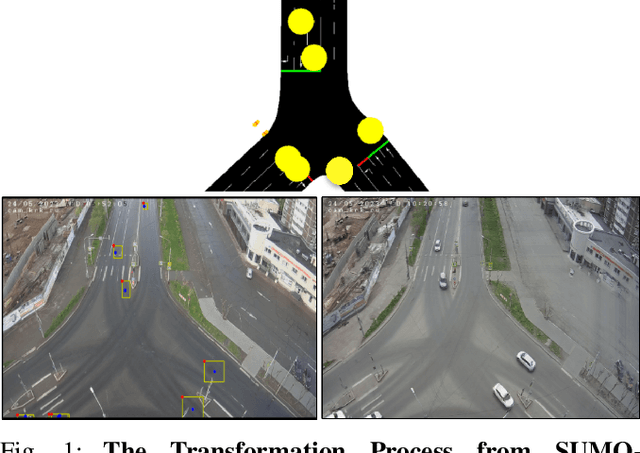

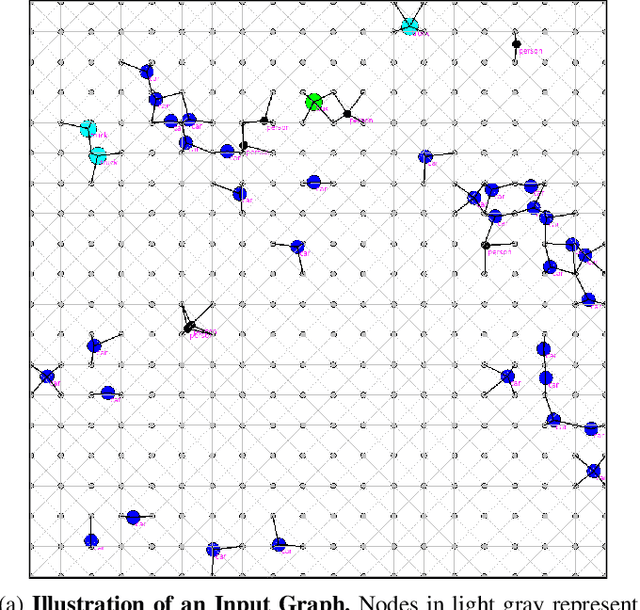

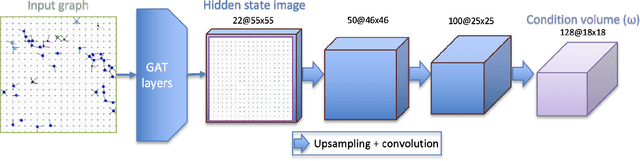

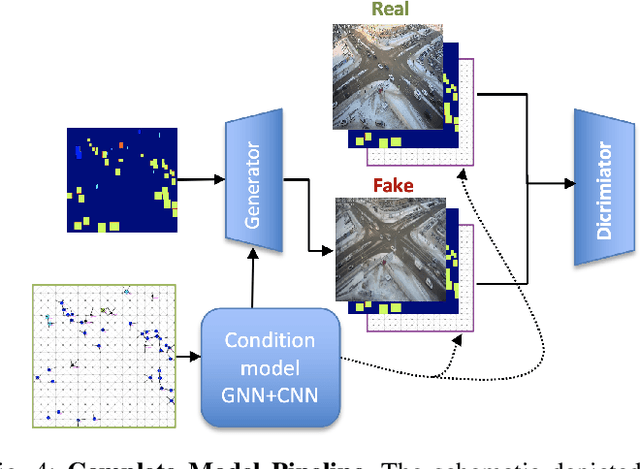

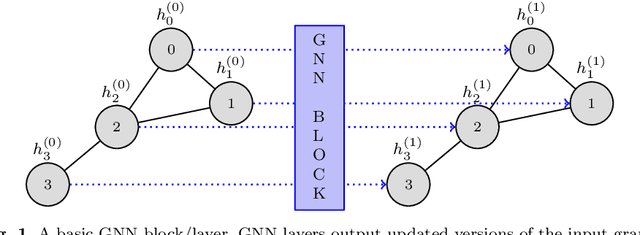

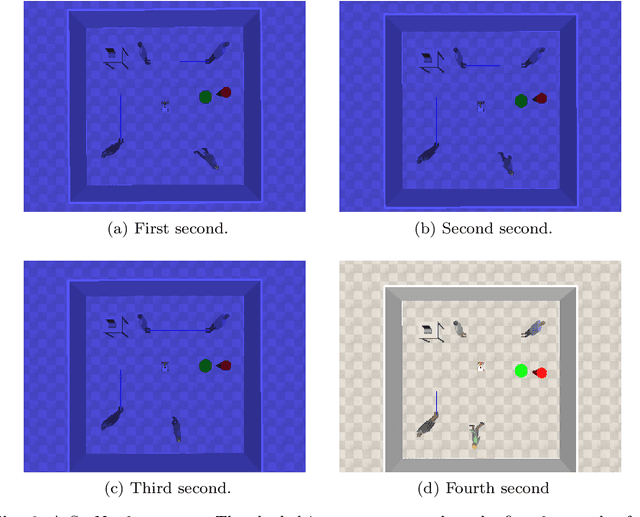

Abstract: Traffic congestion in urban areas presents significant challenges, and Intelligent Transportation Systems (ITS) have sought to address these via automated and adaptive controls. However, these systems often struggle to transfer simulated experiences to real-world scenarios. This paper introduces a novel methodology for bridging this 'sim-real' gap by creating photorealistic images from 2D traffic simulations and recorded junction footage. We propose a novel image generation approach, integrating a Conditional Generative Adversarial Network with a Graph Neural Network (GNN) to facilitate the creation of realistic urban traffic images. We harness GNNs' ability to process information at different levels of abstraction alongside segmented images for preserving locality data. The presented architecture leverages the power of SPADE and Graph ATtention (GAT) network models to create images based on simulated traffic scenarios. These images are conditioned by factors such as entity positions, colors, and time of day. The uniqueness of our approach lies in its ability to effectively translate structured and human-readable conditions, encoded as graphs, into realistic images. This advancement contributes to applications requiring rich traffic image datasets, from data augmentation to urban traffic solutions. We further provide an application to test the model's capabilities, including generating images with manually defined positions for various entities.

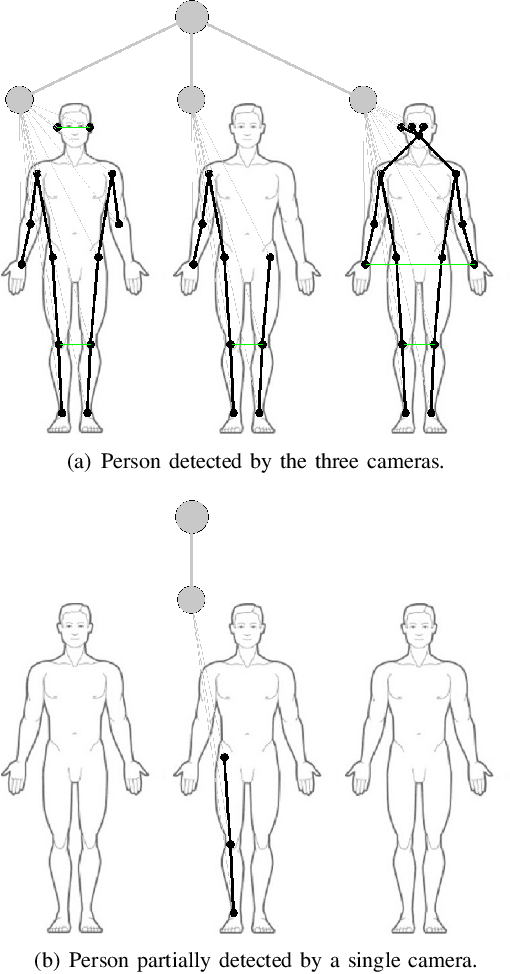

Multi-person 3D pose estimation from unlabelled data

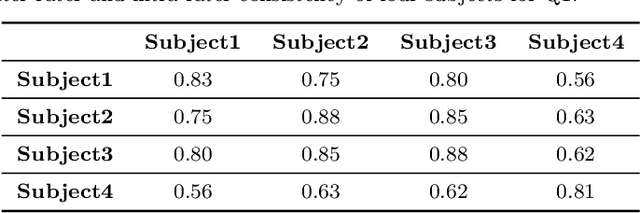

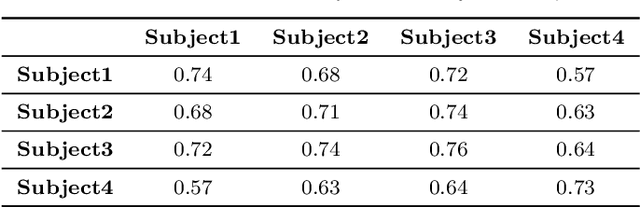

Dec 16, 2022

Abstract: Its numerous applications make multi-human 3D pose estimation a remarkably impactful area of research. Nevertheless, assuming a multiple-view system composed of several regular RGB cameras, 3D multi-pose estimation presents several challenges. First of all, each person must be uniquely identified in the different views to separate the 2D information provided by the cameras. Secondly, the 3D pose estimation process from the multi-view 2D information of each person must be robust against noise and potential occlusions in the scenario. In this work, we address these two challenges with the help of deep learning. Specifically, we present a model based on Graph Neural Networks capable of predicting the cross-view correspondence of the people in the scenario along with a Multilayer Perceptron that takes the 2D points to yield the 3D poses of each person. These two models are trained in a self-supervised manner, thus avoiding the need for large datasets with 3D annotations.

A Graph Neural Network to Model User Comfort in Robot Navigation

Feb 17, 2021

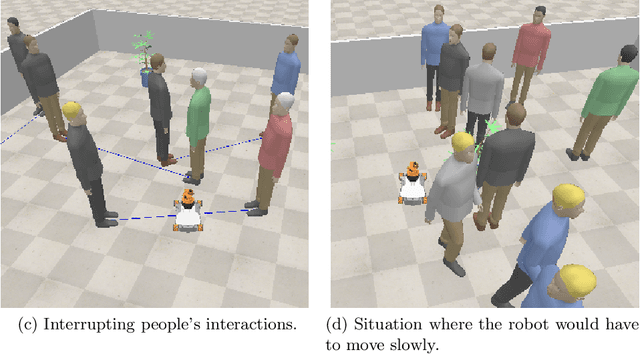

Abstract: Autonomous navigation is a key skill for assistive and service robots. To be successful, robots have to minimise the disruption caused to humans while moving. This implies predicting how people will move and complying with social conventions. Avoiding disrupting personal spaces, people's paths and interactions are examples of these social conventions. This paper leverages Graph Neural Networks to model robot disruption considering the movement of the humans and the robot so that the model built can be used by path planning algorithms. Along with the model, this paper presents an evolution of the dataset SocNav1 which considers the movement of the robot and the humans, and an updated scenario-to-graph transformation which is tested using different Graph Neural Network blocks. The model trained achieves close-to-human performance in the dataset. In addition to its accuracy, the main advantage of the approach is its scalability in terms of the number of social factors that can be considered in comparison with handcrafted models.
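To make the idea of a scenario-to-graph transformation concrete, the following is a minimal sketch and not the transformation used in the paper. It assumes a hypothetical `Entity` record, a hypothetical feature layout (one-hot type, position relative to the robot, velocity), and a star topology in which every human is connected to the robot in both directions.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Entity:
    """Hypothetical scene entity; field names are illustrative only."""
    kind: str   # "robot" or "human"
    x: float    # position in metres
    y: float
    vx: float   # velocity in metres per second
    vy: float


def scenario_to_graph(
    entities: List[Entity],
) -> Tuple[List[List[float]], List[Tuple[int, int]]]:
    """Build node features and directed edges for a robot-centred star graph.

    Assumed node feature layout: [is_robot, is_human, rel_x, rel_y, vx, vy],
    where rel_x and rel_y are positions relative to the robot.
    """
    robot_idx = next(i for i, e in enumerate(entities) if e.kind == "robot")
    robot = entities[robot_idx]

    nodes = []
    for e in entities:
        is_robot = 1.0 if e.kind == "robot" else 0.0
        is_human = 1.0 if e.kind == "human" else 0.0
        nodes.append([is_robot, is_human, e.x - robot.x, e.y - robot.y, e.vx, e.vy])

    # Connect the robot with every other entity in both directions.
    edges = []
    for i in range(len(entities)):
        if i != robot_idx:
            edges.append((robot_idx, i))
            edges.append((i, robot_idx))
    return nodes, edges


scene = [
    Entity("robot", 1.0, 1.0, 0.5, 0.0),
    Entity("human", 2.0, 3.0, 0.0, -0.3),
    Entity("human", 0.0, 1.0, 0.2, 0.0),
]
nodes, edges = scenario_to_graph(scene)
```

A node feature matrix and an edge list of this kind are the usual inputs to a graph neural network layer; adding a further social factor would amount to adding node types, features, or edges rather than rewriting a handcrafted cost function.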

Generation of Human-aware Navigation Maps using Graph Neural Networks

Nov 10, 2020

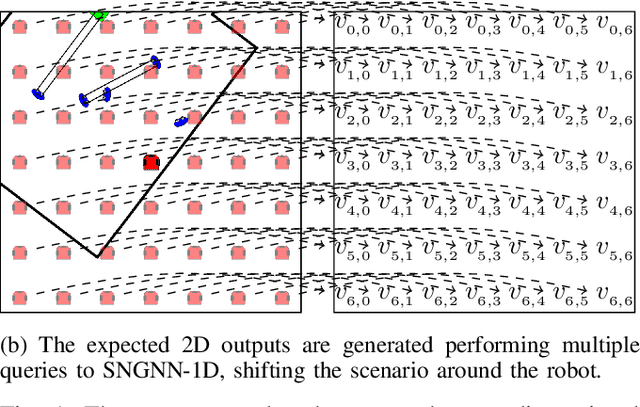

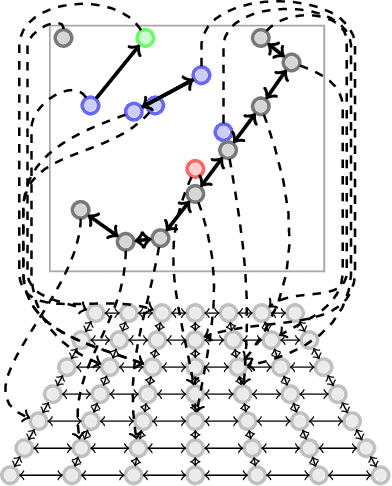

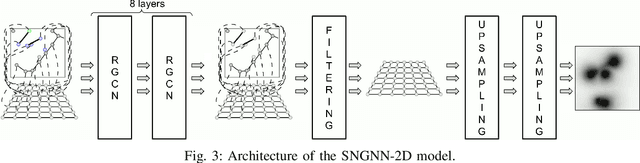

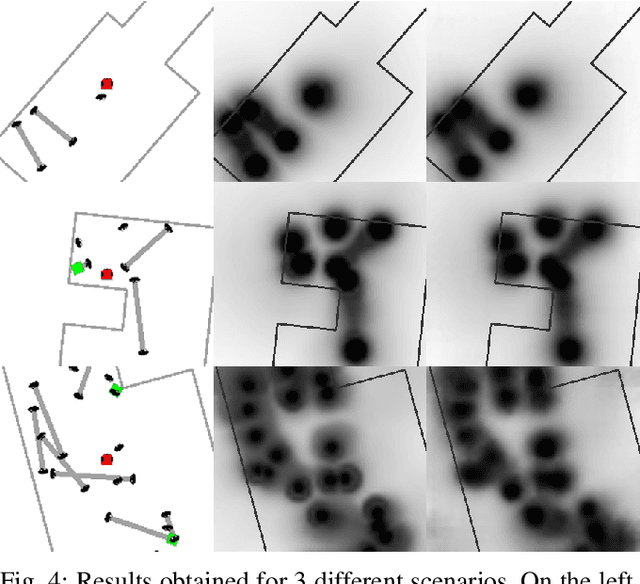

Abstract: Minimising the discomfort caused by robots when navigating in social situations is crucial for them to be accepted. The paper presents a machine learning-based framework that bootstraps existing one-dimensional datasets to generate a cost map dataset and a model combining Graph Neural Network and Convolutional Neural Network layers to produce cost maps for human-aware navigation in real-time. The proposed framework is evaluated against the original one-dimensional dataset and in simulated navigation tasks. The results outperform similar state-of-the-art methods considering the accuracy on the dataset and the navigation metrics used. The applications of the proposed framework are not limited to human-aware navigation; it could be applied to other fields where map generation is needed.

A Toolkit to Generate Social Navigation Datasets

Sep 11, 2020

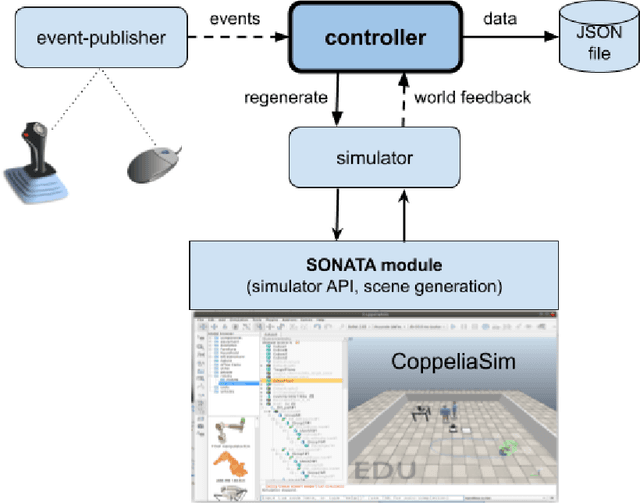

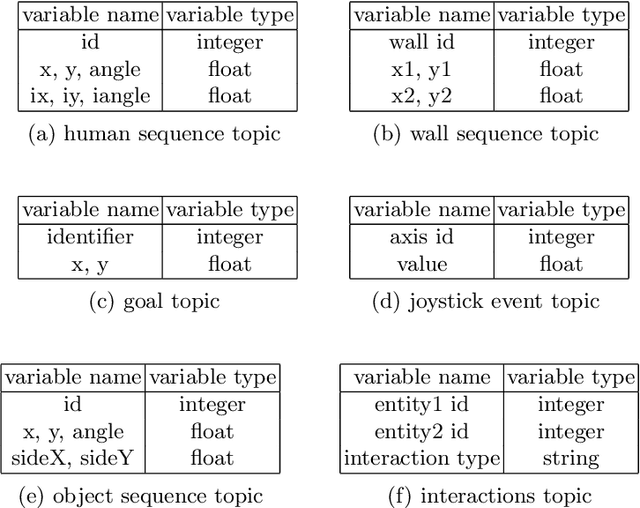

Abstract: Social navigation datasets are necessary to assess social navigation algorithms and train machine learning algorithms. Most of the currently available datasets target pedestrians' movements as a pattern to be replicated by robots. It can be argued that one of the main reasons for this to happen is that compiling datasets where real robots are manually controlled, as they would be expected to behave when moving, is a very resource-intensive task. Another aspect that is often missing in datasets is symbolic information that could be relevant, such as human activities, relationships or interactions. Unfortunately, the available datasets targeting robots and supporting symbolic information are restricted to static scenes. This paper argues that simulation can be used to gather social navigation data in an effective and cost-efficient way and presents a toolkit for this purpose. A use case studying the application of graph neural networks to create learned control policies using supervised learning is presented as an example of how it can be used.

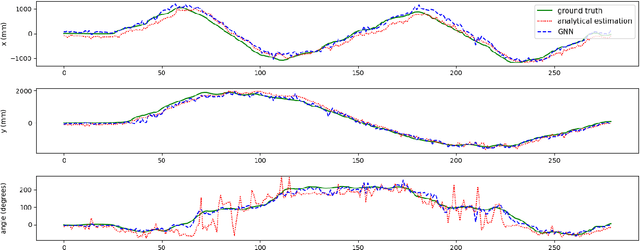

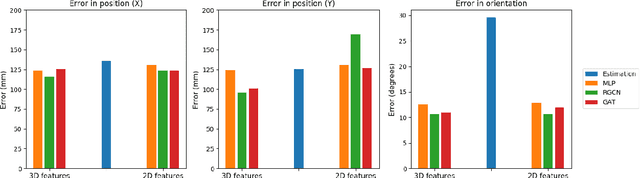

Multi-camera Torso Pose Estimation using Graph Neural Networks

Jul 28, 2020

Abstract: Estimating the location and orientation of humans is an essential skill for service and assistive robots. To achieve a reliable estimation in a wide area such as an apartment, multiple RGBD cameras are frequently used. Firstly, these setups are relatively expensive. Secondly, they seldom perform an effective data fusion using the multiple camera sources at an early stage of the processing pipeline. Occlusions and partial views make this second point very relevant in these scenarios. The proposal presented in this paper makes use of graph neural networks to merge the information acquired from multiple camera sources, achieving a mean absolute error below 125 mm for the location and 10 degrees for the orientation using low-resolution RGB images. The experiments, conducted in an apartment with three cameras, benchmarked two different graph neural network implementations and a third architecture based on fully connected layers. The software used has been released as open-source in a public repository (https://github.com/vangiel/WheresTheFellow).
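As an illustration of how location and orientation errors of this kind can be computed, here is a minimal sketch. It is not taken from the released repository, and it rests on two assumptions: the location error is averaged over per-axis absolute errors in millimetres, and the orientation error is the smallest angular difference in degrees, so that 350 and 10 degrees are 20 degrees apart rather than 340. The function names and sample values are hypothetical.

```python
from typing import List, Tuple

Pose = Tuple[float, float, float]  # (x_mm, y_mm, angle_deg)


def angular_difference_deg(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in the range [0, 180]."""
    d = (a - b) % 360.0
    return min(d, 360.0 - d)


def mean_absolute_errors(pred: List[Pose], truth: List[Pose]) -> Tuple[float, float]:
    """Return (location MAE in mm, orientation MAE in degrees).

    Assumption: the location MAE averages |dx| and |dy| over all samples.
    """
    if len(pred) != len(truth) or not pred:
        raise ValueError("pred and truth must be non-empty and of equal length")

    loc_total = 0.0
    ang_total = 0.0
    for (px, py, pa), (tx, ty, ta) in zip(pred, truth):
        loc_total += abs(px - tx) + abs(py - ty)
        ang_total += angular_difference_deg(pa, ta)

    n = len(pred)
    return loc_total / (2 * n), ang_total / n


# Illustrative values only, not results from the paper.
predicted = [(100.0, 200.0, 350.0), (0.0, 0.0, 10.0)]
ground_truth = [(110.0, 180.0, 10.0), (50.0, 0.0, 20.0)]
loc_mae, ang_mae = mean_absolute_errors(predicted, ground_truth)
```

Wrapping the angular difference matters for orientation metrics: a naive subtraction would heavily penalise predictions that sit just across the 0/360 degree boundary from the ground truth.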
