On the Effects of Pseudo and Quantum Random Number Generators in Soft Computing


Oct 10, 2019

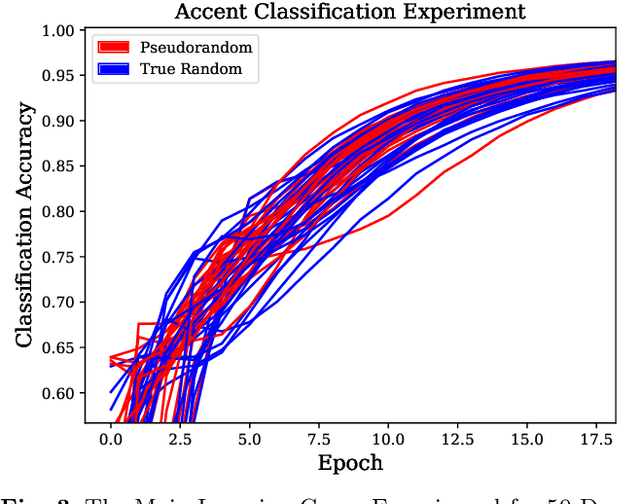

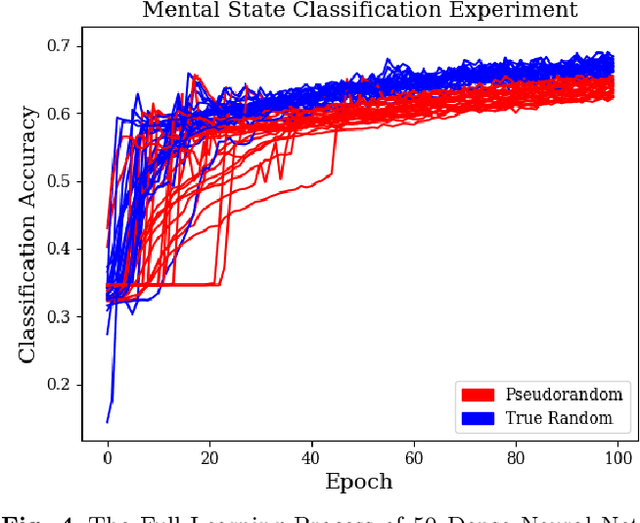

In this work, we argue that Pseudo and Quantum Random Number Generators (PRNG and QRNG) inexplicably affect the performance and behaviour of various machine learning models that require a random input. These implications had not been explored in Soft Computing prior to this work. We use a CPU and a QPU to generate random numbers for multiple Machine Learning techniques. Random numbers are used for the random initial weight distributions of Dense and Convolutional Neural Networks, and the results show a profound difference in learning patterns between the two. In 50 Dense Neural Networks (25 PRNG/25 QRNG), QRNG outperformed PRNG by +0.1% for accent classification and by +2.82% for mental state EEG classification. In 50 Convolutional Neural Networks (25 PRNG/25 QRNG), the MNIST and CIFAR-10 problems are benchmarked. On MNIST, the QRNG networks start with a higher accuracy than the PRNG networks but ultimately exceed them by only 0.02%. On CIFAR-10, QRNG outperforms PRNG by +0.92%. The n-random split of a Random Tree (RT) is extended into a new Quantum Random Tree (QRT) model, whose classification abilities differ from those of its classical counterpart; 200 trees are trained and compared (100 PRNG/100 QRNG). Using the accent and EEG classification datasets, a QRT seemed inferior to an RT, performing worse by 0.12% on average. This pattern is also seen in the EEG classification problem, where a QRT performs worse than an RT by 0.28%. Finally, the QRT is ensembled into a Quantum Random Forest (QRF), which also has a noticeable effect when compared to the standard Random Forest (RF)... (Abstract shortened due to arXiv limit.)
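To make the comparison concrete, here is a minimal sketch of where a random number source enters the two kinds of model the abstract describes: initial weights for a dense layer, and the random choice of candidate features at a tree split. It is an illustration under stated assumptions, not the authors' code. The Glorot-uniform initialiser, the "k random candidate features per split" reading of the n-random split, and every function name (`prng_source`, `bytes_source`, `init_dense_weights`, `random_feature_subset`) are hypothetical. No quantum hardware is involved: `os.urandom` only stands in for a byte stream that a QPU-backed QRNG could supply.

```python
import math
import os
import random
import struct
from typing import Callable, List

# A uniform source returns a float in [0, 1); the models only see this interface.
UniformSource = Callable[[], float]


def prng_source(seed: int) -> UniformSource:
    """Classical PRNG: Python's Mersenne Twister, seeded for reproducibility."""
    return random.Random(seed).random


def bytes_source(read_bytes: Callable[[int], bytes]) -> UniformSource:
    """Turn any byte-producing device into a uniform source.

    Hypothetical QRNG hook: pass a function that returns n bytes from a quantum
    device. os.urandom is used below only as a placeholder; it is NOT quantum.
    """
    def uniform() -> float:
        (n,) = struct.unpack(">Q", read_bytes(8))  # 64 random bits
        return (n >> 11) / float(1 << 53)          # keep top 53 bits -> [0, 1)
    return uniform


def init_dense_weights(n_in: int, n_out: int, uniform: UniformSource) -> List[List[float]]:
    """Glorot-uniform weights (assumed scheme) drawn from the given source."""
    limit = math.sqrt(6.0 / (n_in + n_out))
    return [[(2.0 * uniform() - 1.0) * limit for _ in range(n_out)]
            for _ in range(n_in)]


def random_feature_subset(n_features: int, k: int, uniform: UniformSource) -> List[int]:
    """Choose k distinct candidate features for one random-tree split.

    Uses a partial Fisher-Yates shuffle, so every k-subset is equally likely
    (up to the quality of the underlying source).
    """
    idx = list(range(n_features))
    for i in range(k):
        j = i + int(uniform() * (n_features - i))  # j in [i, n_features - 1]
        idx[i], idx[j] = idx[j], idx[i]
    return idx[:k]


if __name__ == "__main__":
    sources = [("PRNG", prng_source(seed=42)),
               ("QRNG placeholder", bytes_source(os.urandom))]
    for name, src in sources:
        w = init_dense_weights(4, 3, src)
        feats = random_feature_subset(10, 3, src)
        print(name, "weights:", len(w), "x", len(w[0]), "split features:", feats)
```

Because both models draw from the same `UniformSource` interface, swapping a PRNG for a QRNG changes only the origin of the randomness, which appears to be the single variable the comparison above is meant to isolate.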
