Dicong Qiu

SD-OVON: A Semantics-aware Dataset and Benchmark Generation Pipeline for Open-Vocabulary Object Navigation in Dynamic Scenes

May 24, 2025

Abstract:We present the Semantics-aware Dataset and Benchmark Generation Pipeline for Open-vocabulary Object Navigation in Dynamic Scenes (SD-OVON). It uses pretrained multimodal foundation models to generate infinitely many unique, photo-realistic scene variants that adhere to real-world semantics and everyday commonsense, for training and evaluating navigation agents. It is accompanied by a plugin that generates object navigation task episodes compatible with the Habitat simulator. In addition, we offer two pre-generated object navigation task datasets, SD-OVON-3k and SD-OVON-10k, comprising about 3k and 10k episodes of the open-vocabulary object navigation task, respectively. They are derived from the SD-OVON-Scenes dataset, with 2.5k photo-realistic scans of real-world environments, and the SD-OVON-Objects dataset, with 0.9k manually inspected scanned and artist-created manipulatable object models. Unlike prior datasets limited to static environments, SD-OVON covers dynamic scenes and manipulatable objects, facilitating both real-to-sim and sim-to-real robotic applications. This approach makes navigation tasks more realistic and supports training and evaluating open-vocabulary object navigation agents in complex settings. To demonstrate the effectiveness of our pipeline and datasets, we propose two baselines and evaluate them alongside state-of-the-art baselines on SD-OVON-3k. The datasets, benchmark, and source code are publicly available.

GaussianProperty: Integrating Physical Properties to 3D Gaussians with LMMs

Dec 15, 2024

Abstract:Estimating physical properties from visual data is a crucial task in computer vision, graphics, and robotics, underpinning applications such as augmented reality, physical simulation, and robotic grasping. However, this area remains under-explored due to the inherent ambiguities in physical property estimation. To address these challenges, we introduce GaussianProperty, a training-free framework that assigns physical properties of materials to 3D Gaussians. Specifically, we integrate the segmentation capability of SAM with the recognition capability of GPT-4V(ision) to formulate a global-local physical property reasoning module for 2D images. We then project the physical properties from multi-view 2D images onto 3D Gaussians using a voting strategy. We demonstrate that 3D Gaussians with physical property annotations enable applications in physics-based dynamic simulation and robotic grasping. For physics-based dynamic simulation, we leverage the Material Point Method (MPM) for realistic dynamic simulation. For robotic grasping, we develop a grasping force prediction strategy that estimates a safe force range for grasping an object based on its estimated physical properties. Extensive experiments on material segmentation, physics-based dynamic simulation, and robotic grasping validate the effectiveness of our proposed method, highlighting its crucial role in understanding physical properties from visual data. An online demo, code, more cases, and annotated datasets are available at https://Gaussian-Property.github.io.
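
The abstract says only that per-view properties are projected onto 3D Gaussians "using a voting strategy." The following is a hedged illustration of one plausible reading: a per-Gaussian majority vote. It assumes each Gaussian has already been associated with the material label predicted at its projected pixel in every view; that association step is not shown. `vote_properties` and its input layout are hypothetical and are not the GaussianProperty code.

```python
from collections import Counter
from typing import Dict, List, Optional


def vote_properties(observations: Dict[int, List[Optional[str]]]) -> Dict[int, Optional[str]]:
    """Assign each Gaussian the most frequent material label across views.

    `observations` maps a (hypothetical) Gaussian id to the labels it received
    in each view; None marks views where the Gaussian was occluded or unlabeled.
    """
    assigned: Dict[int, Optional[str]] = {}
    for gaussian_id, labels in observations.items():
        # Count only views that actually produced a label.
        votes = Counter(label for label in labels if label is not None)
        # most_common(1) returns an empty list when there are no valid votes.
        top = votes.most_common(1)
        assigned[gaussian_id] = top[0][0] if top else None
    return assigned
```

A Gaussian that was never labeled keeps `None`. Downstream uses such as MPM simulation would then need some default material for it; how the paper handles that case is not stated in the abstract.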

Open-vocabulary Mobile Manipulation in Unseen Dynamic Environments with 3D Semantic Maps

Jun 26, 2024

Abstract:Open-Vocabulary Mobile Manipulation (OVMM) is a crucial capability for autonomous robots, especially when faced with the challenges posed by unknown and dynamic environments. This task requires robots to explore and build a semantic understanding of their surroundings, generate feasible plans to achieve manipulation goals, adapt to environmental changes, and comprehend natural language instructions from humans. To address these challenges, we propose a novel framework that combines the zero-shot detection and grounded recognition capabilities of pretrained visual-language models (VLMs) with dense 3D entity reconstruction to build 3D semantic maps. Additionally, we use large language models (LLMs) for spatial region abstraction and online planning, incorporating human instructions and spatial semantic context. We built a 10-DoF mobile manipulation robotic platform, JSR-1, and demonstrated in real-world robot experiments that our proposed framework can effectively capture spatial semantics and process natural language user instructions for zero-shot OVMM tasks under dynamic environment settings. It achieved overall navigation and task success rates of 80.95% and 73.33%, respectively, over 105 episodes, and improved SFT and SPL by 157.18% and 19.53%, respectively, over the baseline. Furthermore, when initial plans fail, the framework can replan towards the next most probable candidate location based on the spatial semantic context derived from the 3D semantic map, maintaining an average success rate of 76.67%.
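
SPL (Success weighted by Path Length) is a standard embodied-navigation metric: SPL = (1/N) Σ S_i · l_i / max(p_i, l_i). Here S_i is episode success, l_i is the shortest-path length, and p_i is the length of the path the agent actually took. The sketch below assumes the paper uses this common definition. SFT is not defined in the abstract, so it is not reproduced. The sketch also shows how a relative improvement such as "19.53% better than the baseline" is computed. Both function names are illustrative.

```python
from typing import Sequence


def spl(successes: Sequence[bool], shortest: Sequence[float], taken: Sequence[float]) -> float:
    """Success weighted by Path Length, averaged over N episodes.

    successes[i]: whether episode i succeeded (S_i)
    shortest[i]:  shortest-path length from start to goal (l_i)
    taken[i]:     length of the path the agent actually took (p_i)
    """
    if not successes or not (len(successes) == len(shortest) == len(taken)):
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for s_i, l_i, p_i in zip(successes, shortest, taken):
        if s_i:
            # max(p_i, l_i) caps each episode's contribution at 1.0.
            total += l_i / max(p_i, l_i)
    # Failed episodes contribute 0 but still count in the denominator.
    return total / len(successes)


def relative_improvement(new: float, baseline: float) -> float:
    """Percentage change of `new` over `baseline`."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (new - baseline) / baseline * 100.0
```

For example, `spl([True, False, True], [5.0, 4.0, 3.0], [10.0, 4.0, 3.0])` evaluates to (0.5 + 0 + 1) / 3 = 0.5.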

PODDP: Partially Observable Differential Dynamic Programming for Latent Belief Space Planning

Dec 14, 2019

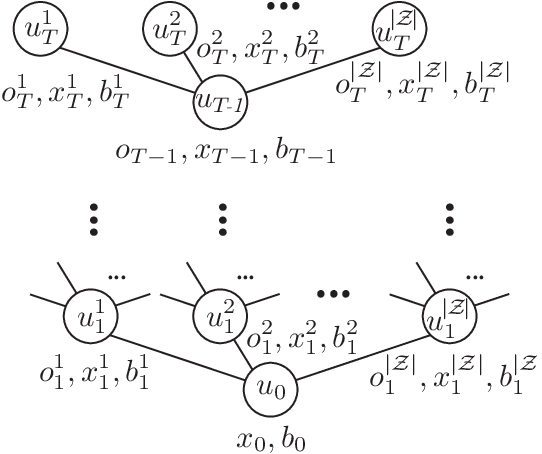

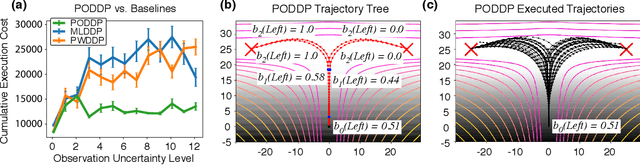

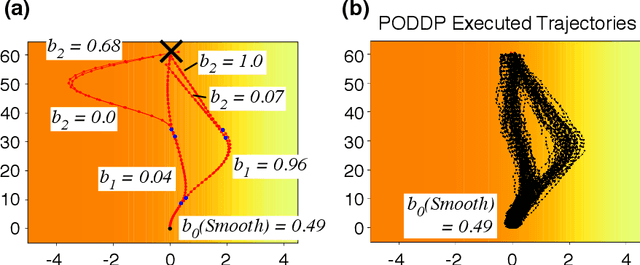

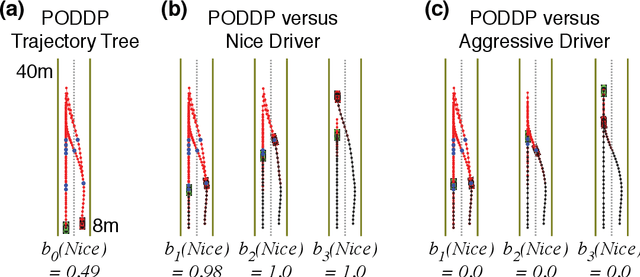

Abstract:Autonomous agents are limited in their ability to observe the world state. Partially observable Markov decision processes (POMDPs) formally model the problem of planning under world state uncertainty, but POMDPs with continuous actions and nonlinear dynamics suitable for robotics applications are challenging to solve. In this paper, we present an efficient differential dynamic programming (DDP) algorithm for belief space planning in POMDPs with uncertainty over a discrete latent state, and continuous states, actions, observations, and nonlinear dynamics. This representation allows planning of dynamic trajectories which are sensitive to structured uncertainty over discrete latent world states. We develop dynamic programming techniques to optimize a contingency plan over a tree of possible observations and belief space trajectories, and also derive a hierarchical version of the algorithm. Our method is applicable to problems with uncertainty over the cost or reward function (e.g., the configuration of goals or obstacles), uncertainty over the dynamics (e.g., the dynamical mode of a hybrid system), and uncertainty about interactions, where other agents' behavior is conditioned on latent intentions. Benchmarks show that our algorithm outperforms popular heuristic approaches to planning under uncertainty, and results from an autonomous lane changing task demonstrate that our algorithm can synthesize robust interactive trajectories.
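
PODDP plans over a belief, that is, a probability distribution over the discrete latent state (for example, another driver's intention in the lane-changing task). The DDP optimization itself is beyond a short example. The belief it plans over, however, is updated by Bayes' rule: b'(z) ∝ p(o | z) b(z). The sketch below assumes a scalar observation with a Gaussian likelihood whose mean depends on the latent state. The intentions "yield" and "no_yield", `update_belief`, and all numbers are hypothetical.

```python
import math
from typing import Dict


def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Density of a 1-D normal distribution N(mean, std^2) at x."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


def update_belief(belief: Dict[str, float], observation: float,
                  predicted_obs: Dict[str, float], obs_std: float) -> Dict[str, float]:
    """Bayes update of a belief over discrete latent states.

    predicted_obs[z] is the observation expected if latent state z were true
    (an assumed, simplified observation model).
    """
    # Unnormalized posterior: prior times likelihood for each latent state.
    unnormalized = {z: b * gaussian_pdf(observation, predicted_obs[z], obs_std)
                    for z, b in belief.items()}
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observation has zero likelihood under every latent state")
    return {z: w / total for z, w in unnormalized.items()}
```

Starting from a uniform belief, `update_belief({"yield": 0.5, "no_yield": 0.5}, 8.5, {"yield": 8.0, "no_yield": 12.0}, 1.0)` moves almost all probability mass to "yield". The observed speed is far closer to the speed predicted under that intention.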

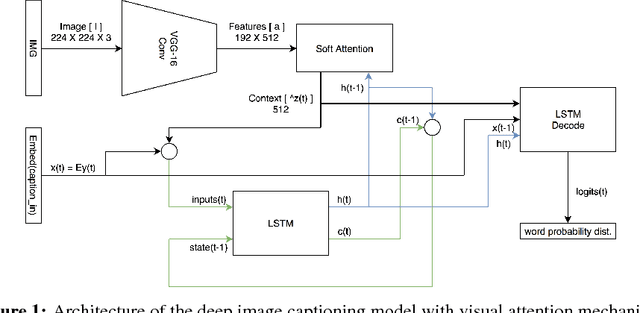

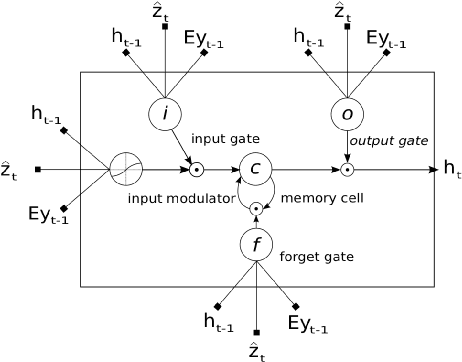

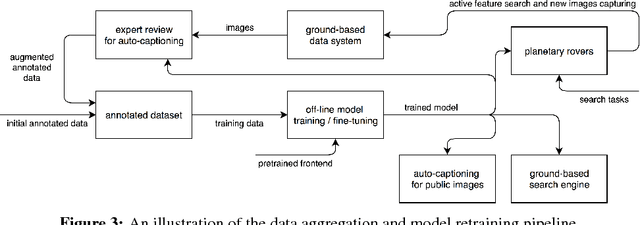

SPASS: Scientific Prominence Active Search System with Deep Image Captioning Network

Sep 10, 2018

Abstract:Planetary exploration missions with Mars rovers are complex and generally require elaborate task planning by human experts, from the path to take to the images to capture. NASA has used this process to acquire over 22 million images from Mars. To improve the degree of automation, and thus the efficiency, of this process, we propose a system that lets planetary rovers actively search captured images for the prominence of prespecified scientific features. Scientists can prespecify such search tasks in natural language and upload them to a rover. The deployed system on the rover continuously captions captured images with a deep image captioning network and compares the auto-generated captions with the prespecified search tasks using certain metrics, so as to prioritize those images for transmission. As a beneficial side effect, the proposed system can also be deployed on ground-based planetary data systems as a content-based search engine.
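
The abstract leaves the caption-to-task comparison metric unspecified ("certain metrics"). Purely as a stand-in, the sketch below scores each caption by Jaccard word overlap with the search task and sorts images for transmission. The metric choice, `prioritize`, and the example captions are assumptions, not the SPASS implementation.

```python
import re
from typing import List, Set, Tuple


def tokenize(text: str) -> Set[str]:
    """Lower-case alphanumeric word set; punctuation is ignored."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Word-set overlap in [0, 1]; a hypothetical stand-in for the paper's metric."""
    ta, tb = tokenize(a), tokenize(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def prioritize(captions: List[Tuple[str, str]], task: str) -> List[Tuple[str, float]]:
    """Rank (image_id, caption) pairs by similarity to a natural-language search task."""
    scored = [(image_id, jaccard(caption, task)) for image_id, caption in captions]
    # Highest-scoring images would be queued for transmission first.
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

With the task "layered sediment outcrop", an image captioned "layered sediment outcrop" scores 1.0. One captioned "a rock with layered sediment" scores 2/6. One captioned "sandy terrain with tracks" scores 0, so the images are ranked in that order. A learned text-similarity metric could replace `jaccard` without changing `prioritize`.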

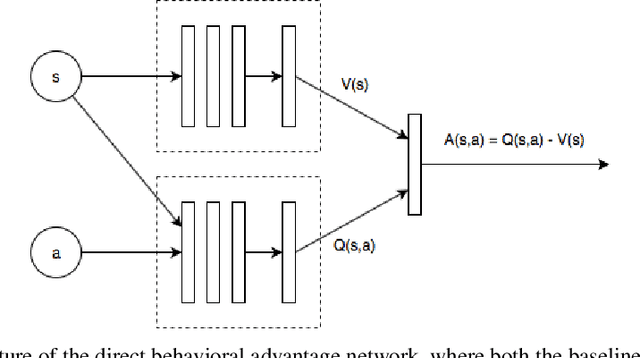

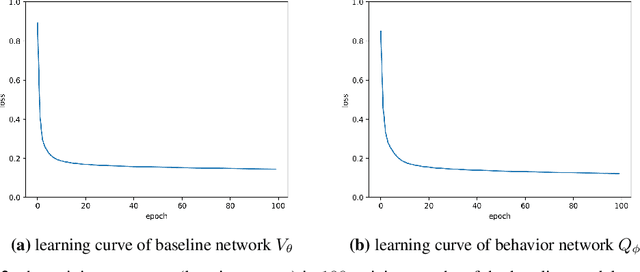

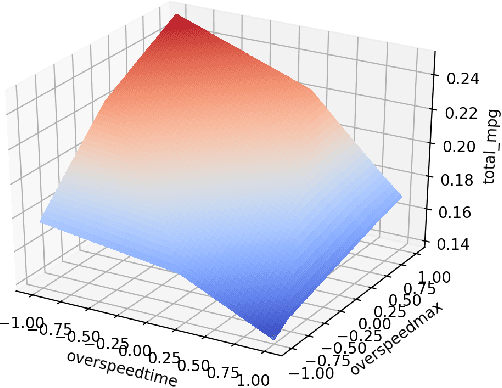

Adaptive Performance Assessment For Drivers Through Behavioral Advantage

Apr 26, 2018

Abstract:The potential positive impact of autonomous driving and driver assistance technologies has been a major impetus over the last decade. On the flip side, quantitatively analyzing the performance of human drivers or autonomous driving agents has been a challenging problem. In this work, we propose a generic method that compares the performance of drivers or autonomous driving agents even when environmental conditions differ. It uses the driver's behavioral advantage instead of absolute metrics, which effectively removes environmental factors. We also present a concrete application of the method, in which more than 100 truck drivers were evaluated and ranked by fuel efficiency. The evaluation covered more than 90,000 trips, averaging 300 miles each, in a variety of driving conditions and environments.
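
One way to read "behavioral advantage" is by analogy to the advantage function in reinforcement learning: a trip's outcome minus the expected outcome under the same conditions. The sketch below assumes trips are already bucketed into discrete condition labels, and it uses the per-bucket mean as that expectation. The bucketing, the mean baseline, and `rank_by_advantage` are simplifications for illustration, not the paper's estimator.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

# (driver_id, condition_label, fuel_efficiency); labels are hypothetical buckets.
Trip = Tuple[str, str, float]


def rank_by_advantage(trips: List[Trip]) -> List[Tuple[str, float]]:
    """Rank drivers by mean advantage over the average trip in the same condition."""
    # Baseline: expected fuel efficiency for each condition bucket.
    by_condition: Dict[str, List[float]] = defaultdict(list)
    for _, condition, value in trips:
        by_condition[condition].append(value)
    baseline = {c: mean(values) for c, values in by_condition.items()}

    # Advantage of each trip relative to its own condition's baseline.
    advantages: Dict[str, List[float]] = defaultdict(list)
    for driver, condition, value in trips:
        advantages[driver].append(value - baseline[condition])

    scores = [(driver, mean(adv)) for driver, adv in advantages.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)
```

Consider the trips `[("A", "hilly", 6.0), ("B", "hilly", 5.0), ("C", "flat", 10.0), ("D", "flat", 8.0)]`. The ranking is C, A, B, D. Driver A ranks above D despite a lower absolute efficiency because A is compared only with other hilly trips.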

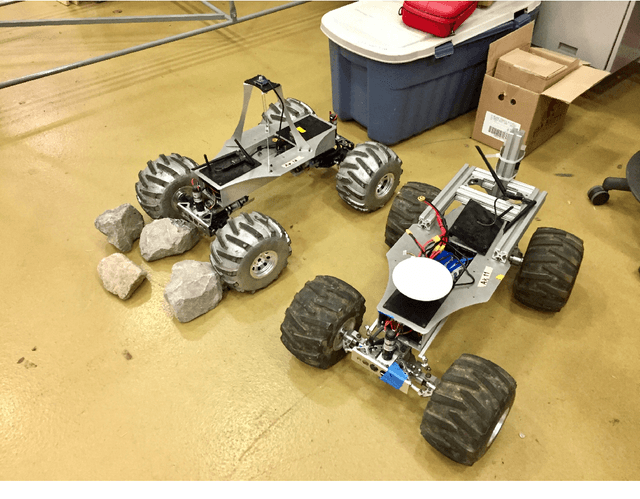

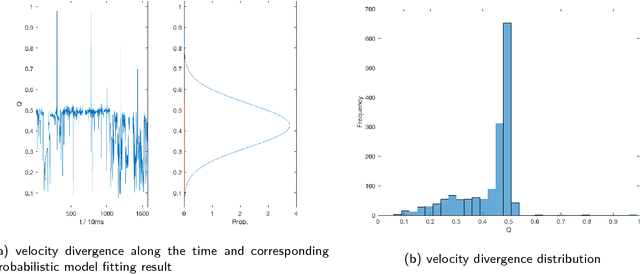

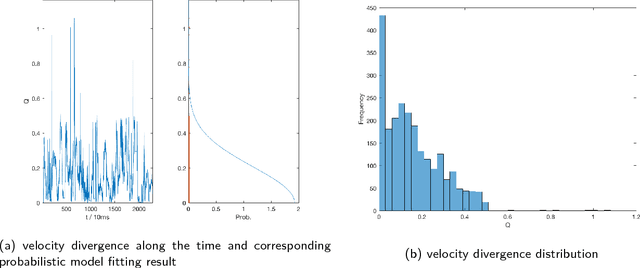

Naive Bayes Entrapment Detection for Planetary Rovers

Jan 31, 2018

Abstract:Entrapment detection is a prerequisite for planetary rovers to perform autonomous rescue procedures. In this study, rover entrapment and approximate entrapment criteria are formally defined. Entrapment detection using Naive Bayes classifiers is proposed and discussed, along with results from experiments in which the Naive Bayes entrapment detector is applied to AutoKralwer rovers. Conclusions and further discussion are presented in the final section.
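
A Naive Bayes classifier treats features as conditionally independent given the class. It predicts the class that maximizes log P(class) plus the sum of per-feature log-likelihoods. The sketch below is a minimal Gaussian Naive Bayes written from scratch. The Gaussian likelihoods are an assumption, since the paper may discretize its features. The two features, a wheel slip ratio and forward progress in m/s, are hypothetical, as are the training values.

```python
import math
from typing import Dict, List, Sequence


class GaussianNaiveBayes:
    """Minimal Gaussian Naive Bayes classifier."""

    def __init__(self, var_floor: float = 1e-6) -> None:
        self.var_floor = var_floor  # avoids division by zero for constant features
        self.priors: Dict[str, float] = {}
        self.means: Dict[str, List[float]] = {}
        self.vars: Dict[str, List[float]] = {}

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[str]) -> "GaussianNaiveBayes":
        n = len(y)
        for c in sorted(set(y)):
            rows = [x for x, label in zip(X, y) if label == c]
            self.priors[c] = len(rows) / n
            cols = list(zip(*rows))  # per-feature columns for this class
            mu = [sum(col) / len(col) for col in cols]
            var = [max(sum((v - m) ** 2 for v in col) / len(col), self.var_floor)
                   for col, m in zip(cols, mu)]
            self.means[c], self.vars[c] = mu, var
        return self

    def _log_joint(self, x: Sequence[float], c: str) -> float:
        """log P(c) + sum_j log N(x_j; mu_cj, var_cj), i.e. an unnormalized log posterior."""
        log_p = math.log(self.priors[c])
        for v, m, s2 in zip(x, self.means[c], self.vars[c]):
            log_p += -0.5 * math.log(2.0 * math.pi * s2) - (v - m) ** 2 / (2.0 * s2)
        return log_p

    def predict(self, x: Sequence[float]) -> str:
        return max(self.priors, key=lambda c: self._log_joint(x, c))
```

Trained on a few labeled samples, such as low slip with steady progress ("free") versus high slip with near-zero progress ("entrapped"), the model labels a reading of `[0.88, 0.01]` as "entrapped".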
