SPASS: Scientific Prominence Active Search System with Deep Image Captioning Network

Sep 10, 2018

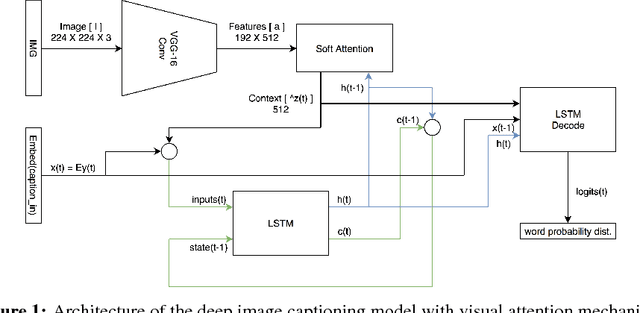

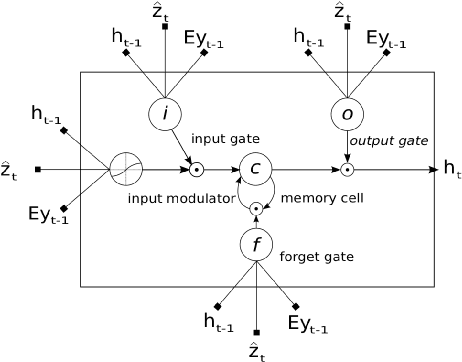

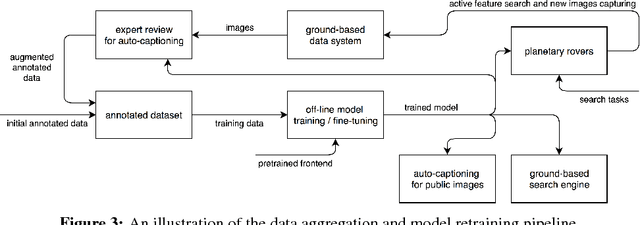

Planetary exploration missions with Mars rovers are complex and generally require elaborate task planning by human experts, from the path to take to the images to capture. NASA has used this process to acquire over 22 million images from Mars. To increase the degree of automation, and thus the efficiency, of this process, we propose a system that lets planetary rovers actively search captured images for the prominence of prespecified scientific features. Scientists specify such search tasks in natural language and upload them to a rover, where the deployed system continuously captions captured images with a deep image captioning network and compares the auto-generated captions to the prespecified search tasks using certain metrics, so as to prioritize those images for transmission. As a beneficial side effect, the proposed system can also be deployed to ground-based planetary data systems as a content-based search engine.
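The caption-to-task comparison described above can be illustrated with a minimal sketch. The abstract does not name the metrics used, so the Jaccard token overlap below is an assumed stand-in, not the authors' method. The function names (`tokenize`, `jaccard`, `prioritize`), the image identifiers, and the example captions are all hypothetical, and the captions are hand-written placeholders for what a captioning network might output.

```python
import re


def tokenize(text):
    """Lowercase a sentence and return its set of alphabetic word tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(query, caption):
    """Jaccard similarity between the token sets of two sentences.

    Assumed metric for illustration only; the source says "certain metrics".
    """
    q, c = tokenize(query), tokenize(caption)
    if not q or not c:
        return 0.0
    return len(q & c) / len(q | c)


def prioritize(query, captions):
    """Return (image_id, score) pairs sorted from most to least relevant.

    `captions` maps an image identifier to its auto-generated caption.
    """
    scored = [(image_id, jaccard(query, text)) for image_id, text in captions.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical captions standing in for the captioning network's output.
captions = {
    "img_001": "flat sandy terrain with small scattered pebbles",
    "img_002": "layered rock outcrop with light toned veins",
    "img_003": "rover wheel tracks on dark sand",
}

# Hypothetical search task written by a scientist in natural language.
query = "layered rock outcrop with veins"

ranking = prioritize(query, captions)
for image_id, score in ranking:
    print(f"{image_id}: {score:.3f}")
```

In this toy setting, the image whose caption shares the most words with the search task is ranked first for transmission. A deployed system would likely need a metric that is more robust to paraphrase than raw token overlap.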
