Deepak Singh

AsterNav: Autonomous Aerial Robot Navigation In Darkness Using Passive Computation

Jan 24, 2026

Abstract:Autonomous aerial navigation in absolute darkness is crucial for post-disaster search and rescue operations, which often take place amid disaster-zone power outages. Yet, due to resource constraints, tiny aerial robots, perfectly suited for these operations, are unable to navigate safely in the darkness to find survivors. In this paper, we present an autonomous aerial robot that navigates in the dark by combining an Infra-Red (IR) monocular camera with a large-aperture coded lens and structured light, without external infrastructure such as GPS or motion capture. Our approach obtains depth-dependent defocus cues (each structured light point appears as a depth-dependent pattern), which act as a strong prior for our AsterNet deep depth estimation model. The model is trained in simulation on data generated with a simple optical model and transfers directly to the real world without any fine-tuning or retraining. AsterNet runs onboard the robot at 20 Hz on an NVIDIA Jetson Orin$^\text{TM}$ Nano. Furthermore, our network is robust to changes in the structured light pattern and in the relative placement of the pattern emitter and IR camera, leading to simplified and cost-effective construction. We successfully evaluate and demonstrate our proposed depth navigation approach, AsterNav, using depth from AsterNet, in many real-world experiments using only onboard sensing and computation, including dark matte obstacles and thin ropes (diameter 6.25 mm), achieving an overall success rate of 95.5% with unknown object shapes, locations and materials. To the best of our knowledge, this is the first work on monocular, structured-light-based quadrotor navigation in absolute darkness.

The Marine Debris Forward-Looking Sonar Datasets

Mar 28, 2025

Abstract:Sonar sensing is fundamental for underwater robotics, but is limited by the capabilities of AI systems, which need large training datasets. Public data in sonar modalities is lacking. This paper presents the Marine Debris Forward-Looking Sonar datasets, with three different settings (watertank, turntable, flooded quarry) that increase dataset diversity, and multiple computer vision tasks: object classification, object detection, semantic segmentation, patch matching, and unsupervised learning. We provide a full dataset description, basic analysis and initial results for some tasks. We expect the research community will benefit from this dataset, which is publicly available at https://doi.org/10.5281/zenodo.15101686

Sparse Distributed Memory is a Continual Learner

Mar 20, 2023

Abstract:Continual learning is a problem for artificial neural networks that their biological counterparts are adept at solving. Building on work using Sparse Distributed Memory (SDM) to connect a core neural circuit with the powerful Transformer model, we create a modified Multi-Layered Perceptron (MLP) that is a strong continual learner. We find that every component of our MLP variant translated from biology is necessary for continual learning. Our solution is also free from any memory replay or task information, and introduces novel methods to train sparse networks that may be broadly applicable.

* 9 Pages. ICLR Acceptance

CCO-VOXEL: Chance Constrained Optimization over Uncertain Voxel-Grid Representation for Safe Trajectory Planning

Oct 06, 2021

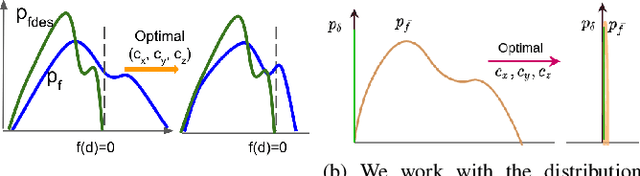

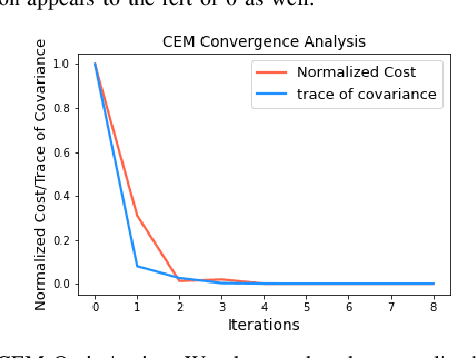

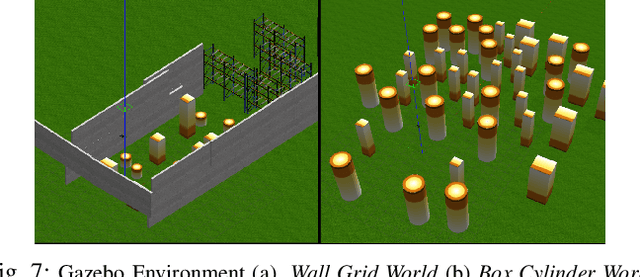

Abstract:We present CCO-VOXEL: the very first chance-constrained optimization (CCO) algorithm that can compute trajectory plans with probabilistic safety guarantees in real-time directly on the voxel-grid representation of the world. CCO-VOXEL maps the distribution over the distance to the closest obstacle to a distribution over collision-constraint violation and computes an optimal trajectory that minimizes the violation probability. Importantly, unlike existing works, we never assume the nature of the sensor uncertainty or the probability distribution of the resulting collision-constraint violations. We leverage the notion of Hilbert Space embedding of distributions and Maximum Mean Discrepancy (MMD) to compute a tractable surrogate for the original chance-constrained optimization problem, and employ a combination of A*-based graph search and the Cross-Entropy Method to obtain its minimum. We show tangible performance gains in collision avoidance and trajectory smoothness as a consequence of our probabilistic formulation vis-à-vis state-of-the-art planning methods that do not account for such nonparametric noise. Finally, we also show how a combination of low-dimensional feature embedding and pre-caching of the Kernel Matrices of MMD allows us to achieve real-time performance in simulations as well as in implementations on the on-board commodity hardware that controls the quadrotor flight.
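To make the MMD surrogate more concrete, here is a minimal sketch of the biased empirical estimate of squared MMD between two sets of scalar samples under a Gaussian (RBF) kernel. It is not the CCO-VOXEL implementation: the function names, the `gamma` bandwidth, and the idea of comparing sampled constraint violations against an all-zero "no violation" sample set are illustrative assumptions.

```python
import math


def rbf_kernel(x, y, gamma=1.0):
    """Gaussian (RBF) kernel between two scalar samples (gamma is an assumed bandwidth)."""
    return math.exp(-gamma * (x - y) ** 2)


def mmd_squared(xs, ys, gamma=1.0):
    """Biased empirical estimate of squared MMD between sample sets xs and ys."""
    n, m = len(xs), len(ys)
    k_xx = sum(rbf_kernel(a, b, gamma) for a in xs for b in xs) / (n * n)
    k_yy = sum(rbf_kernel(a, b, gamma) for a in ys for b in ys) / (m * m)
    k_xy = sum(rbf_kernel(a, b, gamma) for a in xs for b in ys) / (n * m)
    return k_xx + k_yy - 2.0 * k_xy


# Hypothetical usage: sampled collision-constraint violations for two candidate
# trajectories, each compared against an ideal all-zero (no violation) sample set.
safe_reference = [0.0, 0.0, 0.0, 0.0]
violations_a = [0.0, 0.0, 0.1, 0.0]   # mostly safe trajectory
violations_b = [0.8, 1.2, 0.9, 1.5]   # frequently violating trajectory

cost_a = mmd_squared(violations_a, safe_reference)
cost_b = mmd_squared(violations_b, safe_reference)
```

Under this sketch, a trajectory whose violation samples lie closer to zero receives a smaller cost, which is the kind of quantity a sampling-based optimizer could minimize.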

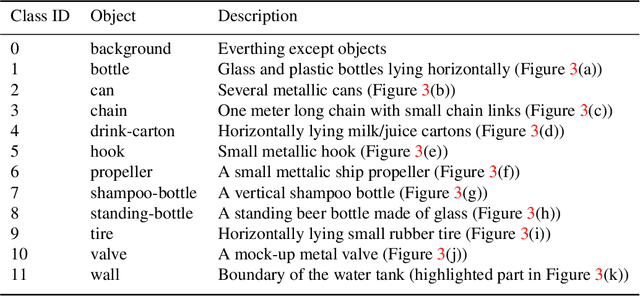

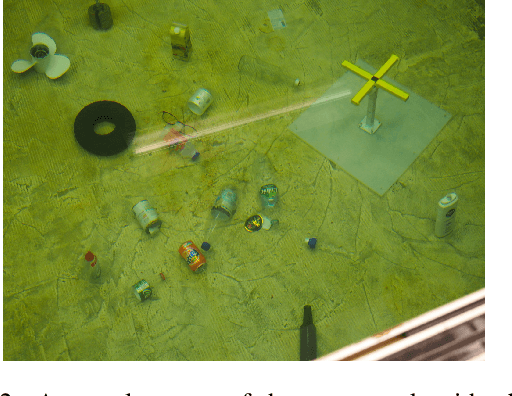

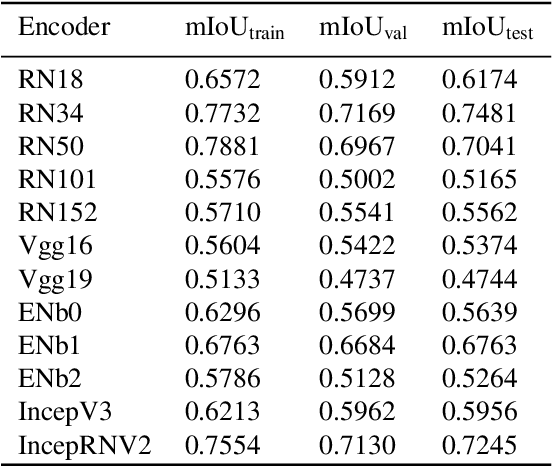

The Marine Debris Dataset for Forward-Looking Sonar Semantic Segmentation

Aug 15, 2021

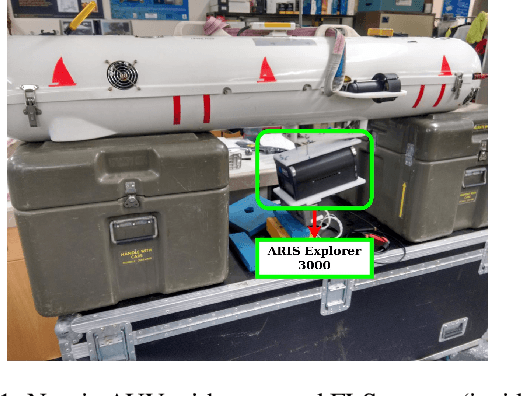

Abstract:Accurate detection and segmentation of marine debris is important for keeping water bodies clean. This paper presents a novel dataset for marine debris segmentation collected using a Forward Looking Sonar (FLS). The dataset consists of 1868 FLS images captured using an ARIS Explorer 3000 sensor. The objects used to produce this dataset include typical household marine debris and distractor marine objects (tires, hooks, valves, etc.), divided into 11 classes plus a background class. The performance of state-of-the-art semantic segmentation architectures with a variety of encoders has been analyzed on this dataset and is presented as baseline results. Since the images are grayscale, no pretrained weights have been used. Comparisons are made using Intersection over Union (IoU). The best performing model is Unet with a ResNet34 backbone at 0.7481 mIoU. The dataset is available at https://github.com/mvaldenegro/marine-debris-fls-datasets/
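For readers unfamiliar with the metric, the following is a minimal sketch of mean Intersection over Union (mIoU) computed over flattened label maps. The function name and the choice to skip classes absent from both prediction and ground truth are assumptions; the paper's exact evaluation protocol may differ.

```python
def mean_iou(pred, target, num_classes):
    """Mean IoU over classes for two equal-length sequences of integer class labels.

    Classes that appear in neither pred nor target are skipped (an assumed convention).
    """
    ious = []
    for c in range(num_classes):
        intersection = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example with two classes (0 = background, 1 = debris) on four pixels.
pred = [0, 0, 1, 1]
target = [0, 1, 1, 1]
score = mean_iou(pred, target, num_classes=2)  # class 0: 1/2, class 1: 2/3
```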

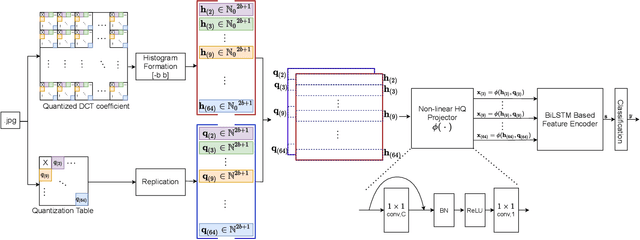

Q-matrix Unaware Double JPEG Detection using DCT-Domain Deep BiLSTM Network

Apr 10, 2021

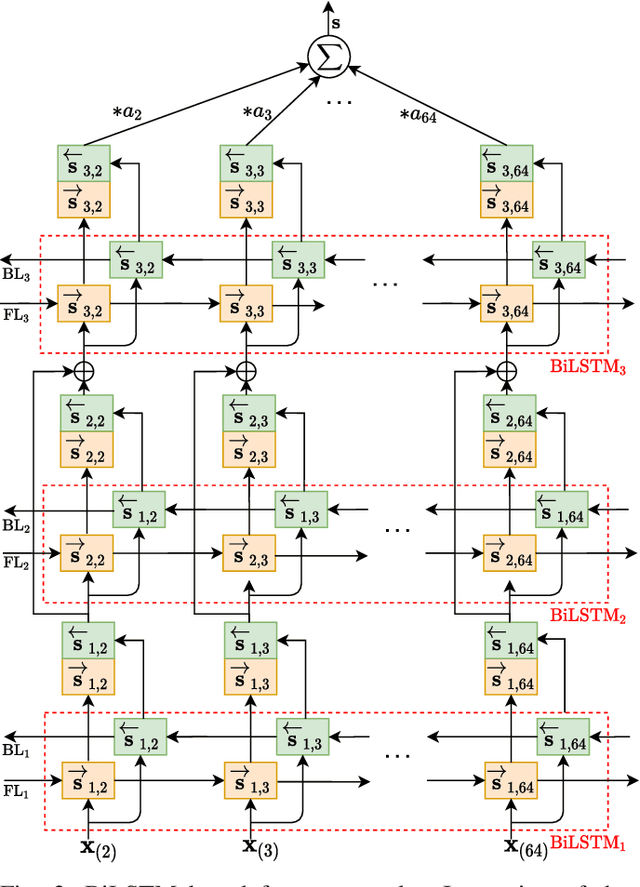

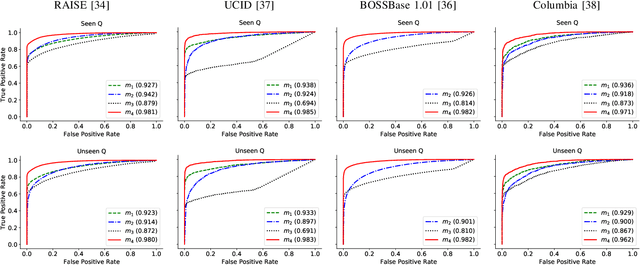

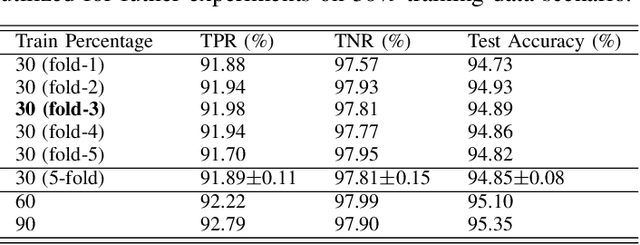

Abstract:Double JPEG compression detection has received much attention in recent years due to its applicability as a forensic tool for the most widely used JPEG file format. Existing state-of-the-art CNN-based methods either use histograms of all the frequencies or rely on heuristics to select histograms of specific low frequencies to classify single and double compressed images. However, even amidst lower frequencies of double compressed images/patches, histograms of all the frequencies do not have distinguishable features to separate them from single compressed images. This paper directly extracts the quantized DCT coefficients from the JPEG images without decompressing them in the pixel domain, obtains all AC frequencies' histograms, uses a module based on $1\times 1$ depth-wise convolutions to learn the inherent relation between each histogram and the corresponding q-factor, and utilizes a tailor-made BiLSTM network for selectively encoding these feature vector sequences. The proposed system outperforms several baseline methods on a relatively large and diverse publicly available dataset of single and double compressed patches. Another essential aspect of any single vs. double JPEG compression detection system is handling the scenario where test patches are compressed with entirely different quantization matrices (Q-matrices) than those used during training; different camera manufacturers and image processing software generally use their own customized quantization matrices. A set of extensive experiments shows that the proposed system trained on a single dataset generalizes well to other datasets compressed with completely unseen quantization matrices and outperforms the state-of-the-art methods in both seen and unseen quantization matrix scenarios.

Multi-Objective Evolutionary approach for the Performance Improvement of Learners using Ensembling Feature selection and Discretization Technique on Medical data

Apr 16, 2020

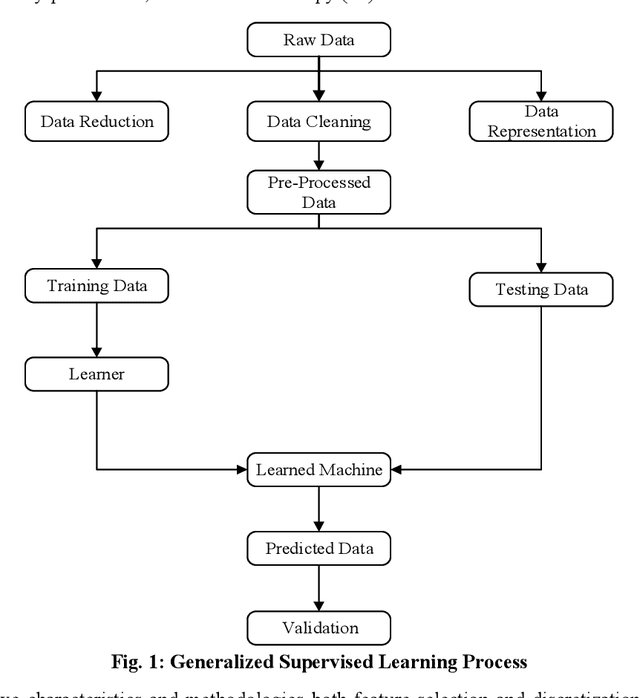

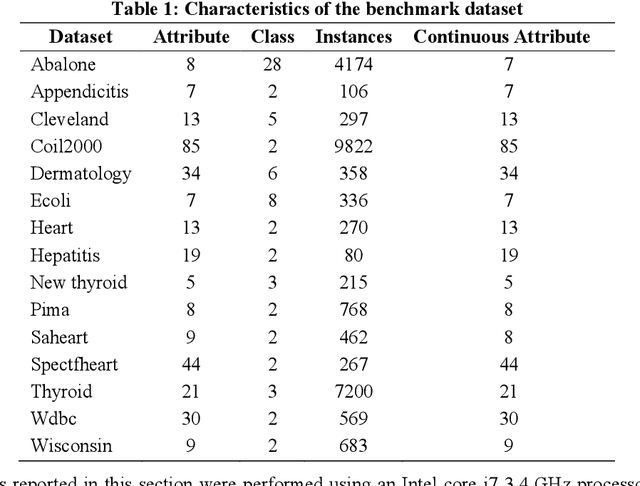

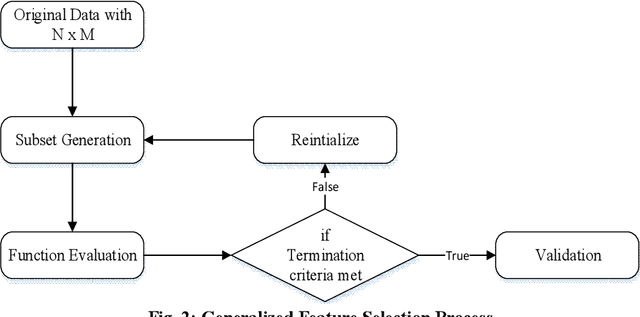

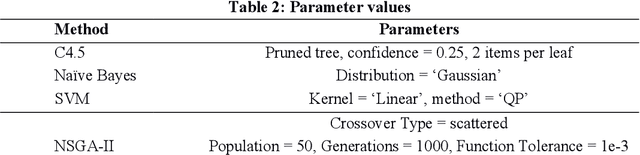

Abstract:Biomedical data is filled with continuous real values; these values in the feature set tend to create problems such as underfitting, the curse of dimensionality, and an increased misclassification rate because of higher variance. In response, preprocessing techniques applied to the dataset minimize these side effects and have shown success in maintaining adequate accuracy. Feature selection and discretization are two necessary preprocessing steps that have been effectively employed to handle data redundancies in biomedical data. However, previous works have not integrated feature selection and discretization into a unified approach to the data redundancy problem, which has left the field disjoint and fragmented. This paper proposes a novel multi-objective dimensionality reduction framework, which incorporates both discretization and feature reduction as an ensemble model for performing feature selection and discretization. The selection of optimal features and the categorization of discretized and non-discretized features from the feature subset are governed by a multi-objective genetic algorithm (NSGA-II). The two objectives, minimizing the error rate during feature selection and maximizing the information gain during discretization, are considered as fitness criteria.
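As an illustration of the second objective, here is a minimal sketch of the information gain obtained by discretizing one continuous feature at a single cut point. The binary split, the function names, and the toy data are assumptions for illustration; the paper's discretization scheme and its NSGA-II encoding are not reproduced here.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a non-empty list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(values, labels, threshold):
    """Entropy reduction from splitting a continuous feature at `threshold`."""
    left = [lab for v, lab in zip(values, labels) if v <= threshold]
    right = [lab for v, lab in zip(values, labels) if v > threshold]
    n = len(labels)
    # Weighted entropy of the two bins; empty bins contribute nothing.
    conditional = sum(len(part) / n * entropy(part) for part in (left, right) if part)
    return entropy(labels) - conditional


# Toy feature: a cut at 2.5 separates the two classes perfectly.
values = [1.0, 2.0, 3.0, 4.0]
labels = [0, 0, 1, 1]
gain_good = information_gain(values, labels, threshold=2.5)
gain_bad = information_gain(values, labels, threshold=0.5)
```

A multi-objective search could score each candidate cut point by such a gain while separately tracking classifier error, rather than collapsing both into one number.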
