David Brie

CRAN, CNRS, UL

Coupled tensor models for probability mass function estimation: Part II, Uniqueness of the model

Sep 05, 2025

Abstract:In this paper, the uniqueness properties of a coupled tensor model are studied. This new coupled tensor model underlies a new method called Partial Coupled Tensor Factorization of 3D marginals, or PCTF3D. The method estimates probability mass functions by coupling 3D marginals, seen as order-3 tensors. The core novelty of PCTF3D's approach (detailed in the Part I article) lies in the partial coupling, which consists in choosing the 3D marginals to be coupled. Tensor methods are ubiquitous in statistical learning applications, their biggest advantage being strong uniqueness properties. In this paper, the uniqueness properties of PCTF3D's constrained coupled low-rank model are assessed. While the probabilistic constraints of the coupled model are handled properly, it is shown that uniqueness depends strongly on the coupling used in PCTF3D. A Jacobian algorithm providing the maximum recoverable rank is proposed, and the different coupling strategies presented in the Part I article are then examined with respect to their uniqueness properties. Finally, an identifiability bound is given for a so-called Cartesian coupling, which improves on sufficient bounds from the literature.
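To make the idea of 3D marginals as order-3 tensors concrete, here is a minimal sketch. It assumes the joint probability mass function follows a rank-R canonical polyadic model with nonnegative, sum-to-one factors; the abstract only speaks of a constrained coupled low-rank model, so this is an assumption. The sizes, the rank, and all names are hypothetical. Under that assumption, every 3D marginal is itself a low-rank tensor built from the same factors, which is what makes coupling marginals possible.

```python
import numpy as np

rng = np.random.default_rng(0)

def random_simplex_columns(rows, cols):
    """Return a rows x cols matrix whose columns are probability vectors."""
    m = rng.random((rows, cols))
    return m / m.sum(axis=0, keepdims=True)

R = 3                 # rank of the model (hypothetical)
sizes = [2, 3, 4, 5]  # alphabet sizes of four discrete variables (hypothetical)

# Probabilistic constraints: the weights and every factor column sum to one.
lam = rng.random(R)
lam /= lam.sum()
factors = [random_simplex_columns(size, R) for size in sizes]

# Full joint PMF as an order-4 tensor (only feasible for tiny examples).
joint = np.einsum('r,ar,br,cr,dr->abcd', lam, *factors)

# 3D marginal of variables (0, 1, 3), obtained by summing out variable 2 ...
marginal_from_joint = joint.sum(axis=2)

# ... equals the order-3 tensor built from the matching three factors.
marginal_from_factors = np.einsum(
    'r,ar,br,dr->abd', lam, factors[0], factors[1], factors[3]
)
```

The two marginals agree because each column of the summed-out factor adds up to one. Different 3D marginals share factors, and choosing which triples to use is the coupling choice the abstract refers to.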

Personalized Coupled Tensor Decomposition for Multimodal Data Fusion: Uniqueness and Algorithms

Dec 02, 2024

Abstract:Coupled tensor decompositions (CTDs) perform data fusion by linking factors from different datasets. Although many CTDs have already been proposed, current works do not address important challenges of data fusion, where: 1) the datasets are often heterogeneous, constituting different "views" of a given phenomenon (multimodality); and 2) each dataset can contain personalized or dataset-specific information, constituting distinct factors that are not coupled with other datasets. In this work, we introduce a personalized CTD framework tackling these challenges. A flexible model is proposed where each dataset is represented as the sum of two components, one related to a common tensor through a multilinear measurement model, and another specific to each dataset. Both the common and distinct components are assumed to admit a polyadic decomposition. This generalizes several existing CTD models. We provide conditions for specific and generic uniqueness of the decomposition that are easy to interpret. These conditions employ uni-mode uniqueness of different individual datasets and properties of the measurement model. Two algorithms are proposed to compute the common and distinct components: a semi-algebraic one and a coordinate-descent optimization method. Experimental results illustrate the advantage of the proposed framework compared with state-of-the-art approaches.
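The model described in this abstract can be sketched numerically. The snippet below is an illustration only. It assumes the multilinear measurement model applies one matrix per mode to the common tensor; the dimensions, the ranks, and the names `P1`, `P2`, `P3` are hypothetical and not taken from the paper.

```python
import numpy as np

def cp_tensor(A, B, C):
    """Order-3 tensor from a polyadic decomposition with factors A, B, C."""
    return np.einsum('ir,jr,kr->ijk', A, B, C)

rng = np.random.default_rng(0)

# Common tensor of rank 2 (hypothetical sizes 6 x 5 x 4).
A, B, C = (rng.standard_normal((n, 2)) for n in (6, 5, 4))
common = cp_tensor(A, B, C)

# Assumed measurement model for one dataset: one matrix applied per mode.
P1 = rng.standard_normal((3, 6))
P2 = rng.standard_normal((5, 5))
P3 = rng.standard_normal((4, 4))
measured = np.einsum('ai,bj,ck,ijk->abc', P1, P2, P3, common)

# Dataset-specific (distinct) component of rank 1, not shared with other datasets.
D1, D2, D3 = (rng.standard_normal((n, 1)) for n in (3, 5, 4))
dataset = measured + cp_tensor(D1, D2, D3)

# The measured common part is again polyadic, with factors P1 @ A, P2 @ B, P3 @ C.
measured_from_factors = cp_tensor(P1 @ A, P2 @ B, P3 @ C)
```

Because the mode-wise measurements act directly on the factors, each dataset is the sum of two polyadic terms, one coupled through the common factors and one free.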

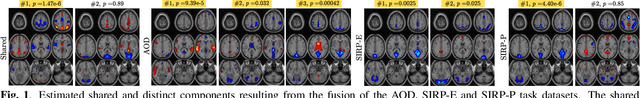

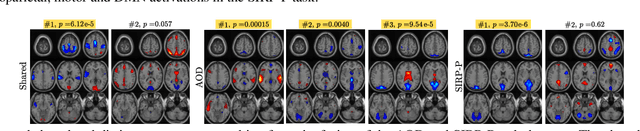

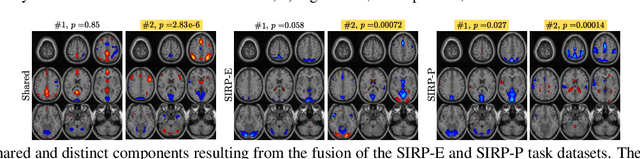

Coupled CP tensor decomposition with shared and distinct components for multi-task fMRI data fusion

Nov 25, 2022

Abstract:Discovering components that are shared across multiple datasets, alongside dataset-specific features, has great potential for studying the relationships between different subjects or tasks in functional Magnetic Resonance Imaging (fMRI) data. Coupled matrix and tensor factorization approaches have been useful for flexible data fusion, or decomposition to extract features that can be used in multiple ways. However, existing methods do not directly recover shared and dataset-specific components, and therefore require post-processing steps involving additional hyperparameter selection. In this paper, we propose a tensor-based framework for multi-task fMRI data fusion, using a partially constrained canonical polyadic (CP) decomposition model. Unlike previous approaches, the proposed method directly recovers shared and dataset-specific components, leading to results that are directly interpretable. A strategy to select a highly reproducible solution to the decomposition is also proposed. We evaluate the proposed methodology on real fMRI data from three tasks, and show that the proposed method finds meaningful components that clearly identify group differences between patients with schizophrenia and healthy controls.

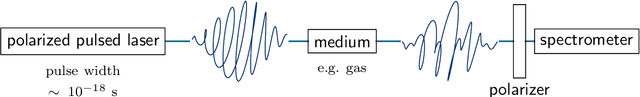

Polarimetric phase retrieval: uniqueness and algorithms

Jun 26, 2022

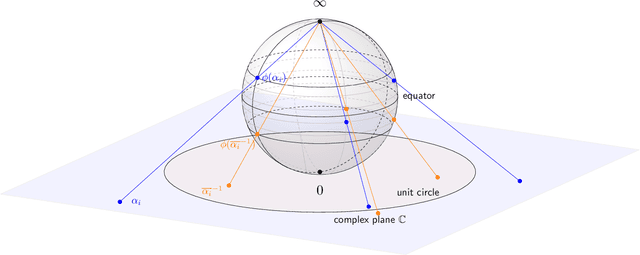

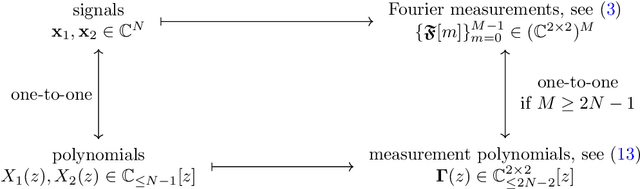

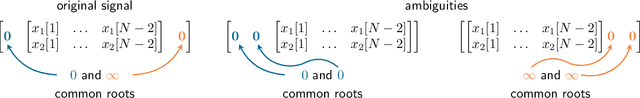

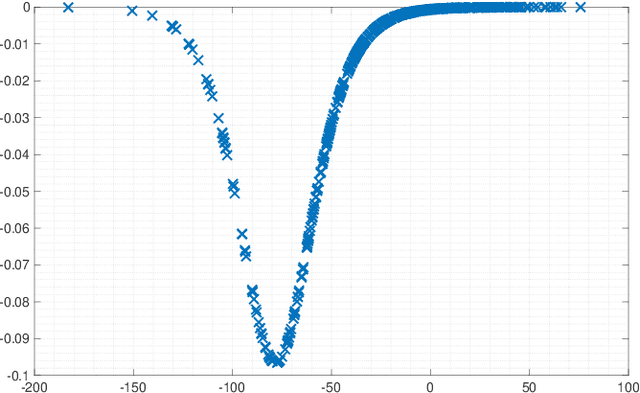

Abstract:This work introduces a novel Fourier phase retrieval model, called polarimetric phase retrieval, which enables a systematic use of polarization information in Fourier phase retrieval problems. We provide a complete characterization of the uniqueness properties of this new model by unraveling equivalences with a peculiar polynomial factorization problem. We introduce two different but complementary categories of reconstruction methods. The first one is algebraic and relies on approximate greatest common divisor computations using Sylvester matrices. The second one carefully adapts existing algorithms for Fourier phase retrieval, namely semidefinite positive relaxation and Wirtinger-Flow, to solve the polarimetric phase retrieval problem. Finally, a set of numerical experiments permits a detailed assessment of the numerical behavior and relative performance of each proposed reconstruction strategy. We further highlight a reconstruction strategy that combines both approaches for scalable, computationally efficient and asymptotically MSE-optimal performance.

Tensor-based framework for training flexible neural networks

Jun 25, 2021

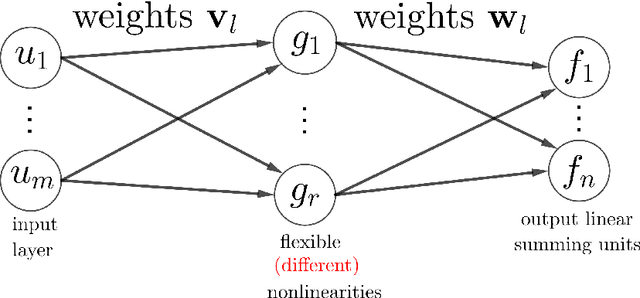

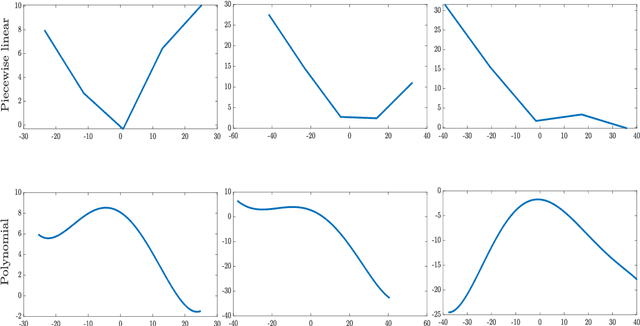

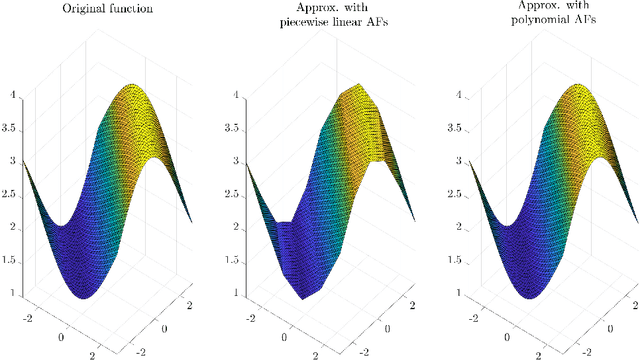

Abstract:Activation functions (AFs) are an important part of the design of neural networks (NNs), and their choice plays a predominant role in the performance of a NN. In this work, we are particularly interested in the estimation of flexible activation functions using tensor-based solutions, where the AFs are expressed as a weighted sum of predefined basis functions. To do so, we propose a new learning algorithm which solves a constrained coupled matrix-tensor factorization (CMTF) problem. This technique fuses the first and zeroth order information of the NN, where the first-order information is contained in a Jacobian tensor, following a constrained canonical polyadic decomposition (CPD). The proposed algorithm can handle different decomposition bases. The goal of this method is to compress large pretrained NN models, by replacing subnetworks, i.e., one or multiple layers of the original network, by a new flexible layer. The approach is applied to a pretrained convolutional neural network (CNN) used for character classification.
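As a rough illustration of an activation function written as a weighted sum of predefined basis functions, the sketch below fits the weights of a polynomial basis to `tanh` by ordinary least squares. The polynomial basis, the degree, and the function names are hypothetical choices. Plain least squares is used here only to show the parameterization; it is not the constrained CMTF algorithm proposed in the paper.

```python
import numpy as np

def basis(t, degree):
    """Hypothetical predefined basis: monomials 1, t, ..., t**degree."""
    return np.stack([t**k for k in range(degree + 1)], axis=-1)

def flexible_af(t, coeffs):
    """Flexible activation: weighted sum of the basis functions."""
    return basis(t, len(coeffs) - 1) @ coeffs

# Fit the weights so that the flexible AF mimics tanh on [-2, 2].
t = np.linspace(-2.0, 2.0, 201)
target = np.tanh(t)
coeffs, *_ = np.linalg.lstsq(basis(t, 5), target, rcond=None)

approx = flexible_af(t, coeffs)
max_err = float(np.max(np.abs(approx - target)))
```

Only the weight vector `coeffs` is learned; the basis stays fixed. This is why the abstract can state that the algorithm handles different decomposition bases.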

A general framework for constrained convex quaternion optimization

Feb 04, 2021

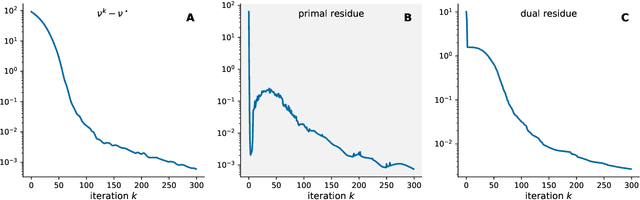

Abstract:This paper introduces a general framework for solving constrained convex quaternion optimization problems in the quaternion domain. To soundly derive these new results, the proposed approach leverages the recently developed generalized $\mathbb{HR}$-calculus together with the equivalence between the original quaternion optimization problem and its augmented real-domain counterpart. This new framework simultaneously provides rigorous theoretical foundations as well as elegant, compact quaternion-domain formulations for optimization problems in quaternion variables. Our contributions are threefold: (i) we introduce the general form for convex constrained optimization problems in quaternion variables, (ii) we extend fundamental notions of convex optimization to the quaternion case, namely Lagrangian duality and optimality conditions, (iii) we develop the quaternion alternating direction method of multipliers (Q-ADMM) as a general purpose quaternion optimization algorithm. The relevance of the proposed methodology is demonstrated by solving two typical examples of constrained convex quaternion optimization problems arising in signal processing. Our results open new avenues in the design, analysis and efficient implementation of quaternion-domain optimization procedures.

Homotopy based algorithms for $\ell_0$-regularized least-squares

Mar 18, 2015

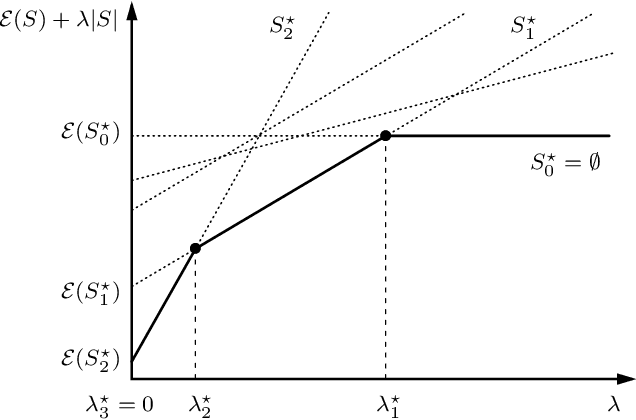

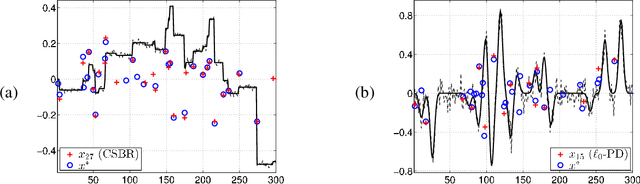

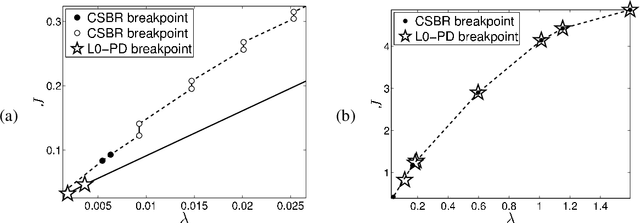

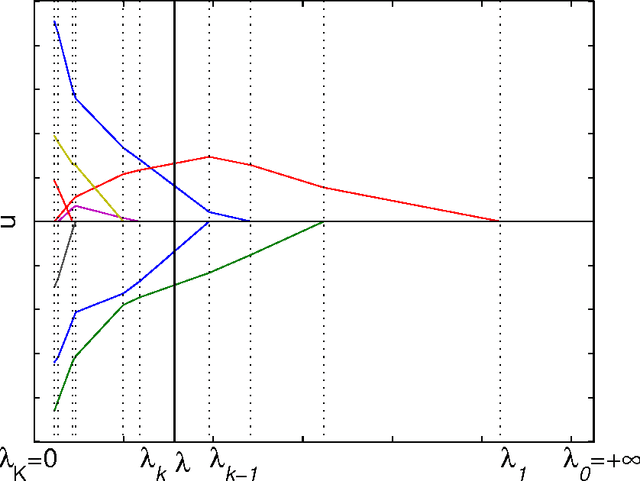

Abstract:Sparse signal restoration is usually formulated as the minimization of a quadratic cost function $\|y-Ax\|_2^2$, where A is a dictionary and x is an unknown sparse vector. It is well-known that imposing an $\ell_0$ constraint leads to an NP-hard minimization problem. The convex relaxation approach has received considerable attention, where the $\ell_0$-norm is replaced by the $\ell_1$-norm. Among the many efficient $\ell_1$ solvers, the homotopy algorithm minimizes $\|y-Ax\|_2^2+\lambda\|x\|_1$ with respect to x for a continuum of $\lambda$'s. It is inspired by the piecewise regularity of the $\ell_1$-regularization path, also referred to as the homotopy path. In this paper, we address the minimization problem $\|y-Ax\|_2^2+\lambda\|x\|_0$ for a continuum of $\lambda$'s and propose two heuristic search algorithms for $\ell_0$-homotopy. Continuation Single Best Replacement is a forward-backward greedy strategy extending the Single Best Replacement algorithm, previously proposed for $\ell_0$-minimization at a given $\lambda$. The adaptive search of the $\lambda$-values is inspired by $\ell_1$-homotopy. $\ell_0$ Regularization Path Descent is a more complex algorithm exploiting the structural properties of the $\ell_0$-regularization path, which is piecewise constant with respect to $\lambda$. Both algorithms are empirically evaluated for difficult inverse problems involving ill-conditioned dictionaries. Finally, we show that they can be easily coupled with usual methods of model order selection.
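The piecewise-constant structure of the $\ell_0$-regularization path can be checked on a tiny problem by exhaustive search. The sketch below is not Continuation Single Best Replacement or $\ell_0$ Regularization Path Descent; it enumerates all supports, which is only feasible for a handful of columns. The problem sizes and function names are hypothetical.

```python
import itertools
import numpy as np

def best_error_per_sparsity(A, y):
    """errors[k] = smallest squared residual over all supports of size k."""
    n = A.shape[1]
    errors = [float(y @ y)]  # k = 0 corresponds to x = 0
    for k in range(1, n + 1):
        best = np.inf
        for support in itertools.combinations(range(n), k):
            cols = A[:, list(support)]
            coef, *_ = np.linalg.lstsq(cols, y, rcond=None)
            residual = y - cols @ coef
            best = min(best, float(residual @ residual))
        errors.append(best)
    return np.array(errors)

def l0_path(errors, lambdas):
    """Optimal sparsity for each lambda: argmin over k of errors[k] + lambda * k."""
    ks = np.arange(len(errors))
    return np.array([int(np.argmin(errors + lam * ks)) for lam in lambdas])

rng = np.random.default_rng(0)
A = rng.standard_normal((8, 5))  # small dictionary (hypothetical)
y = rng.standard_normal(8)

errors = best_error_per_sparsity(A, y)
lambdas = np.linspace(0.0, float(y @ y) + 1.0, 50)
sparsity = l0_path(errors, lambdas)
```

Since the penalized cost at sparsity k is `errors[k] + lambda * k`, the optimal sparsity can only change at a finite number of breakpoints, so `sparsity` is a step function that never increases as `lambda` grows.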

* 38 pages

A sufficient condition on monotonic increase of the number of nonzero entry in the optimizer of L1 norm penalized least-square problem

Apr 19, 2011

Abstract:The $\ell_1$-norm based optimization is widely used in signal processing, especially in recent compressed sensing theory. This paper studies the solution path of the $\ell_1$-norm penalized least-squares problem, whose constrained form is known as the Least Absolute Shrinkage and Selection Operator (LASSO). A solution path is the set of all optimizers obtained as the hyperparameter (Lagrange multiplier) varies. Studying the solution path is of great significance for viewing and understanding the tradeoff between the approximation and regularization terms. If the solution path of a given problem is known, it can help us find the optimal hyperparameter under a given criterion such as the Akaike Information Criterion. In this paper we present a sufficient condition for the $\ell_1$-norm penalized least-squares problem. Under this sufficient condition, the number of nonzero entries in the optimizer or solution vector increases monotonically when the hyperparameter decreases. We also generalize the result to the often used total variation case, where the $\ell_1$-norm is taken over the first-order derivative of the solution vector. We prove that the proposed condition has intrinsic connections with the condition given by Donoho et al. \cite{Donoho08} and the positive cone condition of Efron et al. \cite{Efron04}. However, the proposed condition does not need to assume the sparsity level of the signal, as required by the condition of Donoho et al., and is easier to verify in practical applications than the positive cone condition of Efron et al.
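The monotone growth of the number of nonzero entries is easy to observe in one special case. When the dictionary has orthonormal columns, the minimizer of $\tfrac{1}{2}\|y-Ax\|_2^2+\lambda\|x\|_1$ is given in closed form by soft thresholding. The sketch below uses that case purely as an illustration; the factor $\tfrac{1}{2}$, the sizes, and the names are assumptions, and this is not the sufficient condition derived in the paper.

```python
import numpy as np

def soft_threshold(z, lam):
    """Entrywise soft thresholding: shrink magnitudes by lam, clip at zero."""
    return np.sign(z) * np.maximum(np.abs(z) - lam, 0.0)

rng = np.random.default_rng(1)

# Dictionary with orthonormal columns (hypothetical sizes), so Q.T @ Q = I.
Q, _ = np.linalg.qr(rng.standard_normal((10, 4)))
y = rng.standard_normal(10)
z = Q.T @ y

# Sweep the hyperparameter downward, from above max|z| to zero.
lambdas = np.linspace(np.max(np.abs(z)) + 0.1, 0.0, 30)
counts = [int(np.count_nonzero(soft_threshold(z, lam))) for lam in lambdas]
```

Each entry becomes nonzero once `lam` drops below the matching `|z|` value and stays nonzero afterwards, so `counts` never decreases along the sweep. For general dictionaries this can fail, which is why a sufficient condition is of interest.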
