Darko Katic

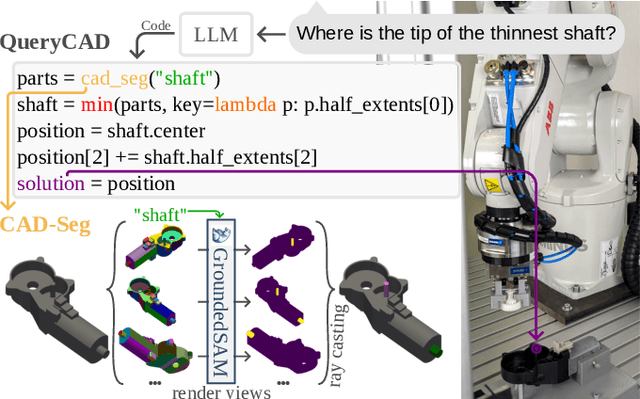

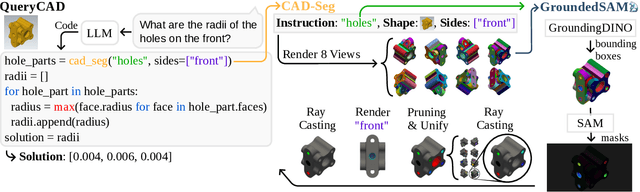

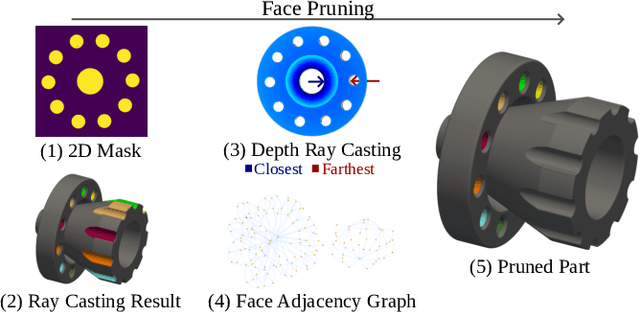

QueryCAD: Grounded Question Answering for CAD Models

Sep 16, 2024

Abstract:CAD models are widely used in industry and are essential for robotic automation processes. However, these models are rarely considered in novel AI-based approaches, such as the automatic synthesis of robot programs, as there are no readily available methods that would allow CAD models to be incorporated for the analysis, interpretation, or extraction of information. To address these limitations, we propose QueryCAD, the first system designed for CAD question answering, enabling the extraction of precise information from CAD models using natural language queries. QueryCAD incorporates SegCAD, an open-vocabulary instance segmentation model we developed to identify and select specific parts of the CAD model based on part descriptions. We further propose a CAD question answering benchmark to evaluate QueryCAD and establish a foundation for future research. Lastly, we integrate QueryCAD within an automatic robot program synthesis framework, validating its ability to enhance deep-learning solutions for robotics by enabling them to process CAD models (https://claudius-kienle.github.com/querycad).

Shadow Program Inversion with Differentiable Planning: A Framework for Unified Robot Program Parameter and Trajectory Optimization

Sep 13, 2024

Abstract:This paper presents SPI-DP, a novel first-order optimizer capable of optimizing robot programs with respect to both high-level task objectives and motion-level constraints. To that end, we introduce DGPMP2-ND, a differentiable collision-free motion planner for serial N-DoF kinematics, and integrate it into an iterative, gradient-based optimization approach for generic, parameterized robot program representations. SPI-DP allows first-order optimization of planned trajectories and program parameters with respect to objectives such as cycle time or smoothness subject to e.g. collision constraints, while enabling humans to understand, modify or even certify the optimized programs. We provide a comprehensive evaluation on two practical household and industrial applications.

MuTT: A Multimodal Trajectory Transformer for Robot Skills

Jul 22, 2024

Abstract:High-level robot skills represent an increasingly popular paradigm in robot programming. However, configuring the skills' parameters for a specific task remains a manual and time-consuming endeavor. Existing approaches for learning or optimizing these parameters often require numerous real-world executions or do not work in dynamic environments. To address these challenges, we propose MuTT, a novel encoder-decoder transformer architecture designed to predict environment-aware executions of robot skills by integrating vision, trajectory, and robot skill parameters. Notably, we pioneer the fusion of vision and trajectory, introducing a novel trajectory projection. Furthermore, we illustrate MuTT's efficacy as a predictor when combined with a model-based robot skill optimizer. This approach facilitates the optimization of robot skill parameters for the current environment, without the need for real-world executions during optimization. Designed for compatibility with any representation of robot skills, MuTT demonstrates its versatility across three comprehensive experiments, showcasing superior performance across two different skill representations.

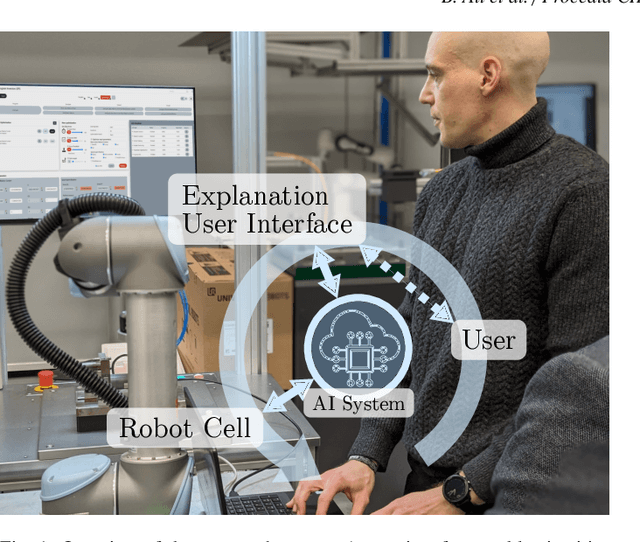

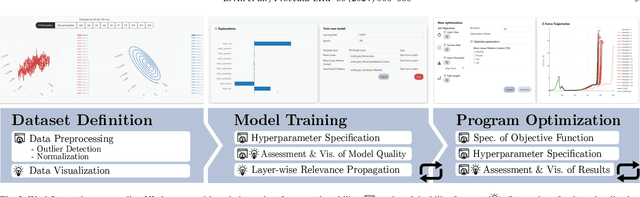

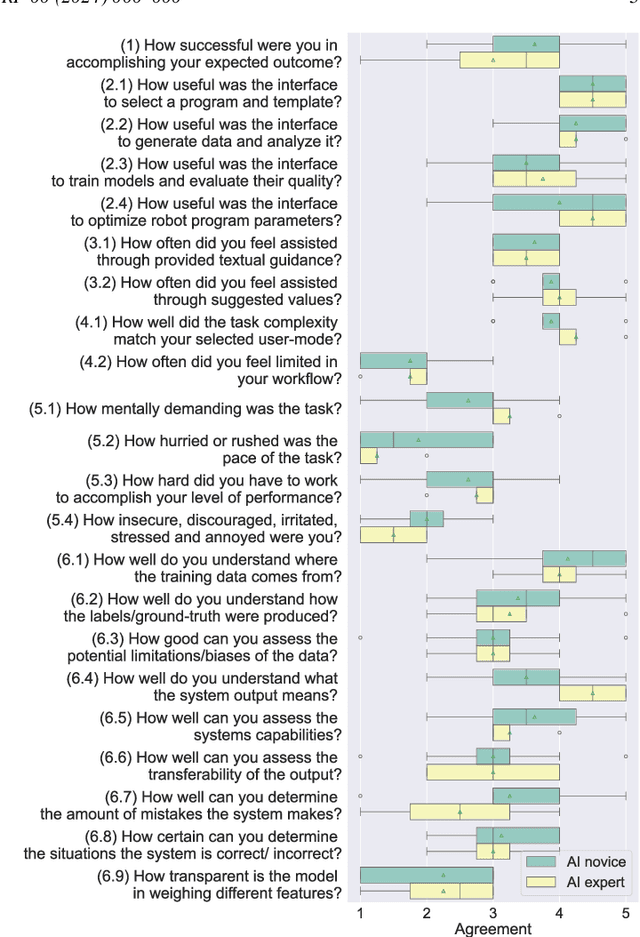

Human-AI Interaction in Industrial Robotics: Design and Empirical Evaluation of a User Interface for Explainable AI-Based Robot Program Optimization

Apr 30, 2024

Abstract:While recent advances in deep learning have demonstrated its transformative potential, its adoption for real-world manufacturing applications remains limited. We present an Explanation User Interface (XUI) for a state-of-the-art deep learning-based robot program optimizer which provides both naive and expert users with different user experiences depending on their skill level, as well as Explainable AI (XAI) features to facilitate the application of deep learning methods in real-world applications. To evaluate the impact of the XUI on task performance, user satisfaction and cognitive load, we present the results of a preliminary user survey and propose a study design for a large-scale follow-up study.

BANSAI: Towards Bridging the AI Adoption Gap in Industrial Robotics with Neurosymbolic Programming

Apr 21, 2024

Abstract:Over the past decade, deep learning helped solve manipulation problems across all domains of robotics. At the same time, industrial robots continue to be programmed overwhelmingly using traditional program representations and interfaces. This paper undertakes an analysis of this "AI adoption gap" from an industry practitioner's perspective. In response, we propose the BANSAI approach (Bridging the AI Adoption Gap via Neurosymbolic AI). It systematically leverages principles of neurosymbolic AI to establish data-driven, subsymbolic program synthesis and optimization in modern industrial robot programming workflow. BANSAI conceptually unites several lines of prior research and proposes a path toward practical, real-world validation.

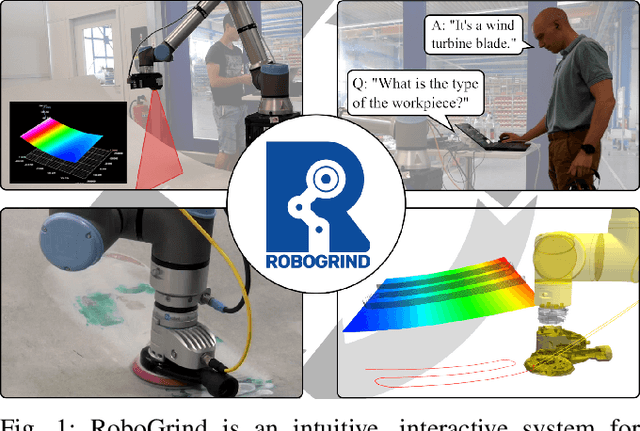

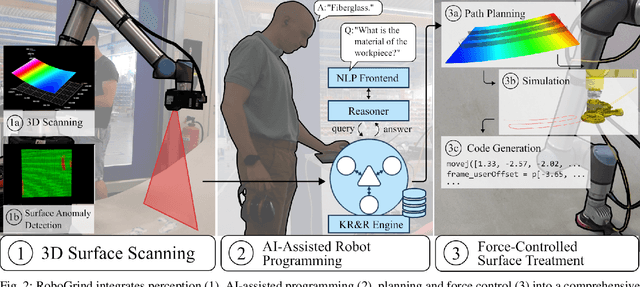

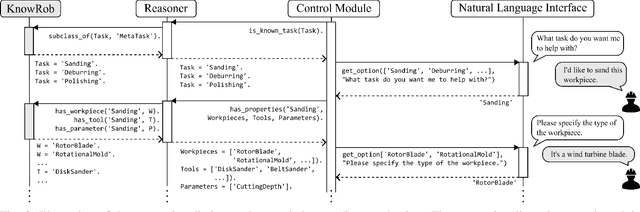

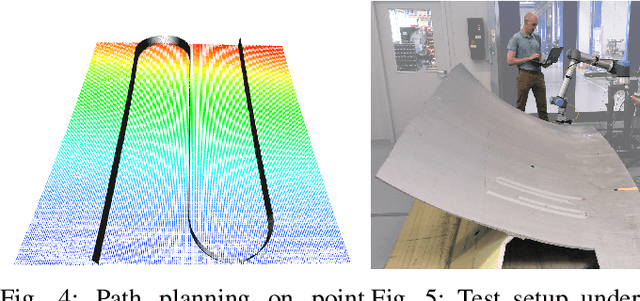

RoboGrind: Intuitive and Interactive Surface Treatment with Industrial Robots

Feb 27, 2024

Abstract:Surface treatment tasks such as grinding, sanding or polishing are a vital step of the value chain in many industries, but are notoriously challenging to automate. We present RoboGrind, an integrated system for the intuitive, interactive automation of surface treatment tasks with industrial robots. It combines a sophisticated 3D perception pipeline for surface scanning and automatic defect identification, an interactive voice-controlled wizard system for the AI-assisted bootstrapping and parameterization of robot programs, and an automatic planning and execution pipeline for force-controlled robotic surface treatment. RoboGrind is evaluated both under laboratory and real-world conditions in the context of refabricating fiberglass wind turbine blades.

EfficientPPS: Part-aware Panoptic Segmentation of Transparent Objects for Robotic Manipulation

Dec 21, 2023

Abstract:The use of autonomous robots for assistance tasks in hospitals has the potential to free up qualified staff and improve patient care. However, the ubiquity of deformable and transparent objects in hospital settings poses significant challenges to vision-based perception systems. We present EfficientPPS, a neural architecture for part-aware panoptic segmentation that provides robots with semantically rich visual information for grasping and manipulation tasks. We also present an unsupervised data collection and labelling method to reduce the need for human involvement in the training process. EfficientPPS is evaluated on a dataset containing real-world hospital objects and demonstrated to be robust and efficient in grasping transparent transfusion bags with a collaborative robot arm.

* 8 pages, 8 figures, presented at the 56th International Symposium on Robotics (ISR Europe)

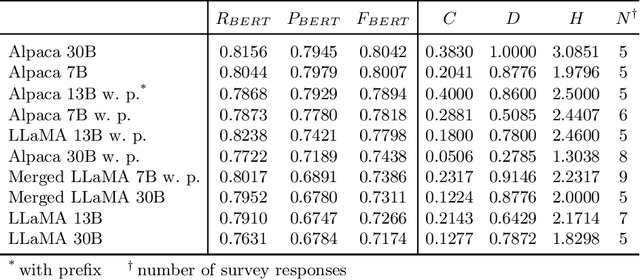

Domain-Specific Fine-Tuning of Large Language Models for Interactive Robot Programming

Dec 21, 2023

Abstract:Industrial robots are applied in a widening range of industries, but robot programming mostly remains a task limited to programming experts. We propose a natural language-based assistant for programming of advanced, industrial robotic applications and investigate strategies for domain-specific fine-tuning of foundation models with limited data and compute.

Knowledge-Driven Robot Program Synthesis from Human VR Demonstrations

Jun 05, 2023

Abstract:Aging societies, labor shortages and increasing wage costs call for assistance robots capable of autonomously performing a wide array of real-world tasks. Such open-ended robotic manipulation requires not only powerful knowledge representations and reasoning (KR&R) algorithms, but also methods for humans to instruct robots what tasks to perform and how to perform them. In this paper, we present a system for automatically generating executable robot control programs from human task demonstrations in virtual reality (VR). We leverage common-sense knowledge and game engine-based physics to semantically interpret human VR demonstrations, as well as an expressive and general task representation and automatic path planning and code generation, embedded into a state-of-the-art cognitive architecture. We demonstrate our approach in the context of force-sensitive fetch-and-place for a robotic shopping assistant. The source code is available at https://github.com/ease-crc/vr-program-synthesis.

Heuristic-free Optimization of Force-Controlled Robot Search Strategies in Stochastic Environments

Jul 15, 2022

Abstract:In both industrial and service domains, a central benefit of the use of robots is their ability to quickly and reliably execute repetitive tasks. However, even relatively simple peg-in-hole tasks are typically subject to stochastic variations, requiring search motions to find relevant features such as holes. While search improves robustness, it comes at the cost of increased runtime: More exhaustive search will maximize the probability of successfully executing a given task, but will significantly delay any downstream tasks. This trade-off is typically resolved by human experts according to simple heuristics, which are rarely optimal. This paper introduces an automatic, data-driven and heuristic-free approach to optimize robot search strategies. By training a neural model of the search strategy on a large set of simulated stochastic environments, conditioning it on few real-world examples and inverting the model, we can infer search strategies which adapt to the time-variant characteristics of the underlying probability distributions, while requiring very few real-world measurements. We evaluate our approach on two different industrial robots in the context of spiral and probe search for THT electronics assembly.
