Daniel Martin

Misaligned by Design: Incentive Failures in Machine Learning

Nov 10, 2025

Abstract: The cost of error in many high-stakes settings is asymmetric: misdiagnosing pneumonia when absent is an inconvenience, but failing to detect it when present can be life-threatening. Because of this, artificial intelligence (AI) models used to assist such decisions are frequently trained with asymmetric loss functions that incorporate human decision-makers' trade-offs between false positives and false negatives. In two focal applications, we show that this standard alignment practice can backfire. In both cases, it would be better to train the machine learning model with a loss function that ignores the human's objective and then adjust predictions ex post according to that objective. We rationalize this result using an economic model of incentive design with endogenous information acquisition. The key insight from our theoretical framework is that machine classifiers perform not one but two incentivized tasks: choosing how to classify and learning how to classify. We show that while the adjustments engineers use correctly incentivize choosing, they can simultaneously reduce the incentives to learn. Our formal treatment of the problem reveals that methods embraced for their intuitive appeal can in fact misalign human and machine objectives in predictable ways.
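As a minimal illustration of the "train with a symmetric loss, then adjust ex post" alternative, the sketch below applies the textbook expected-cost decision rule to probabilities from an unweighted model: predict positive whenever p * c_FN > (1 - p) * c_FP. The function names and cost values are hypothetical, and this is the generic Bayes-decision threshold, not necessarily the exact adjustment used in the paper.

```python
import numpy as np


def cost_sensitive_threshold(cost_fp: float, cost_fn: float) -> float:
    """Probability above which predicting 'positive' minimizes expected cost.

    Predicting positive costs (1 - p) * cost_fp in expectation; predicting
    negative costs p * cost_fn. Positive is cheaper when
    p > cost_fp / (cost_fp + cost_fn).
    """
    return cost_fp / (cost_fp + cost_fn)


def adjust_ex_post(probs, cost_fp: float, cost_fn: float) -> np.ndarray:
    """Turn probabilities from a model trained with an unweighted loss into
    decisions that reflect the human's asymmetric error costs."""
    probs = np.asarray(probs, dtype=float)
    return probs > cost_sensitive_threshold(cost_fp, cost_fn)
```

With c_FP = 1 and c_FN = 9 (a missed case judged nine times as costly as a false alarm), the threshold drops from 0.5 to 0.1, so a case with estimated probability 0.2 is flagged while one at 0.05 is not.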

AI Oversight and Human Mistakes: Evidence from Centre Court

Jan 30, 2024

Abstract: Powered by the increasing predictive capabilities of machine learning algorithms, artificial intelligence (AI) systems have begun to be used to overrule human mistakes in many settings. We provide the first field evidence that this AI oversight carries psychological costs that can affect human decision-making. We investigate one of the highest-visibility settings in which AI oversight has occurred: the Hawk-Eye review of umpires in top tennis tournaments. We find that umpires lowered their overall mistake rate after the introduction of Hawk-Eye review, consistent with rational inattention given the psychological costs of being overruled by AI. We also find that umpires increased the rate at which they called balls in, which produced a shift from making Type II errors (calling a ball out when in) to Type I errors (calling a ball in when out). We structurally estimate the psychological costs of being overruled by AI using a model of rationally inattentive umpires, and our results suggest that because of these costs, umpires cared twice as much about Type II errors under AI oversight.

AI Use in Manuscript Preparation for Academic Journals

Nov 19, 2023

Abstract: The emergent abilities of Large Language Models (LLMs), which power tools like ChatGPT and Bard, have produced both excitement and worry about how AI will impact academic writing. In response to rising concerns about AI use, authors of academic publications may decide to voluntarily disclose any AI tools they use to revise their manuscripts, and journals and conferences could begin mandating disclosure and/or turn to using detection services, as many teachers have done with student writing in class settings. Given these looming possibilities, we investigate whether academics view it as necessary to report AI use in manuscript preparation and how detectors react to the use of AI in academic writing.

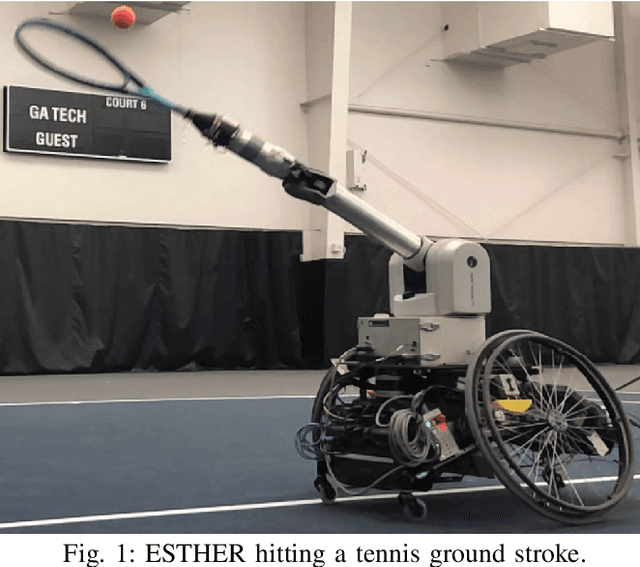

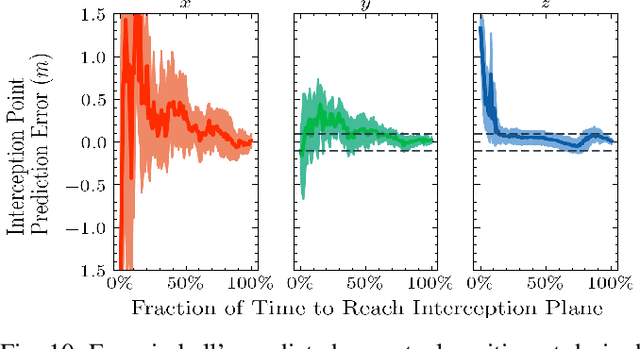

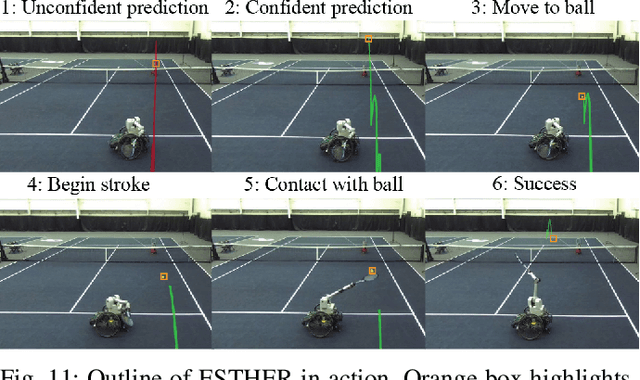

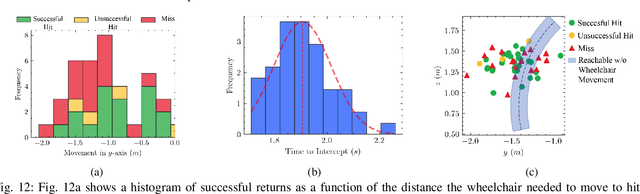

Athletic Mobile Manipulator System for Robotic Wheelchair Tennis

Oct 05, 2022

Abstract: Athletics are a quintessential and universal expression of humanity. From French monks who in the 12th century invented jeu de paume, the precursor to modern lawn tennis, back to the K'iche' people who played the Maya Ballgame as a form of religious expression over three thousand years ago, humans have sought to train their minds and bodies to excel in sporting contests. Advances in robotics are opening up the possibility of robots in sports. Yet, key challenges remain, as most prior works in robotics for sports are limited to pristine sensing environments, do not require significant force generation, or are on miniaturized scales unsuited for joint human-robot play. In this paper, we propose the first open-source, autonomous robot for playing regulation wheelchair tennis. We demonstrate the performance of our full-stack system in executing ground strokes and evaluate each of the system's hardware and software components. The goal of this paper is to (1) inspire more research in human-scale robot athletics and (2) establish the first baseline towards developing a robot in future work that can serve as a teammate for mixed, human-robot doubles play. Our paper contributes to the science of systems design and poses a set of key challenges for the robotics community to address in striving towards a vision of human-robot collaboration in sports.

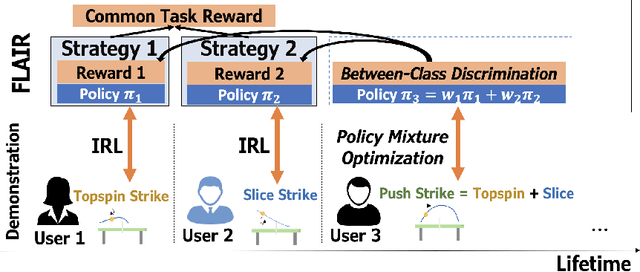

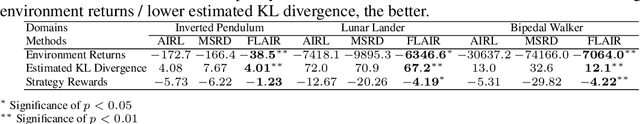

Fast Lifelong Adaptive Inverse Reinforcement Learning from Demonstrations

Sep 24, 2022

Abstract: Learning from Demonstration (LfD) approaches empower end-users to teach robots novel tasks via demonstrations of the desired behaviors, democratizing access to robotics. However, current LfD frameworks are capable neither of fast adaptation to heterogeneous human demonstrations nor of large-scale deployment in ubiquitous robotics applications. In this paper, we propose a novel LfD framework, Fast Lifelong Adaptive Inverse Reinforcement Learning (FLAIR). Our approach (1) leverages learned strategies to construct policy mixtures for fast adaptation to new demonstrations, allowing for quick end-user personalization; (2) distills common knowledge across demonstrations, achieving accurate task inference; and (3) expands its model only when needed in lifelong deployments, maintaining a concise set of prototypical strategies that can approximate all behaviors via policy mixtures. We empirically validate that FLAIR achieves adaptability (i.e., the robot adapts to heterogeneous, user-specific task preferences), efficiency (i.e., the robot achieves sample-efficient adaptation), and scalability (i.e., the model grows sublinearly with the number of demonstrations while maintaining high performance). FLAIR surpasses benchmarks across three continuous control tasks with an average 57% improvement in policy returns and an average of 78% fewer episodes required for demonstration modeling using policy mixtures. Finally, we demonstrate the success of FLAIR in a real-robot table tennis task.

Calibrating for Class Weights by Modeling Machine Learning

May 10, 2022

Abstract: A much-studied issue is the extent to which the confidence scores provided by machine learning algorithms are calibrated to ground-truth probabilities. Our starting point is that calibration is seemingly incompatible with class weighting, a technique often employed when one class is less common (class imbalance) or in the hope of achieving some external objective (cost-sensitive learning). We provide a model-based explanation for this incompatibility and use our anthropomorphic model to generate a simple method of recovering likelihoods from an algorithm that is miscalibrated due to class weighting. We validate this approach in the binary pneumonia detection task of Rajpurkar, Irvin, Zhu, et al. (2017).
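One standard way to undo class weighting can be sketched as follows, assuming the model has converged to the minimizer of a weighted cross-entropy with weight w on positives and 1 on negatives. At true probability p, that minimizer outputs q = wp / (wp + 1 - p), which inverts to p = q / (q + w(1 - q)). This is the familiar prior-shift correction, offered only as an illustration; it is not claimed to be the paper's anthropomorphic-model method, and the function names are hypothetical.

```python
import numpy as np


def weighted_score(p, w_pos: float):
    """Score that minimizes expected weighted log loss when the true
    positive-class probability is p and positives carry weight w_pos
    (negatives carry weight 1)."""
    p = np.asarray(p, dtype=float)
    return w_pos * p / (w_pos * p + (1.0 - p))


def recover_probability(q, w_pos: float):
    """Invert weighted_score: map a class-weighted score q back to a
    likelihood p. With w_pos = 1 this is the identity."""
    q = np.asarray(q, dtype=float)
    return q / (q + w_pos * (1.0 - q))
```

With w = 2, a case whose true probability is 0.5 is reported as 2/3, and recover_probability maps 2/3 back to 0.5.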

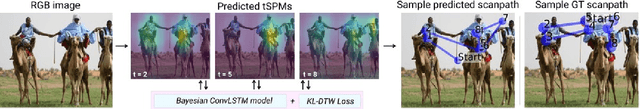

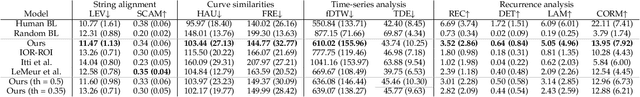

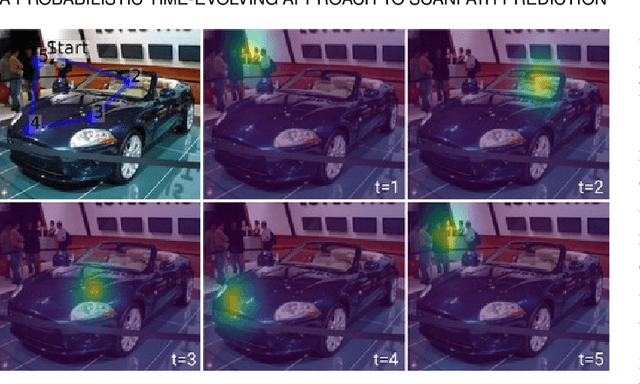

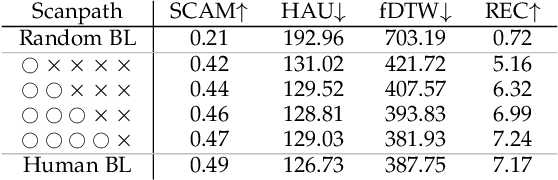

A Probabilistic Time-Evolving Approach to Scanpath Prediction

Apr 20, 2022

Abstract: Human visual attention is a complex phenomenon that has been studied for decades. Within this field, scanpath prediction is particularly challenging, due in part to inter- and intra-observer variability. Moreover, most existing approaches to scanpath prediction have focused on optimizing the prediction of a gaze point given the previous ones. In this work, we present a probabilistic time-evolving approach to scanpath prediction based on Bayesian deep learning. We optimize our model using a novel spatio-temporal loss function that combines Kullback-Leibler divergence and dynamic time warping, jointly considering the spatial and temporal dimensions of scanpaths. Our scanpath prediction framework outperforms current state-of-the-art approaches and is almost on par with the human baseline, suggesting that our model generates scanpaths whose behavior closely resembles that of real ones.
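Dynamic time warping, one of the two ingredients of the loss described above, aligns two scanpaths that may differ in length and timing. The sketch below is plain DTW on 2D gaze points with a Euclidean local cost. It is an illustration under stated assumptions, not the paper's loss, which also includes a Kullback-Leibler term and, to be trained by gradient descent, would presumably rely on a differentiable relaxation such as soft-DTW. The function name is hypothetical.

```python
import numpy as np


def dtw_distance(a, b) -> float:
    """Classic dynamic time warping distance between two sequences of
    2D gaze points, using Euclidean distance as the local cost."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n, m = len(a), len(b)
    # acc[i, j] = cost of the best alignment of a[:i] with b[:j]
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = np.linalg.norm(a[i - 1] - b[j - 1])
            acc[i, j] = cost + min(acc[i - 1, j],      # a advances, b repeats
                                   acc[i, j - 1],      # b advances, a repeats
                                   acc[i - 1, j - 1])  # both advance
    return float(acc[n, m])
```

For example, a path that lingers on its first fixation aligns with a non-lingering copy at zero cost, which is the kind of timing tolerance that motivates DTW over point-by-point comparison.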

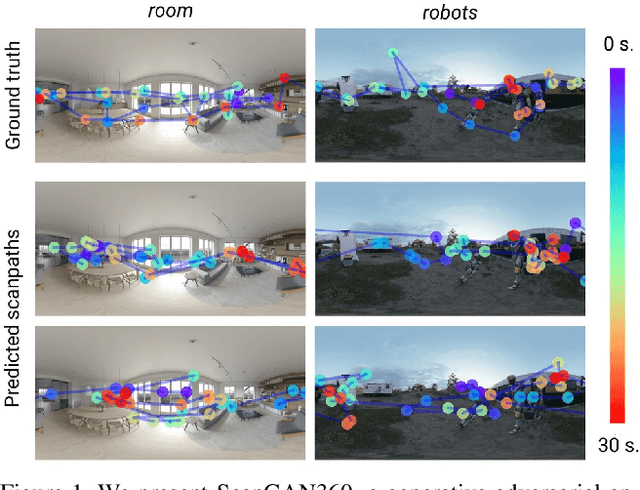

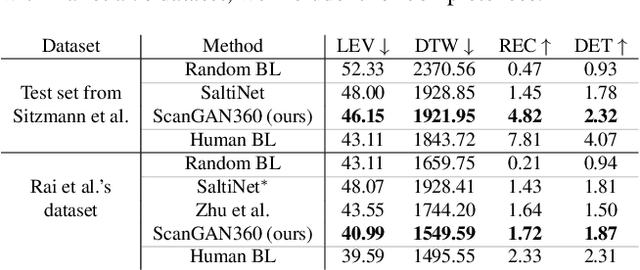

ScanGAN360: A Generative Model of Realistic Scanpaths for 360° Images

Mar 25, 2021

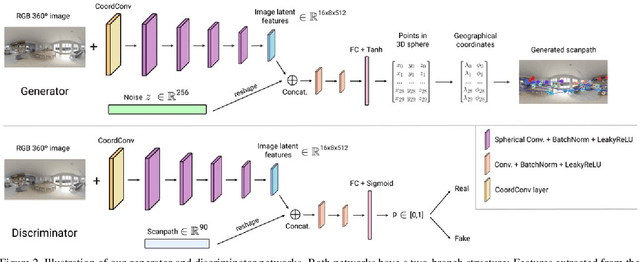

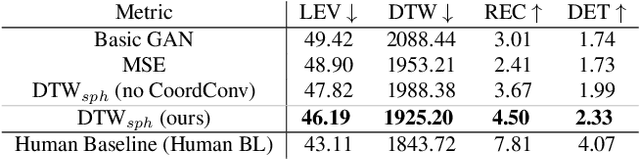

Abstract: Understanding and modeling the dynamics of human gaze behavior in 360° environments is a key challenge in computer vision and virtual reality. Generative adversarial approaches could alleviate this challenge by generating a large number of possible scanpaths for unseen images. Existing methods for scanpath generation, however, do not adequately predict realistic scanpaths for 360° images. We present ScanGAN360, a new generative adversarial approach to this challenging problem. Our generator is tailored to the specifics of 360° images representing immersive environments: it uses a spherical adaptation of dynamic time warping as its loss function and a novel parameterization of 360° scanpaths. Our scanpaths outperform those of competing approaches in quality by a large margin and are almost on par with the human baseline. ScanGAN360 thus allows fast simulation of large numbers of virtual observers whose behavior mimics that of real users, enabling a better understanding of gaze behavior and novel applications in virtual scene design.
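The abstract does not spell out the spherical adaptation of dynamic time warping, so the following is only a plausible sketch: ordinary DTW in which the local cost between two gaze points is their great-circle (angular) distance on the viewing sphere. Points are assumed to be (latitude, longitude) pairs in degrees, and all function names are hypothetical. Unlike Euclidean distance on an equirectangular image, this cost treats longitudes 179° and -179° as neighbors.

```python
import numpy as np


def to_unit_vector(lat_deg: float, lon_deg: float) -> np.ndarray:
    """Map a (latitude, longitude) gaze point in degrees to a 3D unit vector."""
    lat, lon = np.radians(lat_deg), np.radians(lon_deg)
    return np.array([np.cos(lat) * np.cos(lon),
                     np.cos(lat) * np.sin(lon),
                     np.sin(lat)])


def great_circle(p, q) -> float:
    """Angular distance in radians between two (lat, lon) points in degrees."""
    u, v = to_unit_vector(*p), to_unit_vector(*q)
    # Clip guards against dot products drifting just outside [-1, 1].
    return float(np.arccos(np.clip(np.dot(u, v), -1.0, 1.0)))


def spherical_dtw(a, b) -> float:
    """DTW between two scanpaths on the sphere, with great-circle local cost."""
    n, m = len(a), len(b)
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = great_circle(a[i - 1], b[j - 1])
            acc[i, j] = cost + min(acc[i - 1, j], acc[i, j - 1], acc[i - 1, j - 1])
    return float(acc[n, m])
```

The design choice here is only the local cost: swapping Euclidean distance for angular distance removes the seam and polar distortions of the equirectangular projection while leaving the DTW recursion unchanged.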