Daniel Elson

Nested ResNet: A Vision-Based Method for Detecting the Sensing Area of a Drop-in Gamma Probe

Oct 30, 2024

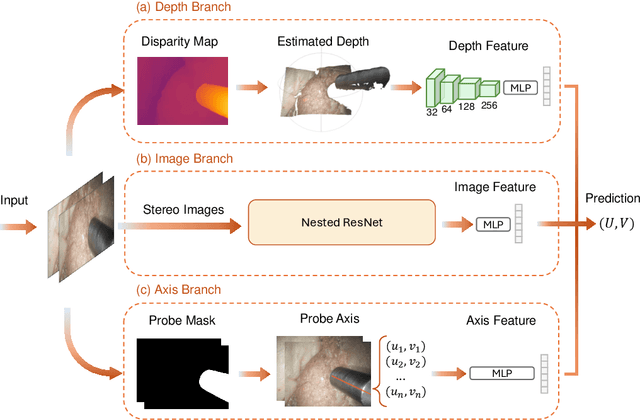

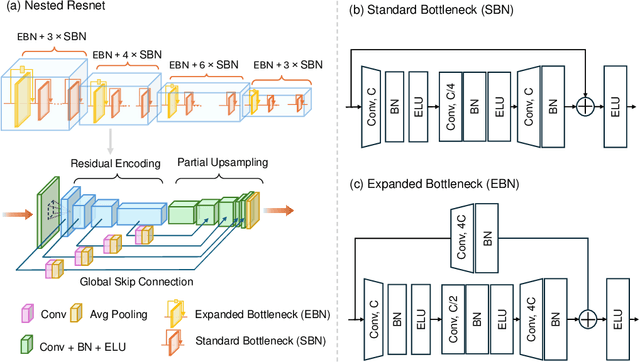

Abstract: Purpose: Drop-in gamma probes are widely used in robotic-assisted minimally invasive surgery (RAMIS) for lymph node detection. However, these devices only provide audio feedback on signal intensity, lacking the visual feedback necessary for precise localisation. Previous work attempted to predict the sensing area location using laparoscopic images, but the prediction accuracy was unsatisfactory. Improvements are needed in the deep learning-based regression approach. Methods: We introduce a three-branch deep learning framework to predict the sensing area of the probe. Specifically, we utilise the stereo laparoscopic images as input for the main branch and develop a Nested ResNet architecture. The framework also incorporates depth estimation via transfer learning and orientation guidance through probe axis sampling. The combined features from each branch enhanced the accuracy of the prediction. Results: Our approach has been evaluated on a publicly available dataset, demonstrating superior performance over previous methods. In particular, our method resulted in a 22.10% decrease in 2D mean error and a 41.67% reduction in 3D mean error. Additionally, qualitative comparisons further demonstrated the improved precision of our approach. Conclusion: With extensive evaluation, our solution significantly enhances the accuracy and reliability of sensing area predictions. This advancement enables visual feedback during the use of the drop-in gamma probe in surgery, providing surgeons with more accurate and reliable localisation.
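As a rough illustration of how figures such as a "decrease in 2D mean error" are typically computed, the sketch below averages the Euclidean distance between predicted and ground-truth points and expresses the change against a baseline as a percentage. This is an assumption about the metric, not the paper's evaluation code; the function names `mean_error` and `relative_reduction` are hypothetical.

```python
import math

def mean_error(preds, targets):
    """Mean Euclidean distance between paired points (2D or 3D tuples)."""
    # Assumed metric: average point-to-point distance over all samples.
    return sum(math.dist(p, t) for p, t in zip(preds, targets)) / len(targets)

def relative_reduction(baseline, improved):
    """Percentage decrease of `improved` relative to `baseline`."""
    return 100.0 * (baseline - improved) / baseline

# Toy usage with made-up points (not data from the paper).
baseline_err = mean_error([(0.0, 0.0), (3.0, 4.0)], [(0.0, 0.0), (0.0, 0.0)])
improved_err = mean_error([(0.0, 0.0), (0.0, 2.0)], [(0.0, 0.0), (0.0, 0.0)])
print(relative_reduction(baseline_err, improved_err))
```

The same functions work for 3D points, since `math.dist` accepts coordinates of any matching dimension.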

RGB to Hyperspectral: Spectral Reconstruction for Enhanced Surgical Imaging

Oct 17, 2024

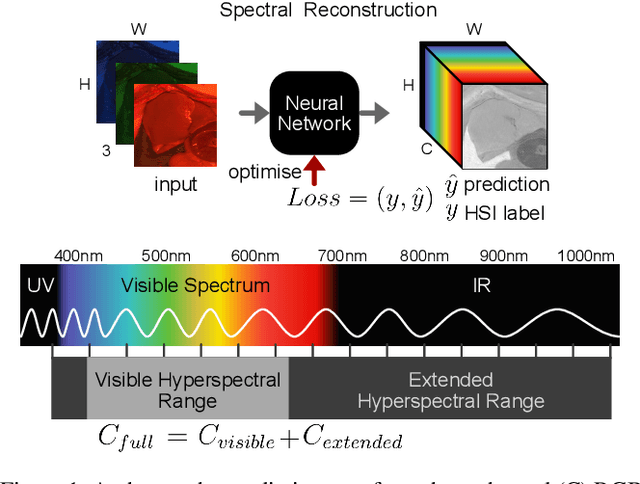

Abstract: This study investigates the reconstruction of hyperspectral signatures from RGB data to enhance surgical imaging, utilizing the publicly available HeiPorSPECTRAL dataset from porcine surgery and an in-house neurosurgery dataset. Various architectures based on convolutional neural networks (CNNs) and transformer models are evaluated using comprehensive metrics. Transformer models exhibit superior performance in terms of RMSE, SAM, PSNR and SSIM by effectively integrating spatial information to predict accurate spectral profiles, encompassing both visible and extended spectral ranges. Qualitative assessments demonstrate the capability to predict spectral profiles critical for informed surgical decision-making during procedures. Challenges associated with capturing both the visible and extended hyperspectral ranges are highlighted using the MAE, emphasizing the complexities involved. The findings open up the new research direction of hyperspectral reconstruction for surgical applications and clinical use cases in real-time surgical environments.
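For readers unfamiliar with the metrics named above, here is a minimal sketch of RMSE, SAM (read here as the spectral angle mapper, i.e. the angle between two spectra) and PSNR for a single pixel's spectrum. It assumes the standard textbook definitions, non-zero spectra, and reflectance values scaled to [0, `peak`]; it is not the study's evaluation code, and the function names are hypothetical. SSIM is omitted because it needs spatial windows rather than a single spectrum.

```python
import math

def rmse(pred, target):
    """Root-mean-square error between two equal-length spectra."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target))

def spectral_angle(pred, target):
    """Angle in radians between two spectra; 0 means identical spectral shape."""
    dot = sum(p * t for p, t in zip(pred, target))
    norm = math.sqrt(sum(p * p for p in pred)) * math.sqrt(sum(t * t for t in target))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / norm)))

def psnr(pred, target, peak=1.0):
    """Peak signal-to-noise ratio in dB, assuming values lie in [0, peak]."""
    err = rmse(pred, target)
    return math.inf if err == 0 else 20 * math.log10(peak / err)

# Toy spectra with made-up values (not data from the study).
truth = [0.20, 0.35, 0.50, 0.40]
guess = [0.22, 0.33, 0.52, 0.41]
print(rmse(guess, truth), spectral_angle(guess, truth), psnr(guess, truth))
```

The spectral angle ignores overall brightness (a uniformly scaled spectrum has an angle of zero), which is why it is usually reported alongside an amplitude-sensitive metric such as RMSE.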

CathAction: A Benchmark for Endovascular Intervention Understanding

Aug 23, 2024

Abstract: Real-time visual feedback from catheterization analysis is crucial for enhancing surgical safety and efficiency during endovascular interventions. However, existing datasets are often limited to specific tasks, small in scale, and lacking the comprehensive annotations necessary for broader endovascular intervention understanding. To tackle these limitations, we introduce CathAction, a large-scale dataset for catheterization understanding. Our CathAction dataset encompasses approximately 500,000 annotated frames for catheterization action understanding and collision detection, and 25,000 ground truth masks for catheter and guidewire segmentation. For each task, we benchmark recent related works in the field. We further discuss the challenges of endovascular interventions compared to traditional computer vision tasks and point out open research questions. We hope that CathAction will facilitate the development of endovascular intervention understanding methods that can be applied to real-world applications. The dataset is available at https://airvlab.github.io/cathdata/.

High-fidelity Endoscopic Image Synthesis by Utilizing Depth-guided Neural Surfaces

Apr 20, 2024

Abstract: In surgical oncology, screening colonoscopy plays a pivotal role in providing diagnostic assistance, such as biopsy, and facilitating surgical navigation, particularly in polyp detection. Computer-assisted endoscopic surgery has recently gained attention and amalgamated various 3D computer vision techniques, including camera localization, depth estimation, and surface reconstruction. Neural Radiance Fields (NeRFs) and Neural Implicit Surfaces (NeuS) have emerged as promising methodologies for deriving accurate 3D surface models from sets of registered images, addressing the limitations of existing colon reconstruction approaches stemming from constrained camera movement. However, inadequate tissue texture representation and scale ambiguity in monocular colonoscopic image reconstruction still degrade the final rendering results. In this paper, we introduce a novel method for colon section reconstruction by leveraging NeuS applied to endoscopic images, supplemented by a single frame of depth map. Notably, we pioneer the use of only a single depth-map frame in photorealistic reconstruction and neural rendering applications; this single depth map can be easily obtained from other monocular depth estimation networks with an object scale. Through rigorous experimentation and validation on phantom imagery, our approach demonstrates exceptional accuracy in completely rendering colon sections, even capturing unseen portions of the surface. This breakthrough opens avenues for achieving stable and consistently scaled reconstructions, promising enhanced quality in cancer screening procedures and treatment interventions.
