Songyu Xu

CineTechBench: A Benchmark for Cinematographic Technique Understanding and Generation

May 21, 2025

Abstract: Cinematography is a cornerstone of film production and appreciation, shaping mood, emotion, and narrative through visual elements such as camera movement, shot composition, and lighting. Despite recent progress in multimodal large language models (MLLMs) and video generation models, the capacity of current models to grasp and reproduce cinematographic techniques remains largely uncharted, hindered by the scarcity of expert-annotated data. To bridge this gap, we present CineTechBench, a pioneering benchmark founded on precise, manual annotation by seasoned cinematography experts across key cinematography dimensions. Our benchmark covers seven essential aspects (shot scale, shot angle, composition, camera movement, lighting, color, and focal length) and includes over 600 annotated movie images and 120 movie clips with clear cinematographic techniques. For the understanding task, we design question-answer pairs and annotated descriptions to assess MLLMs' ability to interpret and explain cinematographic techniques. For the generation task, we assess advanced video generation models on their capacity to reconstruct cinema-quality camera movements given conditions such as textual prompts or keyframes. We conduct a large-scale evaluation on 15+ MLLMs and 5+ video generation models. Our results offer insights into the limitations of current models and future directions for cinematography understanding and generation in automatic film production and appreciation. The code and benchmark can be accessed at https://github.com/PRIS-CV/CineTechBench.

Nested ResNet: A Vision-Based Method for Detecting the Sensing Area of a Drop-in Gamma Probe

Oct 30, 2024

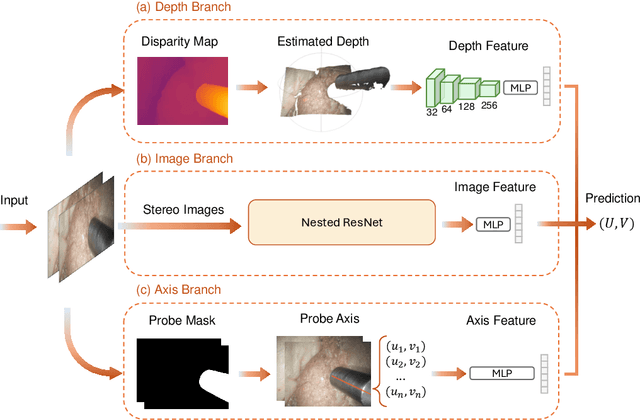

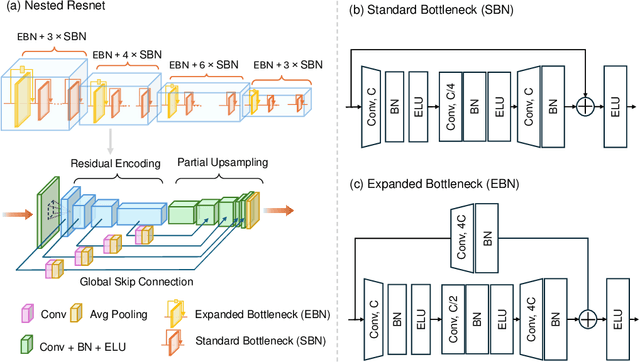

Abstract: Purpose: Drop-in gamma probes are widely used in robotic-assisted minimally invasive surgery (RAMIS) for lymph node detection. However, these devices only provide audio feedback on signal intensity, lacking the visual feedback necessary for precise localisation. Previous work attempted to predict the sensing area location using laparoscopic images, but the prediction accuracy was unsatisfactory. Improvements are needed in the deep learning-based regression approach. Methods: We introduce a three-branch deep learning framework to predict the sensing area of the probe. Specifically, we utilise the stereo laparoscopic images as input for the main branch and develop a Nested ResNet architecture. The framework also incorporates depth estimation via transfer learning and orientation guidance through probe axis sampling. The combined features from each branch enhanced the accuracy of the prediction. Results: Our approach has been evaluated on a publicly available dataset, demonstrating superior performance over previous methods. In particular, our method resulted in a 22.10% decrease in 2D mean error and a 41.67% reduction in 3D mean error. Additionally, qualitative comparisons further demonstrated the improved precision of our approach. Conclusion: With extensive evaluation, our solution significantly enhances the accuracy and reliability of sensing area predictions. This advancement enables visual feedback during the use of the drop-in gamma probe in surgery, providing surgeons with more accurate and reliable localisation.
