Constantinos Siettos

RANDSMAPs: Random-Feature/multi-Scale Neural Decoders with Mass Preservation

Jan 21, 2026

Abstract: We introduce RANDSMAPs (Random-feature/multi-scale neural decoders with Mass Preservation), numerical analysis-informed, explainable neural decoders designed to explicitly respect conservation laws when solving the challenging ill-posed pre-image problem in manifold learning. We start by proving the equivalence of vanilla random Fourier feature neural networks to Radial Basis Function interpolation and the double Diffusion Maps (based on Geometric Harmonics) decoders in the deterministic limit. We then establish the theoretical foundations for RANDSMAP and introduce its multiscale variant to capture structures across multiple scales. We formulate and derive the closed-form solution of the corresponding constrained optimization problem and prove the mass preservation property. Numerically, we assess the performance of RANDSMAP on three benchmark problems/datasets with mass preservation obtained by the Lighthill-Whitham-Richards traffic flow PDE with shock waves, 2D rotated MRI brain images, and the Hughes crowd dynamics PDEs. We demonstrate that RANDSMAPs yield high reconstruction accuracy at low computational cost and maintain mass conservation at single-machine precision. In its vanilla formulation, the scheme remains applicable to the classical pre-image problem, i.e., when mass-preservation constraints are not imposed.
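To make the idea of a mass-preserving random-feature decoder concrete, here is a minimal sketch. It is not the RANDSMAP algorithm from the paper: the toy data, the feature map, the ridge parameter `lam`, the frequency scale and the particular form of the constraint are all assumptions chosen for illustration. It fits the output weights of a random Fourier feature decoder by ridge least squares and then enforces a linear mass constraint in closed form. With a constant feature in the first position, requiring `W @ v = e` (where `v` holds the quadrature weights and `e` is the first unit vector) forces every decoded profile to integrate to one.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: unit-mass Gaussian bumps indexed by a 1D latent coordinate.
n_cells, n_train, n_features = 100, 80, 60
x = np.linspace(0.0, 1.0, n_cells)
dx = x[1] - x[0]


def density(latent):
    """Rows are density profiles normalized so that sum(rho) * dx = 1."""
    rho = np.exp(-0.5 * ((x[None, :] - latent[:, None]) / 0.1) ** 2)
    return rho / (rho.sum(axis=1, keepdims=True) * dx)


def features(latent, freqs, phases):
    """Constant feature followed by random Fourier features cos(w * y + b)."""
    rff = np.cos(latent[:, None] * freqs[None, :] + phases[None, :])
    return np.hstack([np.ones((latent.size, 1)), rff])


# Random (fixed, untrained) internal weights; the scale 10.0 is an arbitrary choice.
freqs = rng.normal(scale=10.0, size=n_features)
phases = rng.uniform(0.0, 2.0 * np.pi, size=n_features)

latent_train = rng.uniform(0.3, 0.7, size=n_train)
Phi = features(latent_train, freqs, phases)   # (n_train, n_features + 1)
X = density(latent_train)                     # (n_train, n_cells)

# Step 1: unconstrained ridge least squares for the output weights.
lam = 1e-6
A = Phi.T @ Phi + lam * np.eye(Phi.shape[1])
W0 = np.linalg.solve(A, Phi.T @ X)

# Step 2: closed-form solution of the same problem subject to W @ v = e.
# Solving the KKT conditions gives W = W0 - (W0 v - e) v^T / (v^T v).
v = np.full(n_cells, dx)
e = np.zeros(Phi.shape[1])
e[0] = 1.0
W = W0 - np.outer(W0 @ v - e, v) / (v @ v)

# Decode unseen latent points and check the mass of each reconstruction.
latent_test = rng.uniform(0.3, 0.7, size=20)
decoded = features(latent_test, freqs, phases) @ W
masses = decoded.sum(axis=1) * dx
mass_error = np.max(np.abs(masses - 1.0))
print(f"max mass error on unseen latent points: {mass_error:.2e}")
```

Because the constraint is linear in the output weights, the correction is a rank-one update of the unconstrained solution, and the mass is reproduced up to round-off for any latent input, not only for the training points.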

Next Generation Equation-Free Multiscale Modelling of Crowd Dynamics via Machine Learning

Aug 05, 2025

Abstract: Bridging the microscopic and the macroscopic modelling scales in crowd dynamics constitutes an important, open challenge for systematic numerical analysis, optimization, and control. We propose a combined manifold and machine learning approach to learn the discrete evolution operator for the emergent crowd dynamics in latent spaces from high-fidelity agent-based simulations. The proposed framework builds upon our previous works on next-generation Equation-free algorithms on learning surrogate models for high-dimensional and multiscale systems. Our approach has four stages and explicitly conserves the mass of the reconstructed dynamics in the high-dimensional space. In the first step, we derive continuous macroscopic fields (densities) from discrete microscopic data (pedestrians' positions) using kernel density estimation (KDE). In the second step, based on manifold learning, we construct a map from the macroscopic ambient space into the latent space parametrized by a few coordinates based on proper orthogonal decomposition (POD) of the corresponding density distribution. The third step involves learning surrogate reduced-order models (ROMs) in the latent space using machine learning techniques, particularly long short-term memory (LSTM) networks and multivariate autoregressive models (MVARs). Finally, we reconstruct the crowd dynamics in the high-dimensional space in terms of macroscopic density profiles. We demonstrate that the POD reconstruction of the density distribution via singular value decomposition (SVD) conserves the mass. With this "embed->learn in latent space->lift back to the ambient space" pipeline, we create an effective solution operator of the unavailable macroscopic PDE for the density evolution. For our illustrations, we use the Social Force Model to generate data in a corridor with an obstacle, imposing periodic boundary conditions. The numerical results demonstrate high accuracy, robustness, and generalizability, thus allowing for fast and accurate modelling/simulation of crowd dynamics from agent-based simulations.
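The claim that a POD reconstruction conserves mass can be checked numerically in a few lines. The sketch below uses synthetic unit-mass density snapshots (not the paper's Social Force Model data) and assumes the snapshots are mean-centered before the SVD; whether the paper centers the data in this way is an assumption here. Under that assumption every fluctuation snapshot has zero mass, so each POD mode with a nonzero singular value also has zero mass, and any truncated reconstruction carries exactly the mass of the mean profile.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic density snapshots (hypothetical data): one snapshot per column,
# each normalized so that sum(rho) * dx = 1.
n_cells, n_snapshots = 200, 50
x = np.linspace(0.0, 1.0, n_cells, endpoint=False)
dx = 1.0 / n_cells
centers = rng.uniform(0.2, 0.8, size=n_snapshots)
widths = rng.uniform(0.05, 0.15, size=n_snapshots)
snapshots = np.exp(-0.5 * ((x[:, None] - centers[None, :]) / widths[None, :]) ** 2)
snapshots /= snapshots.sum(axis=0, keepdims=True) * dx

# Center the data: the fluctuations around the mean profile carry zero mass.
mean_density = snapshots.mean(axis=1, keepdims=True)
fluctuations = snapshots - mean_density

# POD modes are the leading left singular vectors of the centered snapshot matrix.
U, s, Vt = np.linalg.svd(fluctuations, full_matrices=False)
r = 3
modes = U[:, :r]

# Embed (project onto the modes), then lift back to the ambient space.
latent = modes.T @ fluctuations
reconstruction = mean_density + modes @ latent

masses = reconstruction.sum(axis=0) * dx
mass_error = np.max(np.abs(masses - 1.0))
print(f"max mass error with {r} modes: {mass_error:.2e}")
```

The reconstruction itself is only approximate with three modes, but its mass matches the mass of the original snapshots up to round-off, independently of the truncation rank `r`.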

Fredholm Neural Networks for forward and inverse problems in elliptic PDEs

Jul 09, 2025

Abstract: Building on our previous work introducing Fredholm Neural Networks (Fredholm NNs/FNNs) for solving integral equations, we extend the framework to tackle forward and inverse problems for linear and semi-linear elliptic partial differential equations. The proposed scheme consists of a deep neural network (DNN) which is designed to represent the iterative process of fixed-point iterations for the solution of elliptic PDEs using the boundary integral method within the framework of potential theory. The number of layers, weights, biases and hyperparameters are computed in an explainable manner based on the iterative scheme, and we therefore refer to this as the Potential Fredholm Neural Network (PFNN). We show that this approach ensures both accuracy and explainability, achieving small errors in the interior of the domain, and near machine-precision on the boundary. We provide a constructive proof for the consistency of the scheme and provide explicit error bounds for both the interior and boundary of the domain, reflected in the layers of the PFNN. These error bounds depend on the approximation of the boundary function and the integral discretization scheme, both of which directly correspond to components of the Fredholm NN architecture. In this way, we provide an explainable scheme that explicitly respects the boundary conditions. We assess the performance of the proposed scheme for the solution of both the forward and inverse problem for linear and semi-linear elliptic PDEs in two dimensions.
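The fixed-point iteration that such a network is said to represent can be illustrated on a plain Fredholm integral equation of the second kind, u(x) = g(x) + ∫ K(x, y) u(y) dy on [0, 1]. The sketch below is not the PFNN for elliptic PDEs: the kernel K(x, y) = xy/2, the right-hand side g(x) = 5x/6 (chosen so that the exact solution is u(x) = x), the trapezoidal quadrature and the iteration count are all assumptions for illustration. Each pass through the loop plays the role of one layer, with the weight matrix built from kernel values times quadrature weights and g acting as the bias.

```python
import numpy as np

# Quadrature grid on [0, 1] with trapezoidal weights (an illustrative choice).
n_nodes = 201
y = np.linspace(0.0, 1.0, n_nodes)
h = y[1] - y[0]
quad_weights = np.full(n_nodes, h)
quad_weights[[0, -1]] = 0.5 * h

# Toy problem with known solution u(x) = x:
# u(x) = g(x) + int_0^1 K(x, y) u(y) dy, with K(x, y) = x * y / 2 and g(x) = 5x / 6.
kernel = 0.5 * np.outer(y, y)
g = 5.0 * y / 6.0

# "Layer" weights: W[i, j] = K(x_i, y_j) * w_j; the bias of every layer is g.
layer_weights = kernel * quad_weights[None, :]

# Fixed-point (Picard) iteration u_{k+1} = g + W u_k; one iteration per layer.
n_layers = 30
u = g.copy()
for _ in range(n_layers):
    u = g + layer_weights @ u

max_error = np.max(np.abs(u - y))
print(f"max error after {n_layers} iterations: {max_error:.2e}")
```

The iteration converges here because the integral operator is a contraction for this kernel; the remaining error comes from the quadrature rule rather than from the number of iterations.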

Enabling Local Neural Operators to perform Equation-Free System-Level Analysis

May 05, 2025

Abstract: Neural Operators (NOs) provide a powerful framework for computations involving physical laws that can be modelled by (integro-) partial differential equations (PDEs), directly learning maps between infinite-dimensional function spaces that bypass both the explicit equation identification and their subsequent numerical solving. Still, NOs have so far primarily been employed to explore the dynamical behavior as surrogates of brute-force temporal simulations/predictions. Their potential for systematic rigorous numerical system-level tasks, such as fixed-point, stability, and bifurcation analysis - crucial for predicting irreversible transitions in real-world phenomena - remains largely unexplored. Toward this aim, inspired by the Equation-Free multiscale framework, we propose and implement a framework that integrates (local) NOs with advanced iterative numerical methods in the Krylov subspace, so as to perform efficient system-level stability and bifurcation analysis of large-scale dynamical systems. Beyond fixed point, stability, and bifurcation analysis enabled by local in time NOs, we also demonstrate the usefulness of local in space as well as in space-time ("patch") NOs in accelerating the computer-aided analysis of spatiotemporal dynamics. We illustrate our framework via three nonlinear PDE benchmarks: the 1D Allen-Cahn equation, which undergoes multiple concatenated pitchfork bifurcations; the Liouville-Bratu-Gelfand PDE, which features a saddle-node tipping point; and the FitzHugh-Nagumo (FHN) model, consisting of two coupled PDEs that exhibit both Hopf and saddle-node bifurcations.

GoRINNs: Godunov-Riemann Informed Neural Networks for Learning Hyperbolic Conservation Laws

Oct 31, 2024

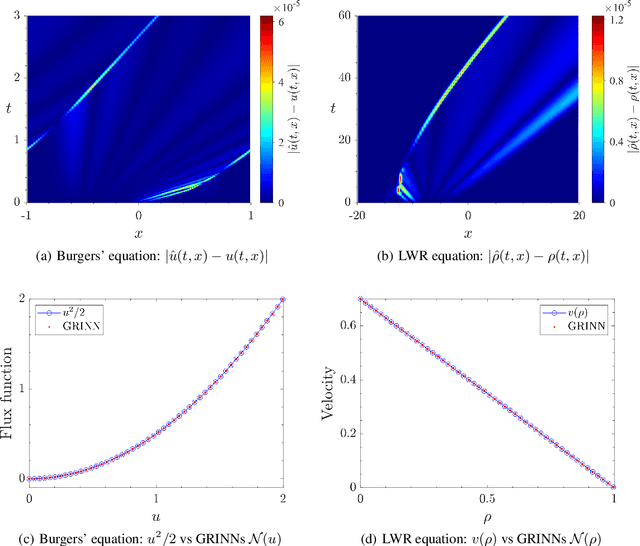

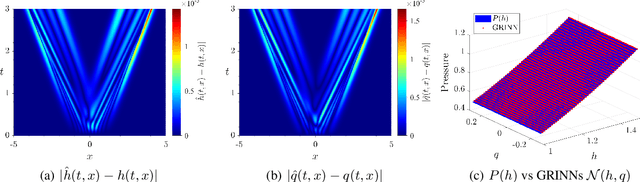

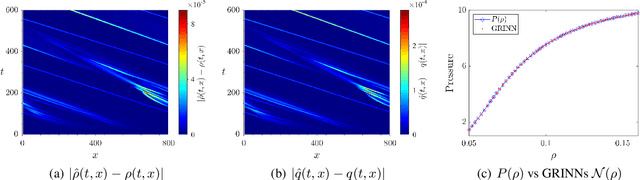

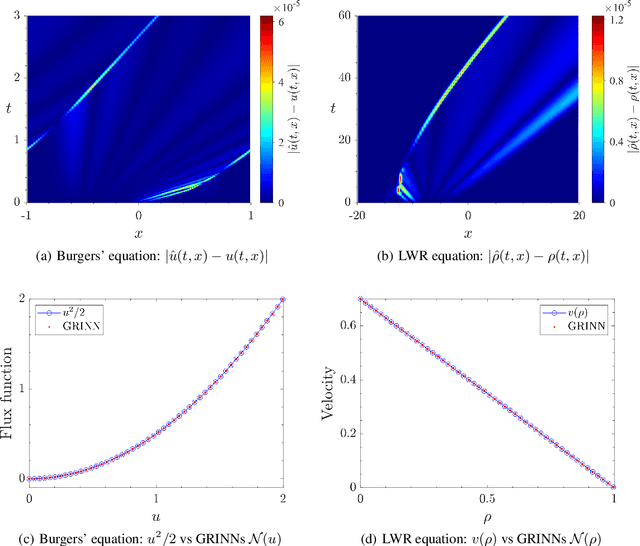

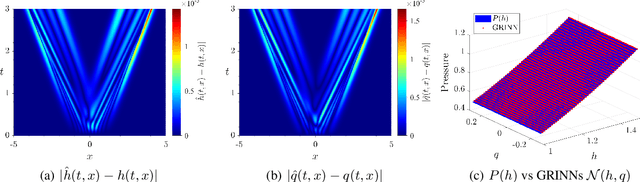

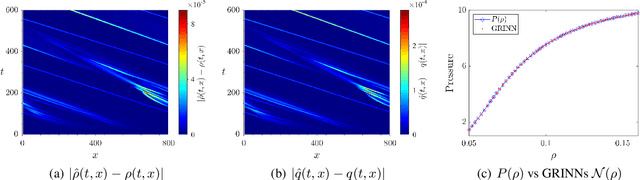

Abstract: We present GoRINNs: numerical analysis-informed neural networks for the solution of inverse problems of non-linear systems of conservation laws. GoRINNs are based on high-resolution Godunov schemes for the solution of the Riemann problem in hyperbolic Partial Differential Equations (PDEs). In contrast to other existing machine learning methods that learn the numerical fluxes of conservative Finite Volume methods, GoRINNs learn the physical flux function per se. Due to their structure, GoRINNs provide interpretable, conservative schemes that learn the solution operator on the basis of approximate Riemann solvers that satisfy the Rankine-Hugoniot condition. The performance of GoRINNs is assessed via four benchmark problems, namely the Burgers', the Shallow Water, the Lighthill-Whitham-Richards and the Payne-Whitham traffic flow models. The solution profiles of these PDEs exhibit shock waves, rarefactions and/or contact discontinuities at finite times. We demonstrate that GoRINNs provide very high accuracy in both the smooth and discontinuous regions.
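For readers unfamiliar with Godunov-type schemes, the following sketch shows the classical building block for the first benchmark, Burgers' equation. It is only the forward solver with a known flux f(u) = u²/2, using the first-order Godunov scheme rather than the high-resolution variants and learned flux described in the abstract. The grid size, initial condition, CFL number and step count are arbitrary choices for illustration.

```python
import numpy as np


def godunov_flux_burgers(u_left, u_right):
    """Exact Godunov interface flux for Burgers' equation, f(u) = u**2 / 2.

    For this convex flux the Riemann solution gives
    F = max(f(max(u_left, 0)), f(min(u_right, 0))).
    """
    f_left = 0.5 * np.maximum(u_left, 0.0) ** 2
    f_right = 0.5 * np.minimum(u_right, 0.0) ** 2
    return np.maximum(f_left, f_right)


def godunov_step(u, dt, dx):
    """One conservative finite-volume update with periodic boundaries."""
    # flux[i] is the flux through the interface between cell i and cell i + 1.
    flux = godunov_flux_burgers(u, np.roll(u, -1))
    return u - dt / dx * (flux - np.roll(flux, 1))


n_cells = 200
dx = 1.0 / n_cells
x = (np.arange(n_cells) + 0.5) * dx
u = np.sin(2.0 * np.pi * x) + 0.5   # smooth profile that steepens into a shock
initial_mass = u.sum() * dx

# Fixed time step satisfying the CFL condition for the initial maximum speed;
# the scheme does not increase max|u|, so the condition keeps holding.
cfl = 0.9
dt = cfl * dx / np.max(np.abs(u))
n_steps = 300
for _ in range(n_steps):
    u = godunov_step(u, dt, dx)

final_mass = u.sum() * dx
print(f"mass drift after {n_steps} steps: {abs(final_mass - initial_mass):.2e}")
```

Because the update is written in flux-difference form, the interface fluxes cancel in pairs when summed over the periodic domain, so the total mass is conserved up to round-off even after the shock has formed.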

GRINNs: Godunov-Riemann Informed Neural Networks for Learning Hyperbolic Conservation Laws

Oct 29, 2024

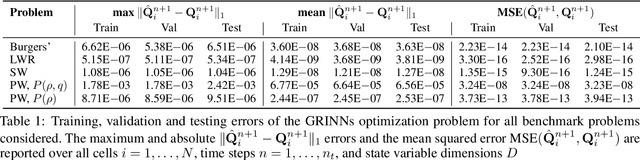

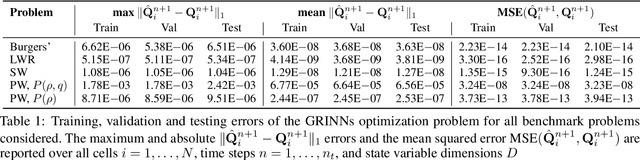

Abstract: We present GRINNs: numerical analysis-informed neural networks for the solution of inverse problems of non-linear systems of conservation laws. GRINNs are based on high-resolution Godunov schemes for the solution of the Riemann problem in hyperbolic Partial Differential Equations (PDEs). In contrast to other existing machine learning methods that learn the numerical fluxes of conservative Finite Volume methods, GRINNs learn the physical flux function per se. Due to their structure, GRINNs provide interpretable, conservative schemes that learn the solution operator on the basis of approximate Riemann solvers that satisfy the Rankine-Hugoniot condition. The performance of GRINNs is assessed via four benchmark problems, namely the Burgers', the Shallow Water, the Lighthill-Whitham-Richards and the Payne-Whitham traffic flow models. The solution profiles of these PDEs exhibit shock waves, rarefactions and/or contact discontinuities at finite times. We demonstrate that GRINNs provide very high accuracy in both the smooth and discontinuous regions.

Stability Analysis of Physics-Informed Neural Networks for Stiff Linear Differential Equations

Aug 27, 2024

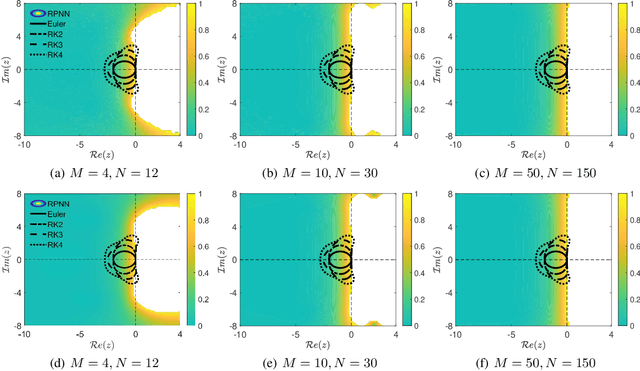

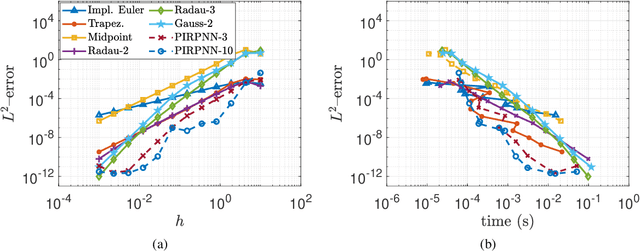

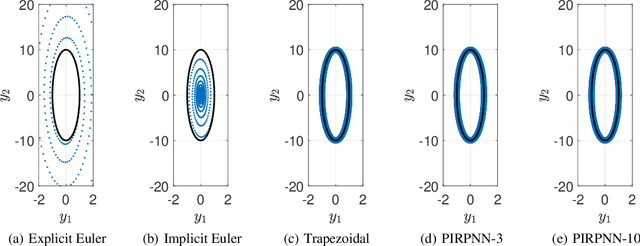

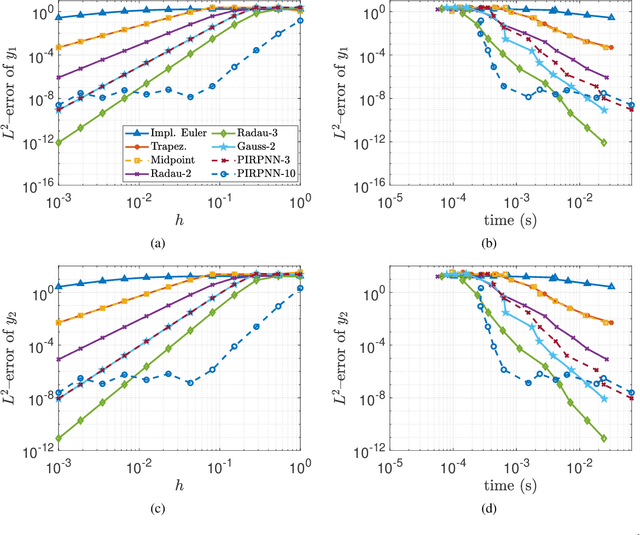

Abstract: We present a stability analysis of Physics-Informed Neural Networks (PINNs) coupled with random projections, for the numerical solution of (stiff) linear differential equations. For our analysis, we consider systems of linear ODEs, and linear parabolic PDEs. We prove that properly designed PINNs offer consistent and asymptotically stable, and thus convergent, numerical schemes. In particular, we prove that multi-collocation random projection PINNs guarantee asymptotic stability for very high stiffness and that single-collocation PINNs are $A$-stable. To assess the performance of the PINNs in terms of both numerical approximation accuracy and computational cost, we compare them with other implicit schemes, in particular the backward Euler, midpoint, trapezoidal (Crank-Nicolson), 2-stage Gauss, and 2- and 3-stage Radau schemes. We show that the proposed PINNs outperform the above traditional schemes, in both numerical approximation accuracy and, importantly, computational cost, for a wide range of step sizes.
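As background for the notion of $A$-stability used above, the sketch below evaluates the textbook stability functions R(z) of three classical one-step schemes on the linear test equation y' = λy, where z = hλ and one step multiplies the solution by R(z). It does not implement the PINN schemes of the paper; the stiffness `lam` and step size `h` are arbitrary illustrative values, and forward Euler is included only as a contrast to the implicit schemes named in the abstract.

```python
def forward_euler(z):
    """Stability function of the explicit (forward) Euler scheme."""
    return 1.0 + z


def backward_euler(z):
    """Stability function of the implicit (backward) Euler scheme."""
    return 1.0 / (1.0 - z)


def trapezoidal(z):
    """Stability function of the trapezoidal (Crank-Nicolson) scheme."""
    return (1.0 + 0.5 * z) / (1.0 - 0.5 * z)


# A stiff decay rate and a step size far above the explicit stability limit.
lam = -1.0e4
h = 1.0e-2
z = h * lam  # z = -100

schemes = {
    "forward Euler": forward_euler,
    "backward Euler": backward_euler,
    "trapezoidal": trapezoidal,
}

# |R(z)| <= 1 means the numerical solution does not grow for this z.
amplification = {name: abs(R(z)) for name, R in schemes.items()}
for name, factor in amplification.items():
    print(f"{name:>15s}: |R(z)| = {factor:.4f}")
```

For z = -100 the amplification factors are 99 for forward Euler, 1/101 for backward Euler and 49/51 for the trapezoidal rule. Both implicit schemes are $A$-stable, but the trapezoidal factor approaches 1 as z tends to minus infinity, so very stiff components are damped only slowly.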

RandONet: Shallow-Networks with Random Projections for learning linear and nonlinear operators

Jun 08, 2024

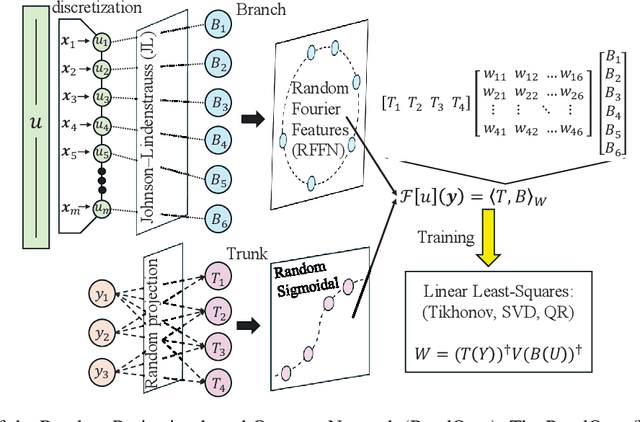

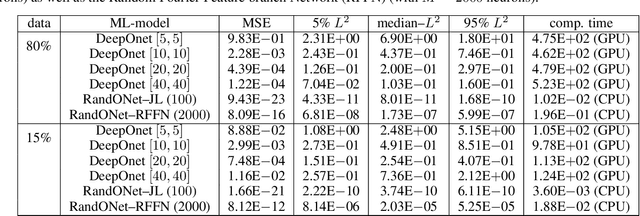

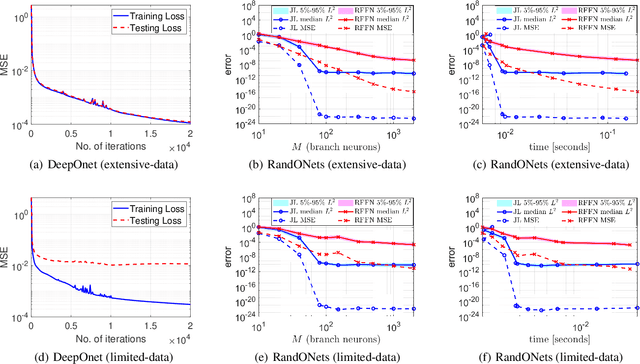

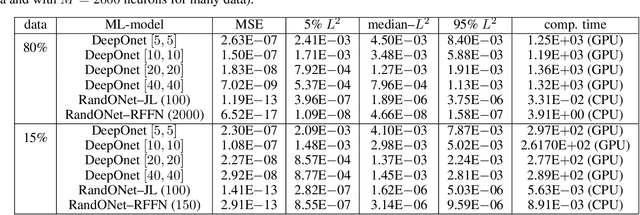

Abstract: Deep Operator Networks (DeepONets) have revolutionized the domain of scientific machine learning for the solution of the inverse problem for dynamical systems. However, their implementation necessitates optimizing a high-dimensional space of parameters and hyperparameters. This fact, along with the requirement of substantial computational resources, poses a barrier to achieving high numerical accuracy. Here, inspired by DeepONets and to address the above challenges, we present Random Projection-based Operator Networks (RandONets): shallow networks with random projections that learn linear and nonlinear operators. The implementation of RandONets involves: (a) incorporating random bases, thus enabling the use of shallow neural networks with a single hidden layer, where the only unknowns are the output weights of the network's weighted inner product; this reduces dramatically the dimensionality of the parameter space; and, based on this, (b) using established least-squares solvers (e.g., Tikhonov regularization and preconditioned QR decomposition) that offer superior numerical approximation properties compared to other optimization techniques used in deep-learning. In this work, we prove the universal approximation accuracy of RandONets for approximating nonlinear operators and demonstrate their efficiency in approximating linear and nonlinear evolution operators (right-hand-sides (RHS)) with a focus on PDEs. We show that for this particular task, RandONets outperform, both in terms of numerical approximation accuracy and computational cost, the "vanilla" DeepONets.

A physics-informed neural network method for the approximation of slow invariant manifolds for the general class of stiff systems of ODEs

Mar 18, 2024

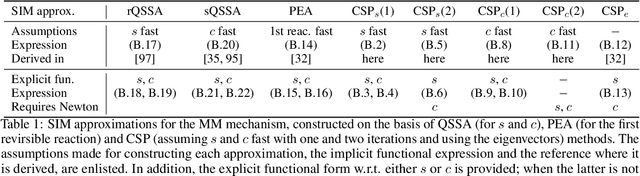

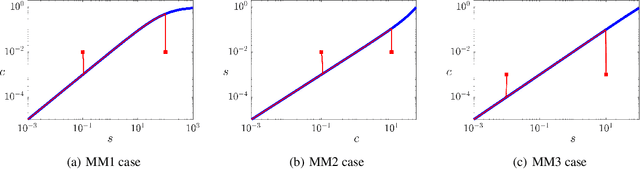

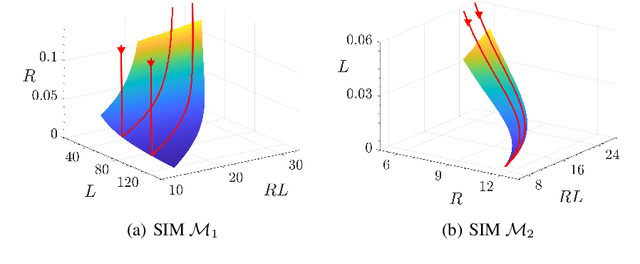

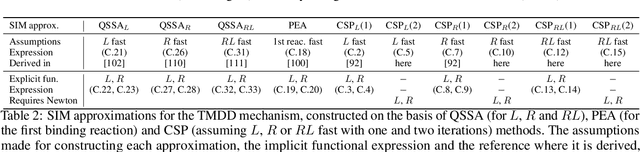

Abstract: We present a physics-informed neural network (PINN) approach for the discovery of slow invariant manifolds (SIMs), for the most general class of fast/slow dynamical systems of ODEs. In contrast to other machine learning (ML) approaches that construct reduced order black box surrogate models using simple regression, and/or require a priori knowledge of the fast and slow variables, our approach simultaneously decomposes the vector field into fast and slow components and provides a functional of the underlying SIM in a closed form. The decomposition is achieved by finding a transformation of the state variables to the fast and slow ones, which enables the derivation of a SIM functional that is explicit in terms of the fast variables. The latter is obtained by solving a PDE corresponding to the invariance equation within the Geometric Singular Perturbation Theory (GSPT) using a single-layer feedforward neural network with symbolic differentiation. The performance of the proposed physics-informed ML framework is assessed via three benchmark problems: the Michaelis-Menten, the target mediated drug disposition (TMDD) reaction model and a fully competitive substrate-inhibitor (fCSI) mechanism. We also provide a comparison with other GSPT methods, namely the quasi steady state approximation (QSSA), the partial equilibrium approximation (PEA) and computational singular perturbation (CSP) with one and two iterations. We show that the proposed PINN scheme provides SIM approximations of equivalent or even higher accuracy than those provided by QSSA, PEA and CSP, especially close to the boundaries of the underlying SIMs.

Nonlinear Discrete-Time Observers with Physics-Informed Neural Networks

Feb 19, 2024

Abstract: We use Physics-Informed Neural Networks (PINNs) to solve the discrete-time nonlinear observer state estimation problem. Integrated within a single-step exact observer linearization framework, the proposed PINN approach aims at learning a nonlinear state transformation map by solving a system of inhomogeneous functional equations. The performance of the proposed PINN approach is assessed via two illustrative case studies for which the observer linearizing transformation map can be derived analytically. We also perform an uncertainty quantification analysis for the proposed PINN scheme and we compare it with conventional power-series numerical implementations, which rely on the computation of a power series solution.
