Chu Zhou

PIDSR: Complementary Polarized Image Demosaicing and Super-Resolution

Apr 10, 2025

Abstract: Polarization cameras can capture multiple polarized images with different polarizer angles in a single shot, bringing convenience to polarization-based downstream tasks. However, their direct outputs are color-polarization filter array (CPFA) raw images, requiring demosaicing to reconstruct full-resolution, full-color polarized images; unfortunately, this necessary step introduces artifacts that make polarization-related parameters such as the degree of polarization (DoP) and angle of polarization (AoP) prone to error. Besides, limited by the hardware design, the resolution of a polarization camera is often much lower than that of a conventional RGB camera. Existing polarized image demosaicing (PID) methods are limited in that they cannot enhance resolution, while polarized image super-resolution (PISR) methods, though designed to obtain high-resolution (HR) polarized images from the demosaicing results, tend to retain or even amplify errors in the DoP and AoP introduced by demosaicing artifacts. In this paper, we propose PIDSR, a joint framework that performs complementary Polarized Image Demosaicing and Super-Resolution, showing the ability to robustly obtain high-quality HR polarized images with more accurate DoP and AoP from a CPFA raw image in a direct manner. Experiments show our PIDSR not only achieves state-of-the-art performance on both synthetic and real data, but also facilitates downstream tasks.

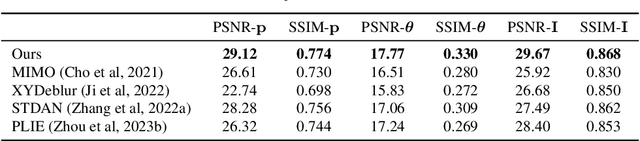

Learning to Deblur Polarized Images

Feb 28, 2024

Abstract: A polarization camera can capture four polarized images with different polarizer angles in a single shot, which is useful in polarization-based vision applications since the degree of polarization (DoP) and the angle of polarization (AoP) can be directly computed from the captured polarized images. However, because the on-chip micro-polarizers block part of the light, the sensor often requires a longer exposure time, and the captured polarized images are prone to motion blur caused by camera shake, leading to noticeable degradation in the computed DoP and AoP. Deblurring methods for conventional images often show degraded performance when handling polarized images since they focus only on deblurring without considering the polarization constraints. In this paper, we propose a polarized image deblurring pipeline to solve the problem in a polarization-aware manner by adopting a divide-and-conquer strategy to explicitly decompose the problem into two less ill-posed sub-problems, and design a two-stage neural network to handle the two sub-problems respectively. Experimental results show that our method achieves state-of-the-art performance on both synthetic and real-world images, and can improve the performance of polarization-based vision applications such as image dehazing and reflection removal.
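
The abstract notes that the DoP and AoP can be computed directly from the four captured polarized images. A minimal per-pixel sketch of that computation follows, assuming the four polarizer angles are 0°, 45°, 90°, and 135° (a common layout, but not stated in the abstract) and using the standard linear Stokes parameters. The function names are illustrative and do not come from the paper.

```python
import math


def stokes_from_intensities(i0, i45, i90, i135):
    """Linear Stokes parameters from four polarized intensities.

    Assumes polarizer angles of 0, 45, 90 and 135 degrees.
    """
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs. vertical
    s2 = i45 - i135                     # diagonal vs. anti-diagonal
    return s0, s1, s2


def dop_aop(i0, i45, i90, i135, eps=1e-12):
    """Return (DoP, AoP) for one pixel; AoP is in radians in [0, pi)."""
    s0, s1, s2 = stokes_from_intensities(i0, i45, i90, i135)
    # eps guards against division by zero on completely dark pixels.
    dop = math.sqrt(s1 * s1 + s2 * s2) / max(s0, eps)
    aop = (0.5 * math.atan2(s2, s1)) % math.pi
    return dop, aop
```

For fully polarized light aligned with the 0° polarizer, `dop_aop(1.0, 0.5, 0.0, 0.5)` returns `(1.0, 0.0)`. Because both quantities depend on differences between the four images, blur or demosaicing errors that affect the images unequally can plausibly distort them, which is consistent with the degradation the abstract describes.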

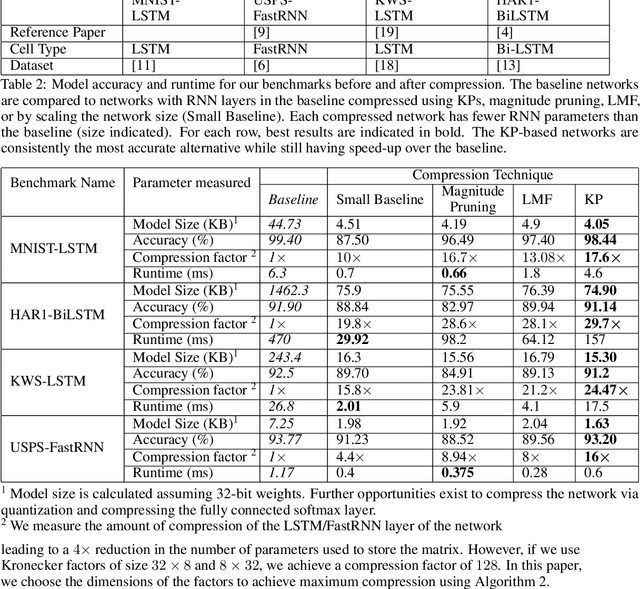

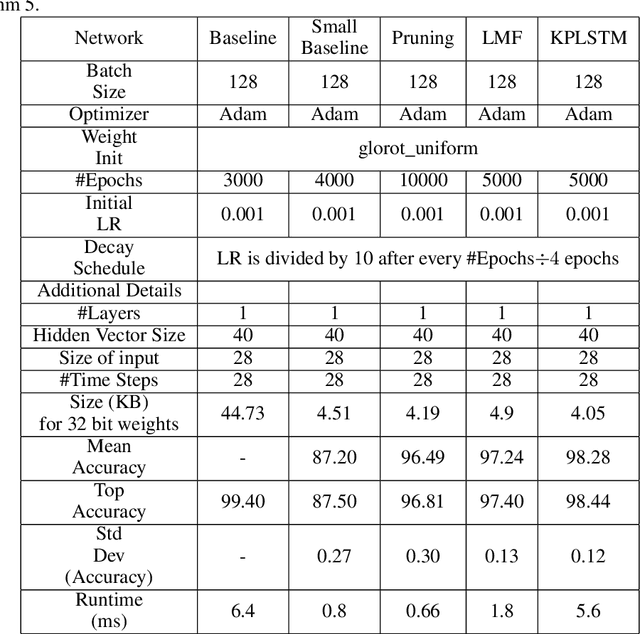

Pushing the limits of RNN Compression

Oct 09, 2019

Abstract: Recurrent Neural Networks (RNNs) can be difficult to deploy on resource-constrained devices due to their size. As a result, there is a need for compression techniques that can significantly compress RNNs without negatively impacting task accuracy. This paper introduces a method to compress RNNs for resource-constrained environments using Kronecker products (KPs). KPs can compress RNN layers by 16-38x with minimal accuracy loss. We show that KPs can beat the task accuracy achieved by other state-of-the-art compression techniques (pruning and low-rank matrix factorization) across 4 benchmarks spanning 3 different applications, while simultaneously improving inference run-time.

* 6 pages. arXiv admin note: substantial text overlap with arXiv:1906.02876

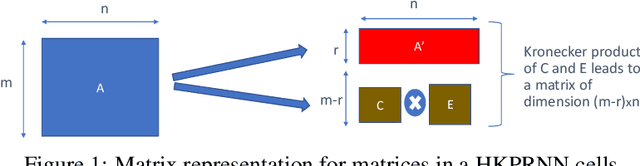

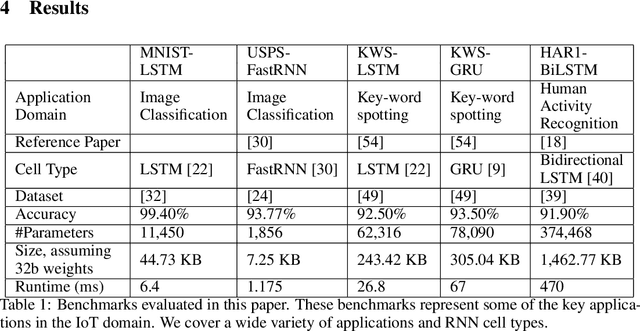

Compressing RNNs for IoT devices by 15-38x using Kronecker Products

Jun 18, 2019

Abstract: Recurrent Neural Networks (RNNs) can be large and compute-intensive, making them hard to deploy on resource-constrained devices. As a result, there is a need for compression techniques that can significantly compress recurrent neural networks without negatively impacting task accuracy. This paper introduces a method to compress RNNs for resource-constrained environments using Kronecker products. We call RNNs compressed using Kronecker products Kronecker product Recurrent Neural Networks (KPRNNs). KPRNNs can compress the LSTM [22], GRU [9], and parameter-optimized FastRNN [30] layers by 15-38x with minor loss in accuracy and can act as in-place replacements for most RNN cells in existing applications. By quantizing the Kronecker-compressed networks to 8 bits, we further push the compression factor to 50x. We compare the accuracy and runtime of KPRNNs with other state-of-the-art compression techniques across 5 benchmarks spanning 3 different applications, showing its generality. Additionally, we show how to control the compression factors achieved by Kronecker products using a novel hybrid decomposition technique. We call RNN cells compressed using Kronecker products with this control mechanism hybrid Kronecker product RNNs (HKPRNNs). Using HKPRNNs, we compress RNN cells in 2 benchmarks by 10x and 20x, achieving better accuracy than other state-of-the-art compression techniques.
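
To illustrate why a Kronecker product can shrink a weight matrix, the sketch below represents a large matrix as `kron(a, b)` and multiplies it by a vector without ever materializing the large matrix. It is a generic, pure-Python illustration of the Kronecker product identity, not the paper's implementation. The function names and the choice of two factors are assumptions made for the example.

```python
def kron(a, b):
    """Kronecker product of two matrices given as lists of rows."""
    m2, n2 = len(b), len(b[0])
    return [[a[i // m2][j // n2] * b[i % m2][j % n2]
             for j in range(len(a[0]) * n2)]
            for i in range(len(a) * m2)]


def matvec(w, x):
    """Dense matrix-vector product."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def kron_matvec(a, b, x):
    """Compute kron(a, b) @ x using only the small factors.

    With x reshaped row-major into X of shape (n1, n2), the result is
    the row-major flattening of A @ X @ B^T.
    """
    n1, n2 = len(a[0]), len(b[0])
    xm = [x[j * n2:(j + 1) * n2] for j in range(n1)]  # X, shape (n1, n2)
    t = [matvec(b, row) for row in xm]                # X @ B^T, shape (n1, m2)
    y = [[sum(a[i][j] * t[j][k] for j in range(n1))   # A @ (X @ B^T)
          for k in range(len(b))]
         for i in range(len(a))]
    return [v for row in y for v in row]


def compression_factor(m1, n1, m2, n2):
    """Ratio of dense parameter count to the two factors' parameter count."""
    return (m1 * m2 * n1 * n2) / (m1 * n1 + m2 * n2)
```

As a usage example, `kron_matvec([[1, 2], [3, 4]], [[0, 1], [1, 0]], [1, 2, 3, 4])` gives `[10, 7, 22, 15]`, the same result as `matvec(kron(a, b), x)`. The ratio from `compression_factor` applies to a single matrix and should not be read as the network-level compression factors reported in the abstract.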
