Christoph Brune

Manifold limit for the training of shallow graph convolutional neural networks

Jan 09, 2026

Abstract: We study the discrete-to-continuum consistency of the training of shallow graph convolutional neural networks (GCNNs) on proximity graphs of sampled point clouds under a manifold assumption. Graph convolution is defined spectrally via the graph Laplacian, whose low-frequency spectrum approximates that of the Laplace-Beltrami operator of the underlying smooth manifold, and shallow GCNNs of possibly infinite width are linear functionals on the space of measures on the parameter space. From this functional-analytic perspective, graph signals are seen as spatial discretizations of functions on the manifold, which leads to a natural notion of training data consistent across graph resolutions. To enable convergence results, the continuum parameter space is chosen as a weakly compact product of unit balls, with Sobolev regularity imposed on the output weight and bias, but not on the convolutional parameter. The discrete parameter spaces inherit the corresponding spectral decay, and are additionally restricted by a frequency cutoff adapted to the informative spectral window of the graph Laplacians. Under these assumptions, we prove $\Gamma$-convergence of regularized empirical risk minimization functionals and corresponding convergence of their global minimizers, in the sense of weak convergence of the parameter measures and uniform convergence of the functions over compact sets. This provides a formalization of mesh and sample independence for the training of such networks.

Consistent View Alignment Improves Foundation Models for 3D Medical Image Segmentation

Sep 17, 2025

Abstract: Many recent approaches in representation learning implicitly assume that uncorrelated views of a data point are sufficient to learn meaningful representations for various downstream tasks. In this work, we challenge this assumption and demonstrate that meaningful structure in the latent space does not emerge naturally. Instead, it must be explicitly induced. We propose a method that aligns representations from different views of the data to align complementary information without inducing false positives. Our experiments show that our proposed self-supervised learning method, Consistent View Alignment, improves performance for downstream tasks, highlighting the critical role of structured view alignment in learning effective representations. Our method achieved first and second place in the MICCAI 2025 SSL3D challenge when using a Primus vision transformer and ResEnc convolutional neural network, respectively. The code and pretrained model weights are released at https://github.com/Tenbatsu24/LatentCampus.

Physics-based deep kernel learning for parameter estimation in high dimensional PDEs

Sep 17, 2025

Abstract: Inferring parameters of high-dimensional partial differential equations (PDEs) poses significant computational and inferential challenges, primarily due to the curse of dimensionality and the inherent limitations of traditional numerical methods. This paper introduces a novel two-stage Bayesian framework that synergistically integrates physics-based deep kernel learning (DKL) with Hamiltonian Monte Carlo (HMC) to robustly infer unknown PDE parameters and quantify their uncertainties from sparse, exact observations. The first stage leverages physics-based DKL to train a surrogate model, which jointly yields an optimized neural network feature extractor and robust initial estimates for the PDE parameters. In the second stage, with the neural network weights fixed, HMC is employed within a full Bayesian framework to efficiently sample the joint posterior distribution of the kernel hyperparameters and the PDE parameters. Numerical experiments on canonical and high-dimensional inverse PDE problems demonstrate that our framework accurately estimates parameters, provides reliable uncertainty estimates, and effectively addresses challenges of data sparsity and model complexity, offering a robust and scalable tool for diverse scientific and engineering applications.

An invertible generative model for forward and inverse problems

Sep 04, 2025

Abstract: We formulate the inverse problem in a Bayesian framework and aim to train a generative model that allows us to simulate (i.e., sample from the likelihood) and do inference (i.e., sample from the posterior). We review the use of triangular normalizing flows for conditional sampling in this context and show how to combine two such triangular maps (an upper and a lower one) into one invertible mapping that can be used for simulation and inference. We work out several useful properties of this invertible generative model and propose a possible loss for training the map directly. We illustrate the workings of this new approach to conditional generative modeling numerically on a few stylized examples.

Wall Shear Stress Estimation in Abdominal Aortic Aneurysms: Towards Generalisable Neural Surrogate Models

Jul 30, 2025

Abstract: Abdominal aortic aneurysms (AAAs) are pathologic dilatations of the abdominal aorta posing a high fatality risk upon rupture. Studying AAA progression and rupture risk often involves in-silico blood flow modelling with computational fluid dynamics (CFD) and extraction of hemodynamic factors like time-averaged wall shear stress (TAWSS) or oscillatory shear index (OSI). However, CFD simulations are known to be computationally demanding. Hence, in recent years, geometric deep learning methods, operating directly on 3D shapes, have been proposed as compelling surrogates, estimating hemodynamic parameters in just a few seconds. In this work, we propose a geometric deep learning approach to estimating hemodynamics in AAA patients, and study its generalisability to common factors of real-world variation. We propose an E(3)-equivariant deep learning model utilising novel robust geometrical descriptors and projective geometric algebra. Our model is trained to estimate transient WSS using a dataset of CT scans of 100 AAA patients, from which lumen geometries are extracted and reference CFD simulations with varying boundary conditions are obtained. Results show that the model generalises well within the distribution, as well as to the external test set. Moreover, the model can accurately estimate hemodynamics across geometry remodelling and changes in boundary conditions. Furthermore, we find that a trained model can be applied to different artery tree topologies, where new and unseen branches are added during inference. Finally, we find that the model is to a large extent agnostic to mesh resolution. These results show the accuracy and generalisation of the proposed model, and highlight its potential to contribute to hemodynamic parameter estimation in clinical practice.
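
The two hemodynamic factors named in this abstract can be illustrated with a minimal sketch. It assumes the commonly used definitions (TAWSS as the cycle average of the WSS magnitude, OSI as half of one minus the ratio of the magnitude of the averaged WSS vector to TAWSS) and uniform time sampling over one cardiac cycle. The function name `tawss_and_osi` and the input layout are hypothetical and not taken from the paper, which may define or compute these quantities differently.

```python
import math


def tawss_and_osi(wss_vectors):
    """Return (TAWSS, OSI) at one wall point.

    wss_vectors: list of (tx, ty, tz) wall shear stress vectors, assumed to be
    uniformly sampled over one cardiac cycle (so integrals reduce to means).
    """
    n = len(wss_vectors)
    # Time average of the WSS magnitude.
    mean_magnitude = sum(
        math.sqrt(tx * tx + ty * ty + tz * tz) for tx, ty, tz in wss_vectors
    ) / n
    # Magnitude of the time-averaged WSS vector.
    mean_vector = [sum(v[k] for v in wss_vectors) / n for k in range(3)]
    magnitude_of_mean = math.sqrt(sum(c * c for c in mean_vector))
    # OSI is 0 for unidirectional shear and 0.5 for fully reversing shear.
    if mean_magnitude > 0.0:
        osi = 0.5 * (1.0 - magnitude_of_mean / mean_magnitude)
    else:
        osi = 0.0
    return mean_magnitude, osi
```

In a surrogate-model setting, a function of this kind would be applied per mesh vertex to the transient WSS predicted by the network.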

Geometric deep learning for local growth prediction on abdominal aortic aneurysm surfaces

Jun 11, 2025

Abstract: Abdominal aortic aneurysms (AAAs) are progressive focal dilatations of the abdominal aorta. AAAs may rupture, with a survival rate of only 20%. Current clinical guidelines recommend elective surgical repair when the maximum AAA diameter exceeds 55 mm in men or 50 mm in women. Patients who do not meet these criteria are periodically monitored, with surveillance intervals based on the maximum AAA diameter. However, this diameter does not take into account the complex relation between the 3D AAA shape and its growth, making standardized intervals potentially unfit. Personalized AAA growth predictions could improve monitoring strategies. We propose to use an SE(3)-symmetric transformer model to predict AAA growth directly on the vascular model surface enriched with local, multi-physical features. In contrast to other works which have parameterized the AAA shape, this representation preserves the vascular surface's anatomical structure and geometric fidelity. We train our model using a longitudinal dataset of 113 computed tomography angiography (CTA) scans of 24 AAA patients at irregularly sampled intervals. After training, our model predicts AAA growth to the next scan moment with a median diameter error of 1.18 mm. We further demonstrate our model's utility to identify whether a patient will become eligible for elective repair within two years (acc = 0.93). Finally, we evaluate our model's generalization on an external validation set consisting of 25 CTAs from 7 AAA patients from a different hospital. Our results show that local directional AAA growth prediction from the vascular surface is feasible and may contribute to personalized surveillance strategies.

Joint Manifold Learning and Optimal Transport for Dynamic Imaging

May 17, 2025

Abstract: Dynamic imaging is critical for understanding and visualizing dynamic biological processes in medicine and cell biology. These applications often encounter the challenge of a limited amount of time series data and time points, which hinders learning meaningful patterns. Regularization methods provide valuable prior knowledge to address this challenge, enabling the extraction of relevant information despite the scarcity of time-series data and time points. In particular, low-dimensionality assumptions on the image manifold address sample scarcity, while time progression models, such as optimal transport (OT), provide priors on image development to mitigate the lack of time points. Existing approaches using low-dimensionality assumptions disregard a temporal prior but leverage information from multiple time series. OT-prior methods, however, incorporate the temporal prior but regularize only individual time series, ignoring information from other time series of the same image modality. In this work, we investigate the effect of integrating a low-dimensionality assumption of the underlying image manifold with an OT regularizer for time-evolving images. In particular, we propose a latent model representation of the underlying image manifold and promote consistency between this representation, the time series data, and the OT prior on the time-evolving images. We discuss the advantages of enriching OT interpolations with latent models and integrating OT priors into latent models.

Data-Agnostic Augmentations for Unknown Variations: Out-of-Distribution Generalisation in MRI Segmentation

May 15, 2025

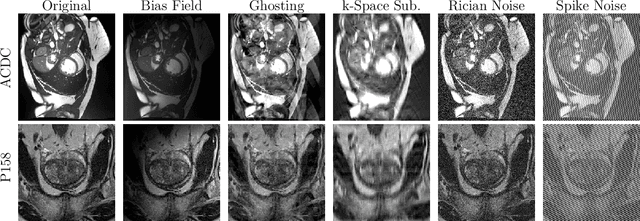

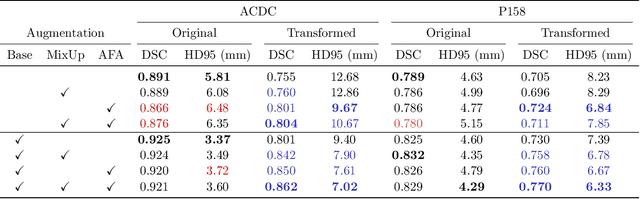

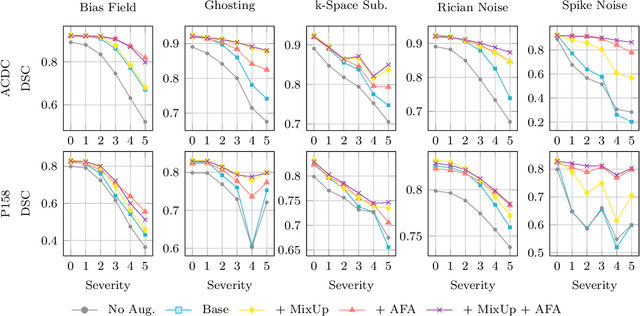

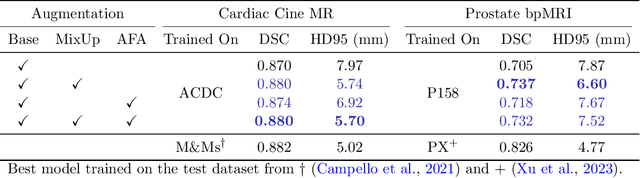

Abstract: Medical image segmentation models are often trained on curated datasets, leading to performance degradation when deployed in real-world clinical settings due to mismatches between training and test distributions. While data augmentation techniques are widely used to address these challenges, traditional visually consistent augmentation strategies lack the robustness needed for diverse real-world scenarios. In this work, we systematically evaluate alternative augmentation strategies, focusing on MixUp and Auxiliary Fourier Augmentation. These methods mitigate the effects of multiple variations without explicitly targeting specific sources of distribution shifts. We demonstrate how these techniques significantly improve out-of-distribution generalization and robustness to imaging variations across a wide range of transformations in cardiac cine MRI and prostate MRI segmentation. We quantitatively find that these augmentation methods enhance learned feature representations by promoting separability and compactness. Additionally, we highlight how their integration into nnU-Net training pipelines provides an easy-to-implement, effective solution for enhancing the reliability of medical segmentation models in real-world applications.
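
As a rough illustration of the MixUp augmentation mentioned in this abstract, the sketch below blends two training pairs with a weight drawn from a Beta distribution, which is the standard MixUp recipe. Images and one-hot labels are represented as flat lists for simplicity; the function name `mixup`, the default `alpha=0.4`, and the data layout are assumptions made for illustration and do not reflect the paper's nnU-Net integration.

```python
import random


def mixup(x_a, y_a, x_b, y_b, alpha=0.4):
    """Blend two (image, one-hot label) pairs with a Beta(alpha, alpha) weight.

    x_a, x_b: flattened image intensities of equal length.
    y_a, y_b: flattened one-hot (or soft) labels of equal length.
    Returns the mixed image, the mixed label, and the mixing weight.
    """
    lam = random.betavariate(alpha, alpha)  # mixing weight in [0, 1]
    x = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    y = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x, y, lam
```

Because the same weight is applied to inputs and labels, the mixed label remains a valid soft label whenever the two inputs are valid label distributions.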

Active Learning for Deep Learning-Based Hemodynamic Parameter Estimation

Mar 05, 2025

Abstract: Hemodynamic parameters such as pressure and wall shear stress play an important role in diagnosis, prognosis, and treatment planning in cardiovascular diseases. These parameters can be accurately computed using computational fluid dynamics (CFD), but CFD is computationally intensive. Hence, deep learning methods have been adopted as a surrogate to rapidly estimate CFD outcomes. A drawback of such data-driven models is the need for time-consuming reference CFD simulations for training. In this work, we introduce an active learning framework to reduce the number of CFD simulations required for the training of surrogate models, lowering the barriers to their deployment in new applications. We propose three distinct querying strategies to determine for which unlabeled samples CFD simulations should be obtained. These querying strategies are based on geometrical variance, ensemble uncertainty, and adherence to the physics governing fluid dynamics. We benchmark these methods on velocity field estimation in synthetic coronary artery bifurcations and find that they allow for substantial reductions in annotation cost. Notably, we find that our strategies reduce the number of samples required by up to 50% and make the trained models more robust to difficult cases. Our results show that active learning is a feasible strategy to increase the potential of deep learning-based CFD surrogates.
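
One of the querying strategies named in this abstract, ensemble uncertainty, can be sketched generically as follows. The sketch assumes each ensemble member produces one scalar summary per unlabeled sample and ranks samples by the variance across members. The function name `query_by_ensemble_variance` and the scalar summary are hypothetical simplifications, since the paper works with full velocity fields and its exact scoring rule is not given here.

```python
from statistics import pvariance


def query_by_ensemble_variance(ensemble_predictions, budget):
    """Pick the unlabeled samples on which the ensemble disagrees most.

    ensemble_predictions[m][i]: scalar prediction of model m for sample i.
    budget: number of samples for which new CFD simulations can be afforded.
    Returns sample indices ordered from most to least uncertain.
    """
    n_samples = len(ensemble_predictions[0])
    # Disagreement score: variance of the predictions across ensemble members.
    scores = [
        pvariance([preds[i] for preds in ensemble_predictions])
        for i in range(n_samples)
    ]
    ranked = sorted(range(n_samples), key=lambda i: scores[i], reverse=True)
    return ranked[:budget]
```

The selected indices would then be sent to the CFD solver, and the resulting labeled samples added to the training set before the next active learning round.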

PDE-DKL: PDE-constrained deep kernel learning in high dimensionality

Jan 30, 2025

Abstract: Many physics-informed machine learning methods for PDE-based problems rely on Gaussian processes (GPs) or neural networks (NNs). However, both face limitations when data are scarce and the dimensionality is high. Although GPs are known for their robust uncertainty quantification in low-dimensional settings, their computational complexity becomes prohibitive as the dimensionality increases. In contrast, while conventional NNs can accommodate high-dimensional input, they often require extensive training data and do not offer uncertainty quantification. To address these challenges, we propose a PDE-constrained Deep Kernel Learning (PDE-DKL) framework that combines deep learning (DL) and GPs under explicit PDE constraints. Specifically, NNs learn a low-dimensional latent representation of the high-dimensional PDE problem, reducing the complexity of the problem. GPs then perform kernel regression subject to the governing PDEs, ensuring accurate solutions and principled uncertainty quantification, even when available data are limited. This synergy unifies the strengths of both NNs and GPs, yielding high accuracy, robust uncertainty estimates, and computational efficiency for high-dimensional PDEs. Numerical experiments demonstrate that PDE-DKL achieves high accuracy with reduced data requirements. They highlight its potential as a practical, reliable, and scalable solver for complex PDE-based applications in science and engineering.
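
The basic idea of a deep kernel, a GP kernel evaluated on neural network features rather than on raw inputs, can be sketched as below. This is only an illustration of that one ingredient: a single fixed tanh layer stands in for the learned feature extractor, a squared-exponential kernel is assumed, and the PDE constraints and training procedure described in the abstract are omitted. All names (`feature_map`, `deep_rbf_kernel`, `weights`, `biases`, `lengthscale`) are hypothetical.

```python
import math


def feature_map(x, weights, biases):
    """Map a high-dimensional input to a low-dimensional latent vector.

    A single tanh layer is used here as a stand-in for the neural network.
    """
    return [
        math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(weights, biases)
    ]


def deep_rbf_kernel(x1, x2, weights, biases, lengthscale=1.0):
    """Squared-exponential kernel evaluated in the latent feature space."""
    z1 = feature_map(x1, weights, biases)
    z2 = feature_map(x2, weights, biases)
    sq_dist = sum((a - b) ** 2 for a, b in zip(z1, z2))
    return math.exp(-0.5 * sq_dist / lengthscale ** 2)
```

Since the kernel only sees the latent vectors, the cost of GP regression depends on the number of data points and the latent dimension rather than on the original input dimension.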
