Christian Gumbsch

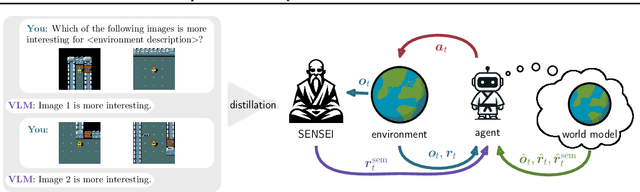

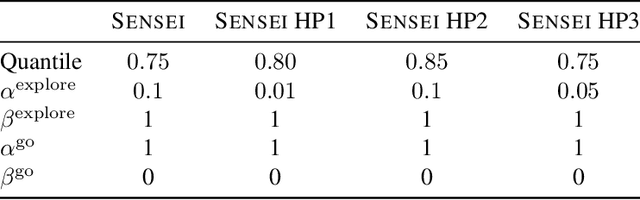

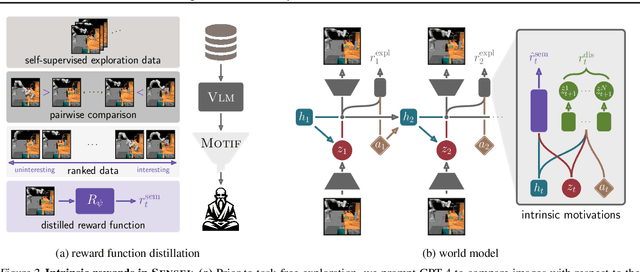

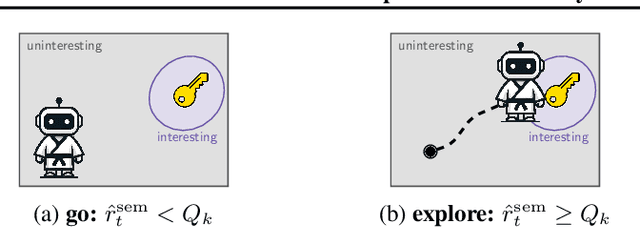

SENSEI: Semantic Exploration Guided by Foundation Models to Learn Versatile World Models

Mar 03, 2025

Abstract:Exploration is a cornerstone of reinforcement learning (RL). Intrinsic motivation attempts to decouple exploration from external, task-based rewards. However, established approaches to intrinsic motivation that follow general principles, such as information gain, often only uncover low-level interactions. In contrast, children's play suggests that they engage in meaningful high-level behavior by imitating or interacting with their caregivers. Recent work has focused on using foundation models to inject these semantic biases into exploration. However, these methods often rely on unrealistic assumptions, such as language-embedded environments or access to high-level actions. We propose SEmaNtically Sensible ExploratIon (SENSEI), a framework to equip model-based RL agents with an intrinsic motivation for semantically meaningful behavior. SENSEI distills a reward signal of interestingness from Vision Language Model (VLM) annotations, enabling an agent to predict these rewards through a world model. Using model-based RL, SENSEI trains an exploration policy that jointly maximizes semantic rewards and uncertainty. We show that in both robotic and video game-like simulations SENSEI discovers a variety of meaningful behaviors from image observations and low-level actions. SENSEI provides a general tool for learning from foundation model feedback, a crucial research direction, as VLMs become more powerful.

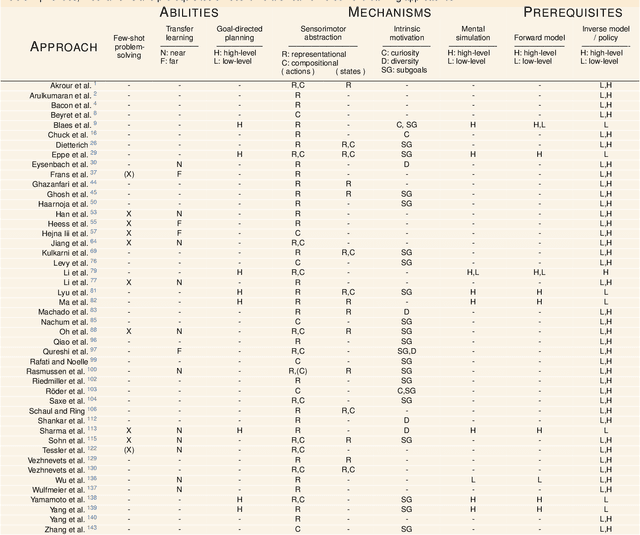

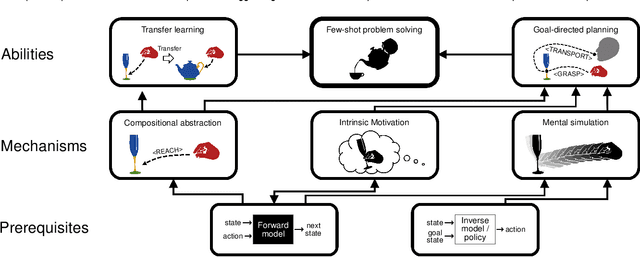

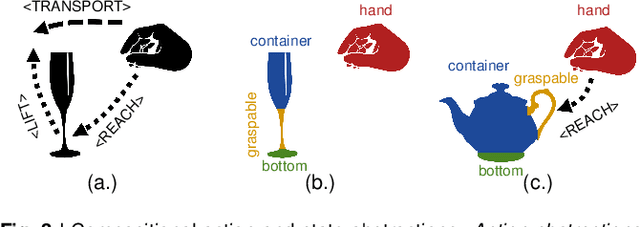

Intelligent problem-solving as integrated hierarchical reinforcement learning

Aug 18, 2022

Abstract:According to cognitive psychology and related disciplines, the development of complex problem-solving behaviour in biological agents depends on hierarchical cognitive mechanisms. Hierarchical reinforcement learning is a promising computational approach that may eventually yield comparable problem-solving behaviour in artificial agents and robots. However, to date the problem-solving abilities of many human and non-human animals are clearly superior to those of artificial systems. Here, we propose steps to integrate biologically inspired hierarchical mechanisms to enable advanced problem-solving skills in artificial agents. Therefore, we first review the literature in cognitive psychology to highlight the importance of compositional abstraction and predictive processing. Then we relate the gained insights with contemporary hierarchical reinforcement learning methods. Interestingly, our results suggest that all identified cognitive mechanisms have been implemented individually in isolated computational architectures, raising the question of why there exists no single unifying architecture that integrates them. As our final contribution, we address this question by providing an integrative perspective on the computational challenges to develop such a unifying architecture. We expect our results to guide the development of more sophisticated cognitively inspired hierarchical machine learning architectures.

* Published as accepted article in Nature Machine Intelligence: https://www.nature.com/articles/s42256-021-00433-9. arXiv admin note: substantial text overlap with arXiv:2012.10147

Developing hierarchical anticipations via neural network-based event segmentation

Jun 04, 2022

Abstract:Humans can make predictions on various time scales and hierarchical levels. Thereby, the learning of event encodings seems to play a crucial role. In this work we model the development of hierarchical predictions via autonomously learned latent event codes. We present a hierarchical recurrent neural network architecture, whose inductive learning biases foster the development of sparsely changing latent states that compress sensorimotor sequences. A higher level network learns to predict the situations in which the latent states tend to change. Using a simulated robotic manipulator, we demonstrate that the system (i) learns latent states that accurately reflect the event structure of the data, (ii) develops meaningful temporally abstract predictions on the higher level, and (iii) generates goal-anticipatory behavior similar to gaze behavior found in eye-tracking studies with infants. The architecture offers a step towards autonomous, self-motivated learning of compressed hierarchical encodings of gathered experiences and the exploitation of these encodings for the generation of highly versatile, adaptive behavior.

Inference of Affordances and Active Motor Control in Simulated Agents

Mar 18, 2022

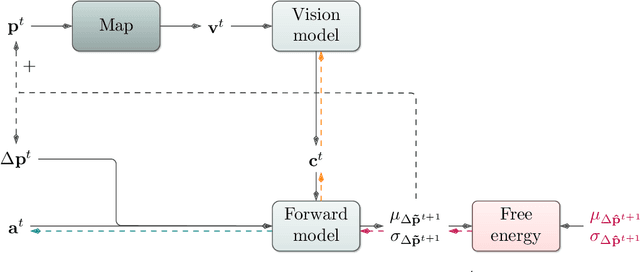

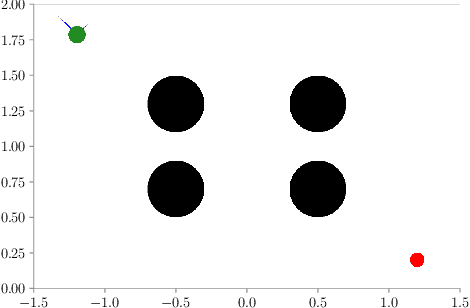

Abstract:Flexible, goal-directed behavior is a fundamental aspect of human life. Based on the free energy minimization principle, the theory of active inference formalizes the generation of such behavior from a computational neuroscience perspective. Based on the theory, we introduce an output-probabilistic, temporally predictive, modular artificial neural network architecture, which processes sensorimotor information, infers behavior-relevant aspects of its world, and invokes highly flexible, goal-directed behavior. We show that our architecture, which is trained end-to-end to minimize an approximation of free energy, develops latent states that can be interpreted as affordance maps. That is, the emerging latent states signal which actions lead to which effects dependent on the local context. In combination with active inference, we show that flexible, goal-directed behavior can be invoked, incorporating the emerging affordance maps. As a result, our simulated agent flexibly steers through continuous spaces, avoids collisions with obstacles, and prefers pathways that lead to the goal with high certainty. Additionally, we show that the learned agent is highly suitable for zero-shot generalization across environments: After training the agent in a handful of fixed environments with obstacles and other terrains affecting its behavior, it performs similarly well in procedurally generated environments containing different amounts of obstacles and terrains of various sizes at different locations. To improve and focus model learning further, we plan to invoke active inference-based, information-gain-oriented behavior also while learning the temporally predictive model itself in the near future. Moreover, we intend to foster the development of both deeper event-predictive abstractions and compact, habitual behavioral primitives.

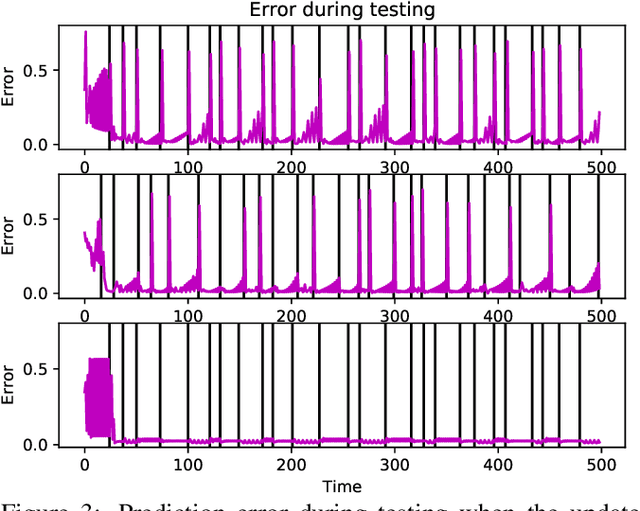

Sparsely Changing Latent States for Prediction and Planning in Partially Observable Domains

Oct 29, 2021

Abstract:A common approach to prediction and planning in partially observable domains is to use recurrent neural networks (RNNs), which ideally develop and maintain a latent memory about hidden, task-relevant factors. We hypothesize that many of these hidden factors in the physical world are constant over time, changing only sparsely. Accordingly, we propose Gated $L_0$ Regularized Dynamics (GateL0RD), a novel recurrent architecture that incorporates the inductive bias to maintain stable, sparsely changing latent states. The bias is implemented by means of a novel internal gating function and a penalty on the $L_0$ norm of latent state changes. We demonstrate that GateL0RD can compete with or outperform state-of-the-art RNNs in a variety of partially observable prediction and control tasks. GateL0RD tends to encode the underlying generative factors of the environment, ignores spurious temporal dependencies, and generalizes better, improving sampling efficiency and prediction accuracy as well as behavior in model-based planning and reinforcement learning tasks. Moreover, we show that the developing latent states can be easily interpreted, which is a step towards better explainability in RNNs.
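The idea of a gated, sparsely changing latent state can be illustrated with a small sketch. This is not the GateL0RD implementation: the function names `retanh` and `gated_step`, the weight matrices `W_g` and `W_c`, and the choice of a rectified tanh as the gate are assumptions made only for illustration. The sketch shows how a gate that can output exactly zero leaves latent dimensions untouched, and how counting the open gates gives a proxy for the $L_0$ norm of the state change.

```python
import numpy as np

def retanh(x):
    """Assumed gate activation: exactly zero for non-positive inputs, below 1 otherwise."""
    return np.maximum(0.0, np.tanh(x))

def gated_step(h_prev, x, W_g, W_c):
    """One illustrative update of a gated latent state (hypothetical helper)."""
    z = np.concatenate([h_prev, x])
    gate = retanh(W_g @ z)                 # 0 means "keep the old latent value"
    candidate = np.tanh(W_c @ z)           # proposed new latent value
    h_new = h_prev + gate * (candidate - h_prev)
    # Number of latent dimensions whose gate opened: a proxy for the
    # L0 norm of the latent state change at this step.
    n_changed = int(np.count_nonzero(gate))
    return h_new, n_changed

# Usage: with strongly negative gate weights the latent state stays fixed.
h = np.array([0.5, -0.2])
x = np.array([1.0])
W_c = np.full((2, 3), 0.3)
h_closed, n_closed = gated_step(h, x, -np.ones((2, 3)), W_c)
h_open, n_open = gated_step(h, x, np.ones((2, 3)), W_c)
```

Because a count of non-zero entries is not differentiable, any training procedure built on such a penalty would need a surrogate or estimator; the sketch leaves that part out.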

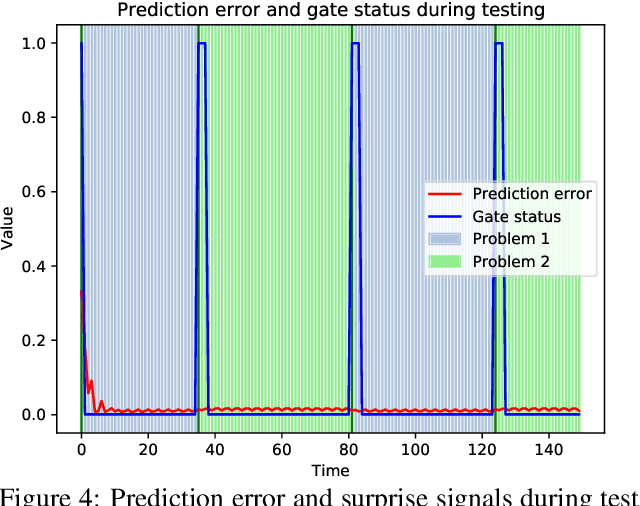

Latent Event-Predictive Encodings through Counterfactual Regularization

May 12, 2021

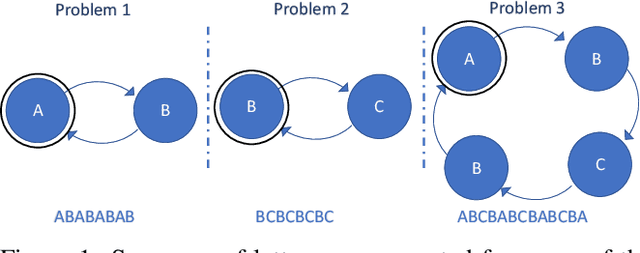

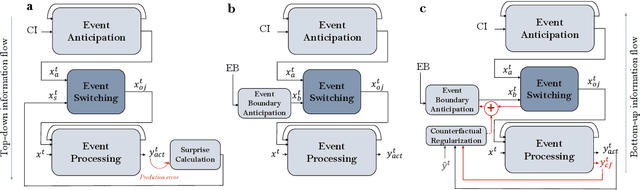

Abstract:A critical challenge for any intelligent system is to infer structure from continuous data streams. Theories of event-predictive cognition suggest that the brain segments sensorimotor information into compact event encodings, which are used to anticipate and interpret environmental dynamics. Here, we introduce a SUrprise-GAted Recurrent neural network (SUGAR) using a novel form of counterfactual regularization. We test the model on a hierarchical sequence prediction task, where sequences are generated by alternating hidden graph structures. Our model learns to both compress the temporal dynamics of the task into latent event-predictive encodings and anticipate event transitions at the right moments, given noisy hidden signals about them. The addition of the counterfactual regularization term ensures fluid transitions from one latent code to the next, whereby the resulting latent codes exhibit compositional properties. The implemented mechanisms offer a host of useful applications in other domains, including hierarchical reasoning, planning, and decision making.

Hierarchical principles of embodied reinforcement learning: A review

Dec 18, 2020

Abstract:Cognitive Psychology and related disciplines have identified several critical mechanisms that enable intelligent biological agents to learn to solve complex problems. There exists pressing evidence that the cognitive mechanisms that enable problem-solving skills in these species build on hierarchical mental representations. Among the most promising computational approaches to provide comparable learning-based problem-solving abilities for artificial agents and robots is hierarchical reinforcement learning. However, so far the existing computational approaches have not been able to equip artificial agents with problem-solving abilities that are comparable to intelligent animals, including human and non-human primates, crows, or octopuses. Here, we first survey the literature in Cognitive Psychology, and related disciplines, and find that many important mental mechanisms involve compositional abstraction, curiosity, and forward models. We then relate these insights with contemporary hierarchical reinforcement learning methods, and identify the key machine intelligence approaches that realise these mechanisms. As our main result, we show that all important cognitive mechanisms have been implemented independently in isolated computational architectures, and there is simply a lack of approaches that integrate them appropriately. We expect our results to guide the development of more sophisticated cognitively inspired hierarchical methods, so that future artificial agents achieve a problem-solving performance on the level of intelligent animals.

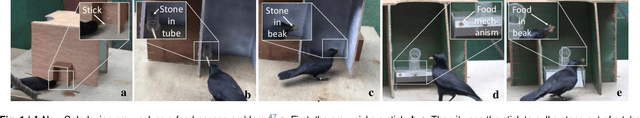

Autonomous Identification and Goal-Directed Invocation of Event-Predictive Behavioral Primitives

Feb 26, 2019

Abstract:Voluntary behavior of humans appears to be composed of small, elementary building blocks or behavioral primitives. While this modular organization seems crucial for the learning of complex motor skills and the flexible adaption of behavior to new circumstances, the problem of learning meaningful, compositional abstractions from sensorimotor experiences remains an open challenge. Here, we introduce a computational learning architecture, termed surprise-based behavioral modularization into event-predictive structures (SUBMODES), that explores behavior and identifies the underlying behavioral units completely from scratch. The SUBMODES architecture bootstraps sensorimotor exploration using a self-organizing neural controller. While exploring the behavioral capabilities of its own body, the system learns modular structures that predict the sensorimotor dynamics and generate the associated behavior. In line with recent theories of event perception, the system uses unexpected prediction error signals, i.e., surprise, to detect transitions between successive behavioral primitives. We show that, when applied to two robotic systems with completely different body kinematics, the system manages to learn a variety of complex and realistic behavioral primitives. Moreover, after initial self-exploration the system can use its learned predictive models progressively more effectively for invoking model predictive planning and goal-directed control in different tasks and environments.
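Using surprise to detect transitions between behavioral primitives can be sketched as a simple threshold on prediction error. The following is a minimal illustration and not the SUBMODES procedure: the function name `detect_event_boundaries`, the sliding `window`, and the factor `k` are hypothetical choices. A time step is flagged as a boundary when its prediction error exceeds the mean plus `k` standard deviations of the errors in the preceding window.

```python
import numpy as np

def detect_event_boundaries(errors, window=5, k=2.0):
    """Return indices whose error exceeds mean + k * std of the preceding window."""
    errors = np.asarray(errors, dtype=float)
    boundaries = []
    for t in range(window, len(errors)):
        recent = errors[t - window:t]              # errors just before step t
        threshold = recent.mean() + k * recent.std()
        if errors[t] > threshold:                  # unexpectedly large error
            boundaries.append(t)
    return boundaries

# Usage: a flat error signal with a single spike at index 10.
signal = [0.125] * 10 + [1.0] + [0.125] * 10
found = detect_event_boundaries(signal)
```

A relative threshold of this kind adapts to the current noise level, so a model that is generally inaccurate in one regime does not trigger a boundary at every step.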
