Fedor Scholz

Quick and Accurate Affordance Learning

May 13, 2024

Abstract: Infants learn actively in their environments, shaping their own learning curricula. They learn about their environments' affordances, that is, how local circumstances determine how their behavior can affect the environment. Here we model this type of behavior by means of a deep learning architecture. The architecture mediates between global cognitive map exploration and local affordance learning. Inference processes actively move the simulated agent towards regions where they expect affordance-related knowledge gain. We contrast three measures of uncertainty to guide this exploration: predicted uncertainty of a model, standard deviation between the means of several models (SD), and the Jensen-Shannon Divergence (JSD) between several models. We show that the first measure gets fooled by aleatoric uncertainty inherent in the environment, while the two other measures focus learning on epistemic uncertainty. JSD exhibits the most balanced exploration strategy. From a computational perspective, our model suggests three key ingredients for coordinating the active generation of learning curricula: (1) Navigation behavior needs to be coordinated with local motor behavior for enabling active affordance learning. (2) Affordances need to be encoded locally for acquiring generalized knowledge. (3) Effective active affordance learning mechanisms should use density comparison techniques for estimating expected knowledge gain. Future work may seek collaborations with developmental psychology to model active play in children in more realistic scenarios.
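The three uncertainty measures contrasted in the abstract can be illustrated with a small sketch. It is not the paper's implementation: it assumes each ensemble member outputs a one-dimensional Gaussian (mean and standard deviation), and it approximates the JSD numerically on a grid as the entropy of the equally weighted mixture minus the mean entropy of the members. The function names, the grid, and the example numbers are all hypothetical.

```python
import numpy as np

def gaussian_pdf(x, mu, sigma):
    """Density of a 1-D Gaussian evaluated at x."""
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))

def entropy_on_grid(p, dx):
    """Differential entropy of a density sampled on a uniform grid (Riemann sum)."""
    safe = np.where(p > 0, p, 1.0)  # log(1) = 0, so zero-density points contribute nothing
    return -np.sum(p * np.log(safe)) * dx

def uncertainty_measures(mus, sigmas, grid):
    """Return (predicted uncertainty, SD of means, JSD) for an ensemble.

    Assumption: each model predicts a 1-D Gaussian N(mu_i, sigma_i^2).
    """
    mus = np.asarray(mus, dtype=float)
    sigmas = np.asarray(sigmas, dtype=float)
    dx = grid[1] - grid[0]

    # (1) Predicted uncertainty: what the models themselves report (averaged here).
    predicted = float(np.mean(sigmas))

    # (2) SD: disagreement between the predicted means of the models.
    sd_of_means = float(np.std(mus))

    # (3) JSD: entropy of the mixture minus the mean entropy of the members.
    pdfs = gaussian_pdf(grid[None, :], mus[:, None], sigmas[:, None])
    mixture = pdfs.mean(axis=0)
    member_entropy = np.mean([entropy_on_grid(p, dx) for p in pdfs])
    jsd = float(entropy_on_grid(mixture, dx) - member_entropy)

    return predicted, sd_of_means, jsd

grid = np.linspace(-10.0, 10.0, 20001)

# Noisy region: the models agree, but the outcome itself is noisy (aleatoric).
noisy = uncertainty_measures([0.0, 0.0, 0.0], [2.0, 2.0, 2.0], grid)

# Unexplored region: confident models that disagree with each other (epistemic).
novel = uncertainty_measures([-1.0, 0.0, 1.0], [0.1, 0.1, 0.1], grid)
```

In this toy setting, the predicted uncertainty is larger in the noisy region even though the models agree there, whereas SD and JSD are near zero in the noisy region and large where the models disagree. This mirrors the abstract's distinction between aleatoric and epistemic uncertainty, under the stated Gaussian assumption.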

Inference of Affordances and Active Motor Control in Simulated Agents

Mar 18, 2022

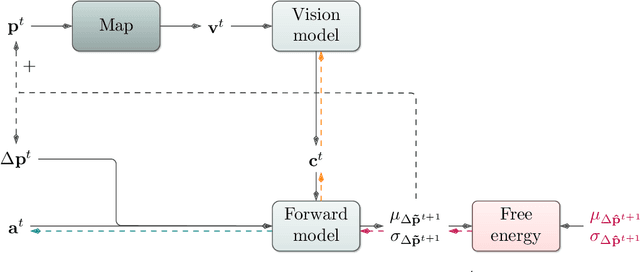

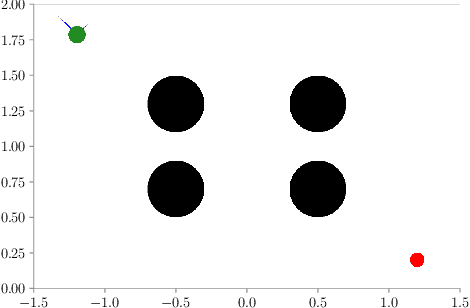

Abstract: Flexible, goal-directed behavior is a fundamental aspect of human life. Based on the free energy minimization principle, the theory of active inference formalizes the generation of such behavior from a computational neuroscience perspective. Based on the theory, we introduce an output-probabilistic, temporally predictive, modular artificial neural network architecture, which processes sensorimotor information, infers behavior-relevant aspects of its world, and invokes highly flexible, goal-directed behavior. We show that our architecture, which is trained end-to-end to minimize an approximation of free energy, develops latent states that can be interpreted as affordance maps. That is, the emerging latent states signal which actions lead to which effects dependent on the local context. In combination with active inference, we show that flexible, goal-directed behavior can be invoked, incorporating the emerging affordance maps. As a result, our simulated agent flexibly steers through continuous spaces, avoids collisions with obstacles, and prefers pathways that lead to the goal with high certainty. Additionally, we show that the learned agent is highly suitable for zero-shot generalization across environments: After training the agent in a handful of fixed environments with obstacles and other terrains affecting its behavior, it performs similarly well in procedurally generated environments containing different amounts of obstacles and terrains of various sizes at different locations. To improve and focus model learning further, we plan to invoke active inference-based, information-gain-oriented behavior also while learning the temporally predictive model itself in the near future. Moreover, we intend to foster the development of both deeper event-predictive abstractions and compact, habitual behavioral primitives.
