Christian Bock

SpIDER: Spatially Informed Dense Embedding Retrieval for Software Issue Localization

Dec 18, 2025

Abstract:Retrieving code units (e.g., files, classes, functions) that are semantically relevant to a given user query, bug report, or feature request from large codebases is a fundamental challenge for LLM-based coding agents. Agentic approaches typically employ sparse retrieval methods like BM25 or dense embedding strategies to identify relevant units. While embedding-based approaches can outperform BM25 by large margins, they often lack exploration of the codebase and underutilize its underlying graph structure. To address this, we propose SpIDER (Spatially Informed Dense Embedding Retrieval), an enhanced dense retrieval approach that incorporates LLM-based reasoning over auxiliary context obtained through graph-based exploration of the codebase. Empirical results show that SpIDER consistently improves dense retrieval performance across several programming languages.

Textual Gradients are a Flawed Metaphor for Automatic Prompt Optimization

Dec 15, 2025

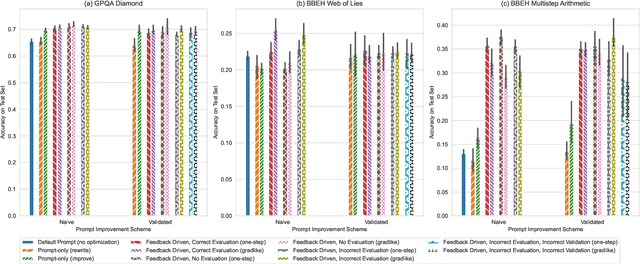

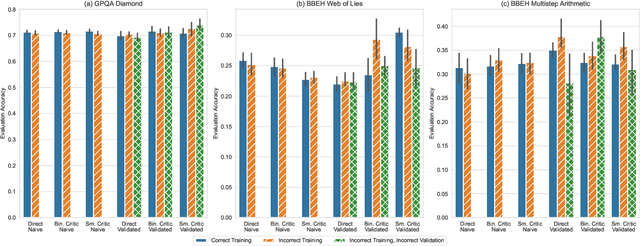

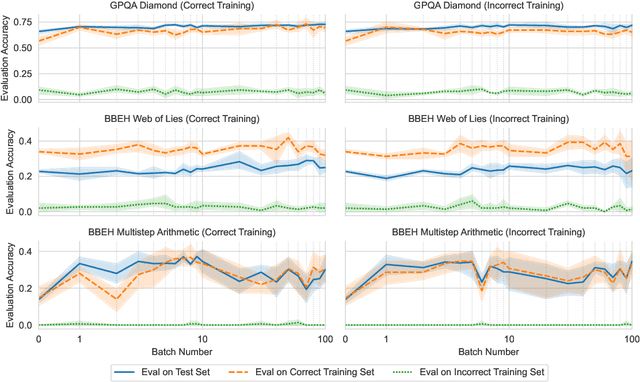

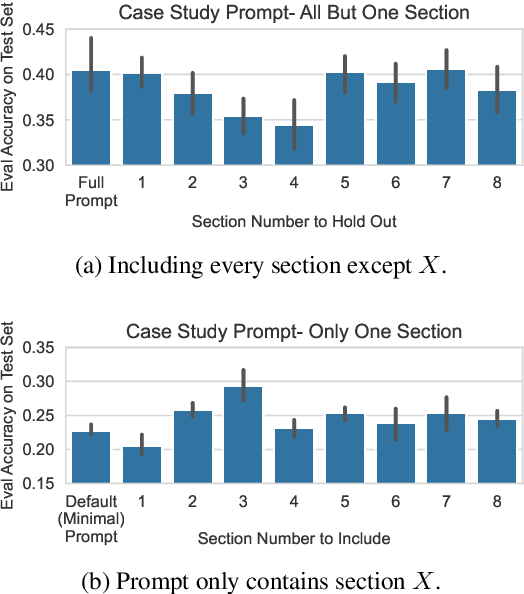

Abstract:A well-engineered prompt can increase the performance of large language models; automatic prompt optimization techniques aim to increase performance without requiring human effort to tune the prompts. One leading class of prompt optimization techniques introduces the analogy of textual gradients. We investigate the behavior of these textual gradient methods through a series of experiments and case studies. While such methods often result in a performance improvement, our experiments suggest that the gradient analogy does not accurately explain their behavior. Our insights may inform the selection of prompt optimization strategies, and development of new approaches.

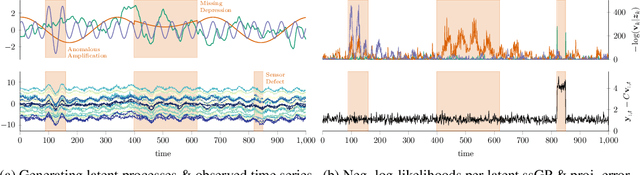

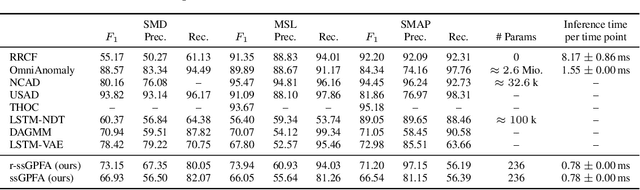

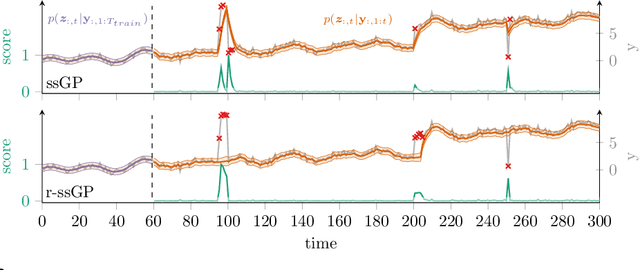

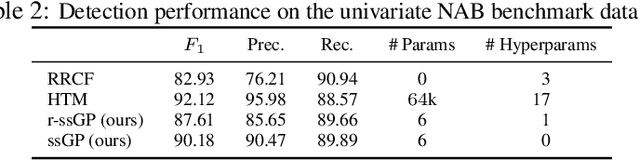

Online Time Series Anomaly Detection with State Space Gaussian Processes

Jan 18, 2022

Abstract:We propose r-ssGPFA, an unsupervised online anomaly detection model for uni- and multivariate time series building on the efficient state space formulation of Gaussian processes. For high-dimensional time series, we propose an extension of Gaussian process factor analysis to identify the common latent processes of the time series, allowing us to detect anomalies efficiently in an interpretable manner. We gain explainability while speeding up computations by imposing an orthogonality constraint on the mapping from the latent to the observed. Our model's robustness is improved by using a simple heuristic to skip Kalman updates when encountering anomalous observations. We investigate the behaviour of our model on synthetic data and show on standard benchmark datasets that our method is competitive with state-of-the-art methods while being computationally cheaper.

Uncovering the Topology of Time-Varying fMRI Data using Cubical Persistence

Jun 14, 2020

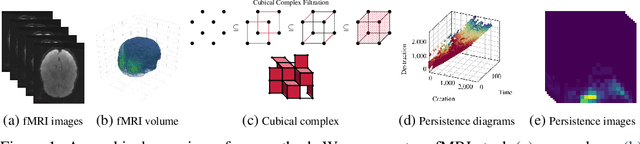

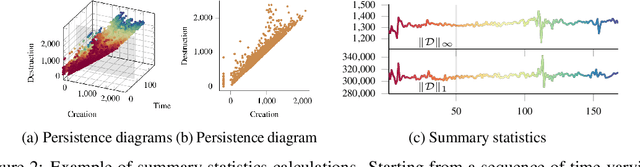

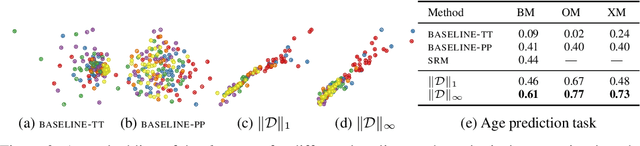

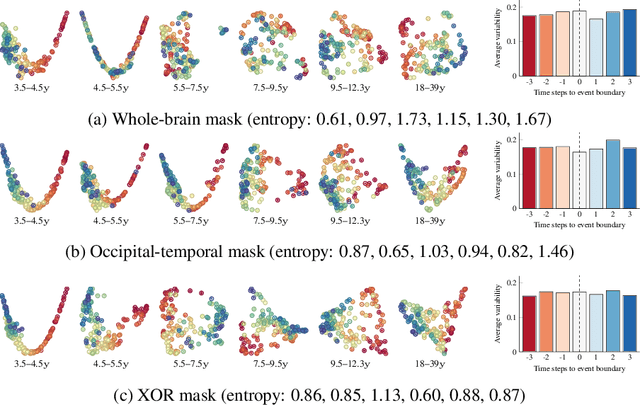

Abstract:Functional magnetic resonance imaging (fMRI) is a crucial technology for gaining insights into cognitive processes in humans. Data amassed from fMRI measurements result in volumetric data sets that vary over time. However, analysing such data presents a challenge due to the large degree of noise and person-to-person variation in how information is represented in the brain. To address this challenge, we present a novel topological approach that encodes each time point in an fMRI data set as a persistence diagram of topological features, i.e. high-dimensional voids present in the data. This representation naturally does not rely on voxel-by-voxel correspondence and is robust towards noise. We show that these time-varying persistence diagrams can be clustered to find meaningful groupings between participants, and that they are also useful in studying within-subject brain state trajectories of subjects performing a particular task. Here, we apply both clustering and trajectory analysis techniques to a group of participants watching the movie 'Partly Cloudy'. We observe significant differences in both brain state trajectories and overall topological activity between adults and children watching the same movie.

Path Imputation Strategies for Signature Models of Irregular Time Series

Jun 06, 2020

Abstract:The signature transform is a 'universal nonlinearity' on the space of continuous vector-valued paths, and has received attention for use in machine learning on time series. However, real-world temporal data is typically observed at discrete points in time, and must first be transformed into a continuous path before signature techniques can be applied. We make this step explicit by characterising it as an imputation problem, and empirically assess the impact of various imputation strategies when applying signature-based neural nets to irregular time series data. For one of these strategies, Gaussian process (GP) adapters, we propose an extension (GP-PoM) that makes uncertainty information directly available to the subsequent classifier while at the same time preventing costly Monte-Carlo (MC) sampling. In our experiments, we find that the choice of imputation drastically affects shallow signature models, whereas deeper architectures are more robust. Next, we observe that uncertainty-aware predictions (based on GP-PoM or indicator imputations) are beneficial for predictive performance, even compared to the uncertainty-aware training of conventional GP adapters. In conclusion, we have demonstrated that the path construction is indeed crucial for signature models and that our proposed strategy leads to competitive performance in general, while improving robustness of signature models in particular.

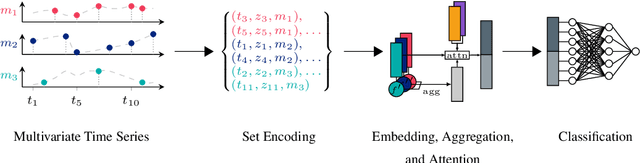

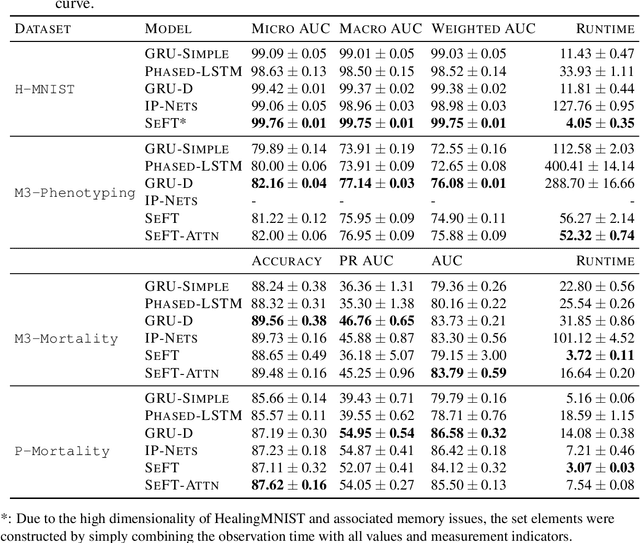

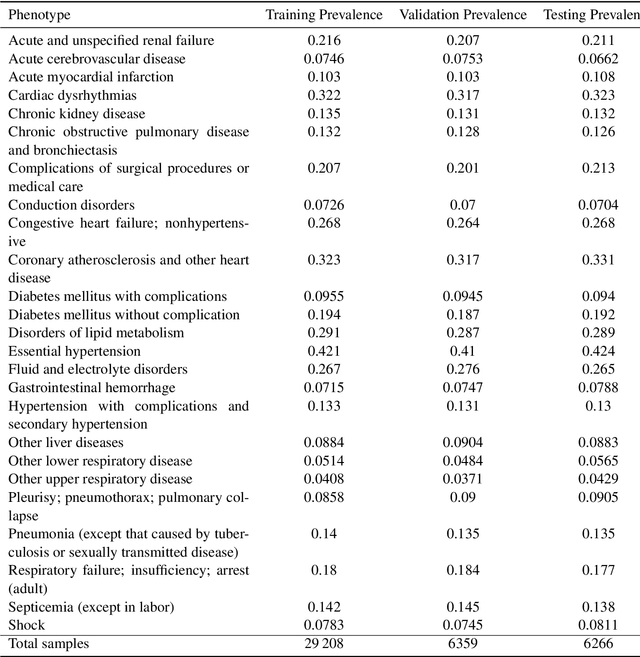

Set Functions for Time Series

Sep 26, 2019

Abstract:Despite the eminent successes of deep neural networks, many architectures are often hard to transfer to irregularly-sampled and asynchronous time series that occur in many real-world datasets, such as healthcare applications. This paper proposes a novel framework for classifying irregularly sampled time series with unaligned measurements, focusing on high scalability and data efficiency. Our method SEFT (Set Functions for Time Series) is based on recent advances in differentiable set function learning, is extremely parallelizable, and scales well to very large datasets and online monitoring scenarios. We extensively compare our method to competitors on multiple healthcare time series datasets and show that it performs competitively whilst significantly reducing runtime.

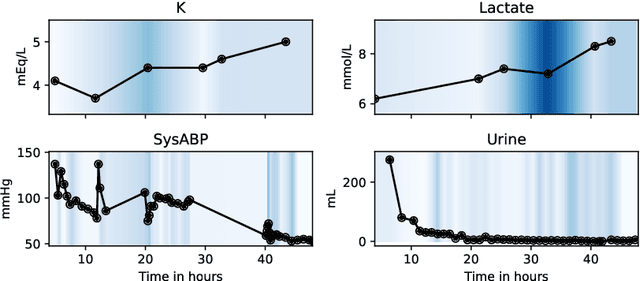

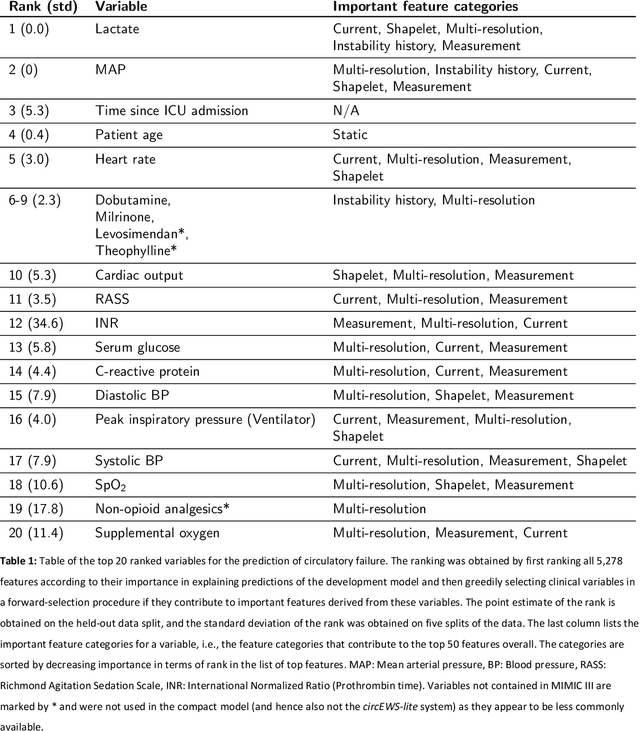

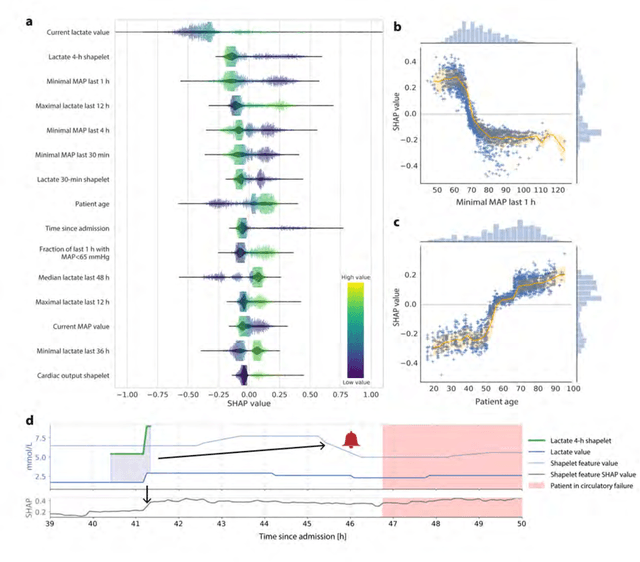

Machine learning for early prediction of circulatory failure in the intensive care unit

Apr 19, 2019

Abstract:Intensive care clinicians are presented with large quantities of patient information and measurements from a multitude of monitoring systems. The limited ability of humans to process such complex information hinders physicians from readily recognizing and acting on early signs of patient deterioration. We used machine learning to develop an early warning system for circulatory failure based on a high-resolution ICU database with 240 patient years of data. This automatic system predicts 90.0% of circulatory failure events (prevalence 3.1%), with 81.8% identified more than two hours in advance, resulting in an area under the receiver operating characteristic curve of 94.0% and area under the precision-recall curve of 63.0%. The model was externally validated in a large independent patient cohort.

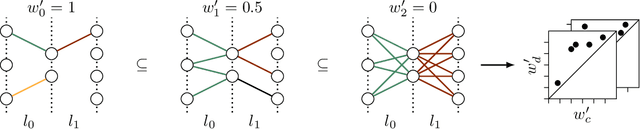

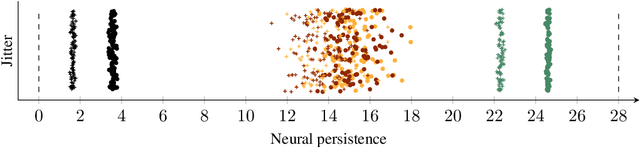

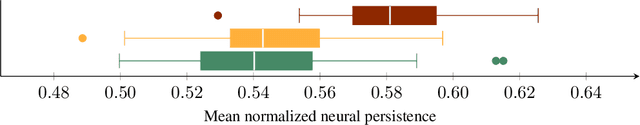

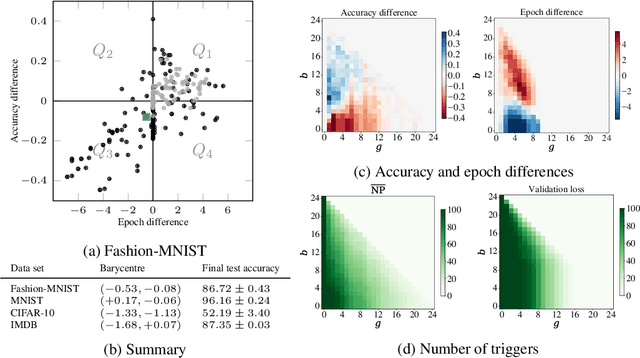

Neural Persistence: A Complexity Measure for Deep Neural Networks Using Algebraic Topology

Dec 23, 2018

Abstract:While many approaches to make neural networks more fathomable have been proposed, they are restricted to interrogating the network with input data. Measures for characterizing and monitoring structural properties, however, have not been developed. In this work, we propose neural persistence, a complexity measure for neural network architectures based on topological data analysis on weighted stratified graphs. To demonstrate the usefulness of our approach, we show that neural persistence reflects best practices developed in the deep learning community such as dropout and batch normalization. Moreover, we derive a neural persistence-based stopping criterion that shortens the training process while achieving accuracies comparable to those of early stopping based on validation loss.
