Chengkang Pan

Multi-User Matching and Resource Allocation in Vision Aided Communications

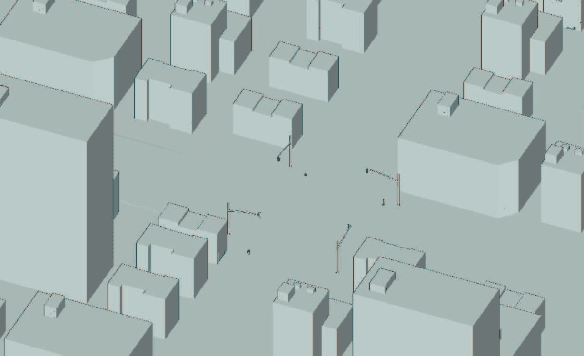

Apr 18, 2023

Abstract: Visual perception is an effective way to obtain the spatial characteristics of wireless channels and to reduce the overhead of communications systems. A critical problem for visual assistance is that the communications system needs to match the radio signal with the visual information of the corresponding user, i.e., to identify, among all environmental objects, the visual user that corresponds to the target radio signal. In this paper, we propose a user matching method for environments with a variable number of objects. Specifically, we apply 3D detection to extract all environmental objects from the images taken by multiple cameras. Then, we design a deep neural network (DNN) that estimates the location distribution of users from the images and beam pairs at multiple moments, and thereby identifies the users among all the extracted environmental objects. Moreover, we present a resource allocation method based on the captured images that reduces the time and spectrum overhead compared with traditional resource allocation methods. Simulation results show that the proposed user matching method outperforms existing methods, and that the proposed resource allocation method achieves $92\%$ of the transmission rate of the traditional resource allocation method with significantly reduced time and spectrum overhead.
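To make the matching step concrete, the sketch below assumes the DNN outputs an isotropic 2D Gaussian (mean position and standard deviation) for each radio user, and that 3D detection yields ground-plane positions of all objects. It scores every detected object under each user's distribution and picks the one-to-one assignment with the highest total log-likelihood by exhaustive search. The Gaussian model, the function names, and the brute-force search are hypothetical illustration choices, not the paper's method.

```python
import itertools
import math
from typing import List, Tuple

Point = Tuple[float, float]


def gaussian_log_likelihood(p: Point, mean: Point, sigma: float) -> float:
    """Log-density of point p under an isotropic 2D Gaussian (hypothetical user-location model)."""
    d2 = (p[0] - mean[0]) ** 2 + (p[1] - mean[1]) ** 2
    return -d2 / (2 * sigma ** 2) - math.log(2 * math.pi * sigma ** 2)


def match_users(user_estimates: List[Tuple[Point, float]], objects: List[Point]) -> List[int]:
    """For each radio user (mean, sigma), return the index of the detected object assigned to it.

    Exhaustive search over one-to-one assignments, so it only suits a handful of
    users and objects. Extra objects (pedestrians, parked cars, ...) stay unmatched.
    """
    if len(objects) < len(user_estimates):
        raise ValueError("need at least as many detected objects as radio users")
    best_score, best_assign = -math.inf, []
    for assign in itertools.permutations(range(len(objects)), len(user_estimates)):
        score = sum(
            gaussian_log_likelihood(objects[obj], mean, sigma)
            for (mean, sigma), obj in zip(user_estimates, assign)
        )
        if score > best_score:
            best_score, best_assign = score, list(assign)
    return best_assign


users = [((10.0, 2.0), 1.5), ((-4.0, 7.0), 1.0)]  # hypothetical DNN outputs: (mean, std) per user
objects = [(-3.5, 6.8), (20.0, 0.0), (9.2, 2.5)]  # hypothetical detected object positions
print(match_users(users, objects))  # [2, 0]
```

Because the number of detected objects may exceed the number of users, the search ranges over ordered selections rather than full permutations; a real system with many objects would replace it with a polynomial-time assignment solver.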

Vision Aided Environment Semantics Extraction and Its Application in mmWave Beam Selection

Jan 21, 2023

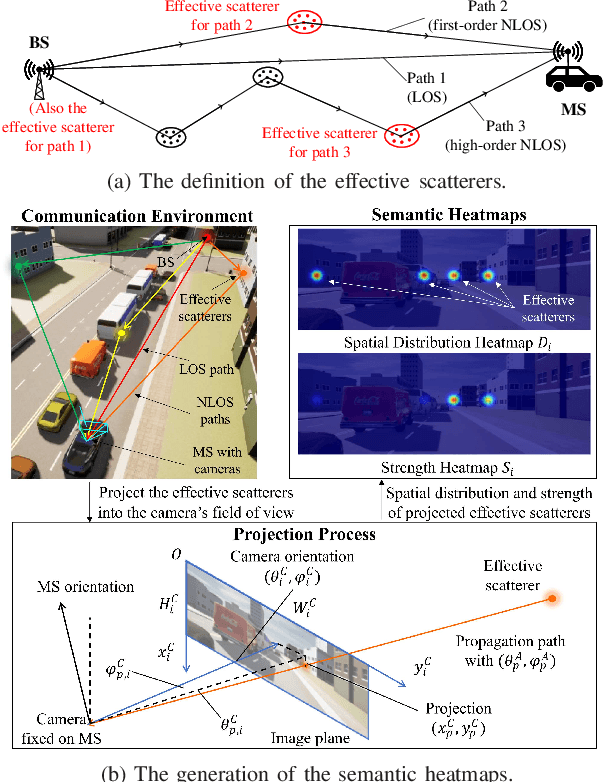

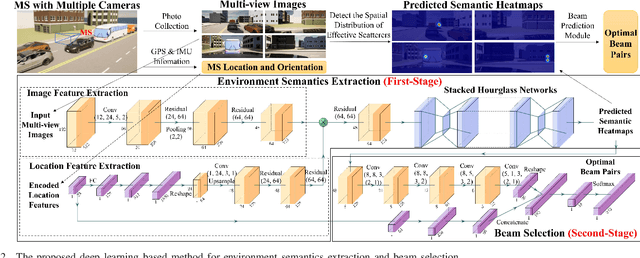

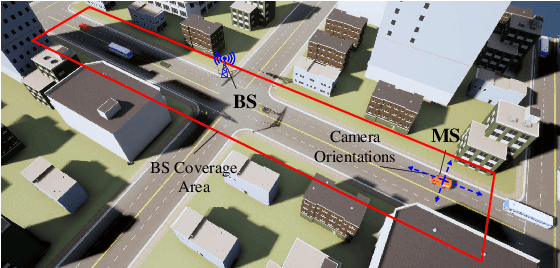

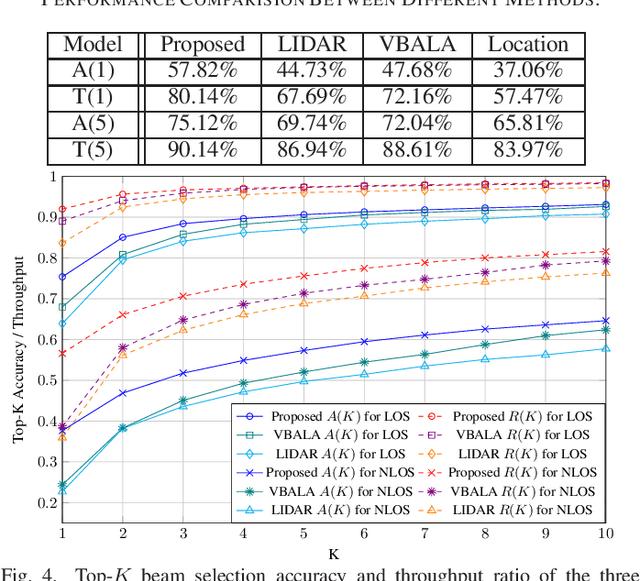

Abstract: In this letter, we propose a novel mmWave beam selection method based on environment semantics extracted from camera images taken at the user side. Specifically, we first define the environment semantics as the spatial distribution of the scatterers that affect the wireless propagation channels, and we use keypoint detection to extract them from the input images. Then, we design a deep neural network that takes the environment semantics as input and outputs the optimal beam pair at the UE and BS. Compared with existing beam selection approaches that use images directly as input, the proposed semantics-based method explicitly obtains the environmental features that govern the propagation of wireless signals, and thus reduces the storage and computation burden. Simulation results show that the proposed method can precisely estimate the locations of the scatterers and outperforms existing image- or LIDAR-based works.
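For intuition about why scatterer locations carry beam information, the sketch below uses a purely geometric stand-in for the learned mapping: given 2D positions of the BS, the UE, and one dominant scatterer, it computes the departure and arrival angles of the single-bounce path and picks the nearest beam in each side's codebook. The uniform codebook over [-90°, +90°] around each array's boresight, the 2D geometry, and all names are assumptions for illustration; the letter itself uses a DNN.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def beam_index(angle_rad: float, boresight_rad: float, num_beams: int) -> int:
    """Index of the codebook beam nearest to angle_rad.

    Hypothetical codebook: num_beams directions spaced evenly over
    [-90 deg, +90 deg] relative to the array boresight.
    """
    # Wrap the relative angle into (-pi, pi], then clip to the array's front half-plane.
    rel = math.atan2(math.sin(angle_rad - boresight_rad), math.cos(angle_rad - boresight_rad))
    rel = max(-math.pi / 2, min(math.pi / 2, rel))
    step = math.pi / (num_beams - 1)
    return round((rel + math.pi / 2) / step)


def beam_pair_via_scatterer(bs: Point, ue: Point, scatterer: Point,
                            bs_boresight: float, ue_boresight: float,
                            num_beams: int = 16) -> Tuple[int, int]:
    """Geometric (BS beam, UE beam) pair for a single-bounce path BS -> scatterer -> UE."""
    aod = math.atan2(scatterer[1] - bs[1], scatterer[0] - bs[0])  # departure angle at the BS
    aoa = math.atan2(scatterer[1] - ue[1], scatterer[0] - ue[0])  # arrival angle at the UE
    return (beam_index(aod, bs_boresight, num_beams),
            beam_index(aoa, ue_boresight, num_beams))


# BS at the origin facing +x, UE 20 m away facing -x, scatterer between and above them.
print(beam_pair_via_scatterer((0.0, 0.0), (20.0, 0.0), (10.0, 10.0), 0.0, math.pi, num_beams=17))
```

A learned model can account for multiple scatterers, blockage, and non-ideal beam patterns that this single-path geometry ignores.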

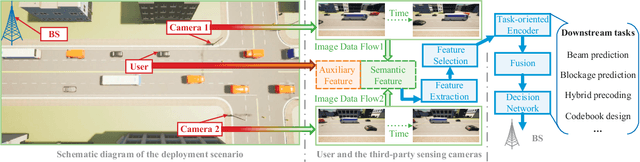

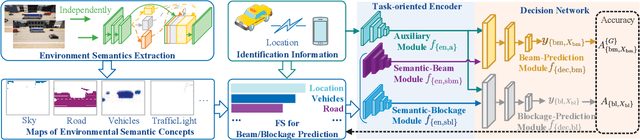

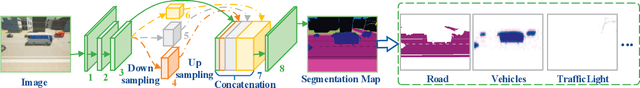

Environment Semantics Aided Wireless Communications: A Case Study of mmWave Beam Prediction and Blockage Prediction

Jan 14, 2023

Abstract: In this paper, we propose an environment semantics aided wireless communication framework to reduce transmission latency and improve transmission reliability, in which semantic information is extracted from environment image data, selectively encoded according to its task relevance, and then fused to make decisions for channel-related tasks. As a case study, we develop an environment semantics aided network architecture for mmWave communication systems, composed of a semantic feature extraction network, a feature selection algorithm, a task-oriented encoder, and a decision network. With images taken by street cameras and users' identification information as inputs, the architecture is trained to predict the optimal beam index and the blockage state for the base station. Without pilot training or costly beam scans, the architecture achieves highly efficient beam prediction and timely blockage prediction, thus meeting the requirements of ultra-reliable low-latency communications (URLLC). Simulation results demonstrate that, compared with existing works, the proposed architecture reduces system overheads such as storage space and computational cost while achieving satisfactory prediction accuracy and protecting user privacy.
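The idea of keeping only task-relevant semantic features can be sketched with a simple filter-style selection. The example below assumes task relevance is measured by the absolute Pearson correlation between each feature and the task label (for instance, the beam index) and keeps the top k features; this scoring rule and the function names are hypothetical and may differ from the paper's feature selection algorithm.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def select_task_relevant(features: List[List[float]], target: Sequence[float], k: int) -> List[int]:
    """Indices (ascending) of the k feature columns most correlated, in magnitude, with the target.

    features[i][j] is feature j of sample i.
    """
    scores = []
    for j in range(len(features[0])):
        column = [row[j] for row in features]
        scores.append((abs(pearson(column, target)), j))
    scores.sort(reverse=True)
    return sorted(j for _, j in scores[:k])


feats = [[1.0, 5.0, 0.0], [2.0, 3.0, 0.0], [3.0, 4.0, 0.0], [4.0, 1.0, 0.0]]
target = [1.0, 2.0, 3.0, 4.0]
print(select_task_relevant(feats, target, 2))  # [0, 1]
```

The constant third feature carries no information about the target and is dropped, which is the kind of storage and computation saving the framework aims for.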

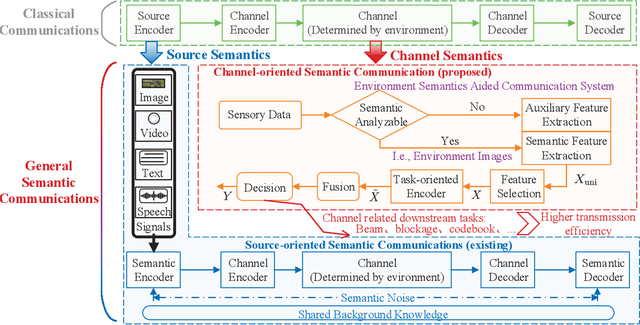

A Generalized Semantic Communication System: from Sources to Channels

Jan 11, 2023

Abstract: Semantic communication, which transmits only semantic information to significantly improve communication efficiency, is regarded as a breakthrough beyond the Shannon paradigm. This article introduces a framework for a generalized semantic communication system that exploits the semantic information in both the multimodal source and the wireless channel environment. Two examples then demonstrate the developed techniques: deep learning enabled end-to-end semantic communication and environment semantics aided wireless communication. The article concludes with several research challenges for advancing such a generalized semantic communication system.

5G PRS-Based Sensing: A Sensing Reference Signal Approach for Joint Sensing and Communication System

Nov 21, 2022

Abstract: The emerging joint sensing and communication (JSC) technology is expected to support new applications and services, such as autonomous driving and extended reality (XR), in future wireless communication systems. Pilot (or reference) signals in wireless communications usually have good passive detection performance, strong anti-noise capability, and good auto-correlation characteristics, so they have potential for radar sensing. In this paper, we investigate how to apply the positioning reference signal (PRS) of 5th generation (5G) mobile communications to radar sensing. This approach has the unique benefit of being compatible with the most advanced mobile communication system available so far. Thus, the PRS can be regarded as a sensing reference signal that conveniently realizes radar sensing, communication, and positioning simultaneously. First, we propose a PRS-based radar sensing scheme and analyze its range and velocity estimation performance, based on which we propose a method that uses multiple frames to improve the accuracy of velocity estimation. Furthermore, we derive the Cramér-Rao lower bound (CRLB) of range and velocity estimation for PRS-based radar sensing and the CRLB of range estimation for PRS-based positioning. Our analysis and simulation results demonstrate the feasibility and superiority of PRS over other pilot signals in radar sensing. Finally, based on our study, we offer some suggestions for the future 5G-Advanced and 6th generation (6G) frame structure design containing the sensing reference signal.
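Since the PRS is an OFDM signal, range and velocity can be estimated with standard OFDM radar processing: divide the received resource elements by the known transmitted symbols, take an inverse DFT across subcarriers (delay) and a DFT across symbols (Doppler), and locate the peak. The sketch below is a simplified illustration under stated assumptions: QPSK symbols on every resource element (the actual PRS uses a comb pattern), a single noise-free target placed exactly on integer bins, and hypothetical names and parameter values.

```python
import cmath
import math
import random
from typing import List, Tuple

C = 299_792_458.0  # speed of light in m/s
Grid = List[List[complex]]  # indexed [subcarrier][symbol]


def simulate_echo(n_sc: int, n_sym: int, delay_bin: int, doppler_bin: int,
                  seed: int = 0) -> Tuple[Grid, Grid]:
    """Noise-free echo of one point target on integer delay/Doppler bins (simplified, not real PRS)."""
    rng = random.Random(seed)
    qpsk = [complex(a, b) / math.sqrt(2) for a in (1, -1) for b in (1, -1)]
    tx = [[rng.choice(qpsk) for _ in range(n_sym)] for _ in range(n_sc)]
    rx = [[tx[n][m]
           * cmath.exp(-2j * math.pi * n * delay_bin / n_sc)   # delay: phase ramp across subcarriers
           * cmath.exp(2j * math.pi * m * doppler_bin / n_sym)  # Doppler: phase ramp across symbols
           for m in range(n_sym)] for n in range(n_sc)]
    return tx, rx


def estimate_bins(tx: Grid, rx: Grid) -> Tuple[int, int]:
    """Element-wise division, IDFT over subcarriers, DFT over symbols, then peak search."""
    n_sc, n_sym = len(tx), len(tx[0])
    z = [[rx[n][m] / tx[n][m] for m in range(n_sym)] for n in range(n_sc)]
    # Range profile for each symbol: IDFT along the subcarrier axis.
    r = [[sum(z[n][m] * cmath.exp(2j * math.pi * n * k / n_sc) for n in range(n_sc))
          for m in range(n_sym)] for k in range(n_sc)]
    best, best_kl = -1.0, (0, 0)
    for k in range(n_sc):
        for l in range(n_sym):
            # Doppler spectrum: DFT along the symbol axis.
            val = abs(sum(r[k][m] * cmath.exp(-2j * math.pi * m * l / n_sym) for m in range(n_sym)))
            if val > best:
                best, best_kl = val, (k, l)
    k, l = best_kl
    if l >= n_sym // 2:  # upper Doppler bins represent negative Doppler shifts
        l -= n_sym
    return k, l


def bins_to_physical(k: int, l: int, n_sc: int, n_sym: int, scs_hz: float,
                     sym_duration_s: float, carrier_hz: float) -> Tuple[float, float]:
    """Range (m) and radial velocity (m/s) of the detected bins."""
    range_res = C / (2 * n_sc * scs_hz)                        # c / (2B), B = n_sc * subcarrier spacing
    vel_res = (C / carrier_hz) / (2 * n_sym * sym_duration_s)  # lambda / (2 * observation time)
    return k * range_res, l * vel_res


tx, rx = simulate_echo(n_sc=32, n_sym=16, delay_bin=5, doppler_bin=3)
k, l = estimate_bins(tx, rx)
print(k, l)  # 5 3
print(bins_to_physical(k, l, 32, 16, scs_hz=30e3, sym_duration_s=35.7e-6, carrier_hz=3.5e9))
```

The velocity resolution shrinks as the observation time `n_sym * sym_duration_s` grows, which is the basic intuition for why combining multiple frames can sharpen velocity estimates.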

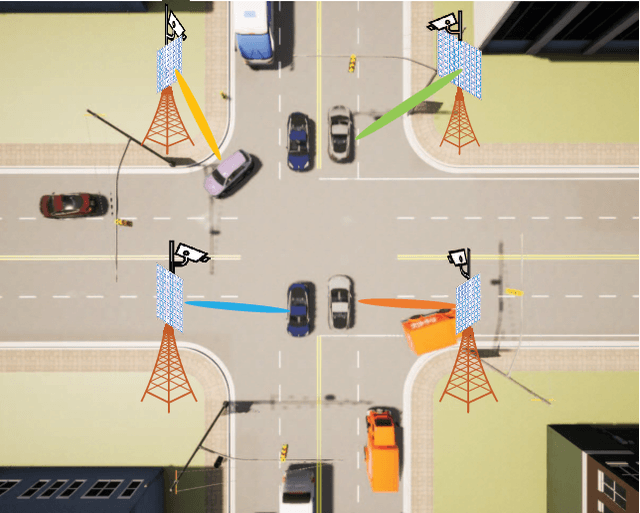

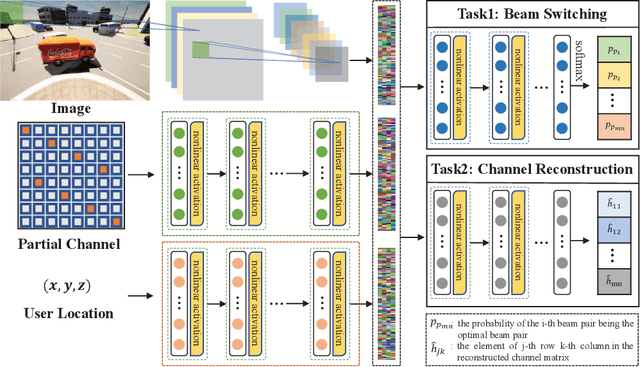

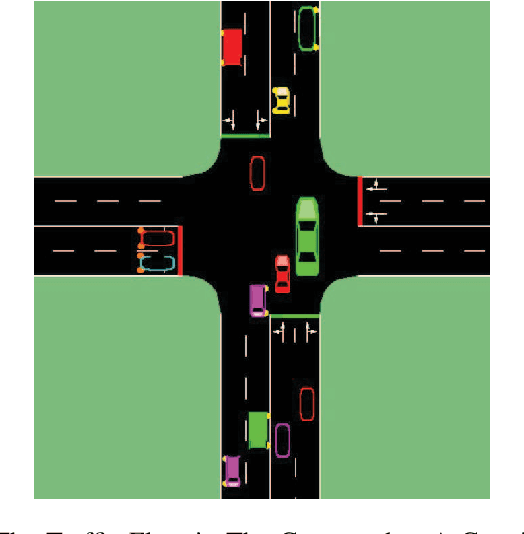

Multi-Camera View Based Proactive BS Selection and Beam Switching for V2X

Jul 12, 2022

Abstract: Due to the short wavelength and large attenuation of millimeter-wave (mmWave) signals, mmWave BSs are densely deployed and require highly directional beamforming. When the user moves out of the coverage of the current BS or is severely blocked, the serving mmWave BS must be switched to ensure communication quality. In this paper, we propose a multi-camera view based proactive BS selection and beam switching scheme that predicts the user's optimal BS in a future frame and switches to the corresponding beam pair. Specifically, we extract features from multi-camera view images and a small portion of the channel state information (CSI) in historical frames, and dynamically adjust the weight of each modality's features. Then, we design a multi-task learning module that guides the network to better learn the main task, thereby enhancing the accuracy and robustness of BS selection and beam switching. Using the outputs of all tasks, a prior-knowledge based fine-tuning network is designed to further increase the BS switching accuracy. After the optimal BS is obtained, a beam pair switching network is proposed to directly predict the optimal beam pair of that BS. Simulation results in an outdoor intersection environment show the superior performance of the proposed solution under several metrics, such as prediction accuracy, achievable rate, and the harmonic mean of precision and recall (F1 score).
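One common way to "dynamically adjust the weight of each modality feature" is attention-style fusion: score each modality embedding, normalize the scores with a softmax, and take the weighted sum. The sketch below assumes every modality has already been encoded to a vector of the same length and uses a fixed scoring vector where a trained network would use learned parameters; the function names are hypothetical and this is not necessarily the paper's fusion module. It also includes the F1 score (harmonic mean of precision and recall) named among the evaluation metrics.

```python
import math
from typing import Dict, List, Tuple


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_modalities(features: Dict[str, List[float]],
                    score_weights: List[float]) -> Tuple[Dict[str, float], List[float]]:
    """Attention-style fusion of equal-length modality embeddings.

    Each modality gets a scalar score (dot product with score_weights, learned in
    practice), the scores are softmax-normalized into weights, and the fused
    feature is the weighted sum of the embeddings.
    """
    names = sorted(features)
    scores = [sum(w * f for w, f in zip(score_weights, features[n])) for n in names]
    alphas = softmax(scores)
    dim = len(score_weights)
    fused = [sum(a * features[n][i] for a, n in zip(alphas, names)) for i in range(dim)]
    return dict(zip(names, alphas)), fused


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


weights, fused = fuse_modalities({"camera": [1.0, 0.0], "csi": [0.0, 1.0]}, [2.0, 0.0])
print(weights)  # camera receives about 0.88 of the weight, csi about 0.12
```

Because the weights are recomputed from each input, a frame in which one modality is uninformative (for example, an occluded camera view) can shift weight toward the other modality.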

A Robust Deep Learning Enabled Semantic Communication System for Text

Jun 06, 2022

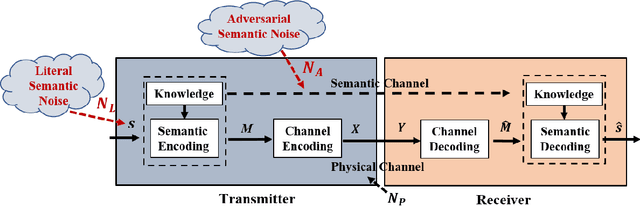

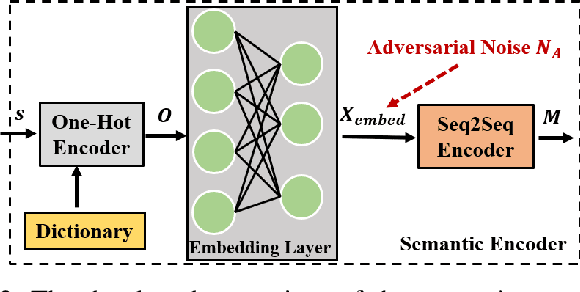

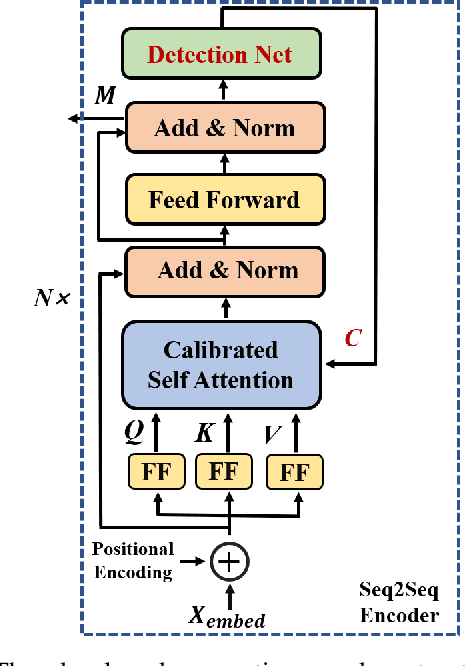

Abstract: With the advent of the 6G era, the concept of semantic communication has attracted increasing attention. Compared with conventional communication systems, semantic communication systems are affected not only by physical noise in the wireless communication environment, e.g., additive white Gaussian noise, but also by semantic noise arising from the source and the nature of deep learning-based systems. In this paper, we elaborate on the mechanism of semantic noise. In particular, we divide semantic noise into two categories: literal semantic noise and adversarial semantic noise. The former is caused by writing errors or ambiguous expressions, while the latter is caused by perturbations or attacks added to the embedding layer via the semantic channel. To prevent semantic noise from degrading semantic communication systems, we present a robust deep learning enabled semantic communication system (R-DeepSC) that leverages a calibrated self-attention mechanism and adversarial training to tackle semantic noise. Compared with baseline models that only consider physical noise for text transmission, the proposed R-DeepSC achieves remarkable performance in dealing with semantic noise under different signal-to-noise ratios.
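Adversarial semantic noise on the embedding layer, and adversarial training against it, can be illustrated with the fast gradient sign method (FGSM), a standard way to craft such perturbations. FGSM is a swapped-in textbook technique here, not necessarily how R-DeepSC generates its perturbations, and the model is a hypothetical toy: a linear read-out with squared loss, so the gradient with respect to the embedding has the closed form $2(\mathbf{w}^\top\mathbf{e} - y)\,\mathbf{w}$.

```python
from typing import List

Vector = List[float]


def predict(w: Vector, e: Vector) -> float:
    """Toy linear read-out standing in for the layers after the embedding."""
    return sum(wi * ei for wi, ei in zip(w, e))


def loss(w: Vector, e: Vector, y: float) -> float:
    """Squared error of the toy read-out."""
    return (predict(w, e) - y) ** 2


def fgsm_perturb(w: Vector, e: Vector, y: float, eps: float) -> Vector:
    """Fast gradient sign method on the embedding: e + eps * sign(dL/de).

    For the toy model, dL/de = 2 * (w.e - y) * w, so no autodiff is needed.
    """
    residual = predict(w, e) - y
    grad = [2 * residual * wi for wi in w]
    return [ei + eps * ((gi > 0) - (gi < 0)) for ei, gi in zip(e, grad)]


def adversarial_training_step(w: Vector, e: Vector, y: float, eps: float, lr: float) -> Vector:
    """One SGD step on w, computed on the FGSM-perturbed embedding (dL/dw = 2 * residual * e_adv)."""
    e_adv = fgsm_perturb(w, e, y, eps)
    residual = predict(w, e_adv) - y
    return [wi - lr * 2 * residual * ei for wi, ei in zip(w, e_adv)]


w, e, y = [1.0, -2.0], [0.5, 0.5], 0.0
e_adv = fgsm_perturb(w, e, y, eps=0.1)
print(loss(w, e, y), loss(w, e_adv, y))  # the perturbed embedding yields the larger loss
w_new = adversarial_training_step(w, e, y, eps=0.1, lr=0.1)
```

Training on perturbed embeddings pushes the weights toward values that keep the loss low even under the worst-case small perturbation, which is the intuition behind adversarial training.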

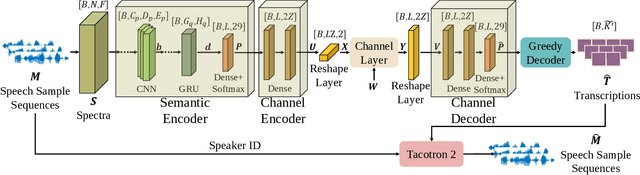

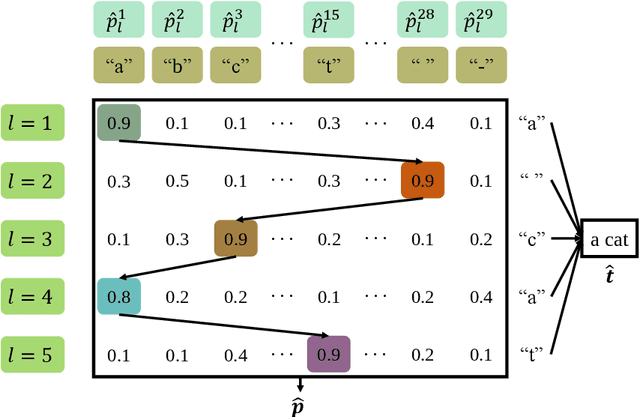

Deep Learning Enabled Semantic Communications with Speech Recognition and Synthesis

May 09, 2022

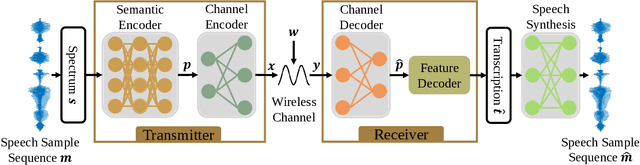

Abstract: In this paper, we develop a deep learning based semantic communication system for speech transmission, named DeepSC-ST. We take speech recognition and speech synthesis as the transmission tasks of the communication system. First, the semantic features related to speech recognition are extracted for transmission by a joint semantic-channel encoder, and the text is recovered at the receiver from the received semantic features, which significantly reduces the amount of data to be transmitted without performance degradation. Then, we perform speech synthesis at the receiver, regenerating the speech signals by feeding the recognized text transcription into a neural network based speech synthesis module. To make DeepSC-ST adaptive to dynamic channel environments, we identify a robust model that copes with different channel conditions. Simulation results show that the proposed DeepSC-ST significantly outperforms conventional communication systems, especially in the low signal-to-noise ratio (SNR) regime. A demonstration is further developed as a proof of concept of DeepSC-ST.
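The abstract does not say how the robust model is obtained; one common practice for learned transceivers is to pass the encoder output through a simulated channel whose SNR is drawn at random for each training batch. The sketch below shows such a power-normalized real-valued AWGN channel under that assumption; the SNR range, the names, and the per-call sampling are hypothetical, not DeepSC-ST's documented setup.

```python
import math
import random
from typing import List, Tuple


def normalize_power(x: List[float]) -> List[float]:
    """Scale the transmitted feature vector to unit average power."""
    p = sum(v * v for v in x) / len(x)
    return [v / math.sqrt(p) for v in x]


def awgn(x: List[float], snr_db: float, rng: random.Random) -> List[float]:
    """Real AWGN channel for a unit-power input: noise variance = 10^(-SNR_dB / 10)."""
    noise_std = math.sqrt(10 ** (-snr_db / 10))
    return [v + rng.gauss(0.0, noise_std) for v in x]


def training_channel(x: List[float], rng: random.Random,
                     snr_range_db: Tuple[float, float] = (0.0, 20.0)) -> Tuple[List[float], float]:
    """Draw a fresh SNR per call (e.g., per training batch) and pass x through the channel."""
    snr_db = rng.uniform(*snr_range_db)
    return awgn(normalize_power(x), snr_db, rng), snr_db


rng = random.Random(0)
features = [1.0, -2.0, 3.0, 0.5] * 250  # stand-in for joint semantic-channel encoder outputs
received, snr_db = training_channel(features, rng)
```

Exposing the model to a spread of SNRs during training lets a single set of weights serve varying channel conditions instead of retraining one model per SNR.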
