Chengkai Dai

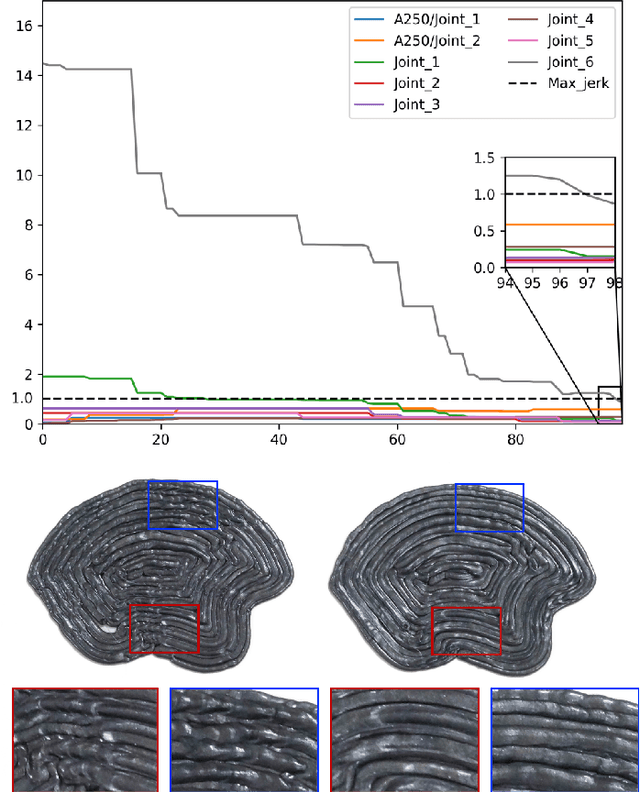

Computer-Controlled 3D Freeform Surface Weaving

Mar 01, 2024

Abstract: In this paper, we present a new computer-controlled weaving technology that fabricates woven structures in the shape of given 3D surfaces using threads of non-traditional materials with high bending stiffness, which enables multiple applications of the resulting woven fabrics. A new weaving machine and a new manufacturing process are developed to realize 3D surface weaving based on the principle of short-row shaping. We also investigate a computational solution that converts input 3D freeform surfaces into the corresponding weaving operations (denoted as W-code) to guide the operation of this system. A variety of examples using cotton threads, conductive threads and optical fibres are fabricated by our prototype system to demonstrate its functionality.
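Short-row shaping, in its general textile sense, shapes a fabric by weaving some rows across only part of its width, so that some columns accumulate more rows than others and the fabric curves out of the plane. The following is a minimal sketch of that idea only, assuming the target surface has already been reduced to a hypothetical per-column row count `rows_per_column`. The `(row, first_column, last_column)` tuples are an illustrative stand-in and are not the paper's W-code format; how passes map to machine motions is not covered.

```python
from typing import List, Tuple

# Hypothetical operation (not the paper's W-code): (row_index, first_column, last_column),
# meaning one weft pass across columns first_column..last_column inclusive.
Op = Tuple[int, int, int]


def short_row_passes(rows_per_column: List[int]) -> List[Op]:
    """Plan weft passes so that column j receives rows_per_column[j] rows.

    Row i is woven over every column that still needs more than i rows.
    If those columns form several separate runs, each run becomes its own
    (short) pass.
    """
    ops: List[Op] = []
    max_rows = max(rows_per_column, default=0)
    for i in range(max_rows):
        start = None
        # The trailing 0 acts as a sentinel that closes the last open run.
        for j, target in enumerate(rows_per_column + [0]):
            active = target > i
            if active and start is None:
                start = j
            elif not active and start is not None:
                ops.append((i, start, j - 1))
                start = None
    return ops


def woven_rows(ops: List[Op], width: int) -> List[int]:
    """Count how many rows each column receives from a list of passes."""
    counts = [0] * width
    for _, first, last in ops:
        for j in range(first, last + 1):
            counts[j] += 1
    return counts


if __name__ == "__main__":
    # A strip that is longer in the middle than at its edges bulges into 3D.
    profile = [2, 3, 4, 3, 2]
    for op in short_row_passes(profile):
        print(op)
```

For the profile `[2, 3, 4, 3, 2]` this prints `(0, 0, 4)`, `(1, 0, 4)`, `(2, 1, 3)` and `(3, 2, 2)`: two full-width rows followed by progressively shorter rows over the middle columns.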

Fast Generation of High Fidelity RGB-D Images by Deep-Learning with Adaptive Convolution

Feb 12, 2020

Abstract: Using the raw data from consumer-level RGB-D cameras as input, we propose a deep-learning based approach to efficiently generate high-resolution RGB-D images with completed information. To process low-resolution input images with missing regions, we introduce new adaptive convolution operators into our deep-learning network, which consists of three cascaded modules: the completion module, the refinement module and the super-resolution module. The completion module is based on an encoder-decoder architecture, in which the features of the raw RGB-D input are automatically extracted by the encoding layers of a deep neural network. The decoding layers reconstruct the completed depth map, which is then passed to the refinement module to sharpen the boundaries between different regions. The super-resolution module generates high-resolution RGB-D images using multiple layers for feature extraction followed by a layer for up-sampling. Benefiting from the newly proposed adaptive convolution operators, our results outperform existing deep-learning based approaches for RGB-D image completion and super-resolution. As an end-to-end approach, it generates high-fidelity RGB-D images efficiently at around 21 frames per second.
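The abstract does not define its adaptive convolution operators. To illustrate the general idea of a convolution that adapts to missing depth, here is a sketch of a different, well-known technique, a mask-aware "partial convolution" (as in Liu et al.'s image inpainting work), written in NumPy for a single channel. It is a swapped-in stand-in, not the paper's operator; the function name and parameters are hypothetical.

```python
import numpy as np


def partial_conv2d(depth: np.ndarray, mask: np.ndarray, kernel: np.ndarray, bias: float = 0.0):
    """Mask-aware 2D convolution (partial-convolution style), single channel.

    depth:  H x W raw depth map; values where mask == 0 are ignored (may be NaN).
    mask:   H x W array, 1 for valid pixels and 0 for missing ones.
    kernel: k x k weights with odd k; output has the same size (zero padding).
    Returns (output, new_mask).
    """
    k = kernel.shape[0]
    assert kernel.shape == (k, k) and k % 2 == 1
    r = k // 2
    # Replace holes by 0 so they contribute nothing, then zero-pad the borders.
    x = np.pad(np.where(mask > 0, depth, 0.0), r)
    m = np.pad(mask.astype(float), r)
    h, w = depth.shape
    out = np.zeros((h, w))
    new_mask = np.zeros((h, w))
    for i in range(h):
        for j in range(w):
            valid = m[i:i + k, j:j + k].sum()
            if valid == 0:
                continue  # no valid input under the window: the pixel stays a hole
            window = x[i:i + k, j:j + k]
            # Cross-correlation (the usual deep-learning "convolution"),
            # rescaled by the inverse fraction of valid pixels in the window.
            out[i, j] = (kernel * window).sum() * (k * k / valid) + bias
            new_mask[i, j] = 1.0
    return out, new_mask
```

Because each output is renormalized by the number of valid inputs, a hole surrounded by valid depth is filled with a properly scaled value, and stacking such layers shrinks the holes layer by layer.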

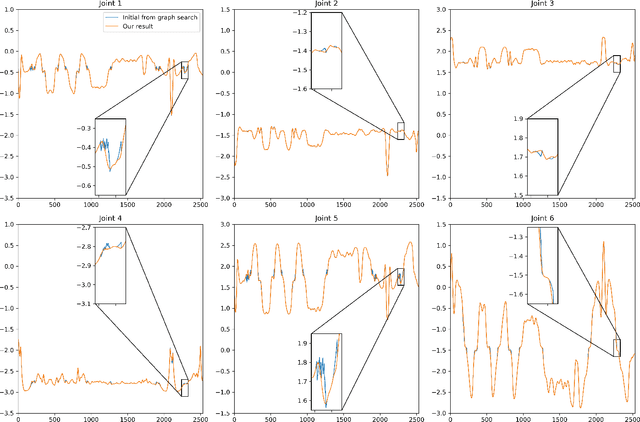

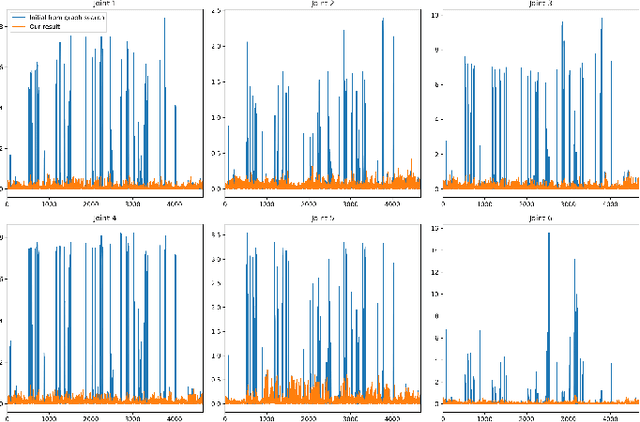

Planning Jerk-Optimized Trajectory with Discrete-Time Constraints for Redundant Robots

Sep 14, 2019

Abstract: We present a method for effectively planning the motion trajectories of robots in manufacturing tasks, whose tool-paths are usually complex and contain a large number of discrete-time constraints in the form of waypoints. These robotic systems also exhibit kinematic redundancy. Our trajectory planning method optimizes the jerk of the motion throughout the fabrication process to improve the quality of fabrication.
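As a generic illustration of jerk optimization under discrete-time waypoint constraints, the sketch below minimizes the sum of squared third finite differences of one scalar coordinate sampled at uniform time steps, with waypoints enforced exactly through the KKT system of the equality-constrained least-squares problem. Uniform sampling, the finite-difference jerk, the dictionary of waypoints and all function names are assumptions for illustration; redundancy resolution across multiple joints, which the paper addresses, is not modeled here.

```python
import numpy as np


def third_difference_matrix(n: int) -> np.ndarray:
    """(n-3) x n matrix D with (D q)[k] = q[k+3] - 3 q[k+2] + 3 q[k+1] - q[k]."""
    D = np.zeros((n - 3, n))
    for k in range(n - 3):
        D[k, k:k + 4] = [-1.0, 3.0, -3.0, 1.0]
    return D


def min_jerk_trajectory(n: int, waypoints: dict) -> np.ndarray:
    """Samples q[0..n-1] minimizing the summed squared discrete jerk,
    with q[i] == waypoints[i] enforced exactly for every waypoint index i.

    At least 3 waypoints are required: a quadratic has zero third difference,
    so with fewer pinned samples the minimizer would not be unique.
    """
    assert n >= 4 and len(waypoints) >= 3
    D = third_difference_matrix(n)
    idx = sorted(waypoints)
    m = len(idx)
    A = np.zeros((m, n))
    A[np.arange(m), idx] = 1.0  # each row selects one constrained sample
    b = np.array([waypoints[i] for i in idx], dtype=float)
    # KKT system of: minimize q^T (D^T D) q  subject to  A q = b.
    K = np.block([[2.0 * D.T @ D, A.T], [A, np.zeros((m, m))]])
    rhs = np.concatenate([np.zeros(n), b])
    return np.linalg.solve(K, rhs)[:n]


def jerk(q: np.ndarray, dt: float) -> np.ndarray:
    """Finite-difference jerk; the constant 1/dt^3 scale does not change the minimizer."""
    return third_difference_matrix(len(q)) @ q / dt**3
```

For example, `min_jerk_trajectory(11, {0: 0.0, 5: 25.0, 10: 100.0})` recovers the samples of `t**2`, since a quadratic passes through all three waypoints with zero jerk. The time step is kept out of the optimization to avoid the poor conditioning that a `1/dt**6` weight would introduce.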

General Support-Effective Decomposition for Multi-Directional 3D Printing

Dec 03, 2018

Abstract: We present a method to fabricate general models with multi-directional 3D printing systems, in which different regions of a model are printed along different directions. The core of our method is a support-effective volume decomposition algorithm that aims to minimize the usage of support structures for regions with large overhangs. The optimal volume decomposition, represented by a sequence of clipping planes, is determined by a beam-guided search algorithm subject to manufacturing constraints. Unlike existing approaches that require manually assembling 3D printed components into a final model, the regions decomposed by our algorithm can be fabricated automatically and without collision on a multi-directional 3D printing system. Our approach is general and can be applied to models with loops and handles. For models whose large overhangs cannot be made completely support-free, we develop an algorithm that generates special supporting structures for multi-directional 3D printing. We developed two different hardware systems to physically verify the effectiveness of our method: a Cartesian-motion based system and an angular-motion based system. A variety of 3D models have been successfully fabricated on these systems.
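Support usage depends on how much surface overhangs relative to the printing direction. The sketch below measures this with a common rule of thumb (a triangle needs support when its outward normal lies within a critical angle, 45 degrees by default, of the downward direction) and picks the least-overhanging direction from a candidate list. The criterion, the threshold and the function names are assumptions; the paper's actual cost, its clipping-plane decomposition, the beam-guided search and the collision checks are not reproduced. In a multi-directional setting, each decomposed region would be evaluated along its own printing direction.

```python
import numpy as np


def face_normals_and_areas(vertices: np.ndarray, faces: np.ndarray):
    """Unit normals (counter-clockwise winding = outward) and areas of triangles.

    Assumes non-degenerate triangles (non-zero area).
    """
    a, b, c = (vertices[faces[:, i]] for i in range(3))
    cross = np.cross(b - a, c - a)
    norms = np.linalg.norm(cross, axis=1)
    return cross / norms[:, None], 0.5 * norms


def overhang_area(vertices, faces, build_dir, critical_deg=45.0) -> float:
    """Total area of triangles that would need support when printing along build_dir.

    A triangle with unit normal n needs support if n . d < -cos(critical_deg),
    i.e. it faces downward more steeply than the threshold. Downward-facing
    horizontal faces always qualify; vertical walls never do.
    """
    d = np.asarray(build_dir, dtype=float)
    d = d / np.linalg.norm(d)
    normals, areas = face_normals_and_areas(vertices, faces)
    needs_support = normals @ d < -np.cos(np.radians(critical_deg))
    return float(areas[needs_support].sum())


def best_direction(vertices, faces, candidates, critical_deg=45.0):
    """The candidate build direction with the smallest overhang area."""
    return min(candidates, key=lambda d: overhang_area(vertices, faces, d, critical_deg))
```

A search over decompositions, such as the beam-guided search described above, could use a quantity like this as one term of its cost when scoring each region and its assigned direction.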

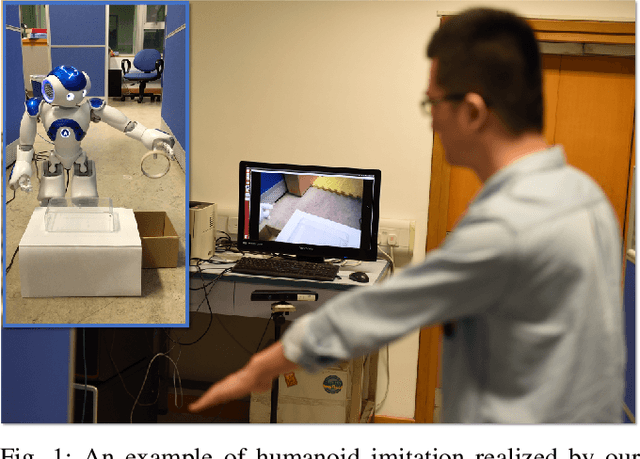

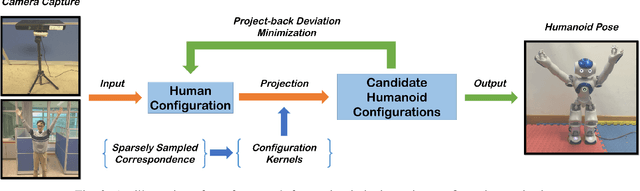

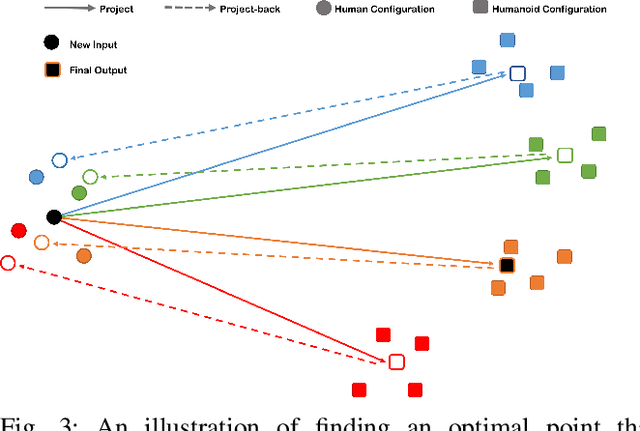

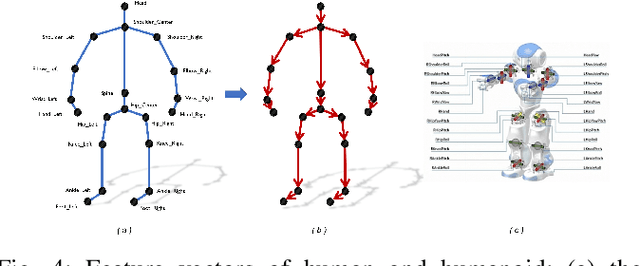

Motion Imitation Based on Sparsely Sampled Correspondence

Jul 25, 2016

Abstract: Existing techniques for motion imitation often suffer from latency caused by their computational overhead or by the large set of correspondence samples they must search. To achieve real-time imitation with small latency, we present a framework that reconstructs motion on humanoids based on sparsely sampled correspondence. The imitation problem is formulated as finding the projection of a point from the configuration space of human poses into the configuration space of a humanoid. An optimal projection is defined as the candidate, among a group of candidates, that minimizes the back-projected deviation, and it can be determined very efficiently. Benefiting from this formulation, effective projections can be obtained using sparse correspondence. Methods for generating these sparse correspondence samples are also introduced. Our method is evaluated by applying human motion captured by an RGB-D sensor to a humanoid in real time. Continuous motion can be realized and used in the example application of tele-operation.
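The selection rule above (among several candidate humanoid poses, keep the one whose back-projection deviates least from the input human pose) can be sketched as follows. Everything beyond that rule is a hypothetical choice for illustration: poses are plain vectors, `H[i]` and `R[i]` are corresponding sparse samples, candidates are the humanoid poses of the nearest human samples plus their pairwise midpoints and a blend, and both the forward blend and the back-projection use inverse-distance weighting (IDW). The paper's candidate generation and back-projection may differ.

```python
import numpy as np


def idw(query, sources, targets, power=2.0):
    """Inverse-distance-weighted interpolation from source space to target space.

    Returns the corresponding target exactly when the query coincides with a source sample.
    """
    dist = np.linalg.norm(sources - query, axis=1)
    hit = np.argmin(dist)
    if dist[hit] < 1e-12:
        return targets[hit].copy()
    w = 1.0 / dist**power
    return (w[:, None] * targets).sum(axis=0) / w.sum()


def imitate(human_pose, H, R, k=3, power=2.0):
    """Map a human pose to a humanoid pose using sparse samples H[i] <-> R[i].

    Hypothetical candidate set: humanoid poses of the k nearest human samples,
    their pairwise midpoints, and the forward IDW blend. The winner is the
    candidate whose back-projection (IDW from R to H) is closest to the input.
    """
    q = np.asarray(human_pose, dtype=float)
    near = np.argsort(np.linalg.norm(H - q, axis=1))[:k]
    candidates = [R[i] for i in near]
    candidates += [(R[i] + R[j]) / 2 for a, i in enumerate(near) for j in near[a + 1:]]
    candidates.append(idw(q, H, R, power))

    def back_deviation(c):
        # Deviation between the back-projected candidate and the input human pose.
        return np.linalg.norm(idw(c, R, H, power) - q)

    return min(candidates, key=back_deviation)
```

Only a handful of candidates and samples are evaluated per query, which reflects the motivation in the abstract: sparse correspondence keeps each projection cheap enough for real-time use.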
