Celia Riga

End-to-End Real-time Catheter Segmentation with Optical Flow-Guided Warping during Endovascular Intervention

Jun 16, 2020

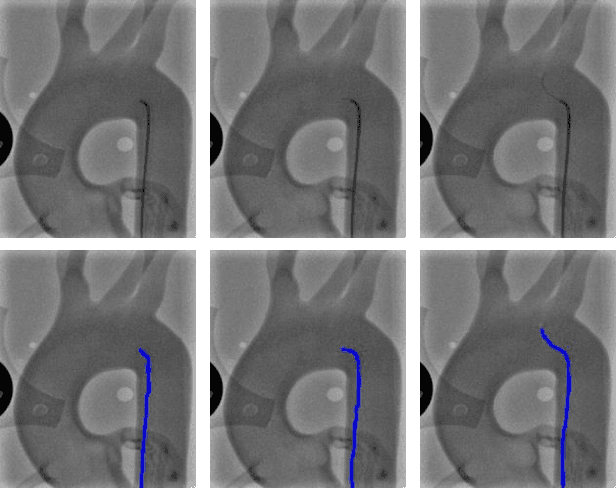

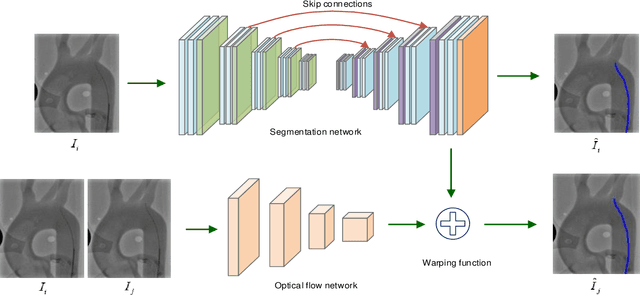

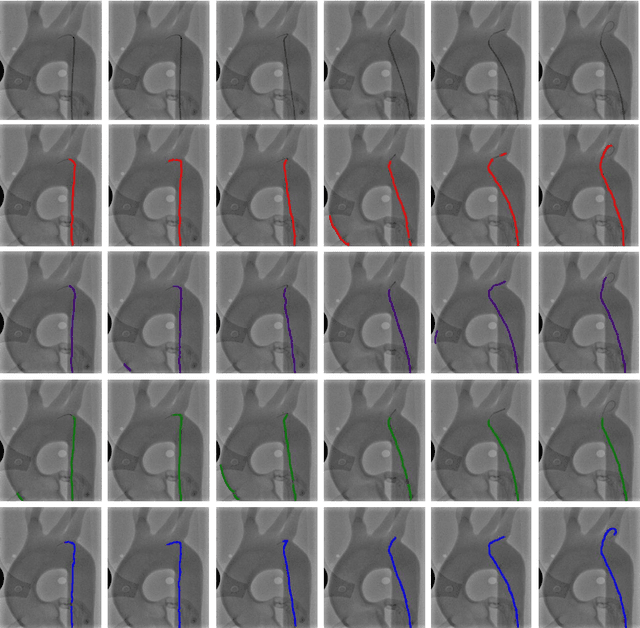

Abstract: Accurate real-time catheter segmentation is an important prerequisite for robot-assisted endovascular intervention. Most existing learning-based methods for catheter segmentation and tracking are trained only on small-scale datasets or synthetic data because ground-truth annotation is difficult. Furthermore, the temporal continuity in intraoperative imaging sequences is not fully utilised. In this paper, we present FW-Net, an end-to-end, real-time deep learning framework for endovascular intervention. The proposed FW-Net has three modules: a segmentation network with an encoder-decoder architecture, a flow network to extract optical flow information, and a novel flow-guided warping function to learn frame-to-frame temporal continuity. We show that by effectively learning temporal continuity, the network can successfully segment and track the catheters in real-time sequences using only raw ground-truth for training. Detailed validation results confirm that our FW-Net outperforms state-of-the-art techniques while achieving real-time performance.
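The idea of warping with optical flow can be illustrated with a minimal sketch. This is not the FW-Net implementation: the function name `warp_with_flow`, the backward-warping convention, and the bilinear sampling are all assumptions made for illustration, and the abstract does not specify how the warping function is defined.

```python
import numpy as np


def warp_with_flow(feature, flow):
    """Backward-warp a 2D map with a dense flow field (bilinear sampling).

    feature: (H, W) array from the previous frame, e.g. a segmentation map.
    flow:    (H, W, 2) array; flow[y, x] = (dx, dy) is the offset from a pixel
             in the current frame to its source location in the previous frame.
             This convention is an assumption of the sketch.
    """
    h, w = feature.shape
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)

    # Source coordinates in the previous frame, clamped to the image border.
    src_x = np.clip(xs + flow[..., 0], 0, w - 1)
    src_y = np.clip(ys + flow[..., 1], 0, h - 1)

    # Integer corners surrounding each source coordinate.
    x0 = np.floor(src_x).astype(int)
    y0 = np.floor(src_y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)

    # Fractional offsets used as bilinear weights.
    wx = src_x - x0
    wy = src_y - y0

    top = (1 - wx) * feature[y0, x0] + wx * feature[y0, x1]
    bottom = (1 - wx) * feature[y1, x0] + wx * feature[y1, x1]
    return (1 - wy) * top + wy * bottom
```

With a flow of `(-1, 0)` at every pixel, each output pixel samples its left neighbour in the previous frame, so a structure in the previous map appears shifted one pixel to the right. In a trainable setting the same sampling would be written with differentiable tensor operations so that gradients can reach the flow network.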

Instantiation-Net: 3D Mesh Reconstruction from Single 2D Image for Right Ventricle

Sep 16, 2019

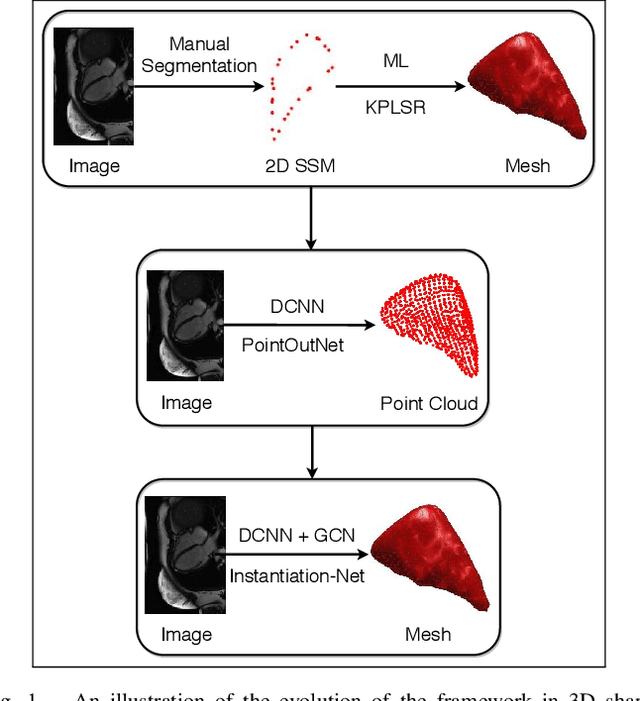

Abstract: 3D shape instantiation, which reconstructs the 3D shape of a target from limited 2D images or projections, is an emerging technique for surgical intervention. It upgrades the less informative and insufficient 2D navigation schemes currently used for robot-assisted Minimally Invasive Surgery (MIS) to 3D navigation. Previously, a general and registration-free framework was proposed for 3D shape instantiation based on Kernel Partial Least Square Regression (KPLSR), requiring manually segmented anatomical structures as a prerequisite. Two hyper-parameters, the Gaussian width and the component number, also need to be carefully adjusted. A Deep Convolutional Neural Network (DCNN) based framework has also been proposed to reconstruct a 3D point cloud from a single 2D image, with end-to-end and fully automatic learning. In this paper, an Instantiation-Net is proposed to reconstruct the 3D mesh of a target from a single 2D image, using a DCNN to extract features from the 2D image, a Graph Convolutional Network (GCN) to reconstruct the 3D mesh, and Fully Connected (FC) layers to connect the DCNN to the GCN. Detailed validation was performed to demonstrate the practical strength of the method and its potential clinical use.
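The feature-to-mesh path described above (image features, then FC layers, then graph convolution over mesh vertices) can be sketched in a few lines of NumPy. This is a rough illustration only: the names `normalized_adjacency` and `instantiate_mesh` are hypothetical, the DCNN is replaced by a given feature vector, and the first-order graph convolution used here is a swapped-in simplification that is not necessarily the operator used in Instantiation-Net.

```python
import numpy as np


def normalized_adjacency(edges, num_vertices):
    """Symmetrically normalized mesh adjacency with self-loops."""
    a = np.eye(num_vertices)
    for i, j in edges:
        a[i, j] = 1.0
        a[j, i] = 1.0
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def instantiate_mesh(image_feature, fc_weight, gc_weight, a_hat):
    """Map one image feature vector to per-vertex 3D coordinates.

    image_feature: (F,) vector, standing in for the DCNN output.
    fc_weight:     (F, V * C) fully connected weights.
    gc_weight:     (C, 3) graph convolution weights.
    a_hat:         (V, V) normalized adjacency of the mesh template.
    """
    num_vertices = a_hat.shape[0]
    # FC layer: spread the global image feature over all mesh vertices.
    per_vertex = (image_feature @ fc_weight).reshape(num_vertices, -1)
    # Graph convolution: mix features along mesh edges, then project to xyz.
    return a_hat @ per_vertex @ gc_weight


# Toy usage on a single triangle with random, untrained weights.
rng = np.random.default_rng(0)
a_hat = normalized_adjacency([(0, 1), (1, 2), (0, 2)], num_vertices=3)
vertices = instantiate_mesh(
    rng.normal(size=8), rng.normal(size=(8, 3 * 4)), rng.normal(size=(4, 3)), a_hat
)
```

The output has one row of `(x, y, z)` per vertex, and the mesh connectivity stays fixed in `a_hat`, which is what distinguishes a mesh output from an unordered point cloud.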

3D Path Planning from a Single 2D Fluoroscopic Image for Robot Assisted Fenestrated Endovascular Aortic Repair

Sep 16, 2018

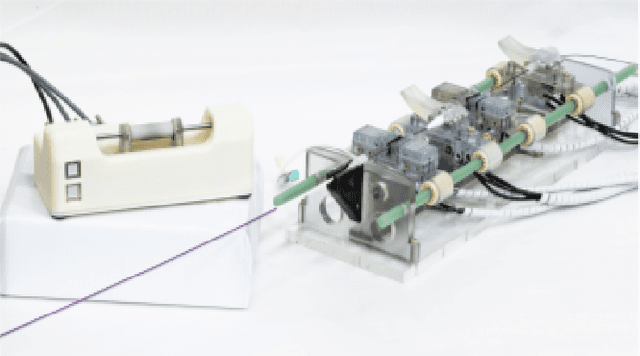

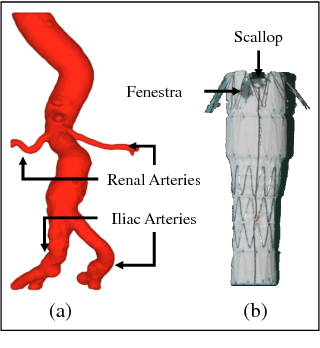

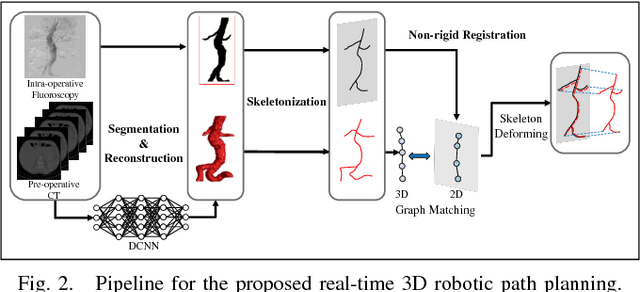

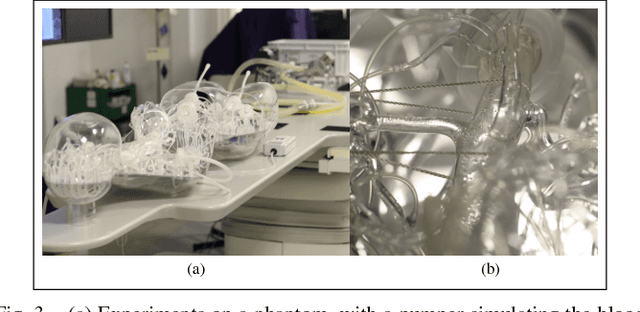

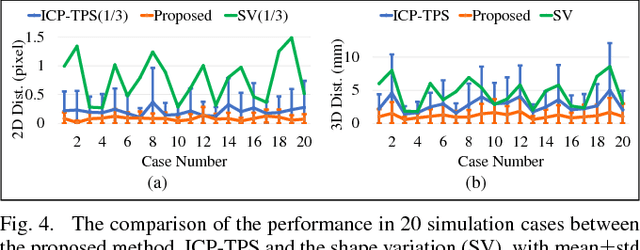

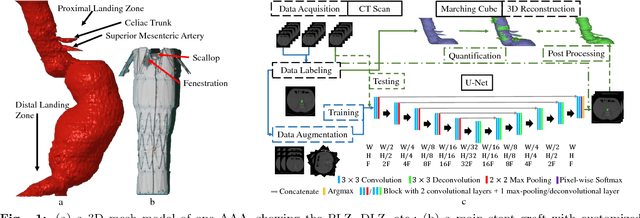

Abstract: The current standard of intra-operative navigation during Fenestrated Endovascular Aortic Repair (FEVAR) requires 3D alignment between inserted devices and aortic branches. Navigation, commonly performed via 2D fluoroscopic images, lacks anatomical information, resulting in longer operations and greater radiation exposure. In this paper, a framework for real-time 3D robotic path planning from a single 2D fluoroscopic image of Abdominal Aortic Aneurysm (AAA) is introduced. A graph matching method is proposed to establish the correspondence between the 3D pre-operative and 2D intra-operative AAA skeletons, and the two skeletons are then registered by skeleton deformation and regularization with respect to skeleton length and smoothness. Furthermore, deep learning was used to segment the 3D pre-operative AAA from Computed Tomography (CT) scans to facilitate automation of the framework. Simulation, phantom and patient AAA data sets were used to validate the proposed framework. A 3D distance error of 2 mm was achieved in the phantom setup. Performance advantages were also achieved in terms of accuracy, robustness and time-efficiency. All the code will be open source.

Abdominal Aortic Aneurysm Segmentation with a Small Number of Training Subjects

Apr 09, 2018

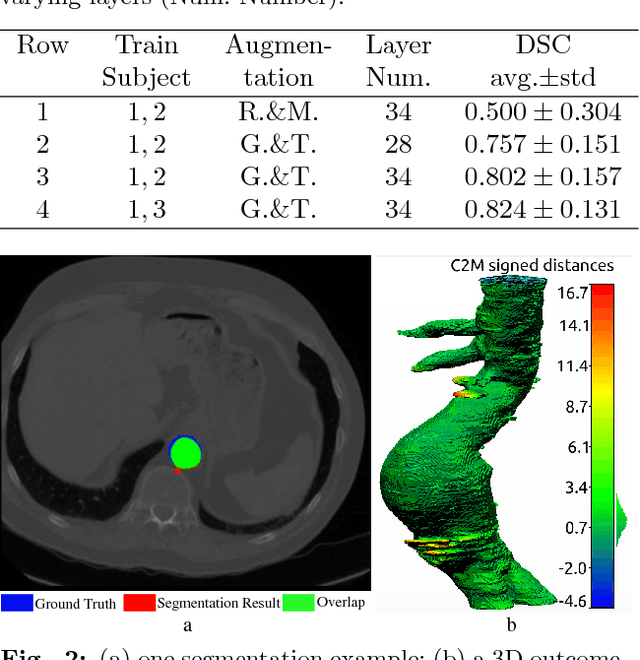

Abstract: Pre-operative Abdominal Aortic Aneurysm (AAA) 3D shape is critical for customized stent-graft design in Fenestrated Endovascular Aortic Repair (FEVAR). Traditional segmentation approaches implement expert-designed feature extractors, while recent deep neural networks extract features automatically with multiple non-linear modules. Usually, a large training dataset is essential for applying deep learning to AAA segmentation. In this paper, the AAA was segmented using U-net with a small number (two) of training subjects. Firstly, Computed Tomography Angiography (CTA) slices were augmented with gray value variation and translation to avoid the overfitting caused by the small number of training subjects. Then, U-net was trained to segment the AAA. Dice Similarity Coefficients (DSCs) over 0.8 were achieved on the testing subjects. The PLZ, DLZ and aortic branches are all reconstructed reasonably, which will facilitate stent graft customization and help shape instantiation for intra-operative surgery navigation in FEVAR.
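The two quantitative ingredients mentioned above, augmentation by gray value variation and translation, and evaluation by the Dice Similarity Coefficient, can be sketched as follows. The function names, the linear gain/bias form of the gray value change, and the zero-padded integer translation are assumptions for illustration; the abstract does not give the exact augmentation parameters.

```python
import numpy as np


def translate(arr, dy, dx):
    """Shift a 2D array by integer (dy, dx), filling exposed pixels with zeros."""
    h, w = arr.shape
    out = np.zeros_like(arr)
    src = arr[max(0, -dy):min(h, h - dy), max(0, -dx):min(w, w - dx)]
    r, c = max(0, dy), max(0, dx)
    out[r:r + src.shape[0], c:c + src.shape[1]] = src
    return out


def augment_slice(image, mask, dy, dx, gain=1.0, bias=0.0):
    """Return one augmented (image, mask) pair.

    The gray value change (gain, bias) is applied to the image only;
    the same translation is applied to both image and label mask.
    """
    return translate(gain * image + bias, dy, dx), translate(mask, dy, dx)


def dice_coefficient(pred, target):
    """Dice Similarity Coefficient: 2|A ∩ B| / (|A| + |B|) for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    total = pred.sum() + target.sum()
    if total == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return 2.0 * np.logical_and(pred, target).sum() / total
```

A DSC of 1 means the predicted and reference masks coincide, and 0 means they do not overlap, so the reported values above 0.8 indicate substantial overlap on the test subjects.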

Real-time 3D Shape Instantiation from Single Fluoroscopy Projection for Fenestrated Stent Graft Deployment

Jan 08, 2018

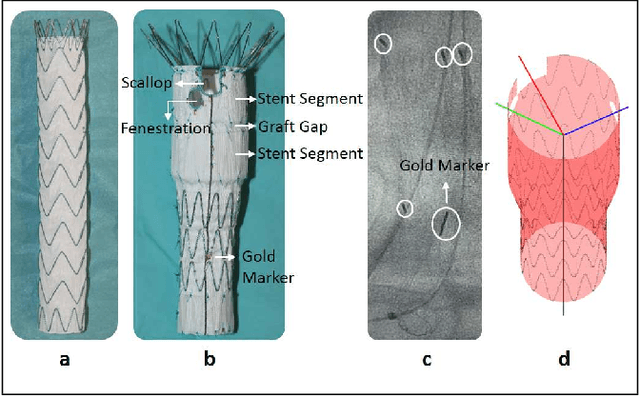

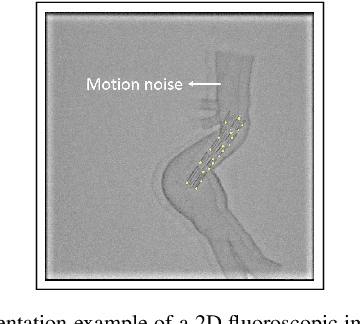

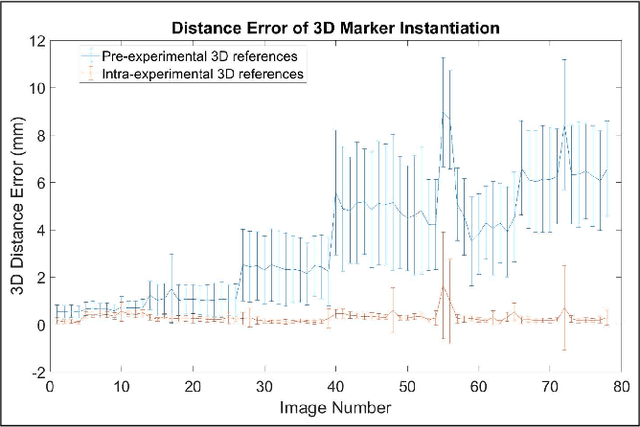

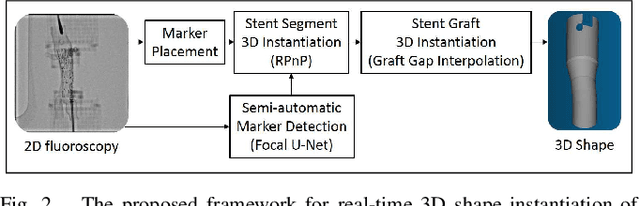

Abstract: Robot-assisted deployment of fenestrated stent grafts in Fenestrated Endovascular Aortic Repair (FEVAR) requires accurate geometrical alignment. Currently, this process is guided by 2D fluoroscopy, which is uninformative and error-prone. In this paper, a real-time framework is proposed to instantiate the 3D shape of a fenestrated stent graft based on only a single low-dose 2D fluoroscopic image. Firstly, the fenestrated stent graft was placed with markers. Secondly, the 3D pose of each stent segment was instantiated by the RPnP (Robust Perspective-n-Point) method. Thirdly, the 3D shape of the whole stent graft was instantiated via graft gap interpolation. Focal-Unet was proposed to segment the markers from 2D fluoroscopic images to achieve semi-automatic marker detection. The proposed framework was validated on five patient-specific 3D printed phantoms of aortic aneurysms and three stent grafts with new marker placements, showing an average distance error of 1-3 mm and an average angle error of 4 degrees.

* 7 pages, 10 figures
