Instantiation-Net: 3D Mesh Reconstruction from Single 2D Image for Right Ventricle

Sep 16, 2019

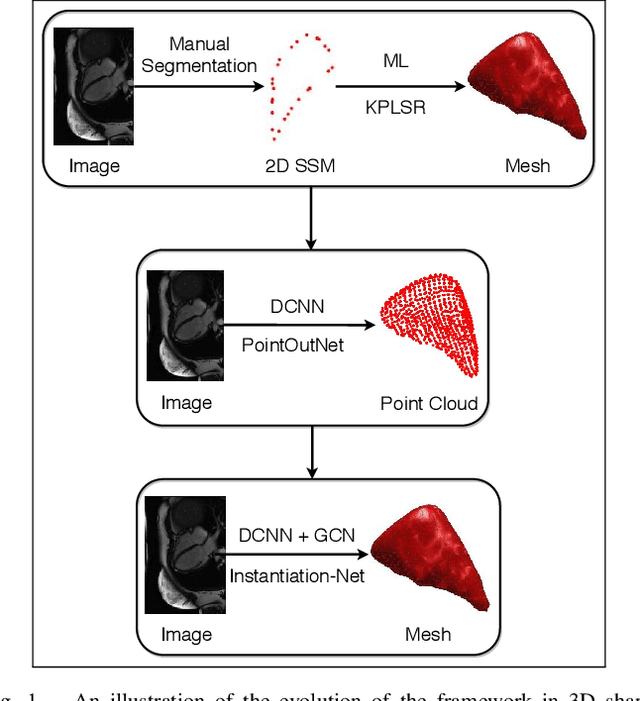

3D shape instantiation, which reconstructs the 3D shape of a target from limited 2D images or projections, is an emerging technique for surgical intervention. It upgrades the less informative 2D navigation schemes currently used in robot-assisted Minimally Invasive Surgery (MIS) to 3D navigation. Previously, a general, registration-free framework for 3D shape instantiation was proposed based on Kernel Partial Least Square Regression (KPLSR); it requires manually segmented anatomical structures as a prerequisite, and two hyper-parameters, the Gaussian width and the number of components, must be carefully tuned. A Deep Convolutional Neural Network (DCNN) based framework has also been proposed to reconstruct a 3D point cloud from a single 2D image with end-to-end, fully automatic learning. In this paper, an Instantiation-Net is proposed to reconstruct the 3D mesh of a target from a single 2D image: a DCNN extracts features from the 2D image, a Graph Convolutional Network (GCN) reconstructs the 3D mesh, and Fully Connected (FC) layers connect the DCNN to the GCN. Detailed validation was performed to demonstrate the practical strength of the method and its potential clinical use.
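The FC-to-GCN hand-off described in the abstract can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation. The abstract does not specify the GCN variant, layer sizes, or mesh, so several things here are assumptions made for illustration: the propagation rule (a symmetric normalized adjacency with self-loops), the toy four-vertex mesh, the random weights, and every name (`normalized_adjacency`, `fully_connected`, `graph_conv`). The DCNN itself is replaced by a random feature vector standing in for its output.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def normalized_adjacency(num_vertices, edges):
    """Return D^-1/2 (A + I) D^-1/2 for an undirected mesh graph (assumed rule)."""
    a = [[1.0 if i == j else 0.0 for j in range(num_vertices)]
         for i in range(num_vertices)]
    for i, j in edges:
        a[i][j] = a[j][i] = 1.0
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_vertices)]
            for i in range(num_vertices)]


def fully_connected(features, weights, num_vertices, channels):
    """Map a flat image feature vector to per-vertex features (V x C)."""
    flat = matmul([features], weights)[0]
    return [flat[v * channels:(v + 1) * channels] for v in range(num_vertices)]


def graph_conv(a_hat, x, w):
    """One graph convolution: mix neighbouring vertices, then project channels."""
    return matmul(matmul(a_hat, x), w)


def random_matrix(rows, cols, rng):
    """Small random weights; a trained network would learn these."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)

# Hypothetical toy mesh: a square split into two triangles by the edge (0, 2).
NUM_VERTICES, CHANNELS, FEATURE_DIM = 4, 8, 16
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]

# Stand-in for the DCNN output on a single 2D image.
image_features = [rng.random() for _ in range(FEATURE_DIM)]

w_fc = random_matrix(FEATURE_DIM, NUM_VERTICES * CHANNELS, rng)
w_gcn = random_matrix(CHANNELS, 3, rng)  # project to (x, y, z) per vertex

a_hat = normalized_adjacency(NUM_VERTICES, EDGES)
vertex_features = fully_connected(image_features, w_fc, NUM_VERTICES, CHANNELS)
mesh_vertices = graph_conv(a_hat, vertex_features, w_gcn)

print(len(mesh_vertices), len(mesh_vertices[0]))  # 4 3
```

The output is one 3D coordinate per mesh vertex. Because the mesh connectivity is fixed in `a_hat`, the network only has to predict vertex positions, which is what distinguishes a mesh output from an unordered point cloud.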
