Carme Armentano-Oller

Enhancing Crowdsourced Audio for Text-to-Speech Models

Oct 17, 2024

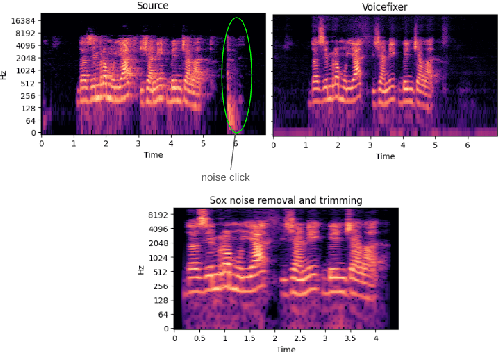

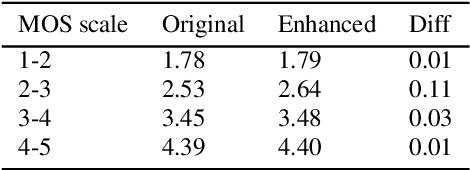

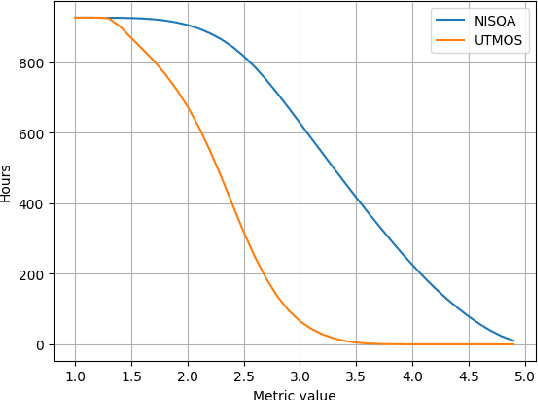

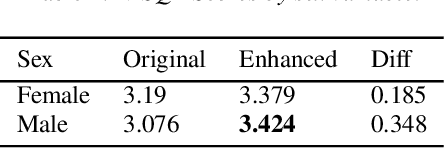

Abstract: High-quality audio data is a critical prerequisite for training robust text-to-speech (TTS) models, which often limits the use of opportunistic or crowdsourced datasets. This paper presents an approach to overcome this limitation by implementing a denoising pipeline on the Catalan subset of Common Voice, a crowdsourced corpus known for its inherent noise and variability. The pipeline incorporates an audio enhancement phase followed by a selective filtering strategy. We developed an automatic filtering mechanism leveraging Non-Intrusive Speech Quality Assessment (NISQA) models to identify and retain the highest-quality samples post-enhancement. To evaluate the efficacy of this approach, we trained a state-of-the-art diffusion-based TTS model on the processed dataset. The results show a significant improvement, with an increase of 0.4 in the UTMOS score compared to the baseline dataset without enhancement. This methodology shows promise for expanding the utility of crowdsourced data in TTS applications, particularly for mid- to low-resource languages like Catalan.
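The selective filtering step described in this abstract can be illustrated with a minimal sketch. It assumes each enhanced clip already carries a predicted quality score (for instance, one produced by a NISQA-style model); the `ScoredClip` type, the `filter_by_quality` function, and the 4.0 threshold are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ScoredClip:
    """An enhanced audio clip with a precomputed quality score (hypothetical type)."""
    path: str
    predicted_mos: float  # assumed to come from a non-intrusive quality model


def filter_by_quality(clips: List[ScoredClip], min_mos: float = 4.0) -> List[ScoredClip]:
    """Keep clips scoring at least `min_mos`, best first.

    The default threshold is an illustrative assumption, not a value
    reported in the abstract.
    """
    kept = [clip for clip in clips if clip.predicted_mos >= min_mos]
    return sorted(kept, key=lambda clip: clip.predicted_mos, reverse=True)


if __name__ == "__main__":
    # Made-up scores, for illustration only.
    clips = [
        ScoredClip("a.wav", 4.3),
        ScoredClip("b.wav", 2.9),
        ScoredClip("c.wav", 4.0),
    ]
    for clip in filter_by_quality(clips):
        print(clip.path, clip.predicted_mos)
```

A fixed threshold is only one possible selection rule; keeping a top percentile of the scored clips would be an equally plausible reading of "retain the highest-quality samples".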

The Catalan Language CLUB

Dec 03, 2021

Abstract: The Catalan Language Understanding Benchmark (CLUB) encompasses various datasets representative of different NLU tasks, enabling accurate evaluation of language models, following the example of the General Language Understanding Evaluation (GLUE). It is part of AINA and PlanTL, two public funding initiatives to empower the Catalan language in the Artificial Intelligence era.

Spanish Language Models

Aug 13, 2021

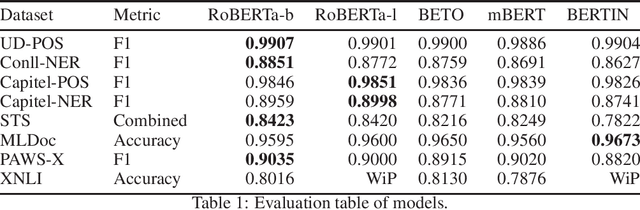

Abstract: This paper presents the Spanish RoBERTa-base and RoBERTa-large models, as well as the corresponding performance evaluations. Both models were pre-trained using the largest Spanish corpus known to date, with a total of 570GB of clean and deduplicated text processed for this work, compiled from the web crawls performed by the National Library of Spain from 2009 to 2019. We extended the current evaluation datasets with an extractive Question Answering dataset, and our models outperform the existing Spanish models across tasks and settings.

Are Multilingual Models the Best Choice for Moderately Under-resourced Languages? A Comprehensive Assessment for Catalan

Jul 16, 2021

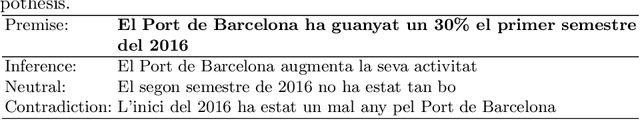

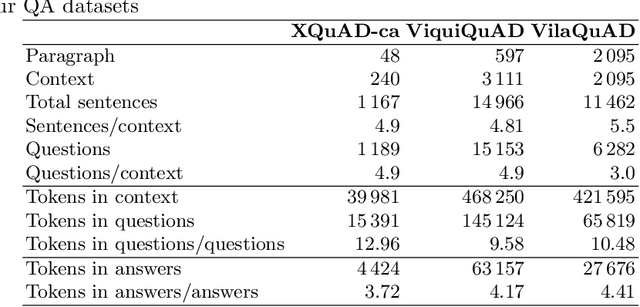

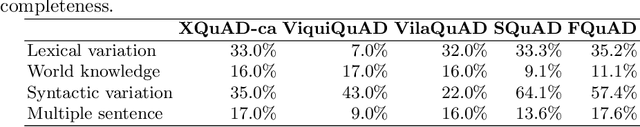

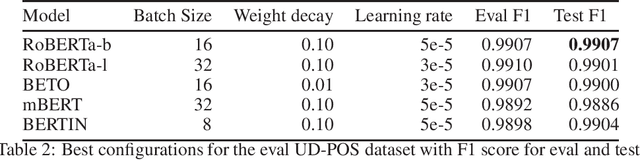

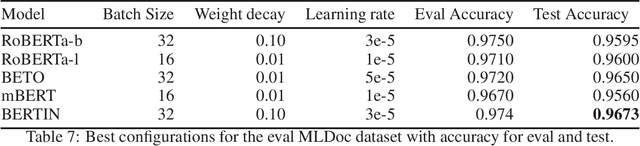

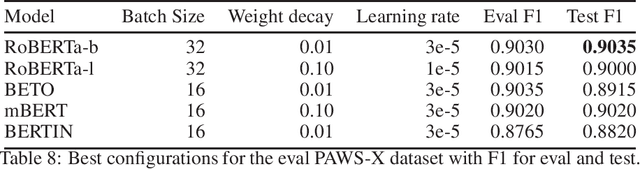

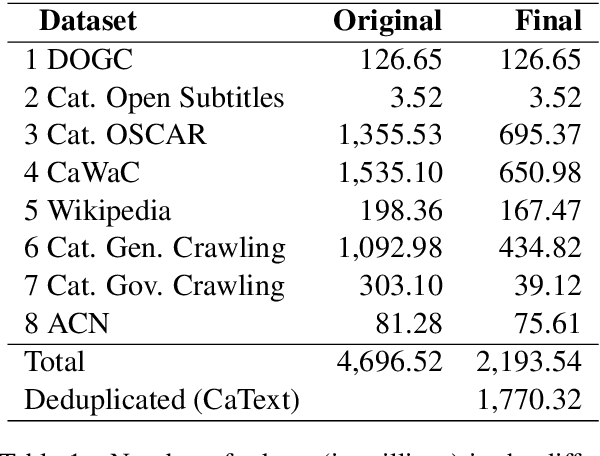

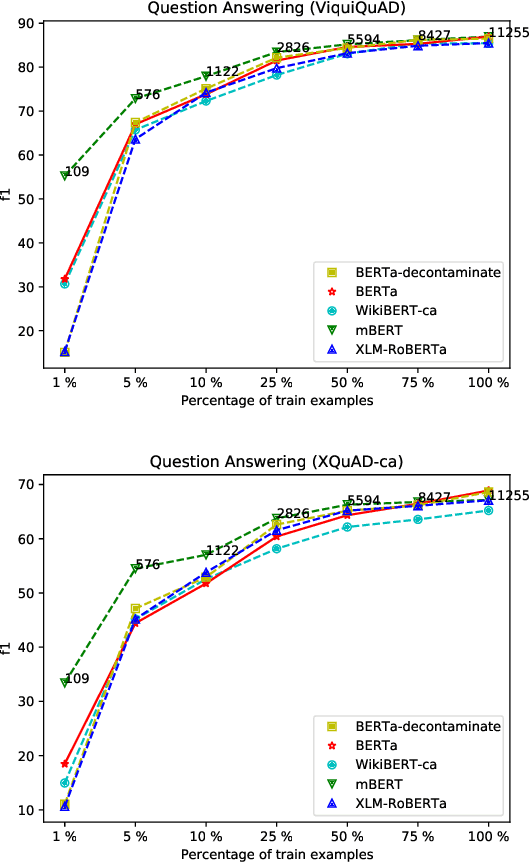

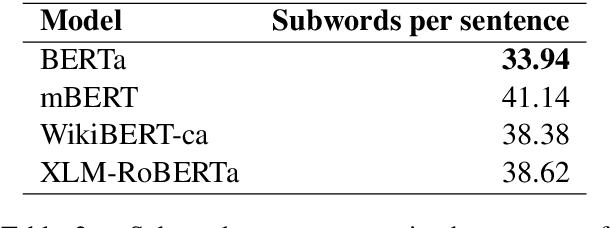

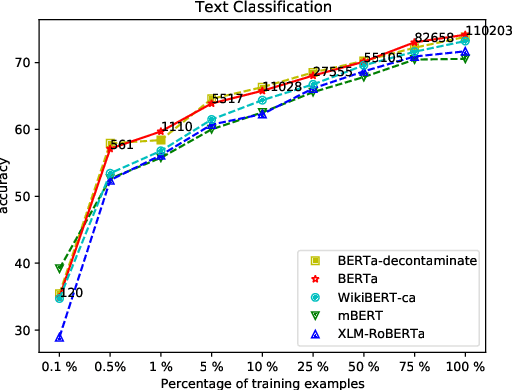

Abstract: Multilingual language models have been a crucial breakthrough, as they considerably reduce the need for data in under-resourced languages. Nevertheless, the superiority of language-specific models has already been proven for languages with access to large amounts of data. In this work, we focus on Catalan with the aim of exploring to what extent a medium-sized monolingual language model is competitive with state-of-the-art large multilingual models. For this, we: (1) build a clean, high-quality textual Catalan corpus (CaText), the largest to date (but only a fraction of the usual size used in previous work on monolingual language models), (2) train a Transformer-based language model for Catalan (BERTa), and (3) devise a thorough evaluation in a diversity of settings, comprising a complete array of downstream tasks, namely Part of Speech Tagging, Named Entity Recognition and Classification, Text Classification, Question Answering, and Semantic Textual Similarity, with most of the corresponding datasets being created ex novo. The result is a new benchmark, the Catalan Language Understanding Benchmark (CLUB), which we publish as an open resource, together with the clean textual corpus, the language model, and the cleaning pipeline. Using state-of-the-art multilingual models and a monolingual model trained only on Wikipedia as baselines, we consistently observe the superiority of our model across tasks and settings.
