Carlos A. Coello Coello

Nearest-Better Network for Visualizing and Analyzing Combinatorial Optimization Problems: A Unified Tool

Jul 30, 2025

Abstract:The Nearest-Better Network (NBN) is a powerful method to visualize sampled data for continuous optimization problems while preserving multiple landscape features. However, the calculation of NBN is very time-consuming, and the extension of the method to combinatorial optimization problems is challenging but very important for analyzing the algorithm's behavior. This paper provides a straightforward theoretical derivation showing that the NBN essentially functions as the maximum probability transition network for algorithms. This paper also presents an efficient NBN computation method with log-linear time complexity to address the time-consuming issue. By applying this efficient NBN algorithm to the OneMax problem and the Traveling Salesman Problem (TSP), we have made several remarkable discoveries for the first time: The fitness landscape of OneMax exhibits neutrality, ruggedness, and modality features. The primary challenges of the TSP are ruggedness, modality, and deception. Two state-of-the-art TSP algorithms (i.e., EAX and LKH) have limitations when addressing challenges related to modality and deception, respectively. LKH, based on local search operators, fails when there are deceptive solutions near global optima. EAX, which is based on a single population, can efficiently maintain diversity. However, when multiple attraction basins exist, EAX retains individuals within multiple basins simultaneously, reducing inter-basin interaction efficiency and leading to stagnation of the algorithm.
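As a rough illustration of the nearest-better idea named in the abstract above, the sketch below links each sampled OneMax solution to its closest strictly better sample under Hamming distance. This is a brute-force quadratic-time toy, not the efficient method the paper proposes; the function names, the sample size, and the strict-improvement tie rule are all assumptions made for illustration, and the paper's handling of equal-fitness (neutral) solutions may differ.

```python
import random

def onemax(bits):
    """OneMax fitness: the number of ones in the bit string."""
    return sum(bits)

def hamming(a, b):
    """Hamming distance between two equal-length bit tuples."""
    return sum(x != y for x, y in zip(a, b))

def nearest_better_edges(samples, fitness, distance):
    """Brute-force O(n^2) sketch (hypothetical helper, not the paper's algorithm).

    Maps each sample index to (index of its nearest strictly better sample,
    distance to it). Samples with no strictly better sample get no edge.
    """
    fits = [fitness(s) for s in samples]
    edges = {}
    for i, s in enumerate(samples):
        better = [
            (distance(s, t), j)
            for j, t in enumerate(samples)
            if fits[j] > fits[i]  # assumption: only strict improvements count
        ]
        if better:
            d, j = min(better)  # ties in distance broken by lower index
            edges[i] = (j, d)
    return edges

random.seed(0)
samples = [tuple(random.randint(0, 1) for _ in range(12)) for _ in range(30)]
edges = nearest_better_edges(samples, onemax, hamming)
# Samples without an outgoing edge are the best ones found in this sample set.
roots = [i for i in range(len(samples)) if i not in edges]
```

In this toy version, every sample that is not among the best in the set has exactly one outgoing edge, so the edges form a forest whose roots are the best sampled solutions.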

A Tutorial on the Design, Experimentation and Application of Metaheuristic Algorithms to Real-World Optimization Problems

Oct 04, 2024

Abstract:In the last few years, the formulation of real-world optimization problems and their efficient solution via metaheuristic algorithms has been a catalyst for a myriad of research studies. In spite of decades of historical advancements on the design and use of metaheuristics, major difficulties still remain with regard to the understandability, algorithmic design uprightness, and performance verifiability of new technical achievements. A clear example stems from the scarce replicability of works dealing with metaheuristics used for optimization, which is often infeasible due to ambiguity and lack of detail in the presentation of the methods to be reproduced. Additionally, in many cases, the statistical significance of the reported results is questionable. This work aims at providing the audience with a proposal of good practices which should be embraced when conducting studies about metaheuristic methods used for optimization in order to provide scientific rigor, value and transparency. To this end, we introduce a step-by-step methodology covering every research phase that should be followed when addressing this scientific field. Specifically, frequently overlooked yet crucial aspects and useful recommendations will be discussed with regard to the formulation of the problem, solution encoding, implementation of search operators, evaluation metrics, design of experiments, and considerations for real-world performance, among others. Finally, we will outline important considerations, challenges, and research directions for the success of newly developed optimization metaheuristics in their deployment and operation over real-world application environments.

Bridging the Gap Between Theory and Practice: Benchmarking Transfer Evolutionary Optimization

Apr 20, 2024

Abstract:In recent years, the field of Transfer Evolutionary Optimization (TrEO) has witnessed substantial growth, fueled by the realization of its profound impact on solving complex problems. Numerous algorithms have emerged to address the challenges posed by transferring knowledge between tasks. However, the recently highlighted "no free lunch theorem" in transfer optimization clarifies that no single algorithm reigns supreme across diverse problem types. This paper addresses this conundrum by adopting a benchmarking approach to evaluate the performance of various TrEO algorithms in realistic scenarios. Despite the growing methodological focus on transfer optimization, existing benchmark problems often fall short due to inadequate design, predominantly featuring synthetic problems that lack real-world relevance. This paper pioneers a practical TrEO benchmark suite, integrating problems from the literature categorized based on the three essential aspects of Big Source Task-Instances: volume, variety, and velocity. Our primary objective is to provide a comprehensive analysis of existing TrEO algorithms and pave the way for the development of new approaches to tackle practical challenges. By introducing realistic benchmarks that embody the three dimensions of volume, variety, and velocity, we aim to foster a deeper understanding of algorithmic performance in the face of diverse and complex transfer scenarios. This benchmark suite is poised to serve as a valuable resource for researchers, facilitating the refinement and advancement of TrEO algorithms in the pursuit of solving real-world problems.

Preference Incorporation into Many-Objective Optimization: An Outranking-based Ant Colony Algorithm

Jul 15, 2021

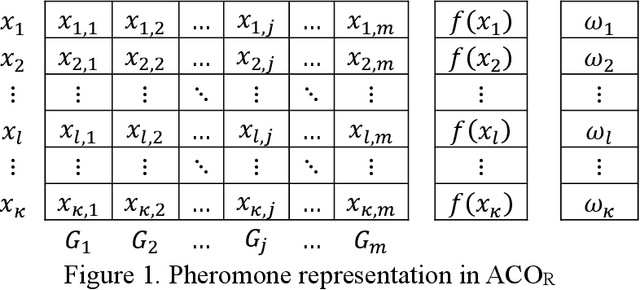

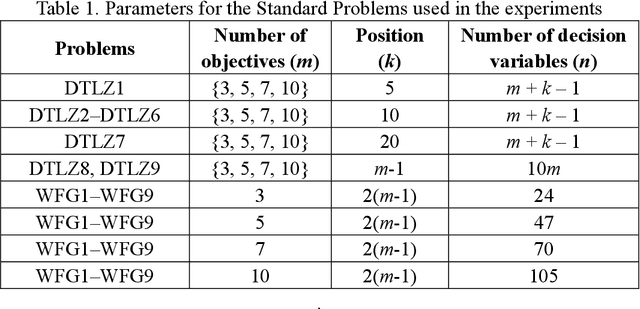

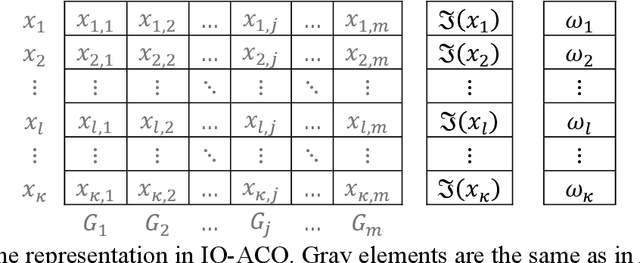

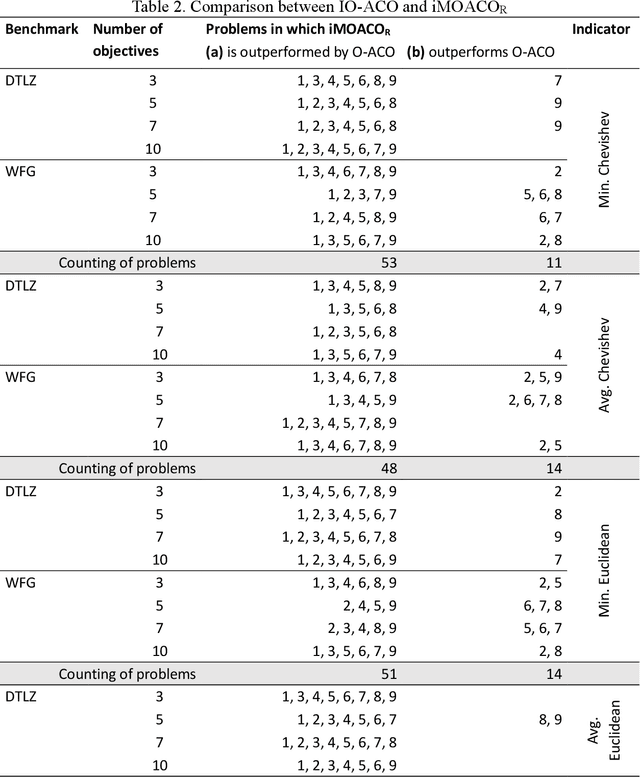

Abstract:In this paper, we enriched Ant Colony Optimization (ACO) with interval outranking to develop a novel multiobjective ACO optimizer to approach problems with many objective functions. This proposal is suitable if the preferences of the Decision Maker (DM) can be modeled through outranking relations. The introduced algorithm (named Interval Outranking-based ACO, IO-ACO) is the first ant-colony optimizer that embeds an outranking model to handle vagueness and ill-definition of DM preferences. This capacity is the most differentiating feature of IO-ACO because this issue is highly relevant in practice. IO-ACO biases the search towards the Region of Interest (RoI), the privileged zone of the Pareto frontier containing the solutions that better match the DM preferences. Two widely studied benchmarks were utilized to measure the efficiency of IO-ACO, i.e., the DTLZ and WFG test suites. Accordingly, IO-ACO was compared with two competitive many-objective optimizers: the Indicator-based Many-Objective ACO and the Multiobjective Evolutionary Algorithm Based on Decomposition. The numerical results show that IO-ACO approximates the RoI better than the leading metaheuristics based on approximating the Pareto frontier alone.
