Brooke Armfield

Global Contrastive Training for Multimodal Electronic Health Records with Language Supervision

Apr 10, 2024

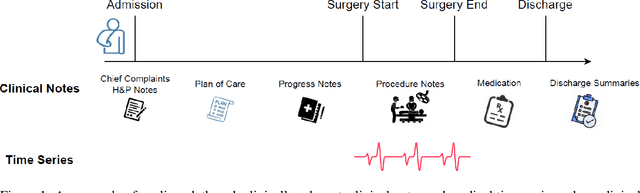

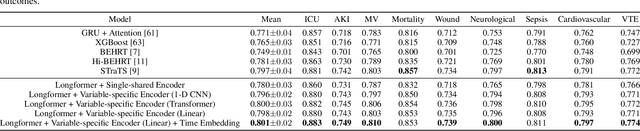

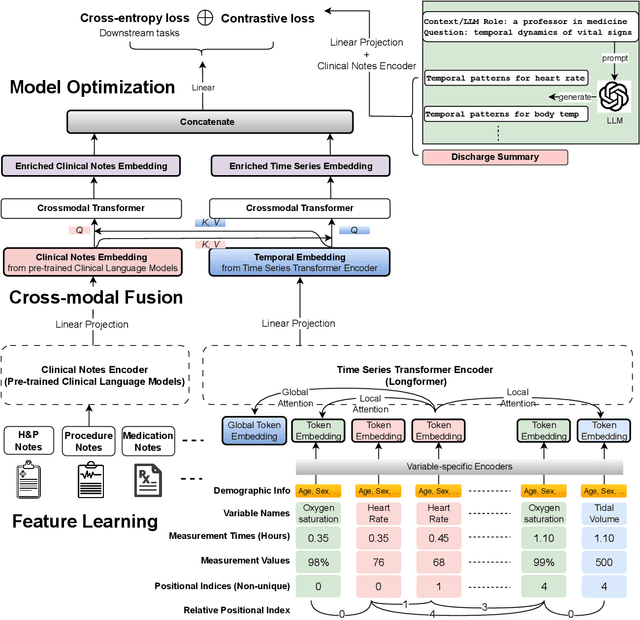

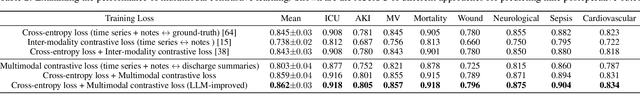

Abstract: Modern electronic health records (EHRs) hold immense promise in tracking personalized patient health trajectories through sequential deep learning, owing to their extensive breadth, scale, and temporal granularity. Nonetheless, effectively leveraging multiple modalities from EHRs poses significant challenges, given their complex characteristics such as high dimensionality, multimodality, sparsity, varied recording frequencies, and temporal irregularities. To this end, this paper introduces a novel multimodal contrastive learning framework, specifically focusing on medical time series and clinical notes. To tackle the challenge of sparsity and irregular time intervals in medical time series, the framework integrates temporal cross-attention transformers with a dynamic embedding and tokenization scheme for learning multimodal feature representations. To harness the interconnected relationships between medical time series and clinical notes, the framework employs a global contrastive loss, aligning a patient's multimodal feature representations with the corresponding discharge summaries. Since discharge summaries uniquely pertain to individual patients and represent a holistic view of the patient's hospital stay, machine learning models are led to learn discriminative multimodal features via global contrasting. Extensive experiments with a real-world EHR dataset demonstrated that our framework outperformed state-of-the-art approaches on the exemplar task of predicting the occurrence of nine postoperative complications for more than 120,000 major inpatient surgeries using multimodal data from the UF Health system split among three hospitals (UF Health Gainesville, UF Health Jacksonville, and UF Health Jacksonville-North).
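The abstract does not give the exact form of the global contrastive loss. As a rough illustration only, the sketch below assumes a CLIP-style symmetric InfoNCE objective in which each patient's multimodal embedding is paired with the embedding of that patient's discharge summary, and all other summaries in the batch act as negatives. The function names, the `temperature` value, and the toy embeddings are hypothetical and are not taken from the paper.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def log_softmax(row):
    """Numerically stable log-softmax over one row of logits."""
    top = max(row)
    log_sum = top + math.log(sum(math.exp(x - top) for x in row))
    return [x - log_sum for x in row]


def global_contrastive_loss(patient_embs, summary_embs, temperature=0.07):
    """Symmetric InfoNCE loss (an assumed form, not the paper's exact loss).

    Row i of patient_embs and row i of summary_embs belong to the same patient;
    every other pairing in the batch is treated as a negative.
    """
    patients = [l2_normalize(v) for v in patient_embs]
    summaries = [l2_normalize(v) for v in summary_embs]
    n = len(patients)

    # Pairwise cosine similarities, scaled by the temperature.
    logits = [
        [sum(a * b for a, b in zip(patients[i], summaries[j])) / temperature
         for j in range(n)]
        for i in range(n)
    ]

    # Patient -> summary direction: the matching summary sits on the diagonal.
    loss_p2s = -sum(log_softmax(logits[i])[i] for i in range(n)) / n

    # Summary -> patient direction: same computation on the transposed matrix.
    logits_t = [list(col) for col in zip(*logits)]
    loss_s2p = -sum(log_softmax(logits_t[j])[j] for j in range(n)) / n

    return 0.5 * (loss_p2s + loss_s2p)


if __name__ == "__main__":
    aligned = global_contrastive_loss([[1.0, 0.0], [0.0, 1.0]],
                                      [[1.0, 0.0], [0.0, 1.0]])
    swapped = global_contrastive_loss([[1.0, 0.0], [0.0, 1.0]],
                                      [[0.0, 1.0], [1.0, 0.0]])
    print(f"aligned pairs: {aligned:.6f}, swapped pairs: {swapped:.6f}")
```

In this toy batch, correctly paired embeddings yield a loss near zero, while swapping the summaries yields a large loss, which is the behavior that pushes a model toward patient-specific, discriminative features.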

Temporal Cross-Attention for Dynamic Embedding and Tokenization of Multimodal Electronic Health Records

Mar 06, 2024

Abstract: The breadth, scale, and temporal granularity of modern electronic health record (EHR) systems offer great potential for estimating personalized and contextual patient health trajectories using sequential deep learning. However, learning useful representations of EHR data is challenging due to its high dimensionality, sparsity, multimodality, irregular and variable-specific recording frequency, and timestamp duplication when multiple measurements are recorded simultaneously. Although recent efforts to fuse structured EHR and unstructured clinical notes suggest the potential for more accurate prediction of clinical outcomes, less focus has been placed on EHR embedding approaches that directly address temporal EHR challenges by learning time-aware representations from multimodal patient time series. In this paper, we introduce a dynamic embedding and tokenization framework for precise representation of multimodal clinical time series that combines novel methods for encoding time and sequential position with temporal cross-attention. Our embedding and tokenization framework, when integrated into a multitask transformer classifier with sliding window attention, outperformed baseline approaches on the exemplar task of predicting the occurrence of nine postoperative complications of more than 120,000 major inpatient surgeries using multimodal data from three hospitals and two academic health centers in the United States.

Detecting Visual Cues in the Intensive Care Unit and Association with Patient Clinical Status

Nov 01, 2023

Abstract: Intensive Care Units (ICUs) provide close supervision and continuous care to patients with life-threatening conditions. However, continuous patient assessment in the ICU is still limited due to time constraints and the workload on healthcare providers. Existing patient assessments in the ICU, such as pain or mobility assessments, are mostly sporadic and administered manually, thus introducing the potential for human error. Developing artificial intelligence (AI) tools that augment human assessments in the ICU can provide more objective and granular monitoring capabilities. For example, capturing the variations in a patient's facial cues related to pain or agitation can help in adjusting pain-related medications or detecting agitation-inducing conditions such as delirium. Additionally, subtle changes in visual cues during or prior to adverse clinical events could potentially aid in continuous patient monitoring when combined with high-resolution physiological signals and Electronic Health Record (EHR) data. In this paper, we examined the association between visual cues and patient condition, including acuity status, acute brain dysfunction, and pain. We leveraged our AU-ICU dataset with 107,064 frames collected in the ICU and annotated with facial action unit (AU) labels by trained annotators. We developed a new "masked loss computation" technique that addresses the data imbalance problem by maximizing data resource utilization. We trained the model using our AU-ICU dataset in conjunction with three external datasets to detect 18 AUs. The SWIN Transformer model achieved 0.57 mean F1-score and 0.89 mean accuracy on the test set. Additionally, we performed AU inference on 634,054 frames to evaluate the association between facial AUs and clinically important patient conditions such as acuity status, acute brain dysfunction, and pain.
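The abstract names a "masked loss computation" technique but does not define it. One plausible reading, offered here purely as an assumption, is that when several datasets annotate different subsets of the 18 AUs, the loss is computed only over the labels that actually exist for each frame, so that no frame has to be discarded for being partially labeled. The sketch below shows that idea with a masked binary cross-entropy; the function name `masked_bce` and the toy values are hypothetical.

```python
import math


def masked_bce(probs, labels, mask, eps=1e-7):
    """Binary cross-entropy averaged only over annotated (frame, AU) entries.

    probs  : per-frame lists of predicted AU probabilities
    labels : per-frame lists of 0/1 labels (None is allowed where unannotated)
    mask   : per-frame lists of 1 (label present) or 0 (label missing)

    This is an assumed interpretation of "masked loss", not the paper's code.
    """
    total = 0.0
    count = 0
    for prob_row, label_row, mask_row in zip(probs, labels, mask):
        for p, y, m in zip(prob_row, label_row, mask_row):
            if not m:
                continue  # unannotated AU: contributes nothing to the loss
            p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
            total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
            count += 1
    return total / count if count else 0.0


if __name__ == "__main__":
    # Frame 1 comes from a dataset that labels both AUs; frame 2 from one
    # that labels only the first AU.
    probs = [[0.9, 0.2], [0.7, 0.5]]
    labels = [[1, 0], [1, None]]
    mask = [[1, 1], [1, 0]]
    print(f"masked BCE: {masked_bce(probs, labels, mask):.4f}")
```

Under this reading, the prediction for an unlabeled AU can take any value without changing the loss, so frames from partially annotated datasets still contribute through the AUs they do cover.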

Predicting risk of delirium from ambient noise and light information in the ICU

Mar 11, 2023

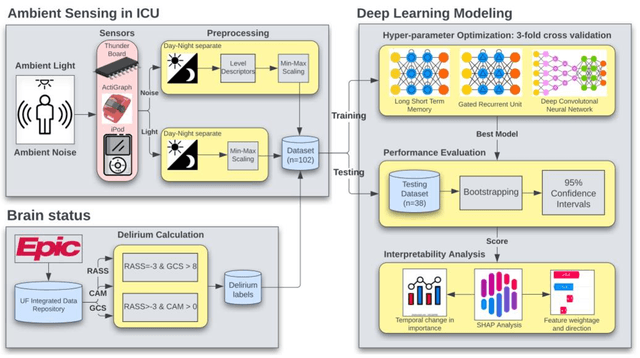

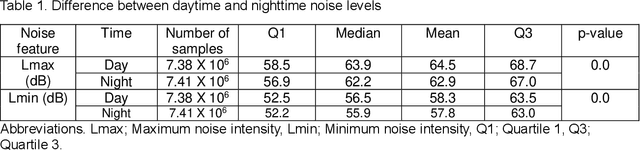

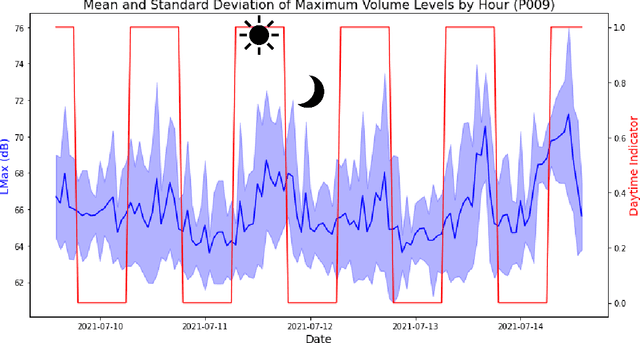

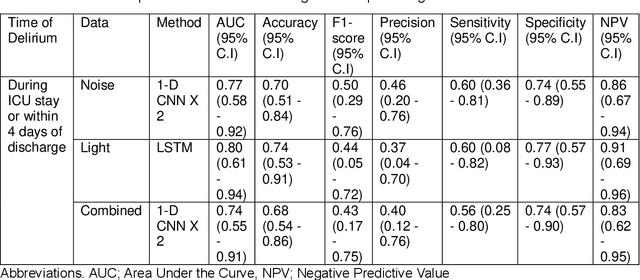

Abstract: Existing Intensive Care Unit (ICU) delirium prediction models do not consider environmental factors despite strong evidence of their influence on delirium. This study reports the first deep learning-based delirium prediction model for ICU patients using only ambient noise and light information. Ambient light and noise intensities were measured from ICU rooms of 102 patients from May 2021 to September 2022 using Thunderboard, ActiGraph sensors and an iPod with AudioTools application. These measurements were divided into daytime (0700 to 1859) and nighttime (1900 to 0659). Deep learning models were trained using this data to predict the incidence of delirium during ICU stay or within 4 days of discharge. Finally, outcome scores were analyzed to evaluate the importance and directionality of every feature. Daytime noise levels were significantly higher than nighttime noise levels. When using only noise features or a combination of noise and light features, 1-D convolutional neural networks (CNN) achieved the strongest performance: AUC=0.77, 0.74; Sensitivity=0.60, 0.56; Specificity=0.74, 0.74; Precision=0.46, 0.40, respectively. Using only light features, Long Short-Term Memory (LSTM) networks performed best: AUC=0.80, Sensitivity=0.60, Specificity=0.77, Precision=0.37. Maximum nighttime and minimum daytime noise levels were the strongest positive and negative predictors of delirium, respectively. Nighttime light level was a stronger predictor of delirium than daytime light level. Total influence of light features outweighed that of noise features on the second and fourth day of ICU stay. This study shows that ambient light and noise intensities are strong predictors of long-term delirium incidence in the ICU. It reveals that daytime and nighttime environmental factors might influence delirium differently and that the importance of light and noise levels varies over the course of an ICU stay.
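The daytime and nighttime windows stated in the abstract (0700 to 1859 and 1900 to 0659) can be applied to timestamped sensor readings as in the minimal sketch below. The window boundaries come from the abstract; the function names, the choice of min, max, and mean as summary statistics, and the sample readings are hypothetical and do not reproduce the study's actual feature pipeline.

```python
from datetime import datetime


def period_of_day(timestamp):
    """Return "day" for 07:00-18:59 and "night" for 19:00-06:59."""
    return "day" if 7 <= timestamp.hour < 19 else "night"


def summarize_by_period(readings):
    """Group (timestamp, value) readings by period and summarize each group.

    The min/max/mean statistics are illustrative choices, not the study's
    documented feature set.
    """
    groups = {"day": [], "night": []}
    for timestamp, value in readings:
        groups[period_of_day(timestamp)].append(value)

    summary = {}
    for period, values in groups.items():
        if values:
            summary[period] = {
                "min": min(values),
                "max": max(values),
                "mean": sum(values) / len(values),
            }
    return summary


if __name__ == "__main__":
    # Made-up noise readings in arbitrary units, for illustration only.
    readings = [
        (datetime(2021, 5, 1, 8, 0), 50.0),
        (datetime(2021, 5, 1, 12, 0), 60.0),
        (datetime(2021, 5, 1, 23, 0), 40.0),
    ]
    print(summarize_by_period(readings))
```

Comparing only the hour is enough here because both windows begin and end on hour boundaries, so a reading at 18:59 falls in the daytime group and one at 19:00 in the nighttime group.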
