Bartosz Krawczyk

Deep Imbalanced Multi-Target Regression: 3D Point Cloud Voxel Content Estimation in Simulated Forests

Nov 16, 2025

Abstract:Voxelization is an effective approach to reduce the computational cost of processing Light Detection and Ranging (LiDAR) data, yet it results in a loss of fine-scale structural information. This study explores whether low-level voxel content information, specifically target occupancy percentage within a voxel, can be inferred from high-level voxelized LiDAR point cloud data collected from Digital Imaging and Remote Sensing Image Generation (DIRSIG) software. In our study, the targets include bark, leaf, soil, and miscellaneous materials. We propose a multi-target regression approach in the context of imbalanced learning using Kernel Point Convolutions (KPConv). Our research leverages a cost-sensitive learning technique called density-based relevance (DBR) to address class imbalance. We employ weighted Mean Squared Error (MSE), Focal Regression (FocalR), and regularization to improve the optimization of KPConv. This study performs a sensitivity analysis on the voxel size (0.25 to 2 meters) to evaluate the effect of various grid representations in capturing the nuances of the forest. This sensitivity analysis reveals that larger voxel sizes (e.g., 2 meters) result in lower errors due to reduced variability, while smaller voxel sizes (e.g., 0.25 or 0.5 meters) exhibit higher errors, particularly within the canopy, where variability is greatest. For bark and leaf targets, error values for the smaller voxel size datasets (0.25 and 0.5 meters) were significantly higher than those for the larger voxel size datasets (2 meters), highlighting the difficulty in accurately estimating within-canopy voxel content at fine resolutions. This suggests that the choice of voxel size is application-dependent. Our work fills the gap in deep imbalanced learning models for multi-target regression and simulated datasets for 3D LiDAR point clouds of forests.
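The weighted MSE mentioned in this abstract can be illustrated with a small sketch. It assumes targets are occupancy fractions in [0, 1] and uses a simple inverse-frequency histogram weighting as a stand-in. This is not the paper's DBR formulation, and the names `inverse_frequency_weights`, `weighted_mse`, and `n_bins` are hypothetical.

```python
from collections import Counter


def inverse_frequency_weights(targets, n_bins=10):
    """Hypothetical relevance stand-in: rarer target values get larger weights.

    Assumes every target lies in [0, 1]. This is NOT the paper's DBR.
    """
    bins = [min(int(t * n_bins), n_bins - 1) for t in targets]
    counts = Counter(bins)
    raw = [1.0 / counts[b] for b in bins]
    mean_raw = sum(raw) / len(raw)
    # Normalise so that the weights average to 1.
    return [r / mean_raw for r in raw]


def weighted_mse(y_true, y_pred, weights):
    """Weighted squared error averaged over all samples and targets.

    Each argument is a list of rows, one row per voxel, one entry per target.
    """
    total = 0.0
    n_terms = 0
    for truth_row, pred_row, weight_row in zip(y_true, y_pred, weights):
        for t, p, w in zip(truth_row, pred_row, weight_row):
            total += w * (t - p) ** 2
            n_terms += 1
    return total / n_terms
```

Under this weighting, a voxel whose target value falls in a sparsely populated bin contributes more to the loss than one from a crowded bin, which is the general idea behind cost-sensitive regression.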

OpenScout v1.1 mobile robot: a case study on open hardware continuation

Aug 01, 2025

Abstract:OpenScout is an Open Source Hardware (OSH) mobile robot for research and industry. It is extended to v1.1 which includes simplified, cheaper and more powerful onboard compute hardware; a simulated ROS2 interface; and a Gazebo simulation. Changes, their rationale, project methodology, and results are reported as an OSH case study.

Holistic Continual Learning under Concept Drift with Adaptive Memory Realignment

Jul 03, 2025

Abstract:Traditional continual learning methods prioritize knowledge retention and focus primarily on mitigating catastrophic forgetting, implicitly assuming that the data distribution of previously learned tasks remains static. This overlooks the dynamic nature of real-world data streams, where concept drift permanently alters previously seen data and demands both stability and rapid adaptation. We introduce a holistic framework for continual learning under concept drift that simulates realistic scenarios by evolving task distributions. As a baseline, we consider Full Relearning (FR), in which the model is retrained from scratch on newly labeled samples from the drifted distribution. While effective, this approach incurs substantial annotation and computational overhead. To address these limitations, we propose Adaptive Memory Realignment (AMR), a lightweight alternative that equips rehearsal-based learners with a drift-aware adaptation mechanism. AMR selectively removes outdated samples of drifted classes from the replay buffer and repopulates it with a small number of up-to-date instances, effectively realigning memory with the new distribution. This targeted resampling matches the performance of FR while reducing the need for labeled data and computation by orders of magnitude. To enable reproducible evaluation, we introduce four concept-drift variants of standard vision benchmarks: Fashion-MNIST-CD, CIFAR10-CD, CIFAR100-CD, and Tiny-ImageNet-CD, where previously seen classes reappear with shifted representations. Comprehensive experiments on these datasets using several rehearsal-based baselines show that AMR consistently counters concept drift, maintaining high accuracy with minimal overhead. These results position AMR as a scalable solution that reconciles stability and plasticity in non-stationary continual learning environments.
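The buffer realignment step described in this abstract (drop outdated samples of drifted classes, then repopulate with a few fresh ones) can be sketched roughly as follows. The function name `realign_buffer`, the `(x, label)` pair representation, and the `per_class` parameter are assumptions for illustration, and drift detection is taken as given rather than implemented.

```python
import random


def realign_buffer(buffer, drifted_classes, fresh_samples, per_class, seed=0):
    """Rough sketch of replay-buffer realignment; not the authors' code.

    buffer and fresh_samples are lists of (x, label) pairs.
    drifted_classes is assumed to come from some external drift detector.
    """
    rng = random.Random(seed)
    drifted = set(drifted_classes)
    # Keep only the samples whose class has not drifted.
    kept = [(x, y) for x, y in buffer if y not in drifted]
    # Repopulate with a small number of up-to-date samples per drifted class.
    for cls in sorted(drifted):
        candidates = [(x, y) for x, y in fresh_samples if y == cls]
        kept.extend(rng.sample(candidates, min(per_class, len(candidates))))
    return kept
```

Only the drifted classes need newly labeled data here, which is where the savings relative to retraining from scratch would come from.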

What is the role of memorization in Continual Learning?

May 23, 2025

Abstract:Memorization impacts the performance of deep learning algorithms. Prior works have studied memorization primarily in the context of generalization and privacy. This work studies the effect of memorization in incremental learning scenarios. Forgetting prevention and memorization seem similar, but their differences deserve discussion. We designed extensive experiments to evaluate the impact of memorization on continual learning. We established that learning examples with high memorization scores are forgotten faster than regular samples. Our findings also indicated that memorization is necessary to achieve the highest performance. However, in low-memory regimes, forgetting regular samples is more important. We showed that the importance of samples with a high memorization score rises as the buffer size increases. We introduced a memorization proxy and employed it in the buffer policy problem to showcase how memorization could be used during incremental training. We demonstrated that including samples with a higher proxy memorization score is beneficial when the buffer size is large.

Balanced Gradient Sample Retrieval for Enhanced Knowledge Retention in Proxy-based Continual Learning

Dec 19, 2024

Abstract:Continual learning in deep neural networks often suffers from catastrophic forgetting, where representations for previous tasks are overwritten during subsequent training. We propose a novel sample retrieval strategy from the memory buffer that leverages both gradient-conflicting and gradient-aligned samples to effectively retain knowledge about past tasks within a supervised contrastive learning framework. Gradient-conflicting samples are selected for their potential to reduce interference by re-aligning gradients, thereby preserving past task knowledge. Meanwhile, gradient-aligned samples are incorporated to reinforce stable, shared representations across tasks. By balancing gradient correction from conflicting samples with alignment reinforcement from aligned ones, our approach increases the diversity among retrieved instances and achieves superior alignment in parameter space, significantly enhancing knowledge retention and mitigating proxy drift. Empirical results demonstrate that using both sample types outperforms methods relying solely on one sample type or random retrieval. Experiments on popular continual learning benchmarks in computer vision validate our method's state-of-the-art performance in mitigating forgetting while maintaining competitive accuracy on new tasks.
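One way to picture the split between gradient-conflicting and gradient-aligned samples is to score each buffered sample by the cosine similarity between its gradient and a reference gradient, then take both ends of the ranking. This scoring rule, the equal split of `k` per group, and the names `cosine` and `balanced_retrieval` are assumptions made for this sketch; the abstract does not specify the selection criterion.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def balanced_retrieval(sample_grads, reference_grad, k):
    """Return (conflicting, aligned) index lists of length k each.

    Hypothetical criterion: the most negative cosine counts as conflicting,
    the most positive as aligned. Assumes 2 * k <= len(sample_grads),
    otherwise the two lists overlap.
    """
    order = sorted(
        range(len(sample_grads)),
        key=lambda i: cosine(sample_grads[i], reference_grad),
    )
    conflicting = order[:k]
    aligned = order[-k:] if k > 0 else []
    return conflicting, aligned
```

In a real setting the gradients would be flattened per-sample gradients from the network, which is considerably more expensive to compute than this toy ranking suggests.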

Continual Learning with Weight Interpolation

Apr 09, 2024

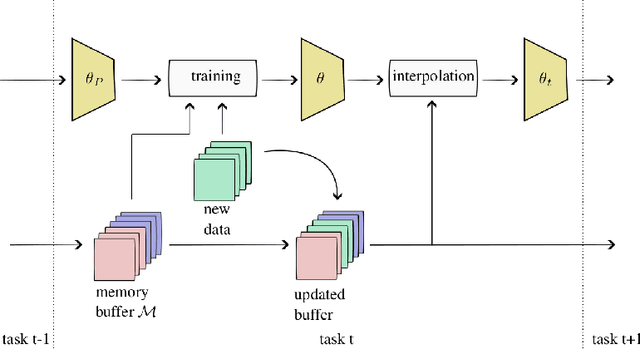

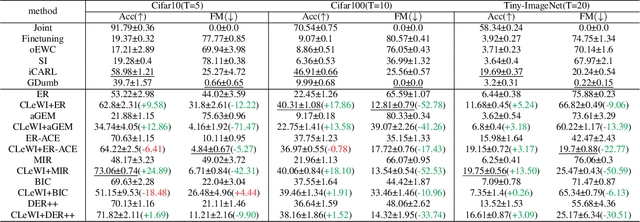

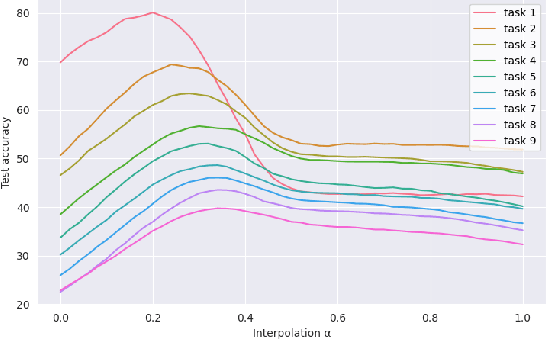

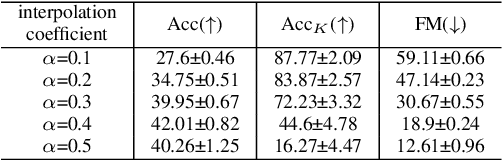

Abstract:Continual learning poses a fundamental challenge for modern machine learning systems, requiring models to adapt to new tasks while retaining knowledge from previous ones. Addressing this challenge necessitates the development of efficient algorithms capable of learning from data streams and accumulating knowledge over time. This paper proposes a novel approach to continual learning utilizing the weight consolidation method. Our method, a simple yet powerful technique, enhances robustness against catastrophic forgetting by interpolating between old and new model weights after each novel task, effectively merging two models to facilitate exploration of local minima emerging after arrival of new concepts. Moreover, we demonstrate that our approach can complement existing rehearsal-based replay approaches, improving their accuracy and further mitigating the forgetting phenomenon. Additionally, our method provides an intuitive mechanism for controlling the stability-plasticity trade-off. Experimental results showcase the significant performance enhancement to state-of-the-art experience replay algorithms the proposed weight consolidation approach offers. Our algorithm can be downloaded from https://github.com/jedrzejkozal/weight-interpolation-cl.
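The core idea of interpolating between old and new model weights can be sketched as plain linear interpolation over named parameters. The coefficient name `alpha`, the dictionary-of-lists representation, and the function name are assumptions; any additional steps in the paper's method are not reproduced here, and the linked repository is the authoritative reference.

```python
def interpolate_weights(old_weights, new_weights, alpha):
    """Return alpha * old + (1 - alpha) * new for every named parameter.

    old_weights and new_weights map parameter names to flat lists of floats.
    alpha near 1 favours the old model (stability); alpha near 0 favours
    the newly trained model (plasticity).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if old_weights.keys() != new_weights.keys():
        raise ValueError("both models must have the same parameter names")
    return {
        name: [
            alpha * o + (1.0 - alpha) * n
            for o, n in zip(old_weights[name], new_weights[name])
        ]
        for name in old_weights
    }
```

A single scalar such as `alpha` is one simple way to expose the stability-plasticity trade-off that the abstract mentions.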

Class-Incremental Mixture of Gaussians for Deep Continual Learning

Jul 09, 2023

Abstract:Continual learning models for stationary data focus on learning and retaining concepts coming to them in a sequential manner. In the most generic class-incremental environment, we have to be ready to deal with classes coming one by one, without any higher-level grouping. This requirement invalidates many previously proposed methods and forces researchers to look for more flexible alternative approaches. In this work, we follow the idea of centroid-driven methods and propose end-to-end incorporation of the mixture of Gaussians model into the continual learning framework. By employing the gradient-based approach and designing losses capable of learning discriminative features while avoiding degenerate solutions, we successfully combine the mixture model with a deep feature extractor allowing for joint optimization and adjustments in the latent space. Additionally, we show that our model can effectively learn in memory-free scenarios with fixed extractors. In the conducted experiments, we empirically demonstrate the effectiveness of the proposed solutions and exhibit the competitiveness of our model when compared with state-of-the-art continual learning baselines evaluated in the context of image classification problems.

Interpretable ML for Imbalanced Data

Dec 15, 2022

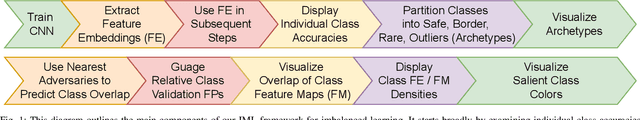

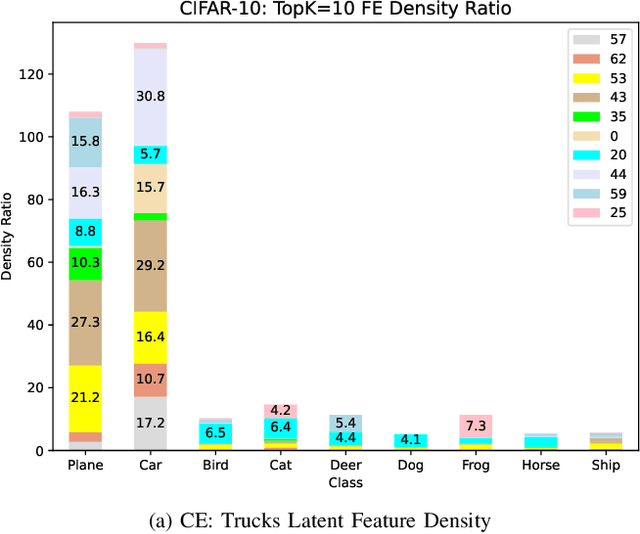

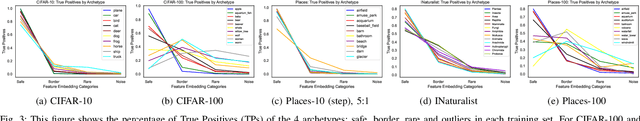

Abstract:Deep learning models are being increasingly applied to imbalanced data in high stakes fields such as medicine, autonomous driving, and intelligence analysis. Imbalanced data compounds the black-box nature of deep networks because the relationships between classes may be highly skewed and unclear. This can reduce trust by model users and hamper the progress of developers of imbalanced learning algorithms. Existing methods that investigate imbalanced data complexity are geared toward binary classification, shallow learning models and low dimensional data. In addition, current eXplainable Artificial Intelligence (XAI) techniques mainly focus on converting opaque deep learning models into simpler models (e.g., decision trees) or mapping predictions for specific instances to inputs, instead of examining global data properties and complexities. Therefore, there is a need for a framework that is tailored to modern deep networks, that incorporates large, high dimensional, multi-class datasets, and uncovers data complexities commonly found in imbalanced data (e.g., class overlap, sub-concepts, and outlier instances). We propose a set of techniques that can be used by both deep learning model users to identify, visualize and understand class prototypes, sub-concepts and outlier instances; and by imbalanced learning algorithm developers to detect features and class exemplars that are key to model performance. Our framework also identifies instances that reside on the border of class decision boundaries, which can carry highly discriminative information. Unlike many existing XAI techniques which map model decisions to gray-scale pixel locations, we use saliency through back-propagation to identify and aggregate image color bands across entire classes. Our framework is publicly available at https://github.com/dd1github/XAI_for_Imbalanced_Learning

Towards A Holistic View of Bias in Machine Learning: Bridging Algorithmic Fairness and Imbalanced Learning

Jul 13, 2022

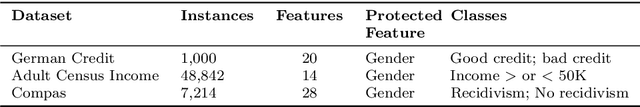

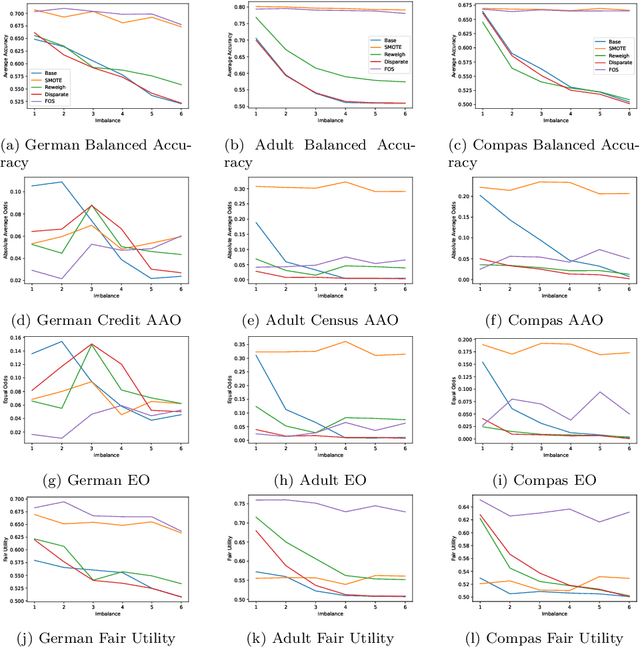

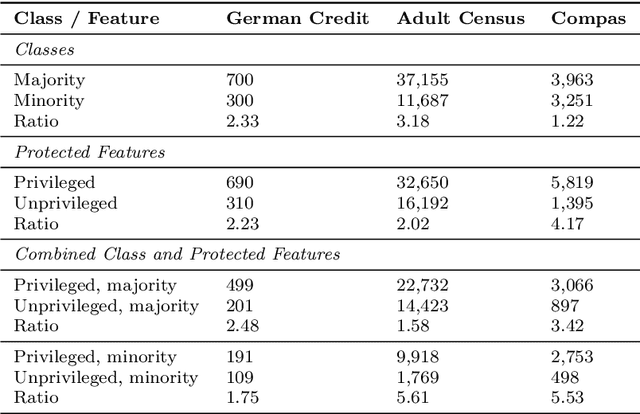

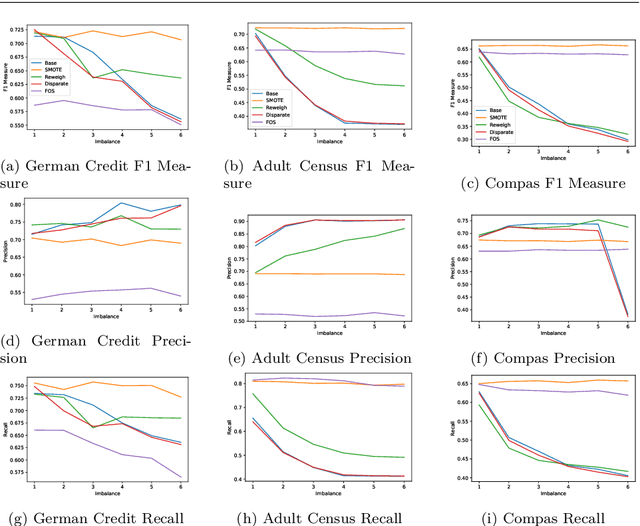

Abstract:Machine learning (ML) is playing an increasingly important role in rendering decisions that affect a broad range of groups in society. ML models inform decisions in criminal justice, the extension of credit in banking, and the hiring practices of corporations. This posits the requirement of model fairness, which holds that automated decisions should be equitable with respect to protected features (e.g., gender, race, or age) that are often under-represented in the data. We postulate that this problem of under-representation has a corollary to the problem of imbalanced data learning. This class imbalance is often reflected in both classes and protected features. For example, one class (those receiving credit) may be over-represented with respect to another class (those not receiving credit) and a particular group (females) may be under-represented with respect to another group (males). A key element in achieving algorithmic fairness with respect to protected groups is the simultaneous reduction of class and protected group imbalance in the underlying training data, which facilitates increases in both model accuracy and fairness. We discuss the importance of bridging imbalanced learning and group fairness by showing how key concepts in these fields overlap and complement each other; and propose a novel oversampling algorithm, Fair Oversampling, that addresses both skewed class distributions and protected features. Our method: (i) can be used as an efficient pre-processing algorithm for standard ML algorithms to jointly address imbalance and group equity; and (ii) can be combined with fairness-aware learning algorithms to improve their robustness to varying levels of class imbalance. Additionally, we take a step toward bridging the gap between fairness and imbalanced learning with a new metric, Fair Utility, that combines balanced accuracy with fairness.

Efficient Augmentation for Imbalanced Deep Learning

Jul 13, 2022

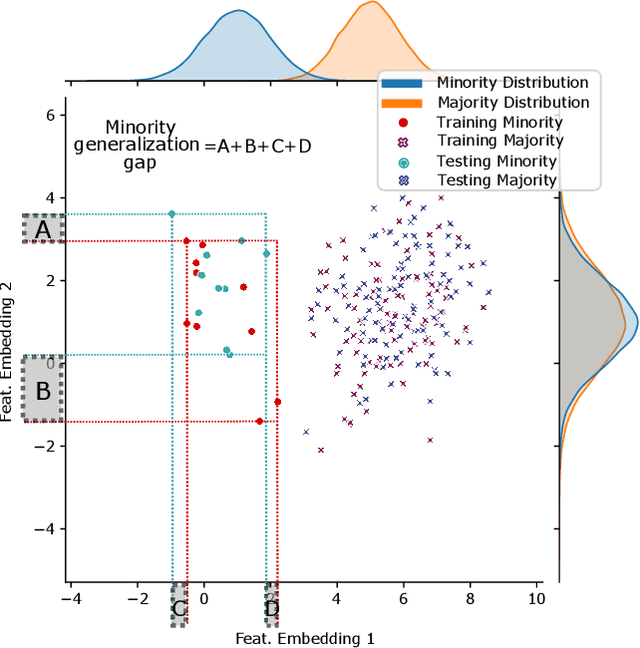

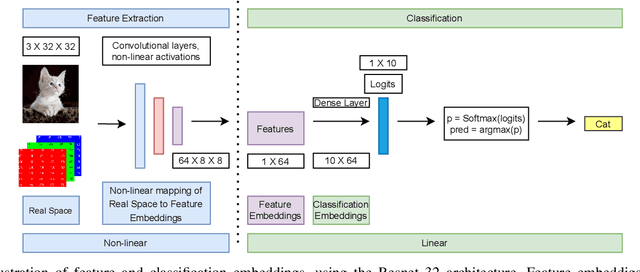

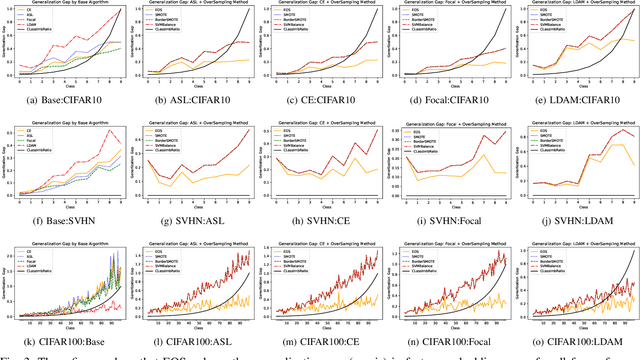

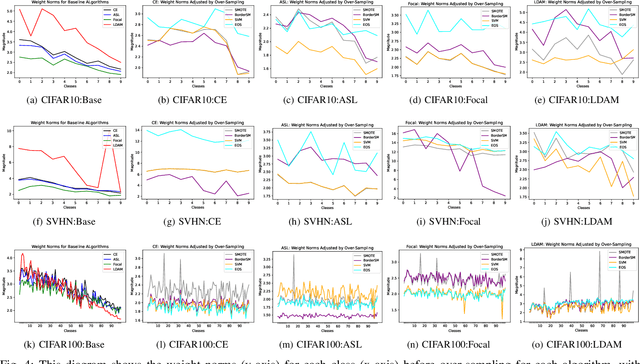

Abstract:Deep learning models memorize training data, which hurts their ability to generalize to under-represented classes. We empirically study a convolutional neural network's internal representation of imbalanced image data and measure the generalization gap between a model's feature embeddings in the training and test sets, showing that the gap is wider for minority classes. This insight enables us to design an efficient three-phase CNN training framework for imbalanced data. The framework involves training the network end-to-end on imbalanced data to learn accurate feature embeddings, performing data augmentation in the learned embedded space to balance the train distribution, and fine-tuning the classifier head on the embedded balanced training data. We propose Expansive Over-Sampling (EOS) as a data augmentation technique to utilize in the training framework. EOS forms synthetic training instances as convex combinations between the minority class samples and their nearest enemies in the embedded space to reduce the generalization gap. The proposed framework improves the accuracy over leading cost-sensitive and resampling methods commonly used in imbalanced learning. Moreover, it is more computationally efficient than standard data pre-processing methods, such as SMOTE and GAN-based oversampling, as it requires fewer parameters and less training time.
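The EOS step of forming convex combinations between minority samples and their nearest enemies in the embedded space can be sketched as below. Euclidean distance, a uniformly drawn mixing coefficient, and the names `nearest_enemy` and `expansive_oversample` are assumptions for illustration; the paper may constrain the combination differently.

```python
import math
import random


def nearest_enemy(point, label, embeddings, labels):
    """Return the closest embedding whose label differs from `label`."""
    best, best_dist = None, math.inf
    for emb, lab in zip(embeddings, labels):
        if lab == label:
            continue
        dist = math.dist(point, emb)
        if dist < best_dist:
            best, best_dist = emb, dist
    return best


def expansive_oversample(embeddings, labels, minority_label, n_new, seed=0):
    """Create n_new synthetic minority embeddings (illustrative sketch only).

    Each synthetic point lies on the segment between a random minority
    embedding and its nearest enemy. Assumes at least one minority sample
    and at least one sample of another class.
    """
    rng = random.Random(seed)
    minority = [e for e, lab in zip(embeddings, labels) if lab == minority_label]
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        enemy = nearest_enemy(base, minority_label, embeddings, labels)
        lam = rng.random()  # mixing coefficient in [0, 1)
        synthetic.append([b + lam * (e - b) for b, e in zip(base, enemy)])
    return synthetic
```

In the three-phase framework described above, points like these would be generated from the learned embeddings and used only to fine-tune the classifier head, not to retrain the feature extractor.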
