Azeddine Beghdadi

D-PerceptCT: Deep Perceptual Enhancement for Low-Dose CT Images

Nov 18, 2025

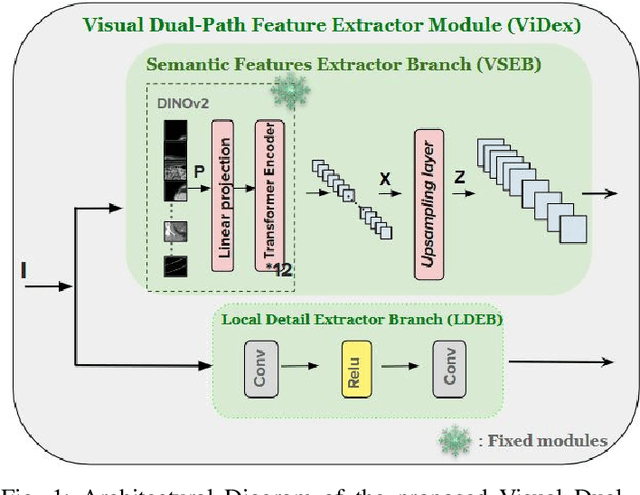

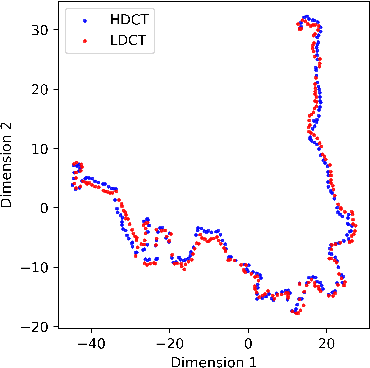

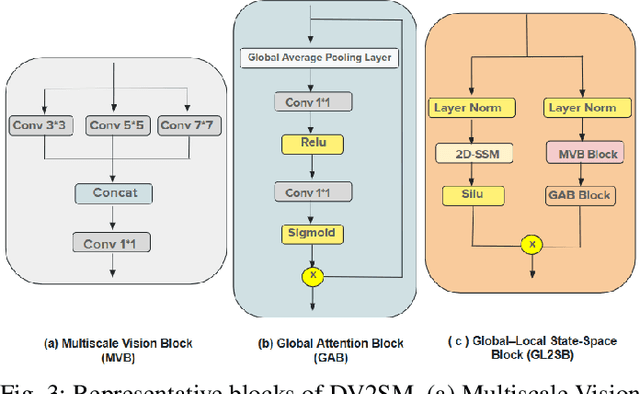

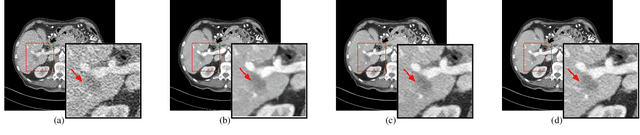

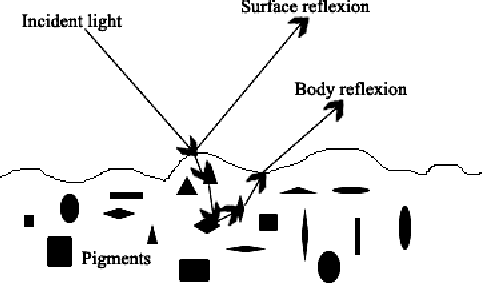

Abstract: Low-Dose Computed Tomography (LDCT) is widely used as an imaging solution to aid diagnosis and other clinical tasks. However, this comes at the price of a deterioration in image quality, because the radiation dose is lowered to reduce the risk of secondary cancer development. While some efficient methods have been proposed to enhance LDCT quality, many overestimate noise and perform excessive smoothing, leading to a loss of critical details. In this paper, we introduce D-PerceptCT, a novel architecture inspired by key principles of the Human Visual System (HVS) to enhance LDCT images. The objective is to guide the model to enhance or preserve perceptually relevant features, thereby providing radiologists with CT images where critical anatomical structures and fine pathological details are perceptually visible. D-PerceptCT consists of two main blocks: (1) a Visual Dual-path Extractor (ViDex), which integrates semantic priors from a pretrained DINOv2 model with local spatial features, allowing the network to incorporate semantic awareness during enhancement; and (2) a Global-Local State-Space block that captures long-range information and multiscale features to preserve the important structures and fine details for diagnosis. In addition, we propose a novel deep perceptual loss, designated as the Deep Perceptual Relevancy Loss Function (DPRLF), which is inspired by human contrast sensitivity, to further emphasize perceptually important features. Extensive experiments on the Mayo2016 dataset demonstrate the effectiveness of the D-PerceptCT method for LDCT enhancement, showing better preservation of structural and textural information within LDCT images compared to state-of-the-art (SOTA) methods.

A New Lightweight Hybrid Graph Convolutional Neural Network -- CNN Scheme for Scene Classification using Object Detection Inference

Jul 19, 2024

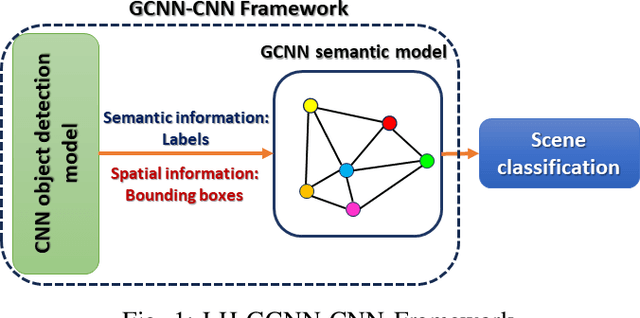

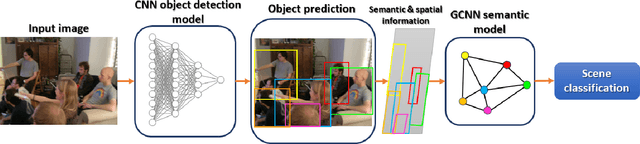

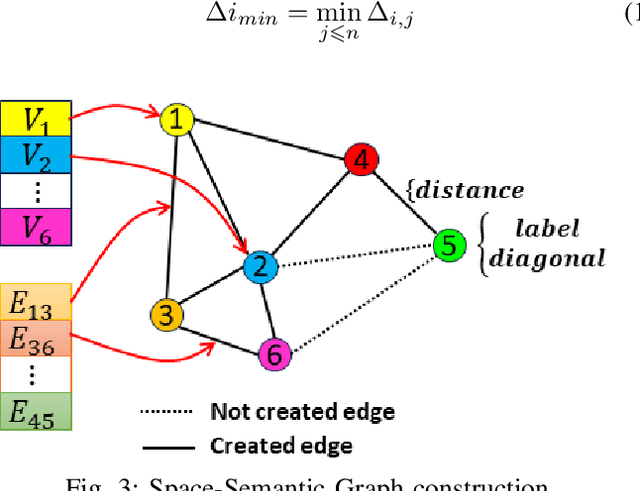

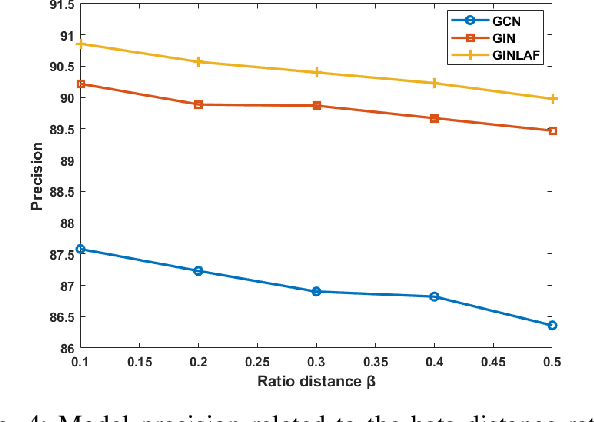

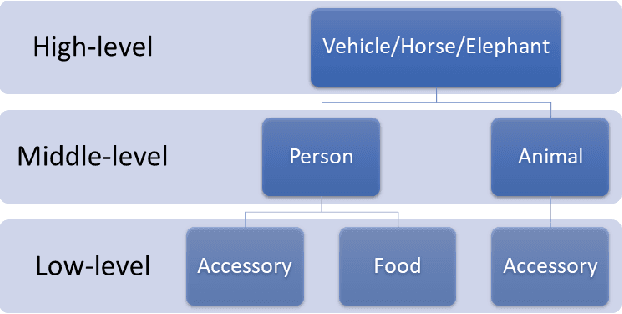

Abstract: Scene understanding plays an important role in several high-level computer vision applications, such as autonomous vehicles, intelligent video surveillance, or robotics. However, too few solutions have been proposed for indoor/outdoor scene classification to ensure scene context adaptability for computer vision frameworks. We propose the first Lightweight Hybrid Graph Convolutional Neural Network (LH-GCNN)-CNN framework as an add-on to object detection models. The proposed approach uses the output of the CNN object detection model to predict the observed scene type by generating a coherent GCNN representing the semantic and geometric content of the observed scene. This new method, applied to natural scenes, achieves an efficiency of over 90% for scene classification in a COCO-derived dataset containing a large number of different scenes, while requiring fewer parameters than traditional CNN methods. For the benefit of the scientific community, we will make the source code publicly available: https://github.com/Aymanbegh/Hybrid-GCNN-CNN.

CD-COCO: A Versatile Complex Distorted COCO Database for Scene-Context-Aware Computer Vision

Nov 12, 2023

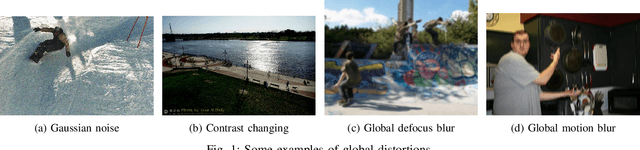

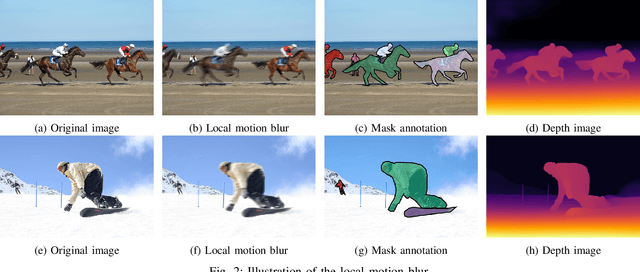

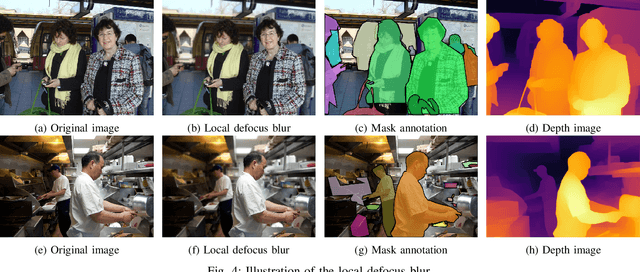

Abstract: The recent development of deep learning methods applied to vision has enabled their increasing integration into real-world applications to perform complex Computer Vision (CV) tasks. However, image acquisition conditions have a major impact on the performance of high-level image processing. A possible solution to overcome these limitations is to artificially augment the training databases or to design deep learning models that are robust to signal distortions. We opt here for the first solution by enriching the database with complex and realistic distortions that have so far been ignored in existing databases. To this end, we built a new versatile database derived from the well-known MS-COCO database, to which we applied local and global photo-realistic distortions. The new local distortions are generated by considering the scene context of the images, exploiting the depth information of the objects in the scene as well as their semantics. This guarantees a high level of photo-realism and makes it possible to explore real scenarios ignored in conventional databases dedicated to various CV applications. Our versatile database offers an efficient solution to improve the robustness of various CV tasks such as Object Detection (OD), scene segmentation, and distortion-type classification methods. The image database, scene classification index, and distortion generation codes are publicly available at https://github.com/Aymanbegh/CD-COCO.

Self-supervised Learning for Gastrointestinal Pathologies Endoscopy Image Classification with Triplet Loss

Mar 03, 2023

Abstract: Recently, a growing number of GI tract datasets have been introduced through contests and challenges. The most common task is to classify images from the GI tract into various classes. However, existing approaches exhibit many limitations. In this paper, we aim to develop a computer-aided diagnosis system to classify the pathological findings in endoscopy images; the system can classify some common pathologies including polyps, esophagitis, and ulcerative colitis. To evaluate the proposed work, we use the public Hyper-Kvasir dataset instead of gathering new data. The key idea of our system is self-supervised learning based on the Barlow Twins framework, with endoscopy image classification as the downstream task, integrated with triplet loss and focal loss functions. The self-supervision framework and the focal loss function are used to overcome class-imbalanced data, while the triplet loss function tackles the domain-specific inter-/intra-class problems in endoscopy images. An extensive experimental study on the pathological finding images in the Hyper-Kvasir dataset shows that our proposed system generally outperforms the compared methods while using a simple neural network model. This means the proposed system can classify pathology images in the GI tract efficiently and accurately.
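To make the two loss terms named in the abstract concrete, here is a minimal per-sample sketch in plain Python. It uses the standard textbook definitions of focal loss and triplet loss; the function names, the default `gamma`, `alpha`, and `margin` values, and the simple weighted sum in `combined_loss` are illustrative assumptions, not the authors' configuration.

```python
import math

def focal_loss(probs, target, gamma=2.0, alpha=1.0):
    """Standard focal loss for one sample: -alpha * (1 - p_t)^gamma * log(p_t).

    probs  : predicted class probabilities (should sum to 1)
    target : index of the true class
    gamma, alpha : assumed defaults, not values taken from the paper
    """
    p_t = min(max(probs[target], 1e-12), 1.0)  # clamp to avoid log(0)
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet loss on Euclidean distances between embeddings."""
    d_pos = math.dist(anchor, positive)  # anchor to same-class sample
    d_neg = math.dist(anchor, negative)  # anchor to other-class sample
    return max(0.0, d_pos - d_neg + margin)

def combined_loss(probs, target, anchor, positive, negative, weight=1.0):
    """Hypothetical combination: focal term plus a weighted triplet term."""
    return focal_loss(probs, target) + weight * triplet_loss(anchor, positive, negative)
```

With `gamma=0` the focal term reduces to ordinary cross-entropy; larger `gamma` down-weights samples the classifier already handles well, which is why the focal loss is commonly used for class-imbalanced data. The triplet term is zero once the other-class sample is at least `margin` farther from the anchor than the same-class sample.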

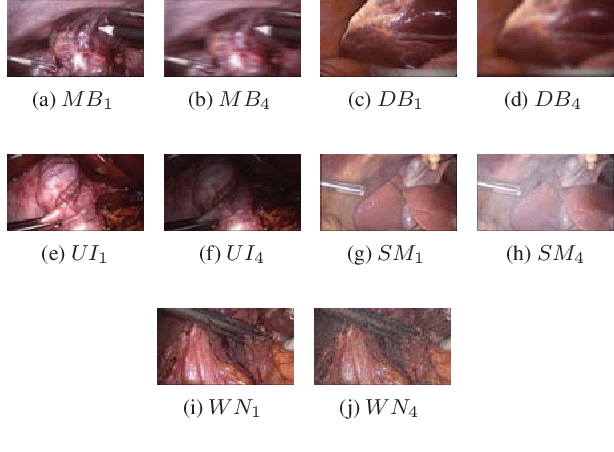

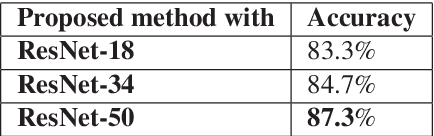

End-to-End Blind Quality Assessment for Laparoscopic Videos using Neural Networks

Feb 09, 2022

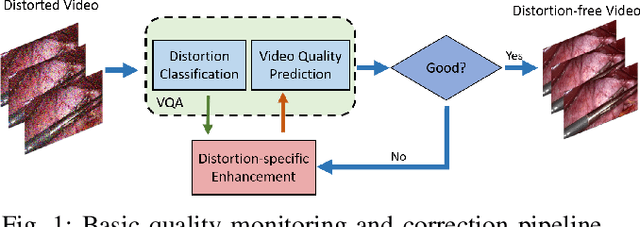

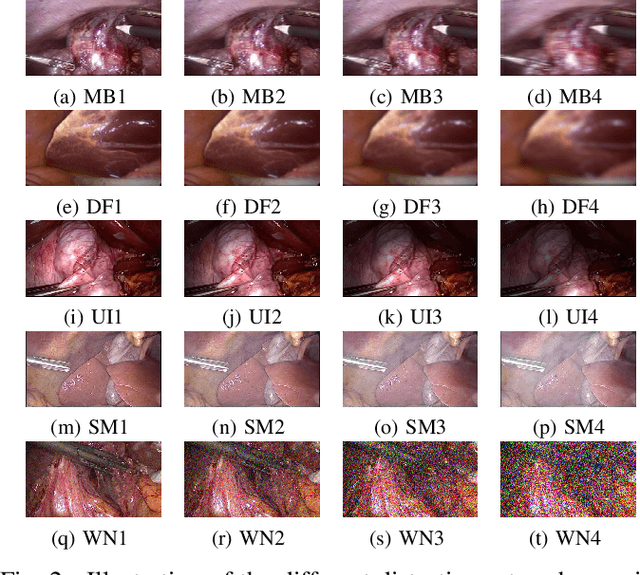

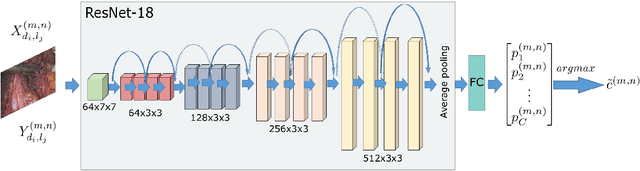

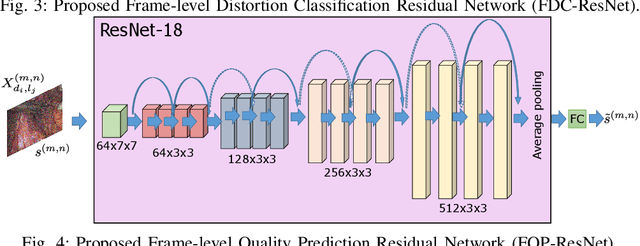

Abstract: Video quality assessment is a challenging problem of critical significance in the context of medical imaging. For instance, in laparoscopic surgery, the acquired video data suffers from different kinds of distortion that not only hinder surgery performance but also affect the execution of subsequent tasks in surgical navigation and robotic surgeries. For this reason, we propose in this paper neural network-based approaches for distortion classification as well as quality prediction. More precisely, a Residual Network (ResNet) based approach is first developed for simultaneous ranking and classification tasks. Then, this architecture is extended to make it appropriate for the quality prediction task by using an additional Fully Connected Neural Network (FCNN). To train the overall architecture (ResNet and FCNN models), transfer learning and end-to-end learning approaches are investigated. Experimental results, carried out on a new laparoscopic video quality database, have shown the efficiency of the proposed methods compared to recent conventional and deep learning based approaches.

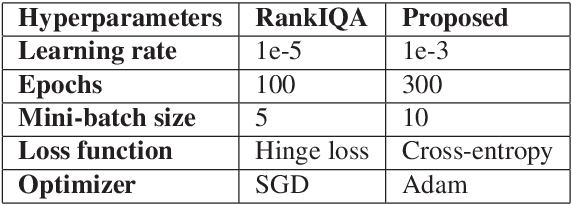

Residual Networks based Distortion Classification and Ranking for Laparoscopic Image Quality Assessment

Jun 12, 2021

Abstract: Laparoscopic images and videos are often affected by different types of distortion such as noise, smoke, blur, and nonuniform illumination. Automatic detection of these distortions, generally followed by the application of appropriate image quality enhancement methods, is critical to avoid errors during surgery. In this context, a crucial step involves an objective assessment of the image quality, which is a two-fold problem requiring both the classification of the distortion type affecting the image and the estimation of the severity level of that distortion. Unlike existing image quality measures, which focus mainly on estimating a quality score, we propose in this paper to formulate the image quality assessment task as a multi-label classification problem that takes into account both the type and the severity level (or rank) of distortions. This problem is then solved with a deep neural network-based approach. The results obtained on a laparoscopic image dataset show the efficiency of the proposed approach.
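One way to picture the multi-label formulation described above is a target vector with one slot per (distortion type, severity level) pair. The sketch below is only an illustration under stated assumptions: the four type names come from the abstract, but the number of severity levels (`NUM_LEVELS = 4`), the vector layout, and the `encode`/`decode` helpers are hypothetical and not taken from the paper.

```python
# Distortion types named in the abstract; the identifiers are illustrative.
DISTORTIONS = ["noise", "smoke", "blur", "nonuniform_illumination"]
NUM_LEVELS = 4  # assumed number of severity levels, not stated in the abstract

def encode(labels):
    """Turn {distortion: level} into a multi-hot target vector.

    labels maps a distortion name to a severity level in 1..NUM_LEVELS.
    Each distortion owns a block of NUM_LEVELS consecutive slots.
    """
    vec = [0] * (len(DISTORTIONS) * NUM_LEVELS)
    for name, level in labels.items():
        if not 1 <= level <= NUM_LEVELS:
            raise ValueError(f"severity level out of range: {level}")
        vec[DISTORTIONS.index(name) * NUM_LEVELS + (level - 1)] = 1
    return vec

def decode(scores, threshold=0.5):
    """Recover {distortion: level} from per-slot scores.

    For each distortion, take its highest-scoring level and keep it only
    if the score reaches the threshold (otherwise: distortion absent).
    """
    result = {}
    for i, name in enumerate(DISTORTIONS):
        block = scores[i * NUM_LEVELS:(i + 1) * NUM_LEVELS]
        best = max(range(NUM_LEVELS), key=lambda k: block[k])
        if block[best] >= threshold:
            result[name] = best + 1
    return result
```

For example, `encode({"smoke": 2, "blur": 4})` marks two slots, so a single image can carry several distortions at once, each with its own severity.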

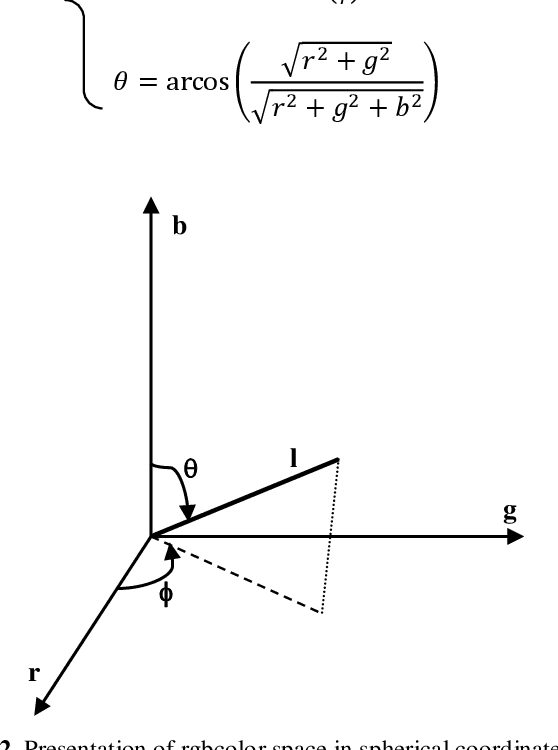

Enhancing road signs segmentation using photometric invariants

Oct 26, 2020

Abstract: Road sign detection and recognition in natural scenes is one of the most important tasks in the design of Intelligent Transport Systems (ITS). However, illumination changes remain a major problem. In this paper, an efficient approach to road sign segmentation based on photometric invariants is proposed. This method is based on color information using a hybrid distance, exploiting the chromatic distance and the red and blue ratio, in the l Theta Phi color space, which is invariant to highlight, shading, and shadow changes. A comparative study is performed to demonstrate the robustness of this approach over the most frequently used methods for road sign segmentation. The experimental results and the detailed analysis show the high performance of the algorithm described in this paper.

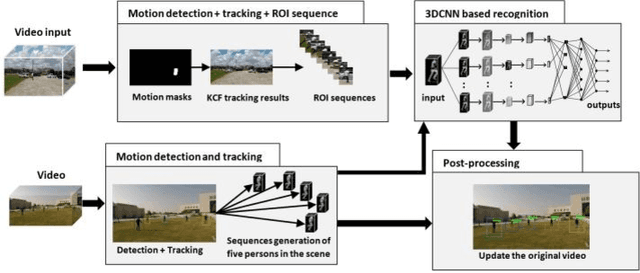

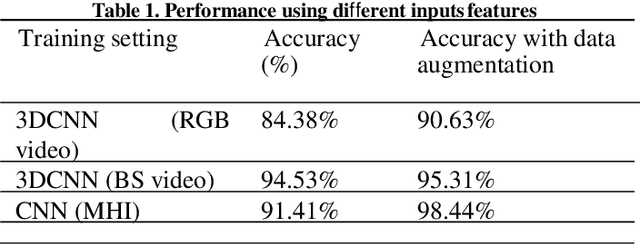

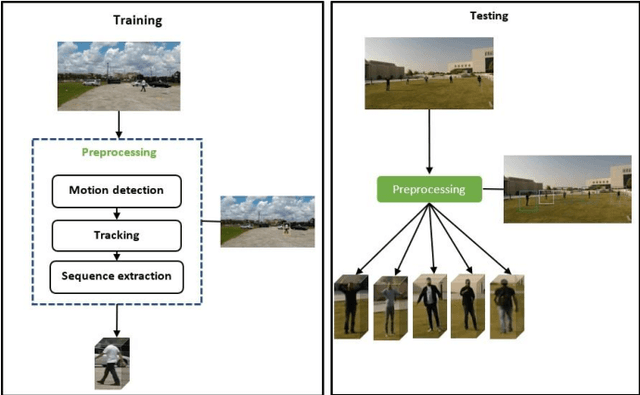

A Novel Approach for Robust Multi Human Action Detection and Recognition based on 3-Dimentional Convolutional Neural Networks

Jul 25, 2019

Abstract: In recent years, various attempts have been made to explore the use of spatial and temporal information for human action recognition using convolutional neural networks (CNNs). However, only a small number of methods are available for the recognition of many human actions performed by more than one person in the same surveillance video. This paper proposes a novel technique for multiple human action recognition using a new architecture based on 3-dimensional deep learning, with application to video surveillance systems. The first stage of the model uses a new representation of the data by extracting the sequence of each person acting in the scene. An analysis of each sequence to detect the corresponding actions is also proposed. The KTH, Weizmann, and UCF-ARG datasets were used for training; new datasets, which include a number of persons performing multiple actions, were also constructed and used for testing the proposed algorithm. The results of this work revealed that the proposed method provides more accurate multi-human action recognition, achieving 98%. Other videos were used for the evaluation, including datasets (UCF101, Hollywood2, HMDB51, and YouTube) without any preprocessing, and the results obtained suggest that our proposed method clearly improves performance when compared to state-of-the-art methods.

Adaptive Context Encoding Module for Semantic Segmentation

Jul 13, 2019

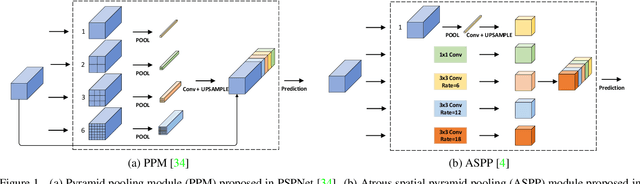

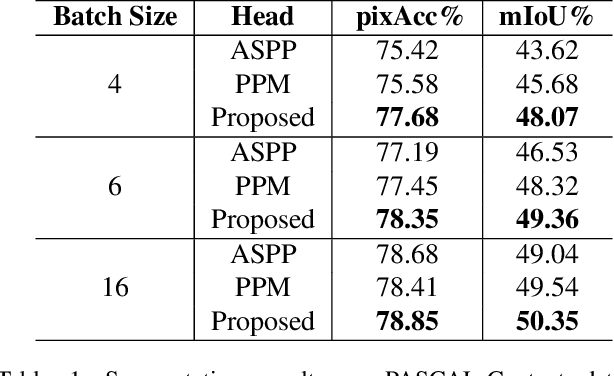

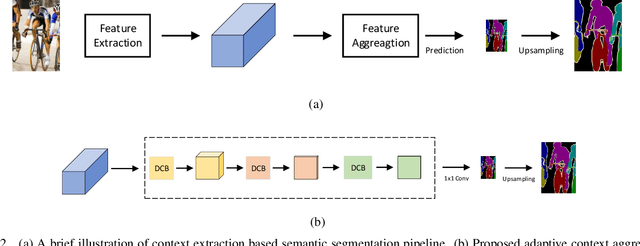

Abstract: Object sizes in images are diverse; therefore, capturing multiple-scale context information is essential for semantic segmentation. Existing context aggregation methods such as the pyramid pooling module (PPM) and atrous spatial pyramid pooling (ASPP) use different pooling sizes or atrous rates so that multiple-scale information is captured. However, the pooling sizes and atrous rates are chosen manually and empirically. In order to capture object context information adaptively, in this paper we propose an adaptive context encoding (ACE) module based on the deformable convolution operation to augment multiple-scale information. Our ACE module can be embedded easily into other Convolutional Neural Networks (CNNs) for context aggregation. The effectiveness of the proposed module is demonstrated on the Pascal-Context and ADE20K datasets. Although our proposed ACE consists of only three deformable convolution blocks, it outperforms PPM and ASPP in terms of mean Intersection over Union (mIoU) on both datasets. The experimental study confirms that our proposed module is effective compared to state-of-the-art methods.
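Since the comparison above is reported in mIoU, a short sketch of how that metric is commonly computed may help. This is a plain-Python illustration over flattened per-pixel label lists; the function name and the choice to skip classes absent from both prediction and ground truth are assumptions, and benchmark toolkits may handle such edge cases differently.

```python
def mean_iou(pred, target, num_classes):
    """Mean Intersection over Union across classes.

    pred, target : equal-length sequences of per-pixel class indices
    num_classes  : number of classes to evaluate
    Classes that appear in neither pred nor target are skipped
    (an assumed convention).
    """
    ious = []
    for c in range(num_classes):
        # Pixels labeled c in both prediction and ground truth.
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        # Pixels labeled c in either prediction or ground truth.
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0
```

For `pred = [0, 0, 1, 1]` and `target = [0, 1, 1, 1]`, class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is 7/12.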

Can Image Enhancement be Beneficial to Find Smoke Images in Laparoscopic Surgery?

Dec 27, 2018

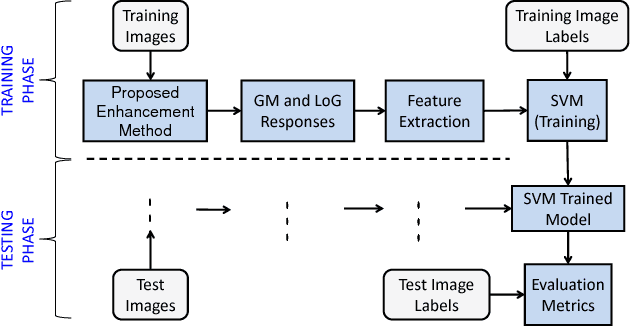

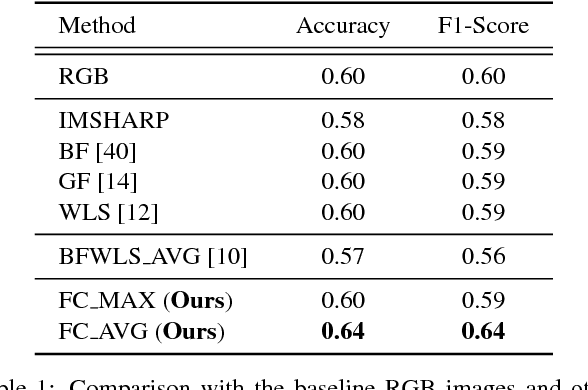

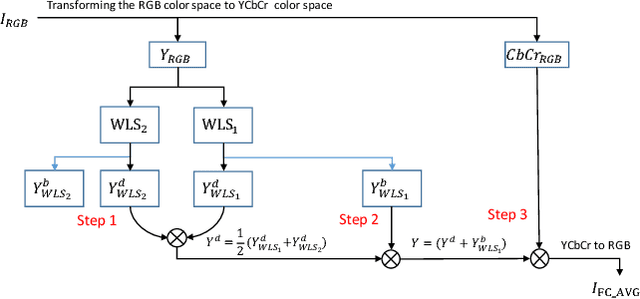

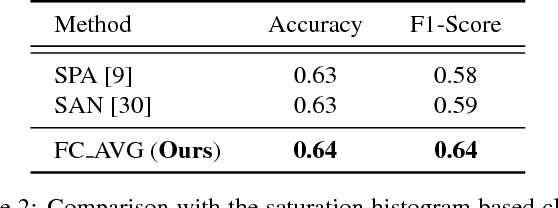

Abstract: Laparoscopic surgery has a limited field of view. Laser ablation in laparoscopic surgery causes smoke, which inevitably affects the surgeon's visibility. Therefore, it is of vital importance to remove the smoke so that a clear visualization is possible. In order to employ a desmoking technique, one needs to know beforehand whether the image contains smoke. To date, there exists no accurate method that can classify smoke/non-smoke images completely. In this work, we propose a new enhancement method that enhances the informative details in RGB images for discrimination of smoke/non-smoke images. Our proposed method utilizes a weighted least squares (WLS) optimization framework. For feature extraction, we use statistical features based on the bivariate histogram distribution of gradient magnitude (GM) and Laplacian of Gaussian (LoG). We then train an SVM classifier on the binary smoke/non-smoke classification task. We demonstrate the effectiveness of our method on the Cholec80 dataset. Experiments using our proposed enhancement method show promising results, with improvements of 4% in accuracy and 4% in F1-Score over the baseline performance of RGB images. In addition, our approach improves over the saturation histogram based classification methodologies Saturation Analysis (SAN) and Saturation Peak Analysis (SPA) by 1/5% and 1/6% in accuracy/F1-Score metrics.
