Auralee Edelen

A Start To End Machine Learning Approach To Maximize Scientific Throughput From The LCLS-II-HE

May 29, 2025

Abstract:With the increasing brightness of light sources, including the diffraction-limited brightness upgrade of APS and the high-repetition-rate upgrade of LCLS, the experiments proposed for them are becoming increasingly complex. For instance, experiments at LCLS-II-HE will require the X-ray beam to be within a fraction of a micron in diameter, with pointing stability of a few nanoradians, at the end of a kilometer-long electron accelerator, a hundred-meter-long undulator section, and tens of meters of X-ray optics. This enhancement of brightness will increase the data production rate to rival the largest data generators in the world. Without real-time active feedback control and an optimized pipeline to transform measurements into scientific information and insights, researchers will drown in a deluge of mostly useless data and fail to extract the highly sophisticated insights that the recent brightness upgrades promise. In this article, we outline the strategy we are developing at SLAC to implement machine-learning-driven optimization, automation, and real-time knowledge extraction, from the electron injector at the start of the electron accelerator, through the multidimensional X-ray optical systems, to the experimental endstations and the high-readout-rate, multi-megapixel detectors at LCLS, in order to deliver the design performance to users. This is illustrated via examples from Accelerator, Optics and End User applications.

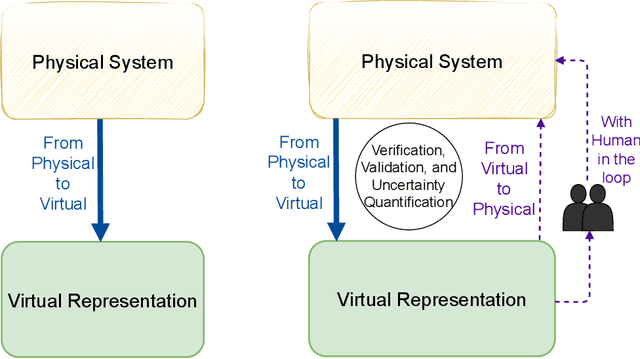

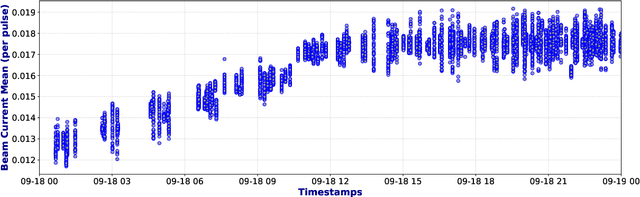

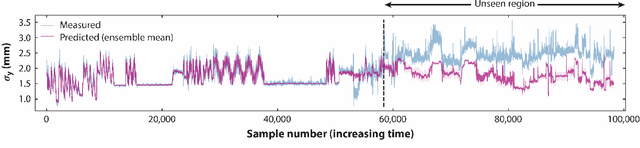

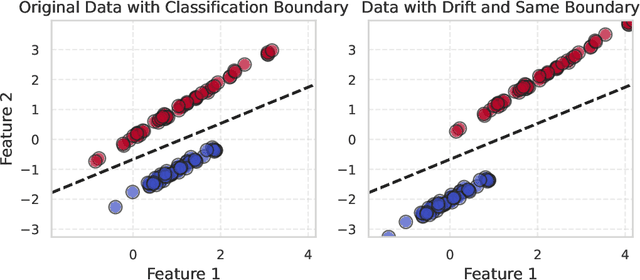

Outlook Towards Deployable Continual Learning for Particle Accelerators

Apr 04, 2025

Abstract:Particle accelerators are high-power, complex machines. To ensure uninterrupted operation of these machines, thousands of pieces of equipment need to be synchronized, which requires addressing many challenges including design, optimization and control, anomaly detection, and machine protection. With recent advancements, Machine Learning (ML) holds promise to assist in more advanced prognostics, optimization, and control. While ML-based solutions have been developed for several applications in particle accelerators, only a few have reached deployment and even fewer have reached long-term usage, due to particle accelerator data distribution drifts caused by changes in both measurable and non-measurable parameters. In this paper, we identify some of the key areas within particle accelerators where continual learning can maintain ML model performance under distribution drifts. In particular, we first discuss existing applications of ML in particle accelerators and their limitations due to distribution drift. Next, we review existing continual learning techniques and investigate their potential applications to address data distribution drifts in accelerators. By identifying the opportunities and challenges in applying continual learning, this paper seeks to open up this new field and inspire more research efforts towards deployable continual learning for particle accelerators.

Harnessing the Power of Gradient-Based Simulations for Multi-Objective Optimization in Particle Accelerators

Nov 07, 2024

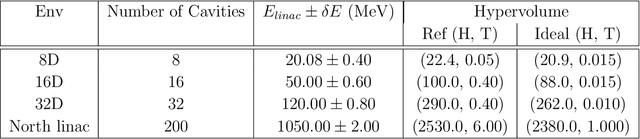

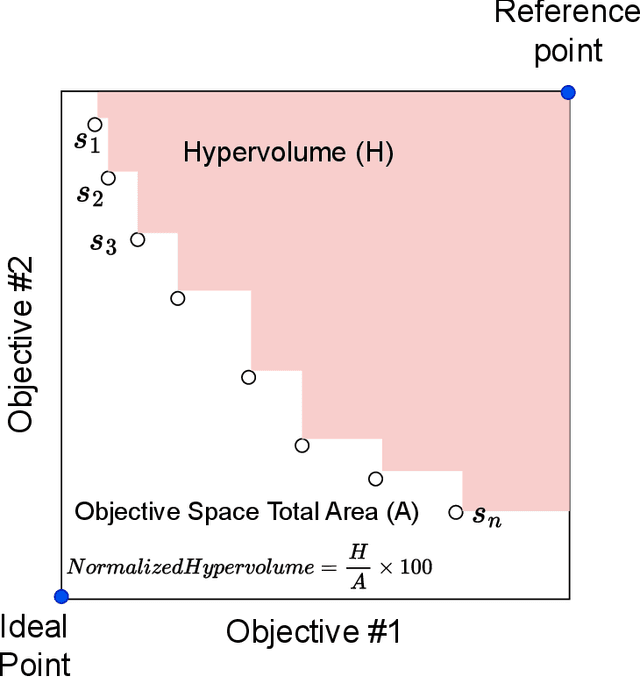

Abstract:Particle accelerator operation requires simultaneous optimization of multiple objectives. Multi-Objective Optimization (MOO) is particularly challenging due to trade-offs between the objectives. Evolutionary algorithms, such as the genetic algorithm (GA), have been leveraged for many optimization problems; however, by design they do not apply to complex control problems. This paper demonstrates the power of differentiability for solving MOO problems using a Deep Differentiable Reinforcement Learning (DDRL) algorithm in particle accelerators. We compare the DDRL algorithm with Model Free Reinforcement Learning (MFRL), GA, and Bayesian Optimization (BO) for simultaneous optimization of heat load and trip rates in the Continuous Electron Beam Accelerator Facility (CEBAF). The underlying problem enforces strict constraints on both individual states and actions, as well as a cumulative (global) constraint for the energy requirements of the beam. A physics-based surrogate model based on real data is developed. This surrogate model is differentiable and allows back-propagation of gradients. The results are evaluated in the form of a Pareto front for the two objectives. We show that DDRL outperforms MFRL, BO, and GA on high-dimensional problems.
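The abstract evaluates results as a Pareto front over two objectives. As a minimal sketch of what that means, assuming both objectives are minimized, the function below filters a set of candidate outcomes down to the non-dominated ones. The function name and the sample (heat load, trip rate) pairs are hypothetical and are not taken from the paper.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the non-dominated points, assuming both objectives are minimized."""
    front: List[Point] = []
    best_second = float("inf")
    # Sort by the first objective (ties broken by the second), then keep a
    # point only if it strictly improves on the best second objective so far.
    for p in sorted(set(points)):
        if p[1] < best_second:
            front.append(p)
            best_second = p[1]
    return front


# Hypothetical (heat load, trip rate) outcomes in arbitrary units
candidates = [(3.0, 1.0), (1.0, 4.0), (2.0, 2.0), (2.5, 2.5), (4.0, 0.5), (3.5, 1.5)]
front = pareto_front(candidates)
print(front)  # [(1.0, 4.0), (2.0, 2.0), (3.0, 1.0), (4.0, 0.5)]
```

Comparing optimizers on such a problem then amounts to comparing the fronts they reach, for example by how close each front lies to the ideal corner.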

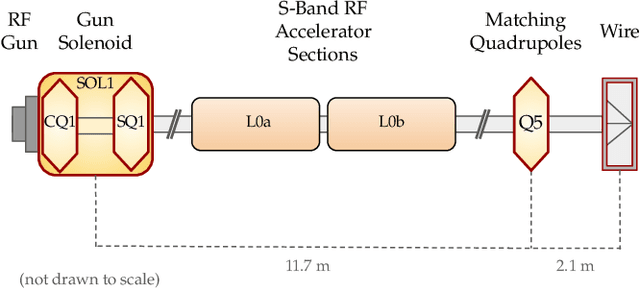

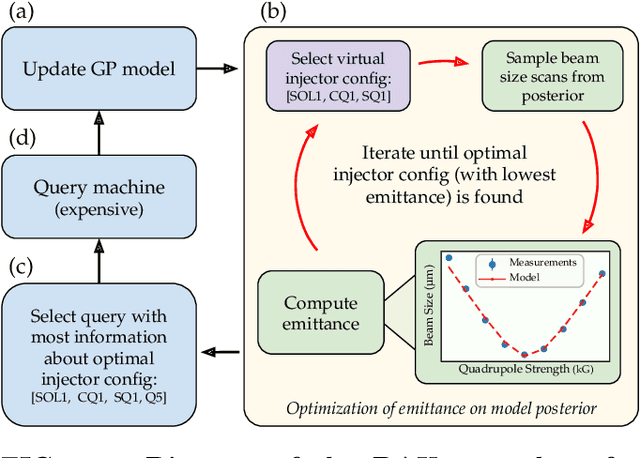

Bayesian Algorithm Execution for Tuning Particle Accelerator Emittance with Partial Measurements

Sep 10, 2022

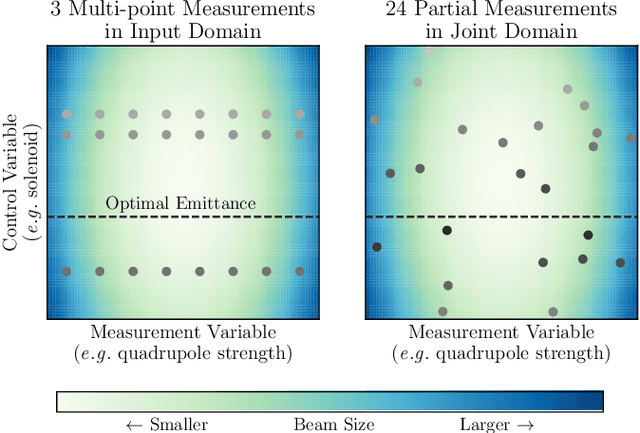

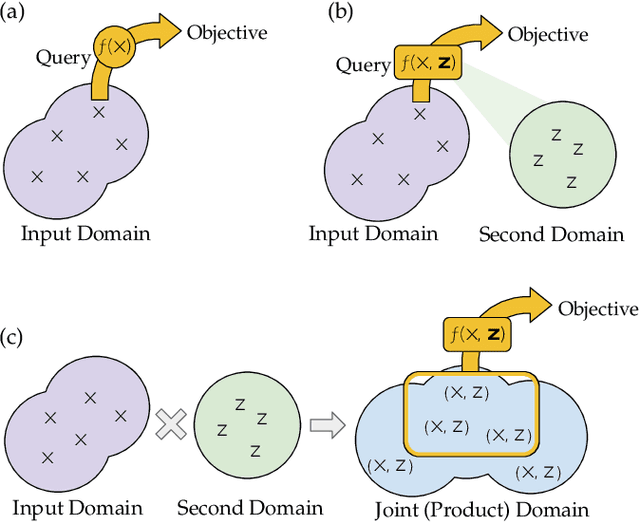

Abstract:Traditional black-box optimization methods are inefficient when dealing with multi-point measurement, i.e. when each query in the control domain requires a set of measurements in a secondary domain to calculate the objective. In particle accelerators, emittance tuning from quadrupole scans is an example of optimization with multi-point measurements. Although the emittance is a critical parameter for the performance of high-brightness machines, including X-ray lasers and linear colliders, comprehensive optimization is often limited by the time required for tuning. Here, we extend the recently-proposed Bayesian Algorithm Execution (BAX) to the task of optimization with multi-point measurements. BAX achieves sample-efficiency by selecting and modeling individual points in the joint control-measurement domain. We apply BAX to emittance minimization at the Linac Coherent Light Source (LCLS) and the Facility for Advanced Accelerator Experimental Tests II (FACET-II) particle accelerators. In an LCLS simulation environment, we show that BAX delivers a 20x increase in efficiency while also being more robust to noise compared to traditional optimization methods. Additionally, we ran BAX live at both LCLS and FACET-II, matching the hand-tuned emittance at FACET-II and achieving an optimal emittance that was 24% lower than that obtained by hand-tuning at LCLS. We anticipate that our approach can readily be adapted to other types of optimization problems involving multi-point measurements commonly found in scientific instruments.
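To illustrate why emittance is a multi-point measurement, the sketch below shows a conventional quadrupole-scan fit: one emittance value requires beam-size measurements at several quadrupole strengths. It assumes a thin-lens quadrupole followed by a drift and noise-free data. It is not the BAX algorithm from the paper, and the function name and numbers are hypothetical.

```python
import numpy as np


def emittance_from_quad_scan(k, sigma_sq, drift):
    """Fit the beam matrix at a thin-lens quadrupole; return geometric emittance.

    k        : integrated quadrupole strengths (1/m), one per scan point
    sigma_sq : squared RMS beam sizes measured at the screen (m^2)
    drift    : quadrupole-to-screen distance (m)
    """
    k = np.asarray(k, dtype=float)
    sigma_sq = np.asarray(sigma_sq, dtype=float)
    r11 = 1.0 - drift * k          # transfer-matrix element for thin lens + drift
    r12 = np.full_like(k, drift)   # transfer-matrix element for thin lens + drift
    # sigma_screen^2 = r11^2 s11 + 2 r11 r12 s12 + r12^2 s22 is linear in
    # the beam-matrix elements (s11, s12, s22), so least squares suffices.
    design = np.column_stack([r11**2, 2.0 * r11 * r12, r12**2])
    (s11, s12, s22), *_ = np.linalg.lstsq(design, sigma_sq, rcond=None)
    return float(np.sqrt(s11 * s22 - s12**2))


# Synthetic scan generated from a known (hypothetical) beam matrix
drift = 2.0
k_scan = np.linspace(-1.0, 2.0, 7)
s11_true, s12_true, s22_true = 1.0e-7, -1.0e-9, 2.0e-11
r11_scan = 1.0 - drift * k_scan
sizes_sq = r11_scan**2 * s11_true + 2.0 * r11_scan * drift * s12_true + drift**2 * s22_true
emittance = emittance_from_quad_scan(k_scan, sizes_sq, drift)
```

Every evaluation of the objective here costs a full scan (seven beam-size measurements in this example), which is the expense the abstract says BAX reduces by choosing individual points in the joint control-measurement domain.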

Neural Network Solver for Coherent Synchrotron Radiation Wakefield Calculations in Accelerator-based Charged Particle Beams

Mar 14, 2022

Abstract:Particle accelerators support a wide array of scientific, industrial, and medical applications. To meet the needs of these applications, accelerator physicists rely heavily on detailed simulations of the complicated particle beam dynamics through the accelerator. One of the most computationally expensive and difficult-to-model effects is the impact of Coherent Synchrotron Radiation (CSR). As a beam travels through a curved trajectory (e.g. due to a bending magnet), it emits radiation that in turn interacts with the rest of the beam. At each step through the trajectory, the electromagnetic field introduced by CSR (called the CSR wakefield) needs to be computed and used when calculating the updates to the positions and momenta of every particle in the beam. CSR is one of the major drivers of growth in the beam emittance, which is a key metric of beam quality that is critical in many applications. The CSR wakefield is very computationally intensive to compute with traditional electromagnetic solvers, and this is a major limitation in accurately simulating accelerators. Here, we demonstrate a new approach for the CSR wakefield computation using a neural network solver structured in a way that is readily generalizable to new setups. We validate its performance by adding it to a standard beam tracking test problem and show a ten-fold speedup along with high accuracy.

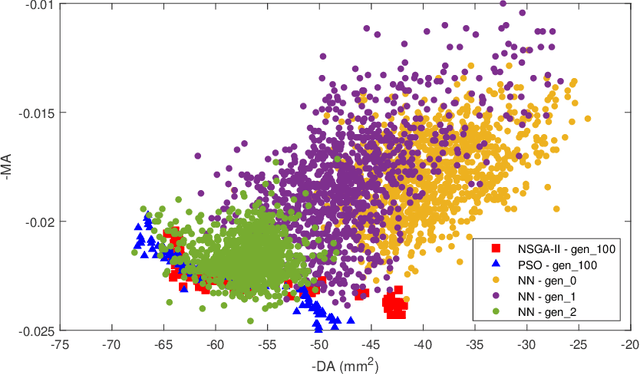

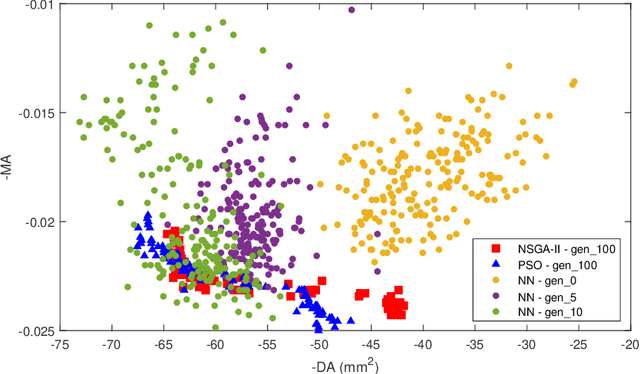

Machine learning for design optimization of storage ring nonlinear dynamics

Oct 31, 2019

Abstract:A novel approach to expedite design optimization of nonlinear beam dynamics in storage rings is proposed and demonstrated in this study. At each iteration, a neural network surrogate model is used to suggest new trial solutions in a multi-objective optimization task. The surrogate model is then updated with the new solutions, and this process is repeated until the final optimized solution is obtained. We apply this approach to optimize the nonlinear beam dynamics of the SPEAR3 storage ring, where sextupole knobs are adjusted to simultaneously improve the dynamic aperture and the momentum aperture. The approach is shown to converge to the Pareto front considerably faster than the genetic and particle swarm algorithms.
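The iterative loop described in the abstract (fit a surrogate, let it suggest trial solutions, evaluate them, update the surrogate) can be sketched as follows. This is a toy illustration under loud assumptions: a quadratic least-squares surrogate stands in for the neural network, two analytic functions stand in for the expensive dynamic-aperture and momentum-aperture evaluations, both objectives are minimized, and all names and settings are hypothetical.

```python
import numpy as np


def true_objectives(x):
    """Hypothetical stand-in for expensive tracking; both outputs are minimized."""
    f1 = np.sum(x**2, axis=-1)
    f2 = np.sum((x - 1.0) ** 2, axis=-1)
    return np.stack([f1, f2], axis=-1)


def features(x):
    """Quadratic features [1, x_i, x_i^2] for the least-squares surrogate."""
    return np.hstack([np.ones((len(x), 1)), x, x**2])


def non_dominated(f):
    """Boolean mask of rows of f that no other row dominates (minimization)."""
    mask = np.ones(len(f), dtype=bool)
    for i in range(len(f)):
        dominates_i = np.all(f <= f[i], axis=1) & np.any(f < f[i], axis=1)
        mask[i] = not dominates_i.any()
    return mask


def surrogate_assisted_search(n_iter=5, n_init=10, n_candidates=500,
                              n_new=5, dim=2, seed=0):
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.0, 2.0, size=(n_init, dim))
    f = true_objectives(x)
    for _ in range(n_iter):
        # Fit the surrogate to all data gathered so far
        weights, *_ = np.linalg.lstsq(features(x), f, rcond=None)
        # Screen many cheap candidates and keep the surrogate's predicted Pareto set
        cand = rng.uniform(-1.0, 2.0, size=(n_candidates, dim))
        pred = features(cand) @ weights
        promising = cand[non_dominated(pred)]
        # Evaluate a few promising candidates on the true model and add them to the data
        n_pick = min(n_new, len(promising))
        picks = promising[rng.choice(len(promising), size=n_pick, replace=False)]
        x = np.vstack([x, picks])
        f = np.vstack([f, true_objectives(picks)])
    keep = non_dominated(f)
    return x[keep], f[keep]


x_front, f_front = surrogate_assisted_search()
```

The design point is that only `n_new` true evaluations are spent per iteration, while the surrogate absorbs the cost of screening hundreds of candidates. In this toy the quadratic surrogate can represent the objectives exactly, which will not hold for real storage-ring dynamics.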
