Assaf Zeevi

Decision, Risk and Operations, Columbia Business School

A Broader View of Thompson Sampling

Oct 08, 2025

Abstract: Thompson Sampling is one of the most widely used and studied bandit algorithms, known for its simple structure, low regret performance, and solid theoretical guarantees. Yet, in stark contrast to most other families of bandit algorithms, the exact mechanism through which posterior sampling (as introduced by Thompson) is able to "properly" balance exploration and exploitation remains a mystery. In this paper we show that the core insight to address this question stems from recasting Thompson Sampling as an online optimization algorithm. To distill this, a key conceptual tool is introduced, which we refer to as "faithful" stationarization of the regret formulation. Essentially, the finite horizon dynamic optimization problem is converted into a stationary counterpart which "closely resembles" the original objective (in contrast, the classical infinite horizon discounted formulation, which leads to the Gittins index, alters the problem and objective in too significant a manner). The newly crafted time invariant objective can be studied using Bellman's principle, which leads to a time invariant optimal policy. When viewed through this lens, Thompson Sampling admits a simple online optimization form that mimics the structure of the Bellman-optimal policy, and where greediness is regularized by a measure of residual uncertainty based on point-biserial correlation. This answers the question of how Thompson Sampling balances exploration-exploitation, and moreover, provides a principled framework to study and further improve Thompson's original idea.
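For readers unfamiliar with the posterior sampling rule the abstract refers to, here is a minimal sketch of textbook Thompson Sampling for Bernoulli rewards with uniform Beta(1, 1) priors. It is not the online optimization reformulation developed in the paper. The function name `thompson_sampling_bernoulli` and its parameters are hypothetical, chosen only for illustration.

```python
import random


def thompson_sampling_bernoulli(true_means, horizon, seed=0):
    """Textbook Beta-Bernoulli Thompson Sampling (illustrative sketch).

    true_means: success probability of each arm (unknown to the learner,
                used here only to simulate rewards).
    Returns (pulls per arm, total reward collected).
    """
    rng = random.Random(seed)
    k = len(true_means)
    successes = [0] * k
    failures = [0] * k
    pulls = [0] * k
    total_reward = 0
    for _ in range(horizon):
        # Draw one sample per arm from its Beta(1 + successes, 1 + failures) posterior.
        samples = [
            rng.betavariate(1 + successes[i], 1 + failures[i]) for i in range(k)
        ]
        # Play the arm whose sampled mean is largest.
        arm = max(range(k), key=lambda i: samples[i])
        # Simulate a Bernoulli reward and update that arm's posterior counts.
        reward = 1 if rng.random() < true_means[arm] else 0
        successes[arm] += reward
        failures[arm] += 1 - reward
        pulls[arm] += 1
        total_reward += reward
    return pulls, total_reward
```

Calling `thompson_sampling_bernoulli([0.1, 0.9], 2000)` returns the pull counts and the total reward. Exploration here comes only from the randomness of the posterior draws, which is the mechanism the abstract sets out to explain.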

Linear Bandits with Partially Observable Features

Feb 10, 2025

Abstract: We introduce a novel linear bandit problem with partially observable features, resulting in partial reward information and spurious estimates. Without properly accounting for the latent features, regret may grow linearly in the decision horizon $T$, since their influence on rewards is unknown. To tackle this, we propose a novel analysis to handle the latent features and an algorithm that achieves sublinear regret. The core of our algorithm involves (i) augmenting basis vectors orthogonal to the observed feature space, and (ii) introducing an efficient doubly robust estimator. Our approach achieves a regret bound of $\tilde{O}(\sqrt{(d + d_h)T})$, where $d$ is the dimension of observed features, and $d_h$ is the unknown dimension of the subspace of the unobserved features. Notably, our algorithm requires no prior knowledge of the unobserved feature space, which may expand as more features become hidden. Numerical experiments confirm that our algorithm outperforms both non-contextual multi-armed bandits and linear bandit algorithms depending solely on observed features.

A Doubly Robust Approach to Sparse Reinforcement Learning

Oct 23, 2023

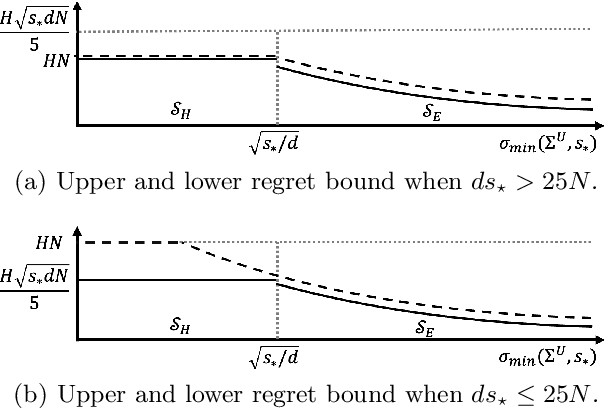

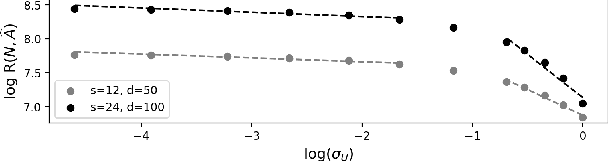

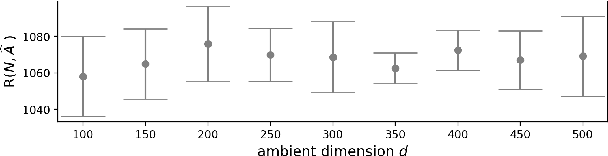

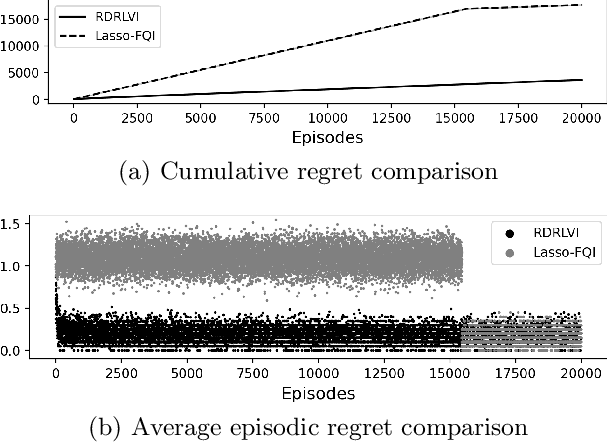

Abstract: We propose a new regret minimization algorithm for the episodic sparse linear Markov decision process (SMDP), where the state-transition distribution is a linear function of observed features. The only previously known algorithm for SMDP requires knowledge of the sparsity parameter and oracle access to an unknown policy. We overcome these limitations by combining the doubly robust method, which allows one to use feature vectors of *all* actions, with a novel analysis technique that enables the algorithm to use data from all periods in all episodes. The regret of the proposed algorithm is $\tilde{O}(\sigma^{-1}_{\min} s_{\star} H \sqrt{N})$, where $\sigma_{\min}$ denotes the restrictive minimum eigenvalue of the average Gram matrix of feature vectors, $s_\star$ is the sparsity parameter, $H$ is the length of an episode, and $N$ is the number of rounds. We provide a lower regret bound that matches the upper bound up to logarithmic factors on a newly identified subclass of SMDPs. Our numerical experiments support our theoretical results and demonstrate the superior performance of our algorithm.

Bayesian Design Principles for Frequentist Sequential Learning

Oct 01, 2023

Abstract: We develop a general theory to optimize the frequentist regret for sequential learning problems, where efficient bandit and reinforcement learning algorithms can be derived from unified Bayesian principles. We propose a novel optimization approach to generate "algorithmic beliefs" at each round, and use Bayesian posteriors to make decisions. The optimization objective to create "algorithmic beliefs," which we term "Algorithmic Information Ratio," represents an intrinsic complexity measure that effectively characterizes the frequentist regret of any algorithm. To the best of our knowledge, this is the first systematic approach to make Bayesian-type algorithms prior-free and applicable to adversarial settings, in a generic and optimal manner. Moreover, the algorithms are simple and often efficient to implement. As a major application, we present a novel algorithm for multi-armed bandits that achieves the "best-of-all-worlds" empirical performance in the stochastic, adversarial, and non-stationary environments. We also illustrate how these principles can be used in linear bandits, bandit convex optimization, and reinforcement learning.

Last Switch Dependent Bandits with Monotone Payoff Functions

Jun 01, 2023

Abstract: In a recent work, Laforgue et al. introduce the model of last switch dependent (LSD) bandits, in an attempt to capture nonstationary phenomena induced by the interaction between the player and the environment. Examples include satiation, where consecutive plays of the same action lead to decreased performance, or deprivation, where the payoff of an action increases after an interval of inactivity. In this work, we take a step towards understanding the approximability of planning LSD bandits, namely, the (NP-hard) problem of computing an optimal arm-pulling strategy under complete knowledge of the model. In particular, we design the first efficient constant approximation algorithm for the problem and show that, under a natural monotonicity assumption on the payoffs, its approximation guarantee (almost) matches the state-of-the-art for the special and well-studied class of recharging bandits (also known as delay-dependent). In this attempt, we develop new tools and insights for this class of problems, including a novel higher-dimensional relaxation and the technique of mirroring the evolution of virtual states. We believe that these novel elements could potentially be used for approaching richer classes of action-induced nonstationary bandits (e.g., special instances of restless bandits). In the case where the model parameters are initially unknown, we develop an online learning adaptation of our algorithm for which we provide sublinear regret guarantees against its full-information counterpart.

Pareto Front Identification with Regret Minimization

May 31, 2023

Abstract: We consider Pareto front identification for linear bandits (PFILin), where the goal is to identify a set of arms whose reward vectors are not dominated by any of the others when the mean reward vector is a linear function of the context. PFILin includes the best arm identification problem and multi-objective active learning as special cases. The sample complexity of our proposed algorithm is $\tilde{O}(d/\Delta^2)$, where $d$ is the dimension of contexts and $\Delta$ is a measure of problem complexity. Our sample complexity is optimal up to a logarithmic factor. A novel feature of our algorithm is that it uses the contexts of all actions. In addition to efficiently identifying the Pareto front, our algorithm also guarantees an $\tilde{O}(\sqrt{d/t})$ bound for instantaneous Pareto regret when the number of samples is larger than $\Omega(d\log dL)$ for $L$-dimensional vector rewards. By using the contexts of all arms, our proposed algorithm simultaneously provides efficient Pareto front identification and regret minimization. Numerical experiments demonstrate that the proposed algorithm successfully identifies the Pareto front while minimizing the regret.
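To make the notion of a non-dominated set of arms concrete, the following sketch computes the Pareto front when the mean reward vectors are already known. It only illustrates the target set and is not the PFILin algorithm, which must estimate these vectors from samples. The helper names `dominates` and `pareto_front` are hypothetical.

```python
def dominates(u, v):
    """Return True if u is at least as large as v in every coordinate
    and strictly larger in at least one."""
    return all(a >= b for a, b in zip(u, v)) and any(a > b for a, b in zip(u, v))


def pareto_front(mean_rewards):
    """Return indices of arms whose mean reward vector is not dominated
    by the mean reward vector of any other arm."""
    return [
        i
        for i, u in enumerate(mean_rewards)
        if not any(dominates(v, u) for j, v in enumerate(mean_rewards) if j != i)
    ]


# Two objectives: the last arm is dominated by (0.5, 0.5).
front = pareto_front([(1.0, 0.0), (0.0, 1.0), (0.5, 0.5), (0.4, 0.4)])

# One objective: the front reduces to the single best arm.
best = pareto_front([(0.2,), (0.9,), (0.5,)])
```

With scalar rewards the front collapses to the best arm, which is one way to see why best arm identification appears as a special case.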

Improved Algorithms for Multi-period Multi-class Packing Problems with Bandit Feedback

Jan 31, 2023

Abstract: We consider the linear contextual multi-class multi-period packing problem (LMMP), where the goal is to pack items such that the total vector of consumption is below a given budget vector and the total value is as large as possible. We consider the setting where the reward and the consumption vector associated with each action are class-dependent linear functions of the context, and the decision-maker receives bandit feedback. LMMP includes linear contextual bandits with knapsacks and online revenue management as special cases. We establish a new, more efficient estimator which guarantees a faster convergence rate and, consequently, a lower regret in such problems. We propose a bandit policy that is a closed-form function of said estimated parameters. When the contexts are non-degenerate, the regret of the proposed policy is sublinear in the context dimension, the number of classes, and the time horizon $T$ when the budget grows at least as $\sqrt{T}$. We also resolve an open problem posed in Agrawal & Devanur (2016), and extend the result to a multi-class setting. Our numerical experiments clearly demonstrate that the performance of our policy is superior to other benchmarks in the literature.

Complexity Analysis of a Countable-armed Bandit Problem

Jan 18, 2023

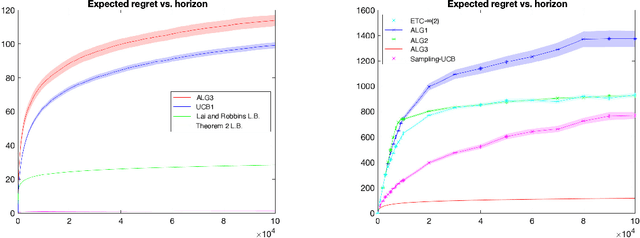

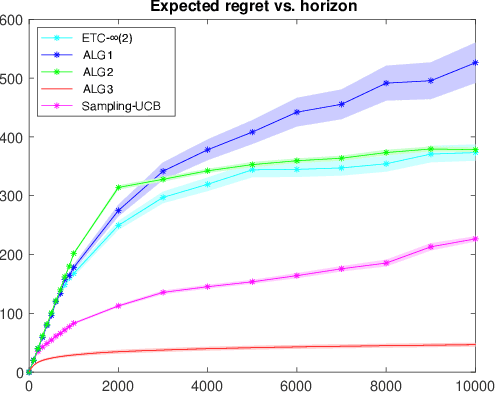

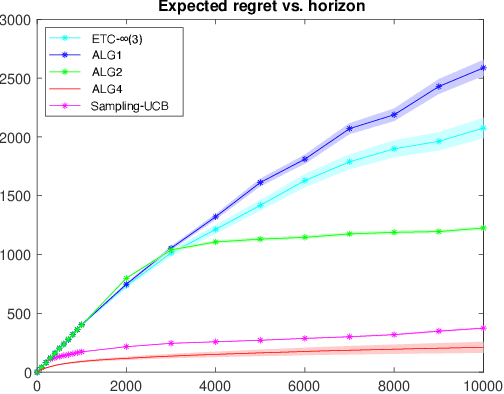

Abstract: We consider a stochastic multi-armed bandit (MAB) problem motivated by "large" action spaces, and endowed with a population of arms containing exactly $K$ arm-types, each characterized by a distinct mean reward. The decision maker is oblivious to the statistical properties of reward distributions as well as the population-level distribution of different arm-types, and is precluded also from observing the type of an arm after play. We study the classical problem of minimizing the expected cumulative regret over a horizon of play $n$, and propose algorithms that achieve a rate-optimal finite-time instance-dependent regret of $\mathcal{O}\left( \log n \right)$. We also show that the instance-independent (minimax) regret is $\tilde{\mathcal{O}}\left( \sqrt{n} \right)$ when $K=2$. While the order of regret and complexity of the problem suggests a great degree of similarity to the classical MAB problem, properties of the performance bounds and salient aspects of algorithm design are quite distinct from the latter, as are the key primitives that determine complexity along with the analysis tools needed to study them.

Online Allocation and Learning in the Presence of Strategic Agents

Sep 25, 2022

Abstract: We study the problem of allocating $T$ sequentially arriving items among $n$ homogeneous agents under the constraint that each agent must receive a pre-specified fraction of all items, with the objective of maximizing the agents' total valuation of items allocated to them. The agents' valuations for the item in each round are assumed to be i.i.d. but their distribution is a priori unknown to the central planner. Therefore, the central planner needs to implicitly learn these distributions from the observed values in order to pick a good allocation policy. However, an added challenge here is that the agents are strategic with incentives to misreport their valuations in order to receive better allocations. This sets our work apart both from the online auction design settings which typically assume known valuation distributions and/or involve payments, and from the online learning settings that do not consider strategic agents. To that end, our main contribution is an online learning based allocation mechanism that is approximately Bayesian incentive compatible, and when all agents are truthful, guarantees a sublinear regret for individual agents' utility compared to that under the optimal offline allocation policy.

The Countable-armed Bandit with Vanishing Arms

Oct 23, 2021

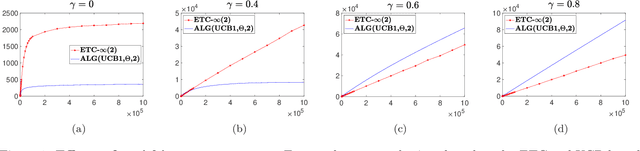

Abstract: We consider a bandit problem with countably many arms, partitioned into finitely many "types," each characterized by a unique mean reward. A "non-stationary" distribution governs the relative abundance of each arm-type in the population of arms, aka the "arm-reservoir." This non-stationarity is attributable to a probabilistic leakage of "optimal" arms from the reservoir over time, which we refer to as the "vanishing arms" phenomenon; this induces a time-varying (potentially "endogenous," policy-dependent) distribution over the reservoir. The objective is minimization of the expected cumulative regret. We characterize necessary and sufficient conditions for achievability of sub-linear regret in terms of a critical vanishing rate of optimal arms. We also discuss two reservoir distribution-oblivious algorithms that are long-run-average optimal whenever sub-linear regret is statistically achievable. Numerical experiments highlight a distinctive characteristic of this problem related to ex ante knowledge of the "gap" parameter (the difference between the top two mean rewards): in contrast to the stationary bandit formulation, regret in our setting may suffer substantial inflation under adaptive exploration-based (gap-oblivious) algorithms such as UCB vis-à-vis their non-adaptive forced exploration-based (gap-aware) counterparts like ETC.
