Aseem Srivastava

Figurative-cum-Commonsense Knowledge Infusion for Multimodal Mental Health Meme Classification

Jan 25, 2025

Abstract: The expression of mental health symptoms through non-traditional means, such as memes, has gained remarkable attention over the past few years, with users often highlighting their mental health struggles through figurative intricacies within memes. While humans rely on commonsense knowledge to interpret these complex expressions, current Multimodal Language Models (MLMs) struggle to capture these figurative aspects inherent in memes. To address this gap, we introduce a novel dataset, AxiOM, derived from the GAD anxiety questionnaire, which categorizes memes into six fine-grained anxiety symptoms. Next, we propose a commonsense and domain-enriched framework, M3H, to enhance MLMs' ability to interpret figurative language and commonsense knowledge. The overarching goal remains to first understand and then classify the mental health symptoms expressed in memes. We benchmark M3H against 6 competitive baselines (with 20 variations), demonstrating improvements in both quantitative and qualitative metrics, including a detailed human evaluation. We observe a clear improvement of 4.20% and 4.66% on the weighted-F1 metric. To assess the generalizability, we perform extensive experiments on a public dataset, RESTORE, for depressive symptom identification, presenting an extensive ablation study that highlights the contribution of each module in both datasets. Our findings reveal limitations in existing models and the advantage of employing commonsense to enhance figurative understanding.
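The weighted-F1 metric mentioned in this abstract averages per-class F1 scores, weighting each class by its share of the true labels. The following is a minimal sketch of that standard definition in plain Python; the function name `weighted_f1` and the label strings are illustrative assumptions, not taken from the paper.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Support-weighted mean of per-class F1 scores.

    Illustrative helper (hypothetical name); classes are taken from y_true.
    """
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for label, n in support.items():
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = n - tp
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0
        # Each class contributes in proportion to its number of true samples.
        score += (n / total) * f1
    return score


# Hypothetical labels, for illustration only.
truth = ["symptom_a", "symptom_a", "symptom_b", "symptom_b"]
preds = ["symptom_a", "symptom_b", "symptom_b", "symptom_b"]
print(round(weighted_f1(truth, preds), 4))
```

Because the weights follow class frequency, frequent classes dominate the score, which is why it is commonly reported on imbalanced label sets.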

Trust Modeling in Counseling Conversations: A Benchmark Study

Jan 06, 2025

Abstract: In mental health counseling, a variety of earlier studies have focused on dialogue modeling. However, most of these studies give limited to no emphasis on the quality of interaction between a patient and a therapist. The therapeutic bond between a patient and a therapist directly correlates with effective mental health counseling. It involves developing the patient's trust in the therapist over the course of counseling. To assess the therapeutic bond in counseling, we introduce trust as a therapist-assistive metric. Our definition of trust involves patients' willingness and openness to express themselves and, consequently, receive better care. We conceptualize it as a dynamic trajectory observable through textual interactions during the counseling. To facilitate trust modeling, we present MENTAL-TRUST, a novel counseling dataset comprising manual annotation of 212 counseling sessions with first-of-its-kind seven expert-verified ordinal trust levels. We project our problem statement as an ordinal classification task for trust quantification and propose a new benchmark, TrustBench, comprising a suite of classical and state-of-the-art language models on MENTAL-TRUST. We evaluate the performance across a suite of metrics and lay out an exhaustive set of findings. Our study aims to unfold how trust evolves in therapeutic interactions.

Sentiment-guided Commonsense-aware Response Generation for Mental Health Counseling

Jan 06, 2025

Abstract: The crisis of mental health issues is escalating. Effective counseling serves as a critical lifeline for individuals suffering from conditions like PTSD, stress, etc. Therapists forge a crucial therapeutic bond with clients, steering them towards positivity. Unfortunately, the massive shortage of professionals, high costs, and mental health stigma pose significant barriers to consulting therapists. As a substitute, Virtual Mental Health Assistants (VMHAs) have emerged in the digital healthcare space. However, most existing VMHAs lack the commonsense to understand the nuanced sentiments of clients to generate effective responses. To this end, we propose EmpRes, a novel sentiment-guided mechanism incorporating commonsense awareness for generating responses. By leveraging foundation models and harnessing commonsense knowledge, EmpRes aims to generate responses that effectively shape the client's sentiment towards positivity. We evaluate the performance of EmpRes on HOPE, a benchmark counseling dataset, and observe a remarkable performance improvement compared to the existing baselines across a suite of qualitative and quantitative metrics. Moreover, our extensive empirical analysis and human evaluation show that the generation ability of EmpRes is well-suited and, in some cases, surpasses the gold standard. Further, we deploy EmpRes as a chat interface for users seeking mental health support. We address the deployed system's effectiveness through an exhaustive user study with a significant positive response. Our findings show that 91% of users find the system effective, 80% express satisfaction, and over 85.45% convey a willingness to continue using the interface and recommend it to others, demonstrating the practical applicability of EmpRes in addressing the pressing challenges of mental health support, emphasizing user feedback, and ethical considerations in a real-world context.

Exploring the Efficacy of Large Language Models in Summarizing Mental Health Counseling Sessions: A Benchmark Study

Feb 29, 2024

Abstract: Comprehensive summaries of sessions enable an effective continuity in mental health counseling, facilitating informed therapy planning. Yet, manual summarization presents a significant challenge, diverting experts' attention from the core counseling process. This study evaluates the effectiveness of state-of-the-art Large Language Models (LLMs) in selectively summarizing various components of therapy sessions through aspect-based summarization, aiming to benchmark their performance. We introduce MentalCLOUDS, a counseling-component guided summarization dataset consisting of 191 counseling sessions with summaries focused on three distinct counseling components (aka counseling aspects). Additionally, we assess the capabilities of 11 state-of-the-art LLMs in addressing the task of component-guided summarization in counseling. The generated summaries are evaluated quantitatively using standard summarization metrics and verified qualitatively by mental health professionals. Our findings demonstrate the superior performance of task-specific LLMs such as MentalLlama, Mistral, and MentalBART in terms of standard quantitative metrics such as Rouge-1, Rouge-2, Rouge-L, and BERTScore across all aspects of counseling components. Further, expert evaluation reveals that Mistral outperforms both MentalLlama and MentalBART based on six parameters -- affective attitude, burden, ethicality, coherence, opportunity costs, and perceived effectiveness. However, these models share the same weakness by demonstrating a potential for improvement in the opportunity costs and perceived effectiveness metrics.

Critical Behavioral Traits Foster Peer Engagement in Online Mental Health Communities

Sep 04, 2023

Abstract: Online Mental Health Communities (OMHCs), such as Reddit, have witnessed a surge in popularity as go-to platforms for seeking information and support in managing mental health needs. Platforms like Reddit offer immediate interactions with peers, granting users a vital space for seeking mental health assistance. However, the largely unregulated nature of these platforms introduces intricate challenges for both users and society at large. This study explores the factors that drive peer engagement within counseling threads, aiming to enhance our understanding of this critical phenomenon. We introduce BeCOPE, a novel behavior encoded Peer counseling dataset comprising over 10,118 posts and 58,279 comments sourced from 21 mental health-specific subreddits. The dataset is annotated using three major fine-grained behavior labels: (a) intent, (b) criticism, and (c) readability, along with the emotion labels. Our analysis indicates the prominence of "self-criticism" as the most prevalent form of criticism expressed by help-seekers, accounting for a significant 43% of interactions. Intriguingly, we observe that individuals who explicitly express their need for help are 18.01% more likely to receive assistance compared to those who present "surveys" or engage in "rants." Furthermore, we highlight the pivotal role of well-articulated problem descriptions, showing that superior readability effectively doubles the likelihood of receiving the sought-after support. Our study emphasizes the essential role of OMHCs in offering personalized guidance and unveils behavior-driven engagement patterns.

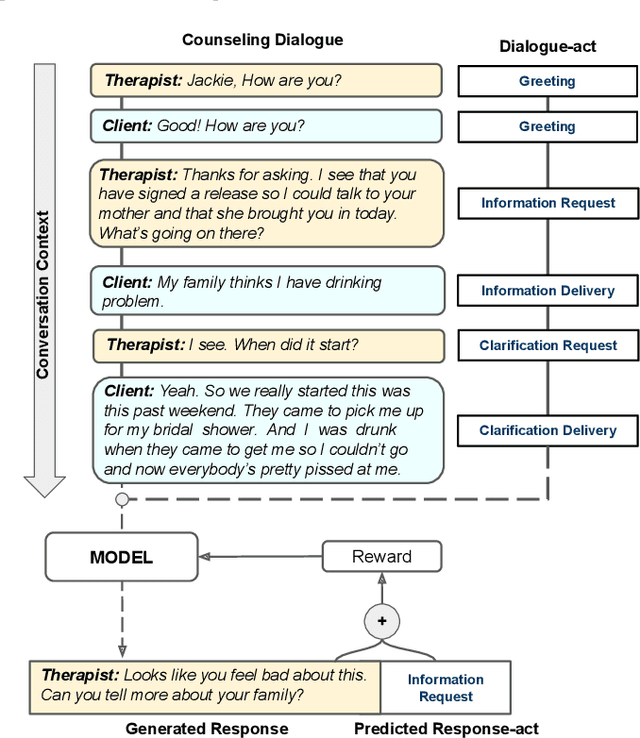

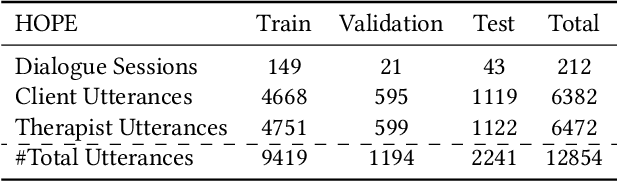

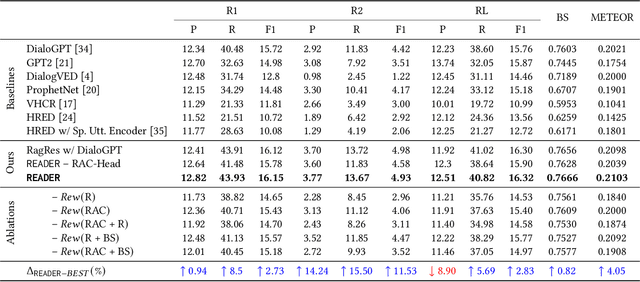

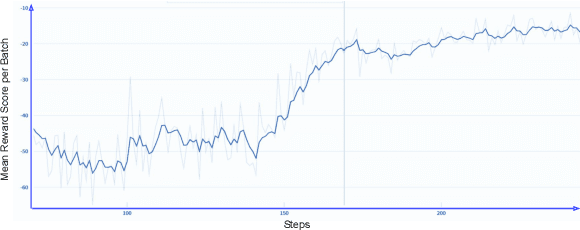

Response-act Guided Reinforced Dialogue Generation for Mental Health Counseling

Jan 30, 2023

Abstract: Virtual Mental Health Assistants (VMHAs) have become a prevalent method for receiving mental health counseling in the digital healthcare space. An assistive counseling conversation commences with natural open-ended topics to familiarize the client with the environment and later converges into more fine-grained domain-specific topics. Unlike other conversational systems, which are categorized as open-domain or task-oriented systems, VMHAs possess a hybrid conversational flow. These counseling bots need to comprehend various aspects of the conversation, such as dialogue-acts, intents, etc., to engage the client in an effective conversation. Although the surge in digital health research highlights applications of many general-purpose response generation systems, they are barely suitable in the mental health domain -- the prime reason is the lack of understanding in mental health counseling. Moreover, in general, dialogue-act guided response generators are either limited to a template-based paradigm or lack appropriate semantics. To this end, we propose READER -- a REsponse-Act guided reinforced Dialogue genERation model for mental health counseling conversations. READER is built on a transformer to jointly predict a potential dialogue-act d(t+1) for the next utterance (aka response-act) and to generate an appropriate response u(t+1). Through transformer-reinforcement-learning (TRL) with Proximal Policy Optimization (PPO), we guide the response generator to abide by d(t+1) and ensure the semantic richness of the responses via BERTScore in our reward computation. We evaluate READER on HOPE, a benchmark counseling conversation dataset, and observe that it outperforms several baselines across several evaluation metrics -- METEOR, ROUGE, and BERTScore. We also furnish extensive qualitative and quantitative analyses of the results, including error analysis, human evaluation, etc.
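The abstract says the reward combines adherence to the response-act d(t+1) with a BERTScore-based semantic term, but it does not give the formula. The sketch below shows one possible way such a reward could be composed, purely as an illustration: the function `response_reward`, the convex weighting `act_weight`, and the label strings are all assumptions, and the semantic score is passed in as a precomputed number rather than computed with any particular library.

```python
def response_reward(predicted_act, target_act, semantic_score, act_weight=0.5):
    """Blend a dialogue-act match with a semantic-similarity score.

    Hypothetical reward shape, not the formula from the paper.
    semantic_score is assumed to be a precomputed similarity in [0, 1]
    (for example, a BERTScore F1 against the reference response).
    """
    if not 0.0 <= act_weight <= 1.0:
        raise ValueError("act_weight must lie in [0, 1]")
    # 1.0 when the generated response carries the intended response-act.
    act_match = 1.0 if predicted_act == target_act else 0.0
    return act_weight * act_match + (1.0 - act_weight) * semantic_score


# Hypothetical dialogue-act labels, for illustration only.
print(response_reward("information_request", "information_request", 0.8))
print(response_reward("greeting", "information_request", 0.8))
```

In a PPO loop, a scalar of this kind would be supplied as the per-response reward; how READER actually weights or shapes its terms is specified in the paper, not here.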

Counseling Summarization using Mental Health Knowledge Guided Utterance Filtering

Jun 08, 2022

Abstract: The psychotherapy intervention technique is a multifaceted conversation between a therapist and a patient. Unlike general clinical discussions, psychotherapy's core components (viz. symptoms) are hard to distinguish, thus becoming a complex problem to summarize later. A structured counseling conversation may contain discussions about symptoms, history of mental health issues, or the discovery of the patient's behavior. It may also contain discussion filler words irrelevant to a clinical summary. We refer to these elements of structured psychotherapy as counseling components. In this paper, the aim is mental health counseling summarization to build upon domain knowledge and to help clinicians quickly glean meaning. We create a new dataset after annotating 12.9K utterances of counseling components and reference summaries for each dialogue. Further, we propose ConSum, a novel counseling-component guided summarization model. ConSum comprises three independent modules. First, to assess the presence of depressive symptoms, it filters utterances utilizing the Patient Health Questionnaire (PHQ-9), while the second and third modules aim to classify counseling components. Finally, we propose a problem-specific Mental Health Information Capture (MHIC) evaluation metric for counseling summaries. Our comparative study shows that we improve on performance and generate cohesive, semantic, and coherent summaries. We comprehensively analyze the generated summaries to investigate the capturing of psychotherapy elements. Human and clinical evaluations of the summaries show that ConSum generates quality summaries. Further, mental health experts validate the clinical acceptability of ConSum. Lastly, we discuss the uniqueness of mental health counseling summarization in the real world and show evidence of its deployment on an online application with the support of mpathic.ai.

A Computational Approach to Understand Mental Health from Reddit: Knowledge-aware Multitask Learning Framework

Mar 22, 2022

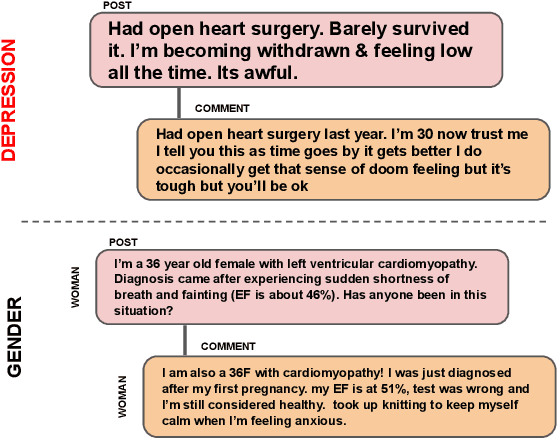

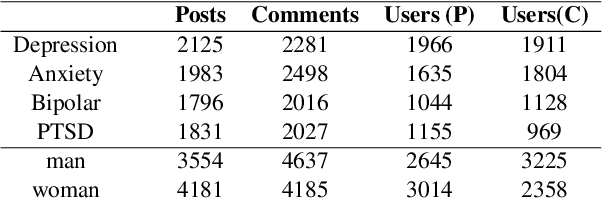

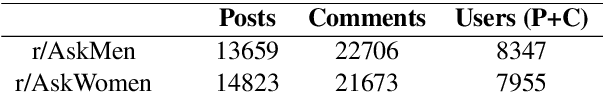

Abstract: Analyzing gender is critical to study mental health (MH) support in CVD (cardiovascular disease). The existing studies on using social media for extracting MH symptoms consider symptom detection and tend to ignore user context, disease, or gender. The current study aims to design and evaluate a system to capture how MH symptoms associated with CVD are expressed differently by gender on social media. We observe that the reliable detection of MH symptoms expressed by persons with heart disease in user posts is challenging because of the co-existence of (dis)similar MH symptoms in one post and due to variation in the description of symptoms based on gender. We collect a corpus of 150k items (posts and comments) annotated using the subreddit labels and transfer learning approaches. We propose GeM, a novel task-adaptive multi-task learning approach to identify the MH symptoms in CVD patients based on gender. Specifically, we adapt a knowledge-assisted RoBERTa-based bi-encoder model to capture CVD-related MH symptoms. Moreover, it enhances the reliability for differentiating the gender language in MH symptoms when compared to the state-of-the-art language models. Our model achieves high (statistically significant) performance and predicts four labels of MH issues and two gender labels, which outperforms RoBERTa, improving the recall by 2.14% on the symptom identification task and by 2.55% on the gender identification task.

Speaker and Time-aware Joint Contextual Learning for Dialogue-act Classification in Counselling Conversations

Nov 12, 2021

Abstract: The onset of the COVID-19 pandemic has put people's mental health at risk. Social counselling has gained remarkable significance in this environment. Unlike general goal-oriented dialogues, a conversation between a patient and a therapist is considerably implicit, though the objective of the conversation is quite apparent. In such a case, understanding the intent of the patient is imperative in providing effective counselling in therapy sessions, and the same applies to a dialogue system as well. In this work, we take a small but important step in the development of an automated dialogue system for mental-health counselling. We develop a novel dataset, named HOPE, to provide a platform for the dialogue-act classification in counselling conversations. We identify the requirement of such conversation and propose twelve domain-specific dialogue-act (DAC) labels. We collect 12.9K utterances from publicly-available counselling session videos on YouTube, extract their transcripts, clean, and annotate them with DAC labels. Further, we propose SPARTA, a transformer-based architecture with a novel speaker- and time-aware contextual learning for the dialogue-act classification. Our evaluation shows convincing performance over several baselines, achieving state-of-the-art on HOPE. We also supplement our experiments with extensive empirical and qualitative analyses of SPARTA.
