Arun Kumar

Computer Science and Engineering, University of California San Diego, San Diego, USA

ZEST: Zero-shot Embodied Skill Transfer for Athletic Robot Control

Jan 30, 2026

Abstract:Achieving robust, human-like whole-body control on humanoid robots for agile, contact-rich behaviors remains a central challenge, demanding heavy per-skill engineering and a brittle process of tuning controllers. We introduce ZEST (Zero-shot Embodied Skill Transfer), a streamlined motion-imitation framework that trains policies via reinforcement learning from diverse sources -- high-fidelity motion capture, noisy monocular video, and non-physics-constrained animation -- and deploys them to hardware zero-shot. ZEST generalizes across behaviors and platforms while avoiding contact labels, reference or observation windows, state estimators, and extensive reward shaping. Its training pipeline combines adaptive sampling, which focuses training on difficult motion segments, and an automatic curriculum using a model-based assistive wrench, together enabling dynamic, long-horizon maneuvers. We further provide a procedure for selecting joint-level gains from approximate analytical armature values for closed-chain actuators, along with a refined model of actuators. Trained entirely in simulation with moderate domain randomization, ZEST demonstrates remarkable generality. On Boston Dynamics' Atlas humanoid, ZEST learns dynamic, multi-contact skills (e.g., army crawl, breakdancing) from motion capture. It transfers expressive dance and scene-interaction skills, such as box-climbing, directly from videos to Atlas and the Unitree G1. Furthermore, it extends across morphologies to the Spot quadruped, enabling acrobatics, such as a continuous backflip, through animation. Together, these results demonstrate robust zero-shot deployment across heterogeneous data sources and embodiments, establishing ZEST as a scalable interface between biological movements and their robotic counterparts.

Multi-RADS Synthetic Radiology Report Dataset and Head-to-Head Benchmarking of 41 Open-Weight and Proprietary Language Models

Jan 06, 2026

Abstract:Background: Reporting and Data Systems (RADS) standardize radiology risk communication but automated RADS assignment from narrative reports is challenging because of guideline complexity, output-format constraints, and limited benchmarking across RADS frameworks and model sizes. Purpose: To create RXL-RADSet, a radiologist-verified synthetic multi-RADS benchmark, and compare validity and accuracy of open-weight small language models (SLMs) with a proprietary model for RADS assignment. Materials and Methods: RXL-RADSet contains 1,600 synthetic radiology reports across 10 RADS (BI-RADS, CAD-RADS, GB-RADS, LI-RADS, Lung-RADS, NI-RADS, O-RADS, PI-RADS, TI-RADS, VI-RADS) and multiple modalities. Reports were generated by LLMs using scenario plans and simulated radiologist styles and underwent two-stage radiologist verification. We evaluated 41 quantized SLMs (12 families, 0.135-32B parameters) and GPT-5.2 under a fixed guided prompt. Primary endpoints were validity and accuracy; a secondary analysis compared guided versus zero-shot prompting. Results: Under guided prompting GPT-5.2 achieved 99.8% validity and 81.1% accuracy (1,600 predictions). Pooled SLMs (65,600 predictions) achieved 96.8% validity and 61.1% accuracy; top SLMs in the 20-32B range reached ~99% validity and mid-to-high 70% accuracy. Performance scaled with model size (inflection between <1B and >=10B) and declined with RADS complexity primarily due to classification difficulty rather than invalid outputs. Guided prompting improved validity (99.2% vs 96.7%) and accuracy (78.5% vs 69.6%) compared with zero-shot. Conclusion: RXL-RADSet provides a radiologist-verified multi-RADS benchmark; large SLMs (20-32B) can approach proprietary-model performance under guided prompting, but gaps remain for higher-complexity schemes.

Analysis of Null Related Beampattern Measures and Signal Quantization Effects for Linear Differential Microphone Arrays

Jun 26, 2025

Abstract:A differential microphone array (DMA) offers enhanced capabilities to obtain sharp nulls at the cost of relatively broad peaks in the beam power pattern. This can be used for applications that require nullification or attenuation of interfering sources. To the best of our knowledge, the existing literature lacks measures that directly assess the efficacy of nulls, and null-related measures have not been investigated in the context of DMAs. This paper offers new insights about the utility of DMAs by proposing measures that characterize the nulls in their beam power patterns. We investigate the performance of differential beamformers by presenting and evaluating null-related measures, namely null depth (ND) and null width (NW), as a function of depth level relative to the beam power pattern maxima. A study of signal quantization effects due to data acquisition is presented for 1st, 2nd, and 3rd order linear DMAs and for different beampatterns (dipole, cardioid, hypercardioid, and supercardioid). An analytical expression for the quantized beamformed output for any general $N^{\text{th}}$ order DMA is formulated. Simulation results of the variation of ND with the number of quantization bits and the variation of NW as a function of depth are also presented, and inferences are drawn. Lab experiments are conducted in a fully anechoic room to support the simulation results. The measured beampattern exhibits a pronounced null depth, confirming the effectiveness of the experimental setup.

Effect of Signal Quantization on Performance Measures of a 1st Order One Dimensional Differential Microphone Array

Jun 18, 2025

Abstract:In practical systems, recorded analog signals must be digitized for processing, introducing quantization as a critical aspect of data acquisition. While prior studies have examined quantization effects in various signal processing contexts, its impact on differential microphone arrays (DMAs), particularly in one-dimensional (1D) first-order configurations, remains unexplored. This paper investigates the influence of signal quantization on the performance of first-order 1D DMAs across various beampatterns. An analytical expression for the quantized beamformed output of a first-order 1D DMA has been formulated. The effect of signal quantization has been studied on array performance measures such as the beampattern, directivity factor (DF), front-to-back ratio (FBR), and null depth (ND). Simulation results reveal that beampattern shape remains structurally invariant across quantization bit depths, with quantization primarily affecting ND. DF and FBR remain constant as the number of quantization bits varies. Additionally, ND is shown to be frequency-independent; however, it increases with increasing quantization bit depth, enhancing interference suppression. The study also examines the effect of steering nulls across the azimuthal range, showing that ND degrades as the null moves closer to the source look direction, indicating reduced interference suppression.

Structured Representation

May 17, 2025

Abstract:Invariant representations are core to representation learning, yet a central challenge remains: uncovering invariants that are stable and transferable without suppressing task-relevant signals. This raises fundamental questions, requiring further inquiry, about the appropriate level of abstraction at which such invariants should be defined, and which aspects of a system they should characterize. Interpretation of the environment relies on abstract knowledge structures to make sense of the current state, which leads to interactions, essential drivers of learning and knowledge acquisition. We posit that interpretation operates at the level of higher-order relational knowledge; hence, invariant structures must be where knowledge resides, specifically, as partitions defined by the closure of relational paths within an abstract knowledge space. These partitions serve as the core invariant representations, forming the structural substrate where knowledge is stored and learning occurs. On the other hand, inter-partition connectors enable the deployment of these knowledge partitions encoding task-relevant transitions. Thus, invariant partitions provide the foundational primitives of structured representation. We formalize the computational foundations for structured representation of the invariant partitions based on a closed semiring, a relational algebraic structure.

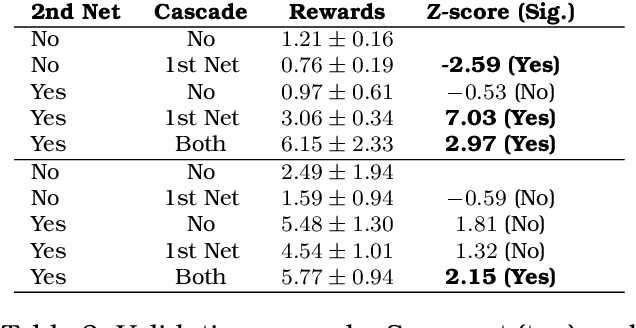

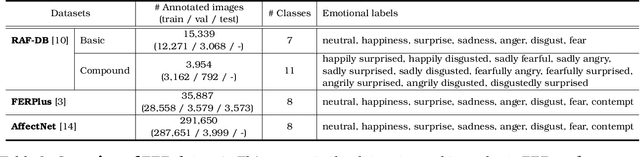

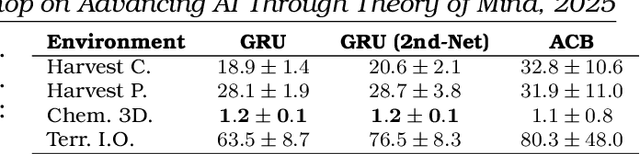

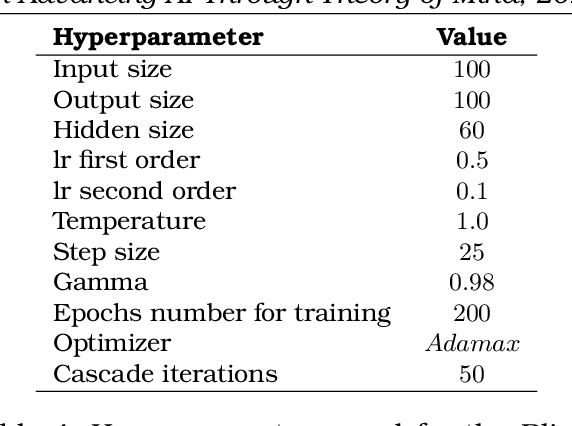

Proceedings of 1st Workshop on Advancing Artificial Intelligence through Theory of Mind

Apr 28, 2025

Abstract:This volume includes a selection of papers presented at the Workshop on Advancing Artificial Intelligence through Theory of Mind, held at AAAI 2025 in Philadelphia, US, on 3rd March 2025. The purpose of this volume is to provide an open-access, curated anthology for the ToM and AI research community.

Unseen Object Reasoning with Shared Appearance Cues

Jun 21, 2024

Abstract:This paper introduces an innovative approach to open world recognition (OWR), where we leverage knowledge acquired from known objects to address the recognition of previously unseen objects. The traditional method of object modeling relies on supervised learning with strict closed-set assumptions, presupposing that objects encountered during inference are already known at the training phase. However, this assumption proves inadequate for real-world scenarios due to the impracticality of accounting for the immense diversity of objects. Our hypothesis posits that object appearances can be represented as collections of "shareable" mid-level features, arranged in constellations to form object instances. By adopting this framework, we can efficiently dissect and represent both known and unknown objects in terms of their appearance cues. Our paper introduces a straightforward yet elegant method for modeling novel or unseen objects, utilizing established appearance cues and accounting for inherent uncertainties. This representation not only enables the detection of out-of-distribution objects or novel categories among unseen objects but also facilitates a deeper level of reasoning, empowering the identification of the superclass to which an unknown instance belongs. This novel approach holds promise for advancing open world recognition in diverse applications.

KIX: A Metacognitive Generalization Framework

Feb 08, 2024

Abstract:Humans and other animals aptly exhibit general intelligence behaviors in solving a variety of tasks, with the flexibility and ability to adapt to novel situations by reusing and applying high-level knowledge acquired over time. Artificial agents, by contrast, tend to be specialists that lack such generalist behaviors. Artificial agents will require understanding and exploiting critical structured knowledge representations. We present a metacognitive generalization framework, Knowledge-Interaction-eXecution (KIX), and argue that interactions with objects leveraging type space facilitate the learning of transferable interaction concepts and generalization. It is a natural way of integrating knowledge into reinforcement learning and a promising enabler of autonomous and generalist behaviors in artificial intelligence systems.

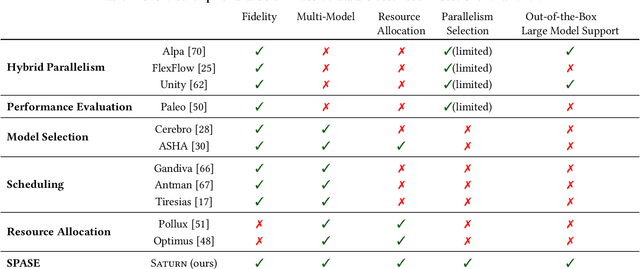

Saturn: Efficient Multi-Large-Model Deep Learning

Nov 06, 2023

Abstract:In this paper, we propose Saturn, a new data system to improve the efficiency of multi-large-model training (e.g., during model selection/hyperparameter optimization). We first identify three key interconnected systems challenges for users building large models in this setting -- parallelism technique selection, distribution of GPUs over jobs, and scheduling. We then formalize these as a joint problem, and build a new system architecture to tackle these challenges simultaneously. Our evaluations show that our joint-optimization approach yields 39-49% lower model selection runtimes than typical current DL practice.

Saturn: An Optimized Data System for Large Model Deep Learning Workloads

Sep 03, 2023

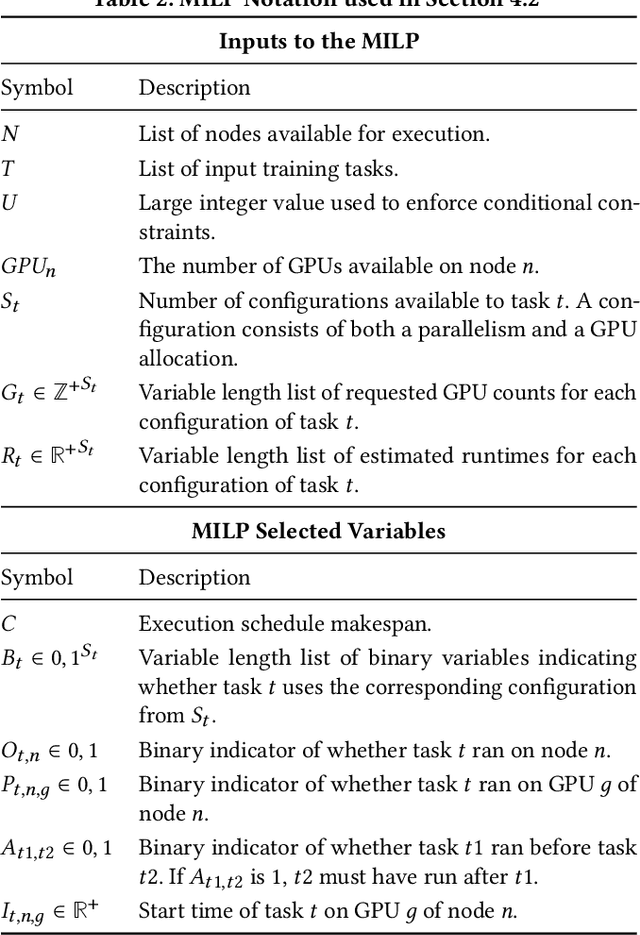

Abstract:Large language models such as GPT-3 & ChatGPT have transformed deep learning (DL), powering applications that have captured the public's imagination. These models are rapidly being adopted across domains for analytics on various modalities, often by finetuning pre-trained base models. Such models need multiple GPUs due to both their size and computational load, driving the development of a bevy of "model parallelism" techniques & tools. Navigating such parallelism choices, however, is a new burden for end users of DL such as data scientists, domain scientists, etc. who may lack the necessary systems knowhow. The need for model selection, which leads to many models to train due to hyper-parameter tuning or layer-wise finetuning, compounds the situation with two more burdens: resource apportioning and scheduling. In this work, we tackle these three burdens for DL users in a unified manner by formalizing them as a joint problem that we call SPASE: Select a Parallelism, Allocate resources, and SchedulE. We propose a new information system architecture to tackle the SPASE problem holistically, representing a key step toward enabling wider adoption of large DL models. We devise an extensible template for existing parallelism schemes and combine it with an automated empirical profiler for runtime estimation. We then formulate SPASE as an MILP. We find that direct use of an MILP-solver is significantly more effective than several baseline heuristics. We optimize the system runtime further with an introspective scheduling approach. We implement all these techniques into a new data system we call Saturn. Experiments with benchmark DL workloads show that Saturn achieves 39-49% lower model selection runtimes than typical current DL practice.
