Artur Dubrawski

Carnegie Mellon University, Auton Lab, The Robotics Institute, Pittsburgh, USA

Multimodal Bayesian Network for Robust Assessment of Casualties in Autonomous Triage

Dec 21, 2025

Abstract:Mass Casualty Incidents (MCIs) can overwhelm emergency medical systems, and the resulting delays or errors in the assessment of casualties can lead to preventable deaths. We present a decision support framework that fuses outputs from multiple computer vision models, estimating signs of severe hemorrhage, respiratory distress, physical alertness, or visible trauma, into a Bayesian network constructed entirely from expert-defined rules. Unlike traditional data-driven models, our approach does not require training data, supports inference with incomplete information, and is robust to noisy or uncertain observations. We report performance for two missions involving 11 and 9 casualties, respectively, where our Bayesian network model substantially outperformed vision-only baselines during evaluation of our system in the DARPA Triage Challenge (DTC) field scenarios. The accuracy of physiological assessment improved from 15% to 42% in the first scenario and from 19% to 46% in the second, representing a nearly threefold increase in performance. More importantly, overall triage accuracy increased from 14% to 53% across all patients, while the diagnostic coverage of the system expanded from 31% to 95% of the cases requiring assessment. These results demonstrate that expert-knowledge-guided probabilistic reasoning can significantly enhance automated triage systems, offering a promising approach to supporting emergency responders in MCIs. This approach enabled Team Chiron to achieve 4th place out of 11 teams during the 1st physical round of the DTC.
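As a rough illustration of how expert-defined rules can support inference with incomplete information, the sketch below fuses binary detector outputs for a single hidden condition using Bayes' rule and simply skips detectors that produced no output. It is not the network from the paper: the detector names, the prior, and the true/false positive rates are all hypothetical values chosen for illustration, and the detectors are assumed conditionally independent given the condition.

```python
from typing import Dict, Optional

# Hypothetical expert-defined parameters (illustrative only, not from the paper).
PRIOR = 0.3  # assumed prior probability that the condition is present

# For each detector: (P(fires | condition present), P(fires | condition absent)).
DETECTORS = {
    "hemorrhage_vision": (0.8, 0.1),
    "respiratory_distress": (0.6, 0.2),
    "low_alertness": (0.7, 0.3),
}


def posterior(observations: Dict[str, Optional[bool]], prior: float = PRIOR) -> float:
    """Return P(condition present | observations).

    A value of None means the detector gave no output; it is skipped,
    which is equivalent to marginalizing that observation out.
    """
    p_present, p_absent = prior, 1.0 - prior
    for name, fired in observations.items():
        if fired is None:
            continue  # missing observation: contributes no evidence
        tpr, fpr = DETECTORS[name]
        p_present *= tpr if fired else 1.0 - tpr
        p_absent *= fpr if fired else 1.0 - fpr
    return p_present / (p_present + p_absent)


if __name__ == "__main__":
    # Only one of three detectors reported; the others are missing.
    print(posterior({"hemorrhage_vision": True,
                     "respiratory_distress": None,
                     "low_alertness": None}))
```

With no observations at all, the function returns the prior, so a casualty can still receive an assessment when some vision models fail to report.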

STAMP: Spatial-Temporal Adapter with Multi-Head Pooling

Nov 13, 2025

Abstract:Time series foundation models (TSFMs) pretrained on data from multiple domains have shown strong performance on diverse modeling tasks. Various efforts have been made to develop foundation models specific to electroencephalography (EEG) data, which records brain electrical activity as time series. However, no comparative analysis of EEG-specific foundation models (EEGFMs) versus general TSFMs has been performed on EEG-specific tasks. We introduce a novel Spatial-Temporal Adapter with Multi-Head Pooling (STAMP), which leverages univariate embeddings produced by a general TSFM, implicitly models spatial-temporal characteristics of EEG data, and achieves performance comparable to state-of-the-art EEGFMs. A comprehensive analysis is performed on 8 benchmark datasets of clinical tasks using EEG for classification, along with ablation studies. Our proposed adapter is lightweight in trainable parameters and flexible in the inputs it can accommodate, supporting easy modeling of EEG data using TSFMs.

Automatic Cannulation of Femoral Vessels in a Porcine Shock Model

Jun 17, 2025

Abstract:Rapid and reliable vascular access is critical in trauma and critical care. Central vascular catheterization enables high-volume resuscitation, hemodynamic monitoring, and advanced interventions like ECMO and REBOA. While peripheral access is common, central access is often necessary but requires specialized ultrasound-guided skills, posing challenges in prehospital settings. The complexity arises from deep target vessels and the precision needed for needle placement. Traditional techniques, like the Seldinger method, demand expertise to avoid complications. Despite its importance, ultrasound-guided central access is underutilized due to limited field expertise. While autonomous needle insertion has been explored for peripheral vessels, only semi-autonomous methods exist for femoral access. This work advances toward full automation, integrating robotic ultrasound for minimally invasive emergency procedures. Our key contribution is the successful femoral vein and artery cannulation in a porcine hemorrhagic shock model.

* 2 pages, 2 figures, conference

Frame-Level Real-Time Assessment of Stroke Rehabilitation Exercises from Video-Level Labeled Data: Task-Specific vs. Foundation Models

Jun 04, 2025

Abstract:The growing demands of stroke rehabilitation have increased the need for solutions to support autonomous exercising. Virtual coaches can provide real-time exercise feedback from video data, helping patients improve motor function and stay engaged. However, training real-time motion analysis systems demands frame-level annotations, which are time-consuming and costly to obtain. In this work, we present a framework that learns to classify individual frames from video-level annotations for real-time assessment of compensatory motions in rehabilitation exercises. We use a gradient-based technique and a pseudo-label selection method to create frame-level pseudo-labels for training a frame-level classifier. We leverage pre-trained task-specific models (Action Transformer, SkateFormer) and a foundation model (MOMENT) for pseudo-label generation, aiming to improve generalization to new patients. To validate the approach, we use the SERE dataset with 18 post-stroke patients performing five rehabilitation exercises annotated on compensatory motions. MOMENT achieves better video-level assessment results (AUC = 73%), outperforming the baseline LSTM (AUC = 58%). The Action Transformer, with the Integrated Gradient technique, leads to better outcomes (AUC = 72%) for frame-level assessment, outperforming the baseline trained with ground truth frame-level labeling (AUC = 69%). We show that our proposed approach with pre-trained models enhances model generalization ability and facilitates the customization to new patients, reducing the demands of data labeling.

TimeSeriesGym: A Scalable Benchmark for (Time Series) Machine Learning Engineering Agents

May 19, 2025

Abstract:We introduce TimeSeriesGym, a scalable benchmarking framework for evaluating Artificial Intelligence (AI) agents on time series machine learning engineering challenges. Existing benchmarks lack scalability, focus narrowly on model building in well-defined settings, and evaluate only a limited set of research artifacts (e.g., CSV submission files). To make AI agent benchmarking more relevant to the practice of machine learning engineering, our framework scales along two critical dimensions. First, recognizing that effective ML engineering requires a range of diverse skills, TimeSeriesGym incorporates challenges from diverse sources spanning multiple domains and tasks. We design challenges to evaluate both isolated capabilities (including data handling, understanding research repositories, and code translation) and their combinations, and rather than addressing each challenge independently, we develop tools that support designing multiple challenges at scale. Second, we implement evaluation mechanisms for multiple research artifacts, including submission files, code, and models, using both precise numeric measures and more flexible LLM-based evaluation approaches. This dual strategy balances objective assessment with contextual judgment. Although our initial focus is on time series applications, our framework can be readily extended to other data modalities, broadly enhancing the comprehensiveness and practical utility of agentic AI evaluation. We open-source our benchmarking framework to facilitate future research on the ML engineering capabilities of AI agents.

Investigating Compositional Reasoning in Time Series Foundation Models

Feb 09, 2025

Abstract:Large pre-trained time series foundation models (TSFMs) have demonstrated promising zero-shot performance across a wide range of domains. However, a question remains: Do TSFMs succeed solely by memorizing training patterns, or do they possess the ability to reason? While reasoning is a topic of great interest in the study of Large Language Models (LLMs), it is undefined and largely unexplored in the context of TSFMs. In this work, inspired by language modeling literature, we formally define compositional reasoning in forecasting and distinguish it from in-distribution generalization. We evaluate the reasoning and generalization capabilities of 23 popular deep learning forecasting models on multiple synthetic and real-world datasets. Additionally, through controlled studies, we systematically examine which design choices in TSFMs contribute to improved reasoning abilities. Our study yields key insights into the impact of TSFM architecture design on compositional reasoning and generalization. We find that patch-based Transformers have the best reasoning performance, closely followed by residualized MLP-based architectures, which are 97% less computationally complex in terms of FLOPs and 86% smaller in terms of the number of trainable parameters. Interestingly, in some zero-shot out-of-distribution scenarios, these models can outperform moving average and exponential smoothing statistical baselines trained on in-distribution data. Only a few design choices, such as the tokenization method, had a significant (negative) impact on Transformer model performance.
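For readers unfamiliar with the statistical baselines named above, the following is a minimal sketch of one-step-ahead moving average and simple exponential smoothing forecasts. The function names, the `window` and `alpha` parameters, and the choice to initialize the smoothed level at the first observation are assumptions made here for illustration; the abstract does not specify how the baselines were configured.

```python
from typing import Sequence


def moving_average_forecast(series: Sequence[float], window: int) -> float:
    """Forecast the next value as the mean of the last `window` observations."""
    if window <= 0 or window > len(series):
        raise ValueError("window must be between 1 and len(series)")
    recent = series[-window:]
    return sum(recent) / window


def exponential_smoothing_forecast(series: Sequence[float], alpha: float) -> float:
    """Forecast the next value with simple exponential smoothing.

    The level starts at the first observation (an assumed initialization)
    and is updated as level = alpha * x + (1 - alpha) * level.
    """
    if len(series) == 0:
        raise ValueError("series must not be empty")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    for x in series[1:]:
        level = alpha * x + (1.0 - alpha) * level
    return level


if __name__ == "__main__":
    history = [1.0, 2.0, 3.0, 4.0]
    print(moving_average_forecast(history, window=2))
    print(exponential_smoothing_forecast(history, alpha=0.5))
```

Both baselines produce forecasts from recent history only, which is why a zero-shot model that beats them on out-of-distribution data is a notable result.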

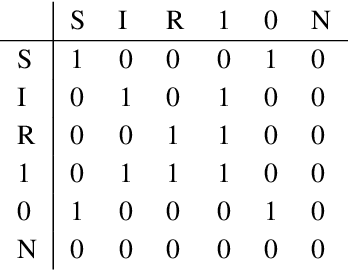

Multimodal Structure Preservation Learning

Oct 29, 2024

Abstract:When selecting data to build machine learning models in practical applications, factors such as availability, acquisition cost, and discriminatory power are crucial considerations. Different data modalities often capture unique aspects of the underlying phenomenon, making their utilities complementary. On the other hand, some sources of data host structural information that is key to their value. Hence, the utility of one data type can sometimes be enhanced by matching the structure of another. We propose Multimodal Structure Preservation Learning (MSPL) as a novel method of learning data representations that leverages the clustering structure provided by one data modality to enhance the utility of data from another modality. We demonstrate the effectiveness of MSPL in uncovering latent structures in synthetic time series data and recovering clusters from whole genome sequencing and antimicrobial resistance data using mass spectrometry data in support of epidemiology applications. The results show that MSPL can imbue the learned features with external structures and help reap the beneficial synergies occurring across disparate data modalities.

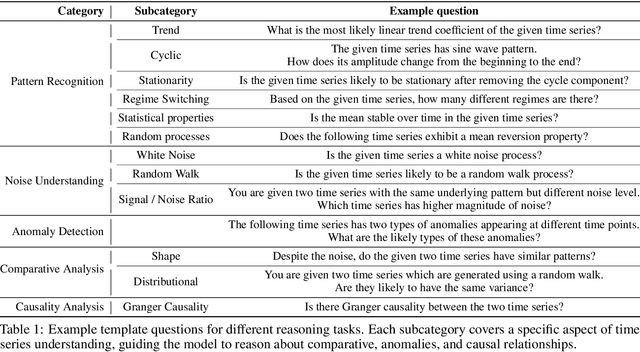

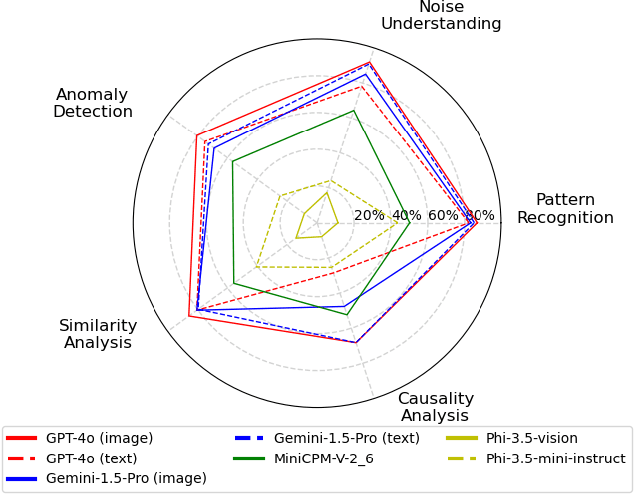

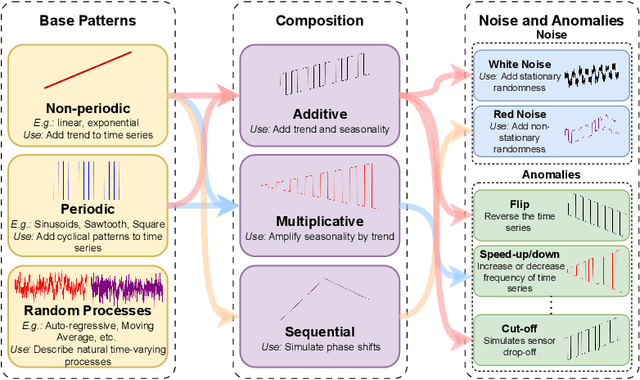

TimeSeriesExam: A time series understanding exam

Oct 18, 2024

Abstract:Large Language Models (LLMs) have recently demonstrated a remarkable ability to model time series data. These capabilities can be partly explained if LLMs understand basic time series concepts. However, our knowledge of what these models understand about time series data remains relatively limited. To address this gap, we introduce TimeSeriesExam, a configurable and scalable multiple-choice question exam designed to assess LLMs across five core time series understanding categories: pattern recognition, noise understanding, similarity analysis, anomaly detection, and causality analysis. TimeSeriesExam comprises over 700 questions, procedurally generated using 104 carefully curated templates and iteratively refined to balance difficulty and their ability to discriminate good from bad models. We test 7 state-of-the-art LLMs on the TimeSeriesExam and provide the first comprehensive evaluation of their time series understanding abilities. Our results suggest that closed-source models such as GPT-4 and Gemini understand simple time series concepts significantly better than their open-source counterparts, while all models struggle with complex concepts such as causality analysis. We believe that the ability to programmatically generate questions is fundamental to assessing and improving LLMs' ability to understand and reason about time series data.

Implicit Reasoning in Deep Time Series Forecasting

Sep 18, 2024

Abstract:Recently, time series foundation models have shown promising zero-shot forecasting performance on time series from a wide range of domains. However, it remains unclear whether their success stems from a true understanding of temporal dynamics or simply from memorizing the training data. While implicit reasoning in language models has been studied, similar evaluations for time series models have been largely unexplored. This work takes an initial step toward assessing the reasoning abilities of deep time series forecasting models. We find that certain linear, MLP-based, and patch-based Transformer models generalize effectively in systematically orchestrated out-of-distribution scenarios, suggesting underexplored reasoning capabilities beyond simple pattern memorization.

Bifurcation Identification for Ultrasound-driven Robotic Cannulation

Sep 10, 2024

Abstract:In trauma and critical care settings, rapid and precise intravascular access is key to patients' survival. Our research aims at ensuring this access, even when skilled medical personnel are not readily available. Vessel bifurcations are anatomical landmarks that can guide the safe placement of catheters or needles during medical procedures. Although ultrasound is advantageous in navigating anatomical landmarks in emergency scenarios due to its portability and safety, to our knowledge no existing algorithm can autonomously extract vessel bifurcations using ultrasound images. This is primarily due to the limited availability of ground truth data, in particular, data from live subjects, needed for training and validating reliable models. Researchers often resort to using data from anatomical phantoms or simulations. We introduce BIFURC, Bifurcation Identification for Ultrasound-driven Robot Cannulation, a novel algorithm that identifies vessel bifurcations and provides optimal needle insertion sites for an autonomous robotic cannulation system. BIFURC integrates expert knowledge with deep learning techniques to efficiently detect vessel bifurcations within the femoral region and can be trained on a limited amount of in-vivo data. We evaluated our algorithm using a medical phantom as well as real-world experiments involving live pigs. In all cases, BIFURC consistently identified bifurcation points and needle insertion locations in alignment with those identified by expert clinicians.
